Well if it's just the first version, don't worry too hard about the edge cases~

@rogloh said:

Sounds good too. Seems weird to put a lot of audio burden in the video COG but HDMI and audio is coupled somewhat closely together. The other way to go is to have some sort of pull model where the HDMI pulls out audio samples at its own rate from some small FIFO and the audio source needs to monitor this FIFO's fill/drain levels and feed it with samples. Might create more jitter this way though as it would need to adapt its output rate slightly to keep the level from draining/filling too quickly. Probably messy to manage the buffer.

No mess if the generator agrees on the overall sample rate (as with the mailbox system) or times itself to the FIFO. In such a system the video cog would also be responsible for the analog audio outputs, so it really becomes an audio+video cog. An interesting idea regarding this: If 32kHz is used, the 640/854/etc x 480 HDMI timing can be fudged (by creating a few extra VBlank lines) such that the line frequency is also 32kHz, matching the audio exactly. This would be super useful for doing multichannel, since otherwise you'd sometimes need to process and encode 2 packets per line.
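To put rough numbers on the 32kHz line-rate idea, here is a minimal sketch. It assumes the usual 525 total lines for 480p (not stated above) and an 800 pixel line total (640 active plus 16+96+48 blanking); the function names are made up for illustration.

```python
# Sketch only: refresh rate and pixel clock when the HDMI line rate is
# pinned to a 32 kHz audio sample rate (one sample per scan line).
AUDIO_RATE_HZ = 32_000


def frame_rate_hz(total_lines: int, line_rate_hz: int = AUDIO_RATE_HZ) -> float:
    """Refresh rate when each scan line lasts exactly 1/line_rate_hz seconds."""
    return line_rate_hz / total_lines


def pixel_clock_hz(total_pixels_per_line: int, line_rate_hz: int = AUDIO_RATE_HZ) -> int:
    """Pixel clock needed to emit one full line per audio sample period."""
    return total_pixels_per_line * line_rate_hz


# 525 is the assumed standard line total; 533 and 534 add extra VBlank lines.
for lines in (525, 533, 534):
    print(f"{lines} lines -> {frame_rate_hz(lines):.2f} Hz refresh")

# 800 = 640 active + 16 + 96 + 48 blanking pixels
print(f"pixel clock for 800-pixel lines: {pixel_clock_hz(800)} Hz")
```

Under these assumptions about eight or nine extra VBlank lines bring the refresh rate back to roughly 60Hz while the line rate stays at exactly 32kHz.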

In prep for HDMI audio I tightened up the original text processing loop a bit and split it up for polling audio, which was getting too slow when I tried to do it in the inner critical REP loop itself. There is a nice natural split position halfway through the output, as I already needed to deal with half the number of characters in double wide mode. So I now do either one iteration of the outer loop for double wide chars or two iterations for normal width, and I tidied up my skipf mask at the end of the loop in the process, saving a couple of instructions. This new code is working in both double and normal width text mode. New relevant snippet shown below.

I'm now working on something similar in the graphics processing/pixel doubling code which also needs to poll audio without bogging down too much. I believe I can shrink the number of outer loop iterations a lot by always maximizing the available LUT space for transfers using a few conditional altd instructions before some setq2's. May take a few more instructions which I didn't have room for before but should be hundreds less cycles overall, especially for 24bpp mode which is the troublesome one. Moving to something like 36x3=108 clocks of housekeeping overhead vs 28x16=448 before. This is a useful savings if achievable.

if_nz setd textloop, #18 '18 instructions executed for normal text

if_z setd textloop, #24 '24 instructions executed for double wide text

wrnz temp1 'double = 0, normal = 1

mov a, #%11000 wc 'reset starting lookup index

if_nz setbyte a, #%11101110, #2 'skip pixel doubled code when normal wide chars

textloop rep #0-0, #COLS/2 '1940 clocks for 40 double wide chars, 2920 for 80 chars

skipf a 'skip 2 of the next 5 instructions

xor a, #%11110 'flip skip sequence for next time

rdlut d, pb 'read pair of characters/colours

getword c, d, #1 'select first word in long (skipf)

getword c, d, #0 'select second word in long (skipf)

sub pb, #1 'decrement LUT read index (skipf)

getbyte b, c, #0 'extract font offset for char

shr b, #2 'divide by 4

add b, #$110 'add to font base

rdlut pixels, b 'determine font lookup address

movbyts pixels, c 'get font for character's scanline

testflash bitl c, #15 wcz 'test (and clear) flashing bit

flash if_c and pixels, #$ff 'make it all background if flashing

movbyts c, #%01010101 'colours becomes BF_BF_BF_BF

mov b, c 'grab a copy for muxing step next

rol b, #4 'b becomes FB_FB_FB_FB

setq ##$F0FF000F 'mux mask adjusts fg and bg colours

muxq c, b 'c becomes FF_FB_BF_BB

' wide normal

setword pixels, pixels, #1 ' * | replicate low words in long

mergew pixels ' * | ...to then double pixels

mov b, c ' * | save a copy before we lose colours

movbyts c, pixels ' * * compute 4 lower colours of char

ror pixels, #8 ' * | get upper 8 pixels

movbyts b, pixels ' * | compute 4 higher colours of char

wrlut b, ptrb-- ' * | save it to LUT RAM

wrlut c, ptrb-- ' * * save it to LUT RAM

call #audio_poll_repo

djnf temp1, #textloop

Just modified my pixel doubling code to take advantage of a reduced housekeeping loop size and fixed that 24bit mode critical inner loop and now call the audio poller a few times during this workload. I timestamped the 24bpp doubling work and other line maintenance during the active portion and it is now down to using up ~3750 clocks of the 6400 budget. This should now leave enough for the audio & TERC4 workload I'd hope.

Had a nightmare bug to figure out until I changed this

_ret_ pop ptra

back to this

pop ptra

ret

Getting rusty in my old age.

Other colour modes are busted right now with pixel doubling but they should require less cycles so I'm not too concerned about measuring those at this stage.

UPDATE: fixed some other depths of doubling:

16bpp uses ~3130

8bpp uses ~1600

4bpp uses ~1445

2bpp uses ~630

1bpp uses ~375
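For a quick view of how much of the active-line budget each depth leaves over, here is a small sketch using only the clock counts quoted in these posts (6400 clocks of budget, ~3750 for 24bpp, and the list above). The dictionary and helper names are illustrative.

```python
# Sketch: headroom left in the 6400-clock active-line budget per colour depth.
ACTIVE_BUDGET = 6400  # clocks available during the active portion of a line

# Approximate measured clocks for pixel doubling, as reported above.
MEASURED = {
    "24bpp": 3750,
    "16bpp": 3130,
    "8bpp": 1600,
    "4bpp": 1445,
    "2bpp": 630,
    "1bpp": 375,
}


def headroom(clocks_used: int, budget: int = ACTIVE_BUDGET) -> int:
    """Clocks left over for audio polling and TERC4 encoding."""
    return budget - clocks_used


for mode, used in MEASURED.items():
    pct = 100 * used / ACTIVE_BUDGET
    print(f"{mode}: {used} clocks ({pct:.0f}% of budget), {headroom(used)} left")
```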

UPDATE2: The trick now will be getting all the extra HDMI specific sync logic/streamer code to fit, replacing what I already do for DVI, and creating a suitable init sequence for the new stuff. Down to 43 free COGRAM longs.

@rogloh said:

Had a nightmare bug to figure out until I changed this

_ret_ pop ptra

back to this

pop ptra

ret

Getting rusty in my old age.

Yea, that one should really be added to the hardware errata list. Though I don't remember what kind of glitch this causes (is it just popping once and using it for both the RET and the POP?)

@Wuerfel_21 said:

An interesting idea regarding this: If 32kHz is used, the 640/854/etc x 480 HDMI timing can be fudged (by creating a few extra VBlank lines) such that the line frequency is also 32kHz, matching the audio exactly. This would be super useful for doing multichannel, since otherwise you'd sometimes need to process and encode 2 packets per line.

If line freq is 32kHz then exactly three 96kHz samples per line, presumably. Do most/all HDMI sinks support 96kHz?

P.S. I have acres of whitespace to scroll past whenever I edit one of my posts.

@rogloh said:

Had a nightmare bug to figure out until I changed this

_ret_ pop ptra

back to this

pop ptra

ret

Getting rusty in my old age.

Yea, that one should really be added to the hardware errata list. Though I don't remember what kind of glitch this causes (is it just popping once and using it for both the RET and the POP?)

That's one way of putting it. Though a relative LOC instruction does the same thing.

@Wuerfel_21 said:

An interesting idea regarding this: If 32kHz is used, the 640/854/etc x 480 HDMI timing can be fudged (by creating a few extra VBlank lines) such that the line frequency is also 32kHz, matching the audio exactly. This would be super useful for doing multichannel, since otherwise you'd sometimes need to process and encode 2 packets per line.

If line freq is 32kHz then exactly three 96kHz samples per line, presumably. Do most/all HDMI sinks support 96kHz?

I don't think so. The only required formats are 32/44.1/48kHz stereo. AVRs probably all take 96, TVs maybe, PC monitors probably not (my ASUS PA248QV doesn't even say anything about audio in the spec sheet other than "Speaker: Yes").

Though for stereo 96kHz the line rate matching is not so much of an issue, since you can fit up to 4 samples in one packet for stereo. For multichannel you can only fit 1 (there's always 8 channels even if you're, e.g. only doing 4.0 or 5.1)
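The packet-count argument can be sketched as follows. This assumes the sample clock is locked to the line clock, so the worst case per line is simply the rounded-up ratio; 31.5kHz is the line rate of standard 640x480 timing (25.2MHz / 800 pixels). Function names are illustrative.

```python
# Sketch: worst-case audio sample packets needed per scan line.
STEREO_SAMPLES_PER_PACKET = 4        # up to 4 stereo samples fit in one packet
MULTICHANNEL_SAMPLES_PER_PACKET = 1  # 8-channel layout: one sample per packet


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division (avoids float rounding)."""
    return -(-a // b)


def max_samples_per_line(sample_rate_hz: int, line_rate_hz: int) -> int:
    """Most samples that can fall due within a single scan line."""
    return ceil_div(sample_rate_hz, line_rate_hz)


def packets_per_line(sample_rate_hz: int, line_rate_hz: int, samples_per_packet: int) -> int:
    """Worst-case number of audio packets to encode on one line."""
    return ceil_div(max_samples_per_line(sample_rate_hz, line_rate_hz), samples_per_packet)


print(packets_per_line(48_000, 31_500, MULTICHANNEL_SAMPLES_PER_PACKET))  # standard timing
print(packets_per_line(32_000, 32_000, MULTICHANNEL_SAMPLES_PER_PACKET))  # matched line rate
print(packets_per_line(96_000, 32_000, STEREO_SAMPLES_PER_PACKET))        # 3 samples, stereo
```

With the line rate matched to 32kHz audio, multichannel never needs more than one packet per line, which is the point made above.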

Side note that's somewhat on-topic: The HDMI/CEA spec for surround channel mapping is apparently kinda broken?

So:

5.1 is an inherently compromised layout. The surround speakers are more to the side than to the rear (+/- 110° from center per ITU spec). This makes sense, most people have their sofa up against a wall and physically can't have proper rear speakers. This does mean there's a gaping 140° hole in the back and the front/rear speakers aren't symmetrical, but that's a fair compromise.

This means there are two variations on "4.0 surround": The classic "quadro" square layout and the 30°/110° 5.1 layout minus the center speaker. These are not equivalent. (The square layout is mathematically superior, but not as practical to actually set up)

(tangent: 7.1 has no formal spec! Though 135° azimuths are an ok guess? 150° is ideal to be opposite from the front L/Rs - something something energy vectors)

HDMI InfoFrames are based on CEA-861 (also probably used by DisplayPort audio?)

old versions of CEA-861 seem to ignore the above facts and feature this mindboggling graphic

(in that, FL+FR+RL+RR is 4.0 (code 0x08), FC is added for 5.0 (code 0x0A) and RLC/RRC are added for 7.0 (code 0x10) - has anyone ever needed discrete FLC/FRC channels? - note that the ITU spec azimuth for FL/FR is only +/-30° out from center!!)

in later versions of CEA-861, they realize the error of their ways and updated the figure and the channel names (though they missed some in the tables! Terrible find-and-replace job!)

LS/RS are now explicitly the 5.1 +/-110° surrounds. This includes the 4.0 layout (code 0x08).

This means there's no standard CEA/HDMI channel layout that isn't weirdly lopsided! Which, considering that more modern systems really treat the discrete channels more as suggestions than something to directly send to a speaker... is no good for making these suggestions.

Even in Dolby Atmos proprietary lala-land, which is supposedly speaker independent, there are still 5.1-derived "bed" channels! AAAAAAA! This is where anything that isn't a 3D object goes. The bed channels can be 7.1.4 or something like that, which is less bad, but urrrgh why~ (another fun Atmos fact: almost no receiver with the extra amp channels can take them as discrete analog or PCM input channels! 7.1's the limit! There is software for mastering Atmos, but guess what, the master bitstream format they output also isn't compatible with actual Atmos AVRs (you are supposed to use a "dumb" 12 channel interface for monitoring)... The conversion to the consumer bitstream (still apparently some iteration on ye-olde AC3) is apparently "handled by the distribution service". ??????? What distribution service? What if you just want to post a file to a normal-donkey website????)

This entire headache had already been solved by Ambisonics and related research in the 70s before any of this happened - you don't record speaker channels, you record the 3-dimensional soundfield, approximated by n-th order spherical harmonics and then the hardware at the very end turns it into speaker channels - and guess what format is predominantly used for 360° VR videos...

Sorry for the unprompted rant, but if an idiot like me who's read a few papers and specs can spot something obviously amiss...

Running into a potential issue coding up stuff for HDMI data island packet transfers. Can the streamer handle two back to back commands using the HUB RAM from different source addresses without introducing a gap?

I'd like to do something like the following snippet...

rdfast #0, ptrb ' setup FIFO source address for fifo data, to pre-fill it

...

xcont xhub32, #0 ' send 32 longs from hub

rdfast #0, next ' setup next source address for fifo data

xcont xhub32, #0 ' send another 32 longs from hub from second address

...

xhub32 long X_RFLONG_32P_4DAC8 | 32

My question is, will the second rdfast mess up the first? Will it corrupt the streaming in progress? I don't want to wait for the FIFO to empty before setting up the next transfer, as is done by setting bit 31 in the D field, because that would resync me to the point when the first transfer is complete, and I have other things I wish to do in this time. I basically want to issue both streamer instructions close together and essentially buy myself ~640 clocks for other work I can go do in this time.

I'm pretty much guessing this approach above isn't going to be possible given the FIFO prefill time.

Normally FBLOCK can be used to setup the next address in advance when doing multiple FIFO transfers with wrapping etc but this seems to be for 64 byte block transfers and I'm sending 32 longs. Perhaps if I align the data it still could be possible to use somehow? Will FBLOCK also work with the streamer?

In theory I could send 64 longs in one streamer command, but the second packet data (clock regen) is pre-encoded (static) which introduces complexities during VSYNC where it needs to be encoded differently.

Yes, this is what FBLOCK is explicitly for. It works on 64 byte blocks, so 32 longs means you need D=2. For smooth changeover, all your buffers must be whole blocks apart and long-aligned. I don't even think you need the 2nd streamer instruction, you can just do one 64 long command.
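The block arithmetic here is easy to sanity check. Below is a minimal sketch of the D value and the buffer spacing rule as described in this post; the example addresses are hypothetical.

```python
# Sketch: FBLOCK block count and buffer placement check, per the rules above.
BLOCK_BYTES = 64  # FBLOCK works in 64-byte blocks
LONG_BYTES = 4


def fblock_count(longs: int) -> int:
    """Number of 64-byte blocks (the D value) covering a transfer of `longs` longs."""
    nbytes = longs * LONG_BYTES
    if nbytes % BLOCK_BYTES:
        raise ValueError("transfer is not a whole number of 64-byte blocks")
    return nbytes // BLOCK_BYTES


def buffers_compatible(addr_a: int, addr_b: int) -> bool:
    """True if both buffers are long-aligned and a whole number of blocks apart."""
    long_aligned = addr_a % LONG_BYTES == 0 and addr_b % LONG_BYTES == 0
    whole_blocks_apart = (addr_b - addr_a) % BLOCK_BYTES == 0
    return long_aligned and whole_blocks_apart


print(fblock_count(32))                    # one 32-long packet
print(buffers_compatible(0x1000, 0x1080))  # hypothetical addresses, 128 bytes apart
print(buffers_compatible(0x1000, 0x1020))  # only 32 bytes apart
```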

@Wuerfel_21 said:

Yes, this is what FBLOCK is explicitly for. It works on 64 byte blocks, so 32 longs means you need D=2. For smooth changeover, all your buffers must be whole blocks apart and long-aligned. I don't even think you need the 2nd streamer instruction, you can just do one 64 long command.

Um yeah it's worth an attempt. I hope it works correctly in conjunction with the streamer as I've only used it before without the streamer.

The issue with coupling is the VSYNC encode version of the clock regen packet that immediately follows audio is different. I guess I can encode the audio packet to a different address on the scanline before vsync starts and do that until the second last line of vsync - just messy to check all the different scan lines for different work. Also in some cases I don't send a clock regen packet and would prefer to send a NULL pkt instead.

I might have just managed to get some sort of HDMI implementation to barely fit inside my P2 video driver. Worked on the tricky sync code today and found I could use skipf to an advantage here. I have 1 COGRAM long left right now but I think I can potentially free about 4 more when I get desperate. In addition I have kept the VGA's VSYNC pin toggle code in place (5 pin mode) done at VSYNC time so maybe there is hope for VGA+HDMI simultaneously depending on what else is required there in the runtime code, if anything. This code compiles but is untested and probably has bugs.

Still need to add into the small amount of spare LUTRAM a couple more things to complete this to my satisfaction for testing:

1) audio volume control (mostly just a couple of multiplies - hopefully 16 bit only)

2) chain loading some more code after the TERC4 packet encoding stage which will do some audio polling to the end of the scan line. I anticipate that I'll need to latch a timestamp at the start of the scan line so the code knows when to stop before the end of the scan line and return in time for the next hsync to be prepared. Right now the polling code that would run after everything on the line has been done, while the COG is otherwise sitting idle, probably won't fit the space.

For now I will probably need to align the horizontal sync to the start of the island data and keep it within the first 64 pixels once the data island begins. It's tricky to have it change anywhere in the middle of the back porch following the island as this adds more streamer commands and COGRAM storage.

In theory this code has the ability to send out two InfoFrames on the last VSYNC scan line (or whatever packets are encoded by the client into HUB RAM). It's slightly messy because the driver could decide to send either a null packet or a clock regeneration packet prior to these two InfoFrames, so the setup code would have to copy this extra packet data into two HUB locations to have the packets ready and stored contiguously for both packet send options, but this should be doable.

Later on if interlacing support is added (480i/576i) then all pixels will have to be doubled by default, and even quadrupled if the resolution drops to 320 or 360 pixels via my horizontal double wide option flag configured per region. This will take up more code space, but my plan for that is to move the doubling/quadrupling code into LUTRAM overlays, which can then be read in to either double or quadruple pixels as needed. Doing this also frees some extra COGRAM space, ~32 longs IIRC, which can be helpful for also changing the text mode implementation to optionally pixel quadruple, as well as any extra vsync changes for interlaced timing.

'--------------------------------------------------------------------------------------------------

' Subroutines

'--------------------------------------------------------------------------------------------------

' active vsync blank

blank_vsync skipf skipfmask_vsync '

cmp pa, #1 wz ' * z=1 if pa=1 (last sync line)

blank skipf skipfmask_vblank ' | *

hsync skipf skipfmask_active ' | |

xzero m_hfp, hsync0 ' * * * send shortened fp before preamble

cmpsub hdmi_regen_counter, hdmi_regen_period wc

' * * * check if time to send regen pkt (c=1)

xcont m_imm8, preamble ' * | * send preamble before island

xcont m_imm8, preamble_vsync ' | * | send preamble before island (vsync line)

rdfast #2, audio_pktbuf ' * | * prepare encoded audio pkt

wrlong status, statusaddr ' * * * update clients with scan status

if_c fblock #0, aclk_pktbuf ' * | * send aclk pkt (normal encode)

if_nc fblock #0, null_pktbuf ' * | * send null when no regen packet ready

if_c fblock #0, aclkv_pktbuf ' | * | send aclk pkt (vsync encoded)

if_nc fblock #0, nullv_pktbuf ' | * | send null when no regen packet ready

xcont m_imm2, dataguard ' * | * send leading guard band

xcont m_imm2, dataguard_vsync ' | * | send leading guard band (vsync)

alt0 xcont m_hubpkt, #0 ' * * * send two packets

if_z xcont m_hubpkt, #0 ' | * | send two more packets

setq2 #256-1 ' * | | read large palette for this scan line

rdlong 0, paletteaddr ' * | |

xcont m_imm2, dataguard ' * | * trailing guard band

_ret_ xcont m_hbp, hsync0 ' * | | send shortened back porch before preamble+gb

xcont m_imm2, dataguard_vsync ' * | trailing guard band sync encoded

xcont m_hbp2, hsync0 ' * * only control, no pre+gb for blank/sync lines

if_z altd alt0, #m_vi2 ' * | shrink vsync vis line for extra 64 pixel pkts

xcont m_vi, hsync0 ' * * generate blank line pixels

call #\loadencoder ' * * encode next audio pkt & poll audio

_ret_ djnz pa, #blank_vsync ' * | repeat

_ret_ djnz pa, #blank ' * repeat

skipfmask_vsync long %111100010011010100110 'check this mask

skipfmask_vblank long %10010110111010110000100010 'check this mask

skipfmask_active long %0000000010101100001000

'hub RAM addresses of packet buffers stored here

audio_pktbuf long 0

aclk_pktbuf long 0

aclkv_pktbuf long 0

null_pktbuf long 0

nullv_pktbuf long 0

avi_pktbuf long 0 ' dual pkt buf here audio+video infoframes, always sent on last vsync line

m_hubpkt long X_RFLONG_32P_4DAC8 | X_PINS_ON | 64

m_imm8 long X_IMM_1X32_4DAC8 | X_PINS_ON | 8

m_imm2 long X_IMM_1X32_4DAC8 | X_PINS_ON | 2

preamble long %0010101011_0010101011_1101010100_10 'preamble pattern when vsync is inactive (assumes hsync inactive)

preamble_vsync long %0010101011_0010101011_0101010100_10 'preamble pattern when vsync is active (assumes hsync inactive)

dataguard long %0100110011_0100110011_1010001110_10

dataguard_vsync long %0100110011_0100110011_0101100011_10

video_guard long %1011001100_0100110011_1011001100_10

video_preamble long %1101010100_0010101011_1101010100_10

As previously discovered, the preamble doesn't have to be exactly 8 pixels, it just needs to be at least 8 pixels. So you can remove some complexity by turning the entire front porch into a preamble. (This checks out with the conformance document I found)

@evanh said:

I just stuck to plain stereo. 5.1 and the likes always seemed a mess. The subs always seemed to pump out a monotonous throb and nothing else.

I mean, it is a mess. Though if the sub sounds like that, the level/crossover is probably set weirdly (possibly because someone didn't understand that "small speaker" in the settings means "really small speaker" and any real speaker (yes, even if bought at ALDI) should be set to "large", and that leads to setting the crossover so low that the sub only gets LFE and very low sub-bass). Of course nothing stops one from getting properly big speakers (as one would use for a good stereo setup), hooking up 4 or 5 of those and just not having a sub at all. I actually really like the ProLogic style phase-amplitude matrix systems for music: they nicely spread the sound all around the room, a lot more like listening on headphones than on normal stereo speakers.

@Wuerfel_21 said:

As previously discovered, the preamble doesn't have to be exactly 8 pixels, it just needs to be at least 8 pixels. So you can remove some complexity by turning the entire front porch into a preamble. (This checks out with the conformance document I found)

Ok, I just wasn't sure about doing that. I do plan to make the first bit of the data packet align with the hsync, so the preamble would certainly remain part of the front porch.

I had another thought about how to tidy things up a bit. If I keep one buffer area in HUB RAM with the following sequence of contiguous 32 pixel packets it should save longs as I can compute 128 byte offsets to select different output packets. No need to keep lots of addresses in COGRAM and I can just have a single InfoFrame update too which is simpler. I would just need to send the Null or Clock regen packet as the first of the pair (reverse the order). Am going to look into this. It may improve things quite a bit.

Static Null packet (normal)

Static Clock regen packet(normal)

Static Null packet (vsync)

Static Clock regen packet (vsync)

Audio encoded packet (either vsync or normal encoded by software)

InfoFrame packet 1 (only ever sent on vsync line, contents can vary with client COG's changes)

InfoFrame packet 2 (ditto)

(etc...)
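The offset selection implied by this layout can be sketched like this, assuming each 32 pixel packet occupies 32 longs (128 bytes) and the packets are stored in exactly the order listed. The constant and function names are illustrative, not from the driver.

```python
# Sketch: byte offsets into one contiguous HUB packet buffer, in the order listed above.
PACKET_BYTES = 128  # 32 longs per 32-pixel packet

# Fixed slots that follow the four static packets.
AUDIO_OFFSET = 4 * PACKET_BYTES
INFOFRAME1_OFFSET = 5 * PACKET_BYTES
INFOFRAME2_OFFSET = 6 * PACKET_BYTES


def first_packet_offset(vsync_line: bool, send_clock_regen: bool) -> int:
    """Offset of the static packet (null or clock regen) sent first on a line."""
    offset = 0
    if vsync_line:
        offset += 2 * PACKET_BYTES  # skip the two normally encoded static packets
    if send_clock_regen:
        offset += PACKET_BYTES      # clock regen follows null in each pair
    return offset


for vsync in (False, True):
    for regen in (False, True):
        print(f"vsync={vsync} regen={regen} -> +{first_packet_offset(vsync, regen)}")
print(f"audio packet at +{AUDIO_OFFSET}")
```

Two conditional adds on a base address replace four separate address longs, which is where the COGRAM saving comes from.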

Update: now I have 5 free COGRAM longs after this change. Here's the updated code:

' active vsync blank

blank_vsync cmp pa, #1 wz ' z=1 if pa=1 (last sync line)

skipf skipfmask_vsync ' do vsync line

blank skipf skipfmask_vblank ' | do other blanking line

hsync skipf skipfmask_active ' | | do active line

xzero m_hfp, hsync0 ' * * * send shortened fp before preamble

cmpsub hdmi_regen_counter, hdmi_regen_period wc

' * * * c=1 if time to send a clock regen pkt

mov pb, pktbuf ' * * * setup base packet buffer address

add pb, #256 ' | * | offset to send in vsync line

if_c add pb, #128 ' * * * offset to send clock regen pkt (c=1)

xcont m_imm8, preamble ' * | * send preamble before island

xcont m_imm8, preamble_vsync ' | * | send preamble before island (vsync line)

rdfast #2, pb ' * * *

wrlong status, statusaddr ' * * * update clients with scan status

fblock #0, pktbuf_audio ' * * * setup next packet start address

xcont m_imm2, dataguard ' * | * send leading guard band

xcont m_imm2, dataguard_vsync ' | * | send leading guard band (vsync)

alt0 xcont m_hubpkt, #0 ' * * * send two packets

if_z xcont m_hubpkt, #0 ' | * | send two more packets

setq2 #256-1 ' * | | read large palette for this scan line

rdlong 0, paletteaddr ' * | |

xcont m_imm2, dataguard ' * | * trailing guard band

_ret_ xcont m_hbp, hsync0 ' * | | send shortened back porch before preamble+gb

xcont m_imm2, dataguard_vsync ' * | trailing guard band sync encoded

xcont m_hbp2, hsync0 ' * * only control, no pre+gb for blank/sync lines

if_z altd alt0, #m_vi2 ' * | shrink vsync vis line for extra 64 pixel pkts

xcont m_vi, hsync0 ' * * generate blank line pixels

call #\loadencoder ' * * encode next audio pkt & poll audio

_ret_ djnz pa, #blank_vsync ' * | repeat

_ret_ djnz pa, #blank ' * repeat

'hub RAM address of packet buffers stored here contiguously in this sequence. Must be aligned to 64 byte boundary.

' null

' audio clock regen

' null (vsync)

' audio clock regen (vsync)

' audio packet sample (both)

' avi infoframe(s) (only vsync encoded)

pktbuf long 0

pktbuf_audio long 0

Almost there with the code for HDMI but still cranking on packet setup during init and sync polarity stuff. That gets messy to deal with and is prone to error.

Confidence is high right now that this should fit both P2 timing wise and COG/LUT RAM wise. Something should work at least once bugs are sorted.

I'm going to load the 256 entry palette and mouse sprite code into LUT RAM during HSYNC time when 2 packets are sent out (64 pixels = 640 P2 clocks). Then during the horizontal back porch I can run the mouse sprite code, which takes about 480 clocks to execute. Running it this late should give the external memory access issued on the previous scan line plenty of time to complete, so the returned pixel data is ready to have the sprite overlaid on it. Essentially the back porch will need to be set up with at least 48 pixels in order to have time to execute this sprite code before the video guard is sent.

This seems reasonable and normal 640x480 mode at 25.2MHz uses the following pixel timing for blanking:

HFP=16, HSYNC=96, HBP=48. We can move the extra 32 pixels from HSYNC into BP to ensure we have sufficient time for a mouse sprite to be rendered. My setup code can probably just reallocate any excess HSYNC size that exceeds 64 pixels into the HBP. This simplifies the multi-packet encoding.

27MHz HDMI (widescreen 720x480) typically uses different timing like this:

HFP=16, HSYNC=62 HBP=60. This timing can be modified to send 16, 64 and 58 pixels instead which should still be sufficient for a mouse sprite. Any time HSYNC is configured with less than 64 pixels, the missing amount can simply be stolen from the HBP.
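The reallocation rule described here can be sketched as below: pin the sync/island portion to 64 pixels and move the difference into (or out of) the back porch, then check that at least 48 pixels remain for the sprite code. Function and constant names are illustrative.

```python
# Sketch: pin HSYNC to 64 pixels (two 32-pixel packets) and rebalance the back porch.
ISLAND_PIXELS = 64  # two data island packets sent during HSYNC
MIN_HBP = 48        # ~480 clocks needed for the mouse sprite code


def adjust_blanking(hfp: int, hsync: int, hbp: int) -> tuple[int, int, int]:
    """Return (hfp, hsync, hbp) with hsync forced to 64 and the total unchanged."""
    new_hbp = hbp + (hsync - ISLAND_PIXELS)  # excess goes to HBP, shortfall comes from it
    if new_hbp < MIN_HBP:
        raise ValueError("back porch too short for the sprite code")
    return hfp, ISLAND_PIXELS, new_hbp


print(adjust_blanking(16, 96, 48))  # 640x480 @ 25.2MHz
print(adjust_blanking(16, 62, 60))  # 720x480 @ 27MHz
```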

The HFP needs to be at least 8+2 pixels to send a preamble and data guard band, but my code (currently) also expects a non-zero control portion of around 2 pixels (16 clocks). This time is useful for figuring out the packets to go out and for preloading the FIFO for streaming these packets. So realistically the HFP should be reduced to no less than 12 pixels for the code to work, though the default of 16 is fine, and I think it needs at least 14 in order to sync correctly anyway.

This means that blanking can't really be reduced further than about 14+64+48 or so, and using 128 total horizontal blanking pixels is probably safer. You could probably then achieve (1360+128)x(768+3?)x30fps on a P2 @ 344MHz on some low refresh rate display device that can accept the absolute minimum of blanking. Higher resolutions = lower frame rates. 1280x720p24 is probably achievable at ~254MHz with 750 total vertical lines being sent and the reduced horizontal blanking, although that is an uncommon display resolution and refresh combination. Even @pik33's special favourite 1024x576p resolution could potentially be done by sending out 1152x625x50Hz at a blistering P2 speed of 360MHz, if a display accepts this timing.
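The clock figures quoted here follow from 10 P2 clocks per pixel (64 pixels = 640 clocks, as noted earlier). A minimal sketch of the arithmetic, keeping the tentative 3 extra vertical lines for the 1360x768 case as an assumption:

```python
# Sketch: P2 clock needed for a video mode at 10 system clocks per pixel.
CLOCKS_PER_PIXEL = 10
H_BLANK = 128  # reduced total horizontal blanking suggested above


def p2_clock_hz(h_active: int, v_total: int, fps: int, h_blank: int = H_BLANK) -> int:
    """System clock = total pixels per line * total lines * frame rate * 10."""
    return (h_active + h_blank) * v_total * fps * CLOCKS_PER_PIXEL


modes = {
    "1360x768p30": (1360, 768 + 3, 30),  # "+3" vertical blanking lines is a guess
    "1280x720p24": (1280, 750, 24),
    "1024x576p50": (1024, 625, 50),
}
for name, (h_active, v_total, fps) in modes.items():
    print(f"{name}: {p2_clock_hz(h_active, v_total, fps) / 1e6:.1f} MHz")
```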

Separately to all this, I was thinking about an alternative way to pass in audio sample data if a spare pin can't be used as a repo. If we use the repo pin idea with the HDMI pins we have to give up the bitDAC IO on the pins and probably drive the TMDS data at voltage levels outside the spec (which typically works but I don't like it that much). The alternative way could just use a single HUB memory location instead of a more complicated FIFO in order to keep the resource use low and an ATN could be signalled to interested COGs.

Right now my driver doesn't really make good use of ATN so an audio COG could potentially write stereo 16 bit data into a known memory address and then issue a COGATN. The polling code could be altered from this code...

audio_poll_repo

cmp audio_inbuf_level,#4 wc

.pptchd if_c testp #0-0 wc ' TESTP is a D-only instr

if_c altd audio_inbuf_level,.inbuf_incr

.pptchs if_c rdpin 0-0,#0-0

ret wcz

.inbuf_incr long audio_inbuffer + (1<<9)

to the following code below. It just requires one more COG long to hold the HUBRAM audio sample address and some suitable setup amongst COGs to share this common address. Not quite as easy as the Repo pin method, but still probably very simple to achieve.

UPDATE: I think it's now fully coded unless I've missed something. There will be bugs no doubt but I should be able to start checking/testing very soon.

Current storage:

COGRAM - 498 used of 502 total longs

HDMI encoding overlay - 240 of 240 LUT RAM entries used, though this could be split into two different parts eventually, audio + encode

Driver setup overlay - 271 of 272 LUT RAM entries used, any more than 272 prevents the loading of the HDMI overlay during init time for packet encoding, though this part could be moved to HUBexec if required.

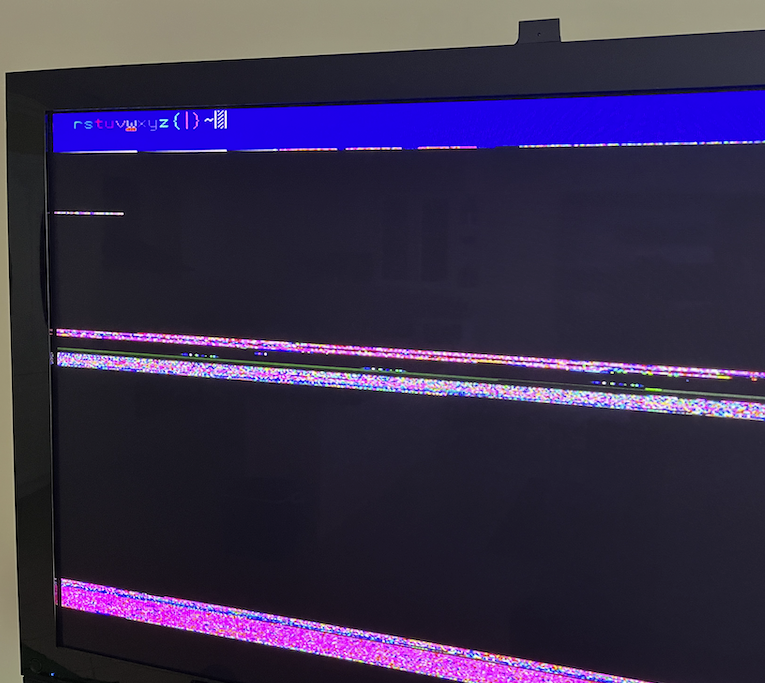

It's alive! Well HDMI framed video anyway with my "Chimera" test driver (a hybridised meld of @Wuerfel_21's audio +TERC encoder and my P2 video driver). Audio packets are being encoded yet apparently not being produced but that should be figured out soon.

Had 4 real bugs to resolve so far to get to this point. All I can say is that the P2 DEBUG stuff and my TMDS decoder program saved the day, and it only took me a few hours instead of potentially days to sort this lot out.

1) had placed an extra push operation after a call, messing up the call stack on return and crashing badly

2) inadvertent cut/paste of an instruction in between a setq2 and wrlong, resulting in only a single LUT write instead of a full packet burst

3) inadvertent cut/paste of another instruction in between an alts and muxq during code rearranging, messing up the data guard symbol during sync

4) back porch size calculation error, off by 2 pixels on some lines

Once those were sorted it now seems to be stable and I can now work with this.

In other testing last night I discovered the worst case for a 640x480 resolution output was about 7104 clocks to execute the scan line, measured from the start of horizontal blanking in 24 bit pixel doubling mode, including audio/TERC4 encoding, up to the final audio poller sample operation before returning and starting the next line. I will probably also need to add another 134 clocks or so to this if the region changes on the scan line, and it might need a couple of hundred more cycles per extra audio sample included in the audio packets. TBD. But even with those it should still fit the budget of 8000 clocks (6400 active, 1600 blanking). I should know soon.
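A rough check of that budget, treating "a couple of hundred" as exactly 200 clocks per extra audio sample (an assumption) and using 800 total pixels per line at 10 clocks each:

```python
# Sketch: worst-case scan line cost against the 8000-clock line budget.
CLOCKS_PER_PIXEL = 10
MEASURED_WORST_CASE = 7104  # 24bpp pixel doubling incl. audio/TERC4 encoding
REGION_CHANGE_COST = 134    # extra clocks if the region changes on the line
EXTRA_SAMPLE_COST = 200     # ASSUMPTION: stand-in for "a couple of hundred"


def line_budget(total_pixels: int) -> int:
    """Clocks available per scan line."""
    return total_pixels * CLOCKS_PER_PIXEL


def worst_case_clocks(extra_samples: int, region_change: bool = True) -> int:
    """Estimated worst-case clocks for one scan line."""
    clocks = MEASURED_WORST_CASE + extra_samples * EXTRA_SAMPLE_COST
    if region_change:
        clocks += REGION_CHANGE_COST
    return clocks


budget = line_budget(640 + 160)  # 6400 active + 1600 blanking
for extra in range(3):
    used = worst_case_clocks(extra)
    print(f"{extra} extra samples: {used} clocks, {budget - used} spare")
```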

Hi @Wuerfel_21 I'm having some problems with your resampling code which I took from your video-nextgen branch on GitHub.

If I try to get it to lock the output at 48000Hz by setting up the audio_period with that rate in mind computed as (clkfreq+24000)/48000, I get zero for the integer and fractional portions of resample_ratio and no samples are emitted because the resample_phase_int is stuck at 0 and never increases.
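For reference, the `(clkfreq+24000)/48000` expression is a round-to-nearest integer division. A small sketch of what it yields; the clock frequencies are example values (252MHz corresponds to 25.2MHz x 10), not necessarily what was used in the test:

```python
# Sketch: round-to-nearest sample period in system clocks.
def rounded_period(clkfreq_hz: int, sample_rate_hz: int) -> int:
    """Integer clocks per sample, i.e. (clkfreq + rate/2) / rate truncated."""
    return (clkfreq_hz + sample_rate_hz // 2) // sample_rate_hz


def effective_rate_hz(clkfreq_hz: int, period_clocks: int) -> float:
    """Sample rate actually produced by an integer period."""
    return clkfreq_hz / period_clocks


for clkfreq in (252_000_000, 250_000_000, 344_000_000):  # example clocks (assumed)
    period = rounded_period(clkfreq, 48_000)
    print(f"{clkfreq} Hz: period {period}, effective {effective_rate_hz(clkfreq, period):.1f} Hz")
```

When the clock is not an exact multiple of 48000 the integer period gives a slightly different effective rate, which is presumably what the fractional part of the resampler ratio is meant to absorb.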

If I manually patch the resample_ratio_int value to a "1" by default at boot I do get samples emitted but it's arriving at 24000Hz (half rate). If I set it to 2, I then get 1/3 the samples per second. Maybe I should set it to some other value... ideally it should be computed but it's not touched when the sample period is found to be one of the known 32/44.1/48kHz rates.

I also tried a different, non-standard sample rate but it doesn't seem to output samples at the correct rate either; I got about 38kHz or something like that IIRC (approx half of what I'd nominated, but I can't recall the exact number). I will need to retest that specifically tonight and update this. I might have used a rate that is higher than 48kHz, not lower.

So is this branch's audio code currently working or broken to your knowledge? I hope I haven't missed something important but I believe I now have the same algorithm coded as yours - virtually copied verbatim but with a couple of minor custom changes - your patch skipping now has a variable controlling it as it executes for LUTRAM as an overlay and I can't patch it as easily. Here are the relevant snippets of interest...

mov temp3,#0

.sploop

tjz resample_phase_int,#.dosp

.feed

tjz audio_inbuf_level,#.spdone

' debug(UHEX_LONG(audio_inbuffer))

mov audio_hist+0,audio_hist+1

mov audio_hist+1,audio_hist+2

mov audio_hist+2,audio_hist+3

mov audio_hist+3,audio_inbuffer+0

mov audio_inbuffer+0,audio_inbuffer+1

mov audio_inbuffer+1,audio_inbuffer+2

mov audio_inbuffer+2,audio_inbuffer+3

sub audio_inbuf_level,#1

getword spleft, audio_hist+3, #1 ' get left sample

muls spleft, lvol ' attenuated left sample

sar spleft, #15 ' convert left sample to 16 lsbs

muls audio_hist+3, rvol ' attenuate right sample

sar audio_hist+3, #15 ' convert right sample to 16 lsbs

setword audio_hist+3, spleft, #1 ' combine both samples

' debug(UHEX_LONG(audio_hist))

djnz resample_phase_int,#.feed ' always fall through if ratio < 2

.dosp ' Create an output sample using cubic resampling

'debug(UHEX_LONG(spleft))

'debug(UHEX_LONG(spright))

.... <skipped>

decmod resample_antidrift_phase,resample_antidrift_period wc

if_c mov resample_phase_frac,#0

if_nc add resample_phase_frac,resample_ratio_frac wc

addx resample_phase_int,resample_ratio_int

' Set sample as valid in header

.... <skipped>

add hdmi_regen_counter,#1

incmod temp3,#2-1 wc ' This limits it to encoding 2 samples per scanline, which ought to be enough

' Hacky check to fix sampling jitter:

' Packets where frac->int overflow occurs are only allowed in first packet slot

' This is probably bad and a better way should be found.

mov temp1,resample_phase_frac

tjz patch_enabled, #.skippatch

.jptch1 if_nc add temp1,resample_ratio_frac wc ' Check if next frac add or

.jptch2 if_nc cmp resample_antidrift_phase,#1 wc ' antidrift overflows

.skippatch

if_nc jmp #.sploop

.spdone

' fall straight into packet encoder

And here's the setup code...I have zeroed all the values at startup.

' audio-related, all needs zero-ing before use

audio_hist long 0[4]

audio_inbuffer long 0[4]

audio_inbuf_level long 0

resample_ratio_frac long 0

resample_ratio_int long 0

resample_antidrift_period long 0

resample_phase_frac long 0

resample_phase_int long 0

resample_antidrift_phase long 0

spdif_phase long 0

hdmi_regen_counter long 0

... <skipped>

' Setup resampling rate

' If it matches any standard rate we know then we disable resampling

rdlong temp2,#@clkfreq ' grab system clock

' Check 32kHz

loc pa,#\32_000

call #util_check_sr_fuzzy

if_c setnib spdif_status+0,#%0011, #6

if_c jmp #.nctssetup

loc pa,#\44_100

call #util_check_sr_fuzzy

if_c setnib spdif_status+0,#%0000, #6

if_c jmp #.nctssetup

loc pa,#\48_000

call #util_check_sr_fuzzy ' gets 48k ideal period into temp1

setnib spdif_status+0,#%0010, #6 ' Always set 48k

if_c jmp #.nctssetup

' Ok, resample whatever it is to 48k

' debug(udec(temp1))

' debug(udec(audio_period))

qdiv temp1, audio_period ' temp1 still has 48kHz period

getqx resample_ratio_int

getqy temp1

qfrac temp1, audio_period

getqx resample_ratio_frac

mov resample_antidrift_period, audio_period ' Always set NC

sub resample_antidrift_period, #1

mov resample_antidrift_phase, resample_antidrift_period ' Init to max value because DECMOD

.nctssetup

if_c mov temp1, audio_period ' If not resampling, use input rate

' Disable de-jitter code if not resampling or fractional ratio >= 0.5

mov patch_enabled, #1

tjns resample_ratio_frac,#.no_djkill

tjnz resample_antidrift_period,#.no_djkill

mov patch_enabled, #0

debug("Disabled de-jitter!")

.no_djkill

' Setup audio clock regen packet

mov packet_header, #$00_00_00_01 ' Clock packet

debug(udec(resample_ratio_int))

debug(uhex(resample_ratio_frac))

' debug(udec(temp2))

mov temp3, ##10000

mul temp3, temp1

shr temp3, #1 wc

add temp2, temp3

rcl temp3, #1

qdiv temp2, temp3 ' clkfreq/period (rounded)

getqx temp2 ' gives multiplier that shifts 1280/period into optimal range

mul temp1, temp2 ' CTS value

mov hdmi_regen_period, temp2

mul hdmi_regen_period, #10

mul temp2, ##1280 ' N value

debug("N: ",udec_(temp2)," CTS: ",udec_(temp1))

' debug(udec(hdmi_regen_period))

' Pack them into the clown car, honk honk

setr temp3, #packet_data0

rep @.nctspck, #4

alti temp3, #%111_000_000

movbyts temp1, #%%0123

alti temp3, #%111_000_000

movbyts temp2, #%%3012

.nctspck

rczr a wcz

mov packet_extra, ##$FE_FF_FF ' Start bit, hsync

if_c setbyte packet_extra, #0, #1 ' positive vsync

if_nz setbyte packet_extra, #0, #0 ' negative hsync

loc pb, #\clkpkt

call #terc_encode

xor packet_extra, ##$FF00

' setbyte packet_extra, #$FF, #1 ' VSync version

loc pb,#\clkpktv

call #terc_encode

setq #9-1

rdlong packet_header, ##$80000 ' clear packet data

jmp #fieldloop 'begin outputting video frames

util_check_sr_fuzzy

'' Compute period of sample rate in PA into temp1 (using clkfreq in temp2)

'' Set C if audio_period is close enough

mov pb,pa

shr pb,#1

add pb,temp2

qdiv pb,pa

getqx temp1

mov pb,temp1

sub pb,audio_period

abs pb

_ret_ cmp pb,#1 wc

util_infoframe_chksum

setbyte packet_data0+0,#0,#0

setbyte packet_header+0,#0,#3

setbyte packet_data0+1,#0,#3

setbyte packet_data1+1,#0,#3

setbyte packet_data2+1,#0,#3

setbyte packet_data3+1,#0,#3

getbyte temp1,packet_header,#2 ' grab length

add temp1,#4-1

mov temp2,#0

.chklp

altgb temp1,#packet_header

getbyte temp3

sub temp2,temp3

djnf temp1,#.chklp

' debug("infoframe checksum ",uhex_(temp2))

_ret_ setbyte packet_data0,temp2,#0
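The N/CTS arithmetic in the clock regen setup above can be checked off-chip. Below is a rough Python sketch of the same integer maths, assuming the TMDS character clock is clkfreq/10 (consistent with the 8000-clock, 800-pixel line mentioned earlier). The function name `n_cts` is made up for illustration, and the model ignores the 16x16-bit operand limit of the P2 `mul` instruction.

```python
def n_cts(clkfreq, audio_period):
    """Illustrative model of the posted N/CTS setup (not the driver itself).

    audio_period is system clocks per audio sample.
    Returns (multiplier, N, CTS).
    """
    denom = 10000 * audio_period              # mov temp3,##10000 / mul temp3,temp1
    mult = (clkfreq + denom // 2) // denom    # rounded clkfreq / (10000 * period)
    n = mult * 1280                           # mul temp2,##1280
    cts = mult * audio_period                 # mul temp1,temp2
    return mult, n, cts


if __name__ == "__main__":
    clkfreq = 252_000_000                      # assumed sysclock for 25.2 MHz pixels
    period = (clkfreq + 24_000) // 48_000      # same rounding as in the question
    mult, n, cts = n_cts(clkfreq, period)
    tmds_hz = clkfreq // 10
    # HDMI requires 128 * fs == f_tmds * N / CTS
    print(mult, n, cts, tmds_hz * n / cts / 128)
```

Since fs = clkfreq/period and f_tmds = clkfreq/10, the required ratio N/CTS reduces to 1280/period, which is why the code only needs one multiplier applied to both terms.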

It should definitely work - just build whatever you copied it out of to verify.

All zeroes is the correct setting for bypass. The ratio is indeed zero, but the antidrift counter will underflow every time, so it doesn't matter (antidrift is normally used to fix rounding error in the ratio).
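To see why all zeroes gives bypass, here is a minimal Python model of the phase update at the end of the `.dosp` section in the posted snippet. It assumes DECMOD wraps to S and sets C when D is zero, and otherwise decrements with C clear; it also ignores the input buffer running empty. The names `decmod` and `inputs_per_output` are invented for this sketch.

```python
def decmod(d, s):
    """Model of P2 DECMOD: wrap to s with C set when d == 0, else decrement."""
    if d == 0:
        return s, True
    return d - 1, False


def inputs_per_output(ratio_int, ratio_frac=0, antidrift_period=0, outputs=8):
    """Count input samples consumed before each output sample is produced."""
    phase_int = 0
    phase_frac = 0
    antidrift_phase = antidrift_period
    consumed = []
    for _ in range(outputs):
        # .feed runs once per count in resample_phase_int (tjz/djnz pair)
        consumed.append(phase_int)
        phase_int = 0
        antidrift_phase, carry = decmod(antidrift_phase, antidrift_period)
        if carry:
            phase_frac = 0                      # if_c mov resample_phase_frac,#0
        else:
            total = phase_frac + ratio_frac     # if_nc add ... wc
            carry = total > 0xFFFF_FFFF
            phase_frac = total & 0xFFFF_FFFF
        phase_int += ratio_int + (1 if carry else 0)   # addx
    return consumed


if __name__ == "__main__":
    for r in (0, 1, 2):
        print(r, inputs_per_output(r))
```

In this model, ratio 0 consumes one input per output (bypass), while patching the integer ratio to 1 or 2 consumes two or three inputs per output, which lines up with the half-rate and one-third-rate results reported above.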

@Wuerfel_21 said:

It should definitely work - just build whatever you copied it out of to verify.

All zeroes is the correct setting for bypass. The ratio is indeed zero, but the antidrift counter will underflow every time, so it doesn't matter (antidrift is normally used to fix rounding error in the ratio).

Okay I reset it back to zero and do see some samples generated now - however it seems to be getting into a regime where there's only ever one sample seen per packet and I see an audio packet rate of 31.5kHz based on the number of samples and the number of pixels and 25.2Mpixels/s. Maybe I'm not sampling often enough on the scan line. I sample audio at the following times on the scan line:

start of line (0 clocks)

half way through text processing and also at end of 80 chars rendered, ~1600 & 3200 clock cycles later

(or) in graphics mode per each pixel group doubling iteration of 240 output longs

at end of TERC encode ~ 1300-2000 cycles later.

then repeatedly in a tight loop until near the end of the scan line when I return for the next line

Here's what I get: ~31.5kHz rate

❯ grep Sample out | more

Sample Interval Type Red(ch2) Green(ch1) Blue(ch0) Clock RRGGBB TMDS/CTS 128*Fs FsClk FifoSize Action

587 Sample 0 right = 000000, left = 000000, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=0 0 1 SAMPLE WRITE

1387 Sample 0 right = 028900, left = 028900, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=1 0 2 SAMPLE WRITE

2187 Sample 0 right = 03C500, left = 03C500, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=2 0 3 SAMPLE WRITE

2987 Sample 0 right = 062000, left = 062000, flags=88 Pr=1 Cr=0, Pl=1 Cl=0 ParityR=1 ParityL=1 Channel status count=3 0 4 SAMPLE WRITE

3787 Sample 0 right = 073800, left = 073800, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=4 0 5 SAMPLE WRITE

4587 Sample 0 right = 093000, left = 093000, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=5 0 6 SAMPLE WRITE

5387 Sample 0 right = 0A0B00, left = 0A0B00, flags=88 Pr=1 Cr=0, Pl=1 Cl=0 ParityR=1 ParityL=1 Channel status count=6 0 7 SAMPLE WRITE

....

1565387 Sample 0 right = F9DF00, left = F9DF00, flags=88 Pr=1 Cr=0, Pl=1 Cl=0 ParityR=1 ParityL=1 Channel status count=79 2927 -971 SAMPLE WRITE

1566187 Sample 0 right = F8C700, left = F8C700, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=80 2928 -971 SAMPLE WRITE

1566987 Sample 0 right = F6CF00, left = F6CF00, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=81 2930 -972 SAMPLE WRITE

1567787 Sample 0 right = F5F400, left = F5F400, flags=88 Pr=1 Cr=0, Pl=1 Cl=0 ParityR=1 ParityL=1 Channel status count=82 2931 -972 SAMPLE WRITE

1568587 Sample 0 right = F48900, left = F48900, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=83 2933 -973 SAMPLE WRITE

1569387 Sample 0 right = F3FE00, left = F3FE00, flags=88 Pr=1 Cr=0, Pl=1 Cl=0 ParityR=1 ParityL=1 Channel status count=84 2934 -973 SAMPLE WRITE

1570187 Sample 0 right = F34100, left = F34100, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=85 2936 -974 SAMPLE WRITE

1570987 Sample 0 right = F30100, left = F30100, flags=88 Pr=1 Cr=0, Pl=1 Cl=0 ParityR=1 ParityL=1 Channel status count=86 2937 -974 SAMPLE WRITE

1571787 Sample 0 right = F31100, left = F31100, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=87 2939 -975 SAMPLE WRITE

1572587 Sample 0 right = F39000, left = F39000, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=88 2941 -976 SAMPLE WRITE

❯ grep Sample out | wc

1967 45231 318232

Am wondering if it is related to this hacky stuff...if it exits the loop early it might only allow a single sample per audio packet. When I increased the first line incmod value to 3-1 instead of 2-1 it still issued only one sample per line. Maybe this patch is not being skipped and forces an exit due to comparing zero with the #1 value which sets carry and exits.

incmod temp3,#3-1 wc ' This limits it to encoding 2 samples per scanline, which ought to be enough

' Hacky check to fix sampling jitter:

' Packets where frac->int overflow occurs are only allowed in first packet slot

' This is probably bad and a better way should be found.

mov temp1,resample_phase_frac

tjz patch_enabled, #.skippatch

.jptch1 if_nc add temp1,resample_ratio_frac wc ' Check if next frac add or

.jptch2 if_nc cmp resample_antidrift_phase,#1 wc ' antidrift overflows

.skippatch

if_nc jmp #.sploop

.spdone

The logic here seems to not disable this patch if the tjns line is executed which it will be because resample_ratio_frac is never set after startup and it remains at zero.

' Disable de-jitter code if not resampling or fractional ratio >= 0.5

mov patch_enabled, #1

tjns resample_ratio_frac,#.no_djkill

tjnz resample_antidrift_period,#.no_djkill

mov patch_enabled, #0

' debug("Disabled de-jitter!")

.no_djkill
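The mismatch between the comment and the branch sequence can be laid out in a short Python sketch. It assumes "not resampling" is identified by `resample_antidrift_period` being zero, which is a reading of the setup code rather than something the posts state outright. Both function names are hypothetical.

```python
HALF = 0x8000_0000  # bit 31 set means fractional ratio >= 0.5


def dejitter_enabled_as_posted(ratio_frac, antidrift_period):
    """Follow the tjns/tjnz sequence exactly as it appears in the snippet."""
    if ratio_frac < HALF:          # tjns: bit 31 clear, jump to .no_djkill
        return True
    if antidrift_period != 0:      # tjnz: non-zero, jump to .no_djkill
        return True
    return False                   # mov patch_enabled,#0


def dejitter_enabled_per_comment(ratio_frac, antidrift_period):
    """What the comment asks for: off if not resampling OR frac >= 0.5."""
    not_resampling = antidrift_period == 0   # assumption, see lead-in
    return not (not_resampling or ratio_frac >= HALF)


if __name__ == "__main__":
    # Bypass case: everything zeroed at startup
    print(dejitter_enabled_as_posted(0, 0), dejitter_enabled_per_comment(0, 0))
```

With everything zeroed, the posted sequence leaves the patch enabled while the comment's condition would disable it, so the `cmp resample_antidrift_phase,#1 wc` sets carry on every pass and the loop exits after one sample.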

EDIT: Yep, when I manually disabled the dejitter hack it seems to work now and I get ~2997 samples in 1572229 pixels which is 2997/1572229 * 25.2MHz ~ 48kHz. So that code is not working correctly.

1569099 Sample 1 right = 0C6F00, left = 0C6F00, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=0 2982 7 SAMPLE WRITE

1569899 Sample 0 right = 0CBE00, left = 0CBE00, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=1 2983 7 SAMPLE WRITE

1570699 Sample 0 right = 0CEE00, left = 0CEE00, flags=CC Pr=1 Cr=1, Pl=1 Cl=1 ParityR=0 ParityL=0 Channel status count=2 2985 6 SAMPLE WRITE

1570699 Sample 1 right = 0CFE00, left = 0CFE00, flags=88 Pr=1 Cr=0, Pl=1 Cl=0 ParityR=1 ParityL=1 Channel status count=3 2985 7 SAMPLE WRITE

1571499 Sample 0 right = 0CEE00, left = 0CEE00, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=4 2986 7 SAMPLE WRITE

1572299 Sample 0 right = 0CBE00, left = 0CBE00, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=5 2988 6 SAMPLE WRITE

1572299 Sample 1 right = 0C6F00, left = 0C6F00, flags=00 Pr=0 Cr=0, Pl=0 Cl=0 ParityR=0 ParityL=0 Channel status count=6 2988 7 SAMPLE WRITE

❯ grep Sample out | wc

2997 68921 479514
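For reference, the rate estimates quoted here come from the sample count divided by the pixel span of the capture, scaled by the pixel clock. A small Python sketch of that calculation follows; the function name is illustrative, and the pixel span for the first capture is taken from its last logged timestamp.

```python
PIXEL_CLOCK_HZ = 25_200_000  # 25.2 Mpixels/s, as stated in the post


def sample_rate_hz(sample_count, pixel_span):
    """Estimate the audio sample rate from a capture log."""
    return sample_count / pixel_span * PIXEL_CLOCK_HZ


if __name__ == "__main__":
    # With the de-jitter hack active: 1967 samples up to pixel 1572587
    print(round(sample_rate_hz(1967, 1572587)))
    # With the hack manually disabled: 2997 samples in 1572229 pixels
    print(round(sample_rate_hz(2997, 1572229)))
```

The first figure lands near the 31.5kHz line rate (one sample per 800-pixel line) and the second near 48kHz.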

Update: looks like we just made it with pixel doubling and audio. When three samples are encoded per scan line it takes 7716 clocks and two samples takes 7492 clocks. I do need to add the optional next region processing code to this which is ~134 more clocks. 7716 + 134 = 7850 out of 8000 clocks per line. Tight.

When I forced it to encode 4 samples per audio frame I found it took 7940 clocks which is just enough to fit but would mean only one region would be achievable as there is insufficient time to do region processing. I may be able to move some of that work into the back porch if there is any time left after the mouse stuff but that would be for later. Mouse appears to be completed at 1308 clocks into the scan line (~131 pixels in from start of FP), and we have 1600 clocks in the blanking interval. Doing that could enable up to 4 samples per line for 96kHz audio transmitted on a 31.5kHz video line frequency. Right now the limit would be 31.5 * 3 = 94.5kHz.

At 27MHz there are more clocks per scan line so 96kHz may work there.
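The budget figures above can be tabulated with a few lines of Python. This is only bookkeeping on the numbers quoted in this post (8000 clocks per line, ~134 clocks for region processing, and the measured totals for 2, 3 and 4 samples); the names are invented for the sketch.

```python
LINE_BUDGET = 8000          # clocks per scan line (6400 active + 1600 blanking)
REGION_CHANGE = 134         # approximate extra clocks for next-region processing
MEASURED = {2: 7492, 3: 7716, 4: 7940}   # clocks per line by samples encoded


def headroom(samples, region_change=True):
    """Clocks left over on a line; negative means the line overruns."""
    extra = REGION_CHANGE if region_change else 0
    return LINE_BUDGET - MEASURED[samples] - extra


def max_audio_khz(line_khz, samples_per_line):
    """Upper bound on the audio rate if every line carries this many samples."""
    return line_khz * samples_per_line


if __name__ == "__main__":
    for s in sorted(MEASURED):
        print(s, headroom(s), headroom(s, region_change=False))
    print(max_audio_khz(31.5, 3), max_audio_khz(31.5, 4))
```

Three samples with region processing leaves 150 clocks spare, while four samples only fits if region processing is dropped or moved elsewhere.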

@Wuerfel_21 said:

Oh.... will have to investigate how that ever worked... it certainly does for me...

Might work if you declared an uninitialized variable as res 1 instead of long 0 and had something different in HUB RAM at that underlying location which may make the variable a negative value and have it work for you.

Oh, no, there's something deeply cursed occurring.

If I apply the above fix to Tempest 2000 (uses 44.1kHz bypass), the audio timing gets messed up - ?????

Okay, so apparently whatever buggy nonsense comes out with the dejitter code wrongfully enabled, my monitor likes, but correct 44.1 output where some samples are delayed (because I enabled debug mode and there's some prints in the MOD player code)? NO, that gets dropouts.

tl;dr; code posted above is good.

@rogloh before I merge the new code into mainline on any project, I'll ask you and your prickly picky equipment to sanity check it

@Wuerfel_21 said:

Okay, so apparently whatever buggy nonsense comes out with the dejitter code wrongfully enabled, my monitor likes, but correct 44.1 output where some samples are delayed (because I enabled debug mode and there's some prints in the MOD player code)? NO, that gets dropouts.

tl;dr; code posted above is good.

I suspect some HDMI monitors might take all sorts of abuse and fill in the gaps during missing audio samples etc as long as they get something at some fixed rate. So you may not have noticed the issue. DEBUG mode would introduce a lot of larger jitter though and probably is noticeable. This bug would simply send some samples constantly at 31.5kHz. It's essentially resampling to 31.5kHz.

@rogloh before I merge the new code into mainline on any project, I'll ask you and your prickly picky equipment to sanity check it

I tested your fix on your code with my Plasma (using vgatest) as well as in my own driver. Nice zero overhead fix, seems good.

I meant the whole applications as seen above, timing bugs can creep in from the audio driver. Have you built the Tempest 2000 source before? It has a similar branch with the new HDMI driver.

I haven't seen Tempest 2000 before, no, but in the meantime I'll try full NeoYume with this and report back.

Update: played NeoYume first level of MSlug - audio survived fine.

Comments

Well if it's just the first version, don't worry too hard about the edge cases~

No mess if the generator agrees on the overall sample rate (as with the mailbox system) or times itself to the FIFO. In such a system the video cog would also be responsible for the analog audio outputs, so it really becomes an audio+video cog. An interesting idea regarding this: If 32kHz is used, the 640/854/etc x 480 HDMI timing can be fudged (by creating a few extra VBlank lines) such that the line frequency is also 32kHz, matching the audio exactly. This would be super useful for doing multichannel, since otherwise you'd sometimes need to process and encode 2 packets per line.

In prep for HDMI audio I tightened up the original text processing loop a bit and split it up for polling audio, which was getting too slow if I tried to do it in the inner critical REP loop itself. There is a nice natural split position halfway through the output, as I already needed to deal with half the number of characters in double wide mode. So I now do either one iteration of the outer loop for double wide chars or two iterations for normal wide, and I tidied up my skipf mask at the end of the loop in the process, saving a couple of instructions. This new code is working in both double and normal width text mode. New relevant snippet shown below.

I'm now working on something similar in the graphics processing/pixel doubling code which also needs to poll audio without bogging down too much. I believe I can shrink the number of outer loop iterations a lot by always maximizing the available LUT space for transfers using a few conditional altd instructions before some setq2's. May take a few more instructions which I didn't have room for before but should be hundreds less cycles overall, especially for 24bpp mode which is the troublesome one. Moving to something like 36x3=108 clocks of housekeeping overhead vs 28x16=448 before. This is a useful savings if achievable.

if_nz setd textloop, #18 '18 instructions executed for normal text
if_z setd textloop, #24 '24 instructions executed for double wide text
wrnz temp1 'double = 0, normal = 1
mov a, #%11000 wc 'reset starting lookup index
if_nz setbyte a, #%11101110, #2 'skip pixel doubled code when normal wide chars
textloop rep #0-0, #COLS/2 '1940 clocks for 40 double wide chars, 2920 for 80 chars
skipf a 'skip 2 of the next 5 instructions
xor a, #%11110 'flip skip sequence for next time
rdlut d, pb 'read pair of characters/colours
getword c, d, #1 'select first word in long (skipf)
getword c, d, #0 'select second word in long (skipf)
sub pb, #1 'decrement LUT read index (skipf)
getbyte b, c, #0 'extract font offset for char
shr b, #2 'divide by 4
add b, #$110 'add to font base
rdlut pixels, b 'determine font lookup address
movbyts pixels, c 'get font for character's scanline
testflash bitl c, #15 wcz 'test (and clear) flashing bit
flash if_c and pixels, #$ff 'make it all background if flashing
movbyts c, #%01010101 'colours becomes BF_BF_BF_BF
mov b, c 'grab a copy for muxing step next
rol b, #4 'b becomes FB_FB_FB_FB
setq F0FF000F 'mux mask adjusts fg and bg colours
muxq c, b 'c becomes FF_FB_BF_BB
' wide normal
setword pixels, pixels, #1 ' * | replicate low words in long
mergew pixels ' * | ...to then double pixels
mov b, c ' * | save a copy before we lose colours
movbyts c, pixels ' * * compute 4 lower colours of char
ror pixels, #8 ' * | get upper 8 pixels
movbyts b, pixels ' * | compute 4 higher colours of char
wrlut b, ptrb-- ' * | save it to LUT RAM
wrlut c, ptrb-- ' * * save it to LUT RAM
call #audio_poll_repo
djnf temp1, #textloop

Just modified my pixel doubling code to take advantage of a reduced housekeeping loop size and fixed that 24bit mode critical inner loop and now call the audio poller a few times during this workload.
I timestamped the 24bpp doubling work and other line maintenance during the active portion and it is now down to using up ~3750 clocks of the 6400 budget. This should now leave enough for the audio & TERC4 workload I'd hope.

Had a nightmare bug to figure out until I changed this

back to this

pop ptra
ret

Getting rusty in my old age.

Other colour modes are busted right now with pixel doubling but they should require fewer cycles so I'm not too concerned about measuring those at this stage.

UPDATE: fixed some other depths of doubling:

16bpp uses ~3130

8bpp uses ~1600

4bpp uses ~1445

2bpp uses ~630

1bpp uses ~375

UPDATE2: The trick now will be getting all the extra HDMI specific sync logic/streamer code to fit, replacing what I already do for DVI, and creating a suitable init sequence for the new stuff. Down to 43 free COGRAM longs.

Yea, that one should really be added to the hardware errata list. Though I don't remember what kind of glitch this causes (is it just popping once and using it for both the RET and the POP?)

It's a feature, the GETPC feature:

https://forums.parallax.com/discussion/comment/1426230/#Comment_1426230

If line freq is 32kHz then exactly three 96kHz samples per line, presumably. Do most/all HDMI sinks support 96kHz?

P.S. I have acres of whitespace to scroll past whenever I edit one of my posts.

That's one way of putting it. Though a relative LOC instruction does the same thing.

I don't think so. The only required formats are 32/44.1/48kHz stereo. AVRs probably all take 96, TVs maybe, PC monitors probably not (my ASUS PA248QV doesn't even say anything about audio in the spec sheet other than "Speaker: Yes").

Though for stereo 96kHz the line rate matching is not so much of an issue, since you can fit up to 4 samples in one packet for stereo. For multichannel you can only fit 1 (there's always 8 channels even if you're, e.g. only doing 4.0 or 5.1)
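The packet-count reasoning can be sketched in Python. It assumes, as stated in this post, that a stereo audio packet holds up to 4 samples and a multichannel packet holds 1, and it only looks at the worst-case line. The function name is made up for illustration.

```python
def worst_case_packets_per_line(fs_hz, line_hz, multichannel):
    """Audio sample packets needed on the busiest scan line."""
    samples = -(-fs_hz // line_hz)            # integer ceiling of fs / line rate
    per_packet = 1 if multichannel else 4     # capacities as described in the post
    return -(-samples // per_packet)


if __name__ == "__main__":
    for fs, line in ((48_000, 31_500), (32_000, 32_000), (96_000, 32_000)):
        print(fs, line,
              worst_case_packets_per_line(fs, line, multichannel=False),
              worst_case_packets_per_line(fs, line, multichannel=True))
```

At 48kHz on a 31.5kHz line, multichannel sometimes needs 2 packets per line, whereas matching a 32kHz sample rate to a 32kHz line rate keeps it at exactly 1.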

Side note that's somewhat on-topic: The HDMI/CEA spec for surround channel mapping is apparently kinda broken?

So:

Sorry for the unprompted rant, but if an idiot like me who's read a few papers and specs can spot something obviously amiss...

Huh?

Running into a potential issue coding up stuff for HDMI data island packet transfers. Can the streamer handle two back to back commands using the HUB RAM from different source addresses without introducing a gap?

I'd like to do something like the following snippet...

rdfast #0, ptrb ' setup FIFO source address for fifo data, to pre-fill it
...
xcont xhub32, #0 ' send 32 longs from hub
rdfast #0, next ' setup next source address for fifo data
xcont xhub32, #0 ' send another 32 longs from hub from second address
...
xhub32 long X_RFLONG_32P_4DAC8 | 32

My question is, will the second rdfast mess up the first? Will it corrupt the streaming in progress? I don't want to wait for the FIFO to empty before setting up the next transfer, as is done by setting bit31 in the D field, because that will resync me to the point when the first transfer is complete and I have other things I wish to do in this time. I basically want to issue both streamer instructions closely together and essentially buy myself ~640 clocks for other work I can go do in this time.

I'm pretty much guessing this approach above isn't going to be possible given the FIFO prefill time.

Normally FBLOCK can be used to setup the next address in advance when doing multiple FIFO transfers with wrapping etc but this seems to be for 64 byte block transfers and I'm sending 32 longs. Perhaps if I align the data it still could be possible to use somehow? Will FBLOCK also work with the streamer?

In theory I could send 64 longs in one streamer command, but the second packet data (clock regen) is pre-encoded (static) which introduces complexities during VSYNC where it needs to be encoded differently.

Yes, this is what FBLOCK is explicitly for. It works on 64 byte blocks, so 32 longs means you need D=2. For smooth changeover, all your buffers must be whole blocks apart and long-aligned. I don't even think you need the 2nd streamer instruction, you can just do one 64 long command.
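As a quick sanity check of the block arithmetic, here is a small Python sketch. It only encodes what is said above (64-byte blocks, buffers whole blocks apart and long-aligned); the helper names are hypothetical and nothing here models the FIFO hardware itself.

```python
FBLOCK_BYTES = 64   # FBLOCK counts in 64-byte blocks
LONG_BYTES = 4


def fblock_count(longs):
    """Number of 64-byte blocks covering a transfer of `longs` longs."""
    nbytes = longs * LONG_BYTES
    if nbytes % FBLOCK_BYTES:
        raise ValueError("transfer is not a whole number of 64-byte blocks")
    return nbytes // FBLOCK_BYTES


def smooth_changeover_ok(addr_a, addr_b):
    """Check two buffer addresses are long-aligned and whole blocks apart."""
    aligned = addr_a % LONG_BYTES == 0 and addr_b % LONG_BYTES == 0
    return aligned and (addr_b - addr_a) % FBLOCK_BYTES == 0


if __name__ == "__main__":
    print(fblock_count(32))                       # one 32-long packet
    print(smooth_changeover_ok(0x1000, 0x1080))   # 128 bytes apart
    print(smooth_changeover_ok(0x1000, 0x1050))   # 80 bytes apart
```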

Um yeah it's worth an attempt. I hope it works correctly in conjunction with the streamer as I've only used it before without the streamer.

The issue with coupling is the VSYNC encode version of the clock regen packet that immediately follows audio is different. I guess I can encode the audio packet to a different address on the scanline before vsync starts and do that until the second last line of vsync - just messy to check all the different scan lines for different work. Also in some cases I don't send a clock regen packet and would prefer to send a NULL pkt instead.

I just stuck to plain stereo. 5.1 and the likes always seemed a mess. The subs always seemed to pump out a monotonous throb and nothing else.

I might have just managed to get some sort of HDMI implementation to barely fit inside my P2 video driver. Worked on the tricky sync code today and found I could use skipf to an advantage here. I have 1 COGRAM long left right now but I think I can potentially free about 4 more when I get desperate. In addition I have kept the VGA's VSYNC pin toggle code in place (5 pin mode) done at VSYNC time so maybe there is hope for VGA+HDMI simultaneously depending on what else is required there in the runtime code, if anything. This code compiles but is untested and probably has bugs.

Still need to add into the small amount of spare LUTRAM a couple more things to complete this to my satisfaction for testing:

1) audio volume control (mostly just a couple of multiplies - hopefully 16 bit only)

2) chain loading some more code after the TERC4 packet encoding stage which will do some audio polling to the end of the scan line. I'm anticipating that I'll probably need to latch a timestamp from the start of the scan line so the code knows when to stop before the end of the scan line and return for the next hsync to be prepared. Right now that polling code, which runs after everything on the line has been done and the COG is just sitting idle, probably won't fit the space.

For now I will probably need to align the horizontal sync to the start of the island data and keep it within the first 64 pixels once the data island begins. It's tricky to have it change anywhere in the middle of the back porch following the island as this adds more streamer commands and COGRAM storage.

In theory this code has the ability to send out two InfoFrames on the last VSYNC scan line (or whatever packet is encoded by the client into HUB RAM). It's slightly messy because the driver could either decide to send a null packet or a clock regeneration packet prior to these two InfoFrames, so the setup code would have to copy this extra packet data into two HUB locations to have the packets ready and stored contiguously for both packet send options, but this should be doable.

Later on, if interlacing support is added (480i/576i), all pixels will have to be doubled by default and even quadrupled if the resolution drops to 320 or 360 pixels via my horizontal double wide option flag configured per region. This will take up more code space, but my plan for that is to move the doubling/quadrupling code into LUTRAM overlays, which can then be read in to either double or quadruple pixels as needed. Doing this also frees some extra COGRAM space, ~32 longs IIRC, which can be helpful for also changing the text mode implementation to optionally pixel quadruple, as well as any extra vsync changes for interlaced timing.

'--------------------------------------------------------------------------------------------------
' Subroutines
'--------------------------------------------------------------------------------------------------
' active vsync blank
blank_vsync skipf skipfmask_vsync '
cmp pa, #1 wz ' * z=1 if pa=1 (last sync line)
blank skipf skipfmask_vblank ' | *
hsync skipf skipfmask_active ' | |
xzero m_hfp, hsync0 ' * * * send shortened fp before preamble
cmpsub hdmi_regen_counter, hdmi_regen_period wc ' * * * check if time to send regen pkt (c=1)
xcont m_imm8, preamble ' * | * send preamble before island
xcont m_imm8, preamble_vsync ' | * | send preamble before island (vsync line)
rdfast #2, audio_pktbuf ' * | * prepare encoded audio pkt
wrlong status, statusaddr ' * * * update clients with scan status
if_c fblock #0, aclk_pktbuf ' * | * send aclk pkt (normal encode)
if_nc fblock #0, null_pktbuf ' * | * send null when no regen packet ready
if_c fblock #0, aclkv_pktbuf ' | * | send aclk pkt (vsync encoded)
if_nc fblock #0, nullv_pktbuf ' | * | send null when no regen packet ready
xcont m_imm2, dataguard ' * | * send leading guard band
xcont m_imm2, dataguard_vsync ' | * | send leading guard band (vsync)
alt0 xcont m_hubpkt, #0 ' * * * send two packets
if_z xcont m_hubpkt, #0 ' | * | send two more packets
setq2 #256-1 ' * | | read large palette for this scan line
rdlong 0, paletteaddr ' * | |
xcont m_imm2, dataguard ' * | * trailing guard band
_ret_ xcont m_hbp, hsync0 ' * | | send shortened back porch before preamble+gb
xcont m_imm2, dataguard_vsync ' * | trailing guard band sync encoded
xcont m_hbp2, hsync0 ' * * only control, no pre+gb for blank/sync lines
if_z altd alt0, #m_vi2 ' * | shrink vsync vis line for extra 64 pixel pkts
xcont m_vi, hsync0 ' * * generate blank line pixels
call #\loadencoder ' * * encode next audio pkt & poll audio
_ret_ djnz pa, #blank_vsync ' * | repeat
_ret_ djnz pa, #blank ' * repeat
skipfmask_vsync long %111100010011010100110 'check this mask
skipfmask_vblank long %10010110111010110000100010 'check this mask
skipfmask_active long %0000000010101100001000
'hub RAM addresses of packet buffers stored here
audio_pktbuf long 0
aclk_pktbuf long 0
aclkv_pktbuf long 0
null_pktbuf long 0
nullv_pktbuf long 0
avi_pktbuf long 0 ' dual pkt buf here audio+video infoframes, always sent on last vsync line
m_hubpkt long X_RFLONG_32P_4DAC8 | X_PINS_ON | 64
m_imm8 long X_IMM_1X32_4DAC8 | X_PINS_ON | 8
m_imm2 long X_IMM_1X32_4DAC8 | X_PINS_ON | 2
preamble long %0010101011_0010101011_1101010100_10 'preamble pattern when vsync is inactive (assumes hsync inactive)
preamble_vsync long %0010101011_0010101011_0101010100_10 'preamble pattern when vsync is active (assumes hsync inactive)
dataguard long %0100110011_0100110011_1010001110_10
dataguard_vsync long %0100110011_0100110011_0101100011_10
video_guard long %1011001100_0100110011_1011001100_10
video_preamble long %1101010100_0010101011_1101010100_10

As previously discovered, the preamble doesn't have to be exactly 8 pixels, it just needs to be at least 8 pixels. So you can remove some complexity by turning the entire front porch into a preamble. (This checks out with the conformance document I found)

I mean, it is a mess. Though if the sub sounds like that, level/crossover is probably set weirdly. (possibly because someone didn't understand that "small speaker" in the settings means "really small speaker" and any real speaker (yes even if bought at ALDI) should be set to "large" and that leads to setting the crossover so low that the sub only gets LFE and very low sub-bass). Of course nothing stops one from getting properly big speakers (as one would use for a good stereo setup), hooking up 4 or 5 of those and just not having a sub at all. I actually really like the ProLogic style phase-amplitude matrix systems for music, nicely spreads the sound all around the room, a lot more like listening on headphones than normal stereo speakers.

Ok, just wasn't sure about doing that. I do plan to make the first bit of the data packet align with the hsync, so the preamble would certainly remain part of the front porch.

I had another thought about how to tidy things up a bit. If I keep one buffer area in HUB RAM with the following sequence of contiguous 32 pixel packets it should save longs as I can compute 128 byte offsets to select different output packets. No need to keep lots of addresses in COGRAM and I can just have a single InfoFrame update too which is simpler. I would just need to send the Null or Clock regen packet as the first of the pair (reverse the order). Am going to look into this. It may improve things quite a bit.

Static Null packet (normal)

Static Clock regen packet(normal)

Static Null packet (vsync)

Static Clock regen packet (vsync)

Audio encoded packet (either vsync or normal encoded by software)

InfoFrame packet 1 (only ever sent on vsync line, contents can vary with client COG's changes)

InfoFrame packet 2 (ditto)

(etc...)
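To make the offset arithmetic concrete, here is a minimal Python sketch of how one base address plus a multiple of 128 bytes could select each packet in the layout listed above. The slot names and the helper functions are hypothetical and only used for illustration; the 128 byte packet size and the packet order are taken from the list.

```python
# Sketch only: models the contiguous packet buffer described above.
# Slot names and function names are illustrative assumptions, not driver symbols.
PACKET_BYTES = 128  # one 32 pixel packet, addressed in 128 byte steps

(SLOT_NULL, SLOT_REGEN, SLOT_NULL_VSYNC, SLOT_REGEN_VSYNC,
 SLOT_AUDIO, SLOT_INFOFRAME1, SLOT_INFOFRAME2) = range(7)


def packet_address(base, slot):
    """Hub address of a packet slot, given the buffer base address."""
    return base + slot * PACKET_BYTES


def first_packet_address(base, vsync_line, send_regen):
    """Select the Null or Clock regen packet that goes out first on a line.

    vsync lines use the vsync-encoded pair (+256 bytes); a pending clock
    regen packet selects the second entry of the pair (+128 bytes).
    """
    slot = (2 if vsync_line else 0) + (1 if send_regen else 0)
    return packet_address(base, slot)


if __name__ == "__main__":
    base = 0x1000  # hypothetical hub address
    for vsync in (False, True):
        for regen in (False, True):
            print(vsync, regen, hex(first_packet_address(base, vsync, regen)))
    print("audio packet at", hex(packet_address(base, SLOT_AUDIO)))
```

With this layout only the base address (and possibly the audio packet address) needs to live in COGRAM; everything else is a constant offset.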

Update: now I have 5 free COGRAM longs after this change. Here's the updated code:

'                                                                         active vsync blank
blank_vsync             cmp     pa, #1 wz                                 '        z=1 if pa=1 (last sync line)
                        skipf   skipfmask_vsync                           '        do vsync line
blank                   skipf   skipfmask_vblank                          '   |    do other blanking line
hsync                   skipf   skipfmask_active                          '   | |  do active line
                        xzero   m_hfp, hsync0                             ' * * *  send shortened fp before preamble
                        cmpsub  hdmi_regen_counter, hdmi_regen_period wc  ' * * *  c=1 if time to send a clock regen pkt
                        mov     pb, pktbuf                                ' * * *  setup base packet buffer address
                        add     pb, #256                                  ' | * |  offset to send in vsync line
                if_c    add     pb, #128                                  ' * * *  offset to send clock regen pkt (c=1)
                        xcont   m_imm8, preamble                          ' * | *  send preamble before island
                        xcont   m_imm8, preamble_vsync                    ' | * |  send preamble before island (vsync line)
                        rdfast  #2, pb                                    ' * * *
                        wrlong  status, statusaddr                        ' * * *  update clients with scan status
                        fblock  #0, pktbuf_audio                          ' * * *  setup next packet start address
                        xcont   m_imm2, dataguard                         ' * | *  send leading guard band
                        xcont   m_imm2, dataguard_vsync                   ' | * |  send leading guard band (vsync)
alt0                    xcont   m_hubpkt, #0                              ' * * *  send two packets
                if_z    xcont   m_hubpkt, #0                              ' | * |  send two more packets
                        setq2   #256-1                                    ' * | |  read large palette for this scan line
                        rdlong  0, paletteaddr                            ' * | |
                        xcont   m_imm2, dataguard                         ' * | *  trailing guard band
                _ret_   xcont   m_hbp, hsync0                             ' * | |  send shortened back porch before preamble+gb
                        xcont   m_imm2, dataguard_vsync                   '   * |  trailing guard band sync encoded
                        xcont   m_hbp2, hsync0                            '   * *  only control, no pre+gb for blank/sync lines
                if_z    altd    alt0, #m_vi2                              '   * |  shrink vsync vis line for extra 64 pixel pkts
                        xcont   m_vi, hsync0                              '   * *  generate blank line pixels
                        call    #\loadencoder                             '   * *  encode next audio pkt & poll audio
                _ret_   djnz    pa, #blank_vsync                          '   * |  repeat
                _ret_   djnz    pa, #blank                                '     *  repeat

'hub RAM address of packet buffers stored here contiguously in this sequence. Must be aligned to 64 byte boundary.
' null
' audio clock regen
' null (vsync)
' audio clock regen (vsync)
' audio packet sample (both)
' avi infoframe(s) (only vsync encoded)
pktbuf                  long    0
pktbuf_audio            long    0

Almost there with the code for HDMI but still cranking on packet setup during init and sync polarity stuff. That gets messy to deal with and is prone to error.

Confidence is high right now that this should fit both P2 timing wise and COG/LUT RAM wise. Something should work at least once bugs are sorted.

I'm going to load the 256 entry palette and mouse sprite code into LUT RAM during HSYNC time when 2 packets are sent out (64 pixels = 640 P2 clocks). Then during horizontal back porch I can run the mouse sprite code, which takes about 480 clocks to execute. This will be nicely delayed and should give the external memory access issued on the previous scan line plenty of time to complete, so the returned pixel data is ready for the sprite to be overlaid on it. Essentially the back porch will need to be set up with at least 48 pixels in order to have time to execute this sprite code before the video guard is sent.

This seems reasonable, and normal 640x480 mode at 25.2MHz uses the following pixel timing for blanking:

HFP=16, HSYNC=96, HBP=48. We can move the extra 32 pixels from HSYNC into BP to ensure we have sufficient time for a mouse sprite to be rendered. My setup code can probably just reallocate any excess HSYNC size that exceeds 64 pixels into the HBP. This simplifies the multi-packet encoding.

27MHz HDMI (widescreen 720x480) typically uses different timing like this:

HFP=16, HSYNC=62, HBP=60. This timing can be modified to send 16, 64 and 58 pixels instead which should still be sufficient for a mouse sprite. Any time HSYNC is configured with less than 64 pixels, the missing amount can simply be stolen from the HBP.
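The HSYNC/HBP reallocation described for both timings can be sketched in a few lines of Python. This is only an illustration of the arithmetic; the function name and the error check are assumptions, not the driver's setup code.

```python
# Sketch only: force the sync portion to 64 pixels (two 32 pixel packets)
# and move the surplus or deficit into the back porch, as described above.
SYNC_PIXELS = 64


def adjust_blanking(hfp, hsync, hbp, sync_pixels=SYNC_PIXELS):
    """Return (hfp, hsync, hbp) with hsync fixed at sync_pixels.

    Excess sync pixels are added to the back porch; a shortfall is taken
    from it. Total horizontal blanking is unchanged.
    """
    excess = hsync - sync_pixels      # negative when hsync is too short
    new_hbp = hbp + excess
    if new_hbp < 0:
        raise ValueError("back porch too small to absorb the sync shortfall")
    return hfp, sync_pixels, new_hbp


if __name__ == "__main__":
    print(adjust_blanking(16, 96, 48))  # 640x480 timing   -> (16, 64, 80)
    print(adjust_blanking(16, 62, 60))  # 720x480 timing   -> (16, 64, 58)
```

In both cases the back porch stays at or above the 48 pixels wanted for the mouse sprite code.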

The HFP needs to be at least 8+2 pixels to send a preamble and data guard band but my code (currently) expects a non-zero control portion of around 2 pixels for this as well (16 clocks). This time is useful for figuring out the packets to go out and to preload the FIFO for streaming these packets. So realistically the HFP should be reduced to no less than 12 pixels for the code to work, though the default of 16 is fine, and I think it needs at least 14 in order to sync correctly anyway.

This means that blanking can't really be reduced further than about 14+64+48 or so, and using 128 total horizontal blanking pixels is probably safer. You could probably then achieve (1360+128)x(768+3?) at 30fps on a P2 @ 344MHz on some low refresh rate display device that can accept the absolute minimum of blanking. Higher resolutions = lower frame rates. 1280x720p24 is probably achievable at ~254MHz with 750 total vertical lines being sent and the reduced horizontal blanking, although it is an uncommon display resolution and refresh combination. Even @pik33's special favourite resolution of 1024x576p could potentially be done by sending out 1152x625x50Hz at a blistering P2 speed of 360MHz, if a display accepts this timing.
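The clock figures quoted here follow from 10 P2 clocks per pixel (the same ratio as "64 pixels = 640 P2 clocks" earlier). A small Python sketch of that arithmetic, with a hypothetical helper name, and treating the uncertain "768+3?" vertical total as given:

```python
# Sketch only: required P2 system clock for a given total frame size and rate.
CLOCKS_PER_PIXEL = 10  # one P2 clock per TMDS bit, 10 bits per pixel


def required_sysclk_hz(h_total, v_total, fps):
    """P2 clock needed to send h_total x v_total pixels fps times a second."""
    return h_total * v_total * fps * CLOCKS_PER_PIXEL


if __name__ == "__main__":
    modes = {
        "1360x768p30": (1360 + 128, 768 + 3, 30),   # vertical total is a guess in the post
        "1280x720p24": (1280 + 128, 750, 24),
        "1024x576p50": (1152, 625, 50),
    }
    for name, (h, v, fps) in modes.items():
        print(f"{name}: {required_sysclk_hz(h, v, fps) / 1e6:.2f} MHz")
```

This gives roughly 344.17, 253.44 and 360.00 MHz, matching the numbers above.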

Separately to all this, I was thinking about an alternative way to pass in audio sample data if a spare pin can't be used as a repo. If we use the repo pin idea with the HDMI pins we have to give up the bitDAC IO on the pins and probably drive the TMDS data at voltage levels outside the spec (which typically works but I don't like it that much). The alternative way could just use a single HUB memory location instead of a more complicated FIFO in order to keep the resource use low and an ATN could be signalled to interested COGs.

Right now my driver doesn't really make good use of ATN so an audio COG could potentially write stereo 16 bit data into a known memory address and then issue a COGATN. The polling code could be altered from this code...

audio_poll_repo         cmp     audio_inbuf_level,#4 wc
.pptchd         if_c    testp   #0-0 wc                         ' TESTP is a D-only instr
                if_c    altd    audio_inbuf_level,.inbuf_incr
.pptchs         if_c    rdpin   0-0,#0-0
                        ret     wcz
.inbuf_incr             long    audio_inbuffer + (1<<9)

...to the following code. It just requires one more COG long to hold the HUBRAM audio sample address and some suitable setup amongst COGs to share this common address. Not quite as easy as the Repo pin method, but still probably very simple to achieve.

audio_poll_repo         cmp     audio_inbuf_level,#4 wc
                if_c    jnatn   #exitpoll
                if_c    altd    audio_inbuf_level,.inbuf_incr
.pptchs         if_c    rdlong  0-0,audio_addr
exitpoll                ret     wcz
.inbuf_incr             long    audio_inbuffer + (1<<9)

UPDATE: I think it's now fully coded unless I've missed something. There will be bugs no doubt but I should be able to start checking/testing very soon.

Current storage:

COGRAM - 498 used of 502 total longs

HDMI encoding overlay - 240 of 240 LUT RAM entries used, though this could be split into two different parts eventually, audio + encode

Driver setup overlay - 271 of 272 LUT RAM entries used, any more than 272 prevents the loading of the HDMI overlay during init time for packet encoding, though this part could be moved to HUBexec if required.

It's alive! Well, HDMI framed video anyway, with my "Chimera" test driver (a hybridised meld of @Wuerfel_21's audio + TERC encoder and my P2 video driver). Audio packets are being encoded yet apparently not being produced but that should be figured out soon.

Had 4 real bugs to resolve so far to get to this point. All I can say is that the P2 DEBUG stuff and my TMDS decoder program saved the day, and it only took me a few hours instead of potentially days to sort this lot out.

Once those were sorted it seems to be stable, and I can now work with this.

In other testing last night I discovered the worst case for a 640x480 resolution output was about 7104 clocks to execute the scan line, measured from the start of horizontal blanking in 24 bit pixel doubling mode, including audio/TERC4 encoding, up to the final audio poller sample operation before returning and starting the next line. I will probably also need to add another 134 clocks or so to this if the region changes on the scan line, and it might need a couple of hundred more cycles per extra audio sample included in the audio packets. TBD. But even with those it should still fit the budget of 8000 clocks (6400 active, 1600 blanking). I should know soon.
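As a rough check of that budget, here is a small Python sketch. The 7104 and 134 clock figures come from the measurements above; the 200 clocks per extra audio sample is only a placeholder for "a couple of hundred" and is an assumption, as are the function names.

```python
# Sketch only: per-line clock budget for 640x480 (800 total pixels per line).
CLOCKS_PER_PIXEL = 10


def line_budget_clocks(h_active, h_blank):
    """P2 clocks available per scan line."""
    return (h_active + h_blank) * CLOCKS_PER_PIXEL


def headroom_clocks(budget, measured, extras=()):
    """Clocks left after the measured worst case plus any extra costs."""
    return budget - measured - sum(extras)


if __name__ == "__main__":
    budget = line_budget_clocks(640, 160)
    print(budget)                                    # 8000
    spare = headroom_clocks(budget, 7104, [134])     # region change included
    print(spare)                                     # 762
    ASSUMED_CLOCKS_PER_EXTRA_SAMPLE = 200            # placeholder, still TBD
    print(spare // ASSUMED_CLOCKS_PER_EXTRA_SAMPLE)  # 3 extra samples would fit
```

So under these assumptions the worst case still leaves a few hundred clocks spare per line.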

Hi @Wuerfel_21 I'm having some problems with your resampling code which I took from your video-nextgen branch on GitHub.

If I try to get it to lock the output at 48000Hz by setting up the audio_period with that rate in mind computed as (clkfreq+24000)/48000, I get zero for the integer and fractional portions of resample_ratio and no samples are emitted because the resample_phase_int is stuck at 0 and never increases.

If I manually patch the resample_ratio_int value to a "1" by default at boot I do get samples emitted but it's arriving at 24000Hz (half rate). If I set it to 2, I then get 1/3 the samples per second. Maybe I should set it to some other value... ideally it should be computed but it's not touched when the sample period is found to be one of the known 32/44.1/48kHz rates.

I also tried a different, non-standard sample rate but it doesn't seem to output samples at the correct rate either. I got about 38kHz or something like that IIRC (approx half of what I'd nominated, but I can't recall the exact number). Will need to retest that specifically again tonight and update this. I might have used a rate that is higher than the 48kHz rate, not lower.

So is this branch's audio code currently working or broken, to your knowledge? I hope I haven't missed something important, but I believe I now have the same algorithm coded as yours. It is virtually copied verbatim but with a couple of minor custom changes: your patch skipping now has a variable controlling it, as it executes from LUTRAM as an overlay and I can't patch it as easily. Here are the relevant snippets of interest...