@TonyB_ said:

Quoting old messages seems to only quote the last replier's response. Nested quotes can be done manually, by quoting the earlier message, replacing > with >> and merging the two quotes, e.g.

Hey Ada, I think I just found a way to save 2 instructions in the text rendering code. This means we could either do one cursor and keep the flash attribute OR two cursors (one mouse, one text) which would be great.

scanfunc_text
            rep     @.endscan,#2            'do twice
            rfword  color                   'get color + char data
            getbyte data, color, #0         'get character
            rczr    data                    'divide by 4
            rdlut   pixels, data            'read pixels in font
            movbyts pixels, color           'make pixels in LSB of long valid
testflash   bitl    color, #15 wcz          'test (and clear) flashing bit
flash if_c  and     pixels, #$ff            'make it all background if flashing
            decmod  curs_count, #511 wc     'test for cursor, wrap to large num
    if_c    mov     color, curs_color       'choose cursor color for all nibbles
    if_nc   movbyts color, #%01010101       'colours becomes BF_BF_BF_BF
            mov     data, color             'grab a copy for muxing step next
            rol     data, #4                'data becomes FB_FB_FB_FB
            setq    ##$F0FF000F             'mux mask adjusts fg and bg colours
            muxq    color, data             'color becomes FF_FB_BF_BB
            movbyts color, pixels           'select pixel colours for char
            xcont   X_IMM_8X4_LUT, color    'write colour indices into streamer as 8 nibbles
.endscan    ret     wcz                     'restore flags

Note: I used a ## constant here in my original code, which now needs to be stored in COGRAM, otherwise the timing values I counted up before are not valid. I was assuming space for that.
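For anyone following the colour muxing steps in the code above, here is a minimal Python model of just the MOVBYTS / ROL / MUXQ sequence. It is only a sketch: the helper names (`rol`, `movbyts`, `muxq`, `colour_table`) and the sample attribute word are made up for illustration, and it assumes the attribute byte (background nibble, foreground nibble) sits in byte 1 of the word with the flash bit already cleared.

```python
MASK32 = 0xFFFFFFFF

def rol(value, bits):
    """Rotate a 32-bit value left by the given number of bits."""
    bits %= 32
    return ((value << bits) | (value >> (32 - bits))) & MASK32

def movbyts(d, s):
    """Model of MOVBYTS: each 2-bit field of s[7:0] picks the source byte of d
    for the corresponding result byte."""
    out = 0
    for i in range(4):
        sel = (s >> (2 * i)) & 3
        out |= ((d >> (8 * sel)) & 0xFF) << (8 * i)
    return out

def muxq(d, s, q):
    """Model of SETQ + MUXQ: bits set in q are copied from s, the rest stay from d."""
    return (d & ~q & MASK32) | (s & q)

def colour_table(attr_word):
    """Build the FF_FB_BF_BB lookup long from a char+attribute word (hypothetical helper)."""
    color = movbyts(attr_word, 0b01010101)   # BF_BF_BF_BF
    data = rol(color, 4)                     # FB_FB_FB_FB
    return muxq(color, data, 0xF0FF000F)     # FF_FB_BF_BB

# Sample word: background = $1, foreground = $E, character = $41 (illustrative values only)
table = colour_table(0x1E41)
print(hex(table))                            # 0xeee11e11
print(hex(movbyts(table, 0b00000000)))       # all background: 0x11111111
print(hex(movbyts(table, 0b11111111)))       # all foreground: 0xeeeeeeee
```

The final `movbyts(table, pixels)` is what turns 8 font bits (four 2-bit pairs) into 8 colour nibbles for the streamer, which is why a single instruction can replace a per-pixel select.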

Did a little analysis of the P2 clock cycle use in my video driver during pixel doubling with thoughts of whether HDMI is still feasible while streaming out the prior scan line simultaneously, which is what my driver model does.

Here are the clocks taken by my pixel doubling loop for different colour depths at 640x480. I've focussed on the 24 bit RGB/32 bpp one which is the most resource hungry. Accounting for most of the doubling work it looks like there'd be 2752 cycles free in the active portion. There are a few other things the code might still need to do such as trigger PSRAM reading and mouse sprite stuff but it'd hopefully leave us 2200 cycles for HDMI encode+audio. I also tested the block transfers into the COG and it appears that reads & writes take approximately 25% more cycles than usual when simultaneously streaming longs into the FIFO using a 10:1 sysclk/pixelclock ratio, due to the competition for HUB window bandwidth. I measured ~648 clocks for 512 long transfers vs ~521 when not streaming and budgeted for this additional transfer overhead in the table.
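As a quick sanity check of the numbers above, the measured transfer times can be turned into an overhead percentage and a per-long cost. This is only a sketch of the arithmetic; the variable names are made up, and the figures are the approximate measurements quoted in the post.

```python
LONGS = 512
CLOCKS_IDLE = 521        # ~clocks for 512 longs with no streaming
CLOCKS_STREAMING = 648   # ~clocks for 512 longs while the FIFO is streaming

overhead = CLOCKS_STREAMING / CLOCKS_IDLE - 1
per_long_idle = CLOCKS_IDLE / LONGS
per_long_streaming = CLOCKS_STREAMING / LONGS

# Cycles set aside for PSRAM triggering, mouse sprite and similar work
FREE_AFTER_DOUBLING = 2752
LEFT_FOR_HDMI = 2200
reserved_for_other_work = FREE_AFTER_DOUBLING - LEFT_FOR_HDMI

print(f"overhead while streaming: {overhead:.1%}")
print(f"clocks per long: {per_long_idle:.3f} idle, {per_long_streaming:.3f} streaming")
print(f"cycles reserved for other work: {reserved_for_other_work}")
```

So the "approx 25%" figure is about 24.4% on these measurements, and the 2200 cycle estimate leaves roughly 550 cycles for the remaining housekeeping.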

Main drama is probably where this extra HDMI encoding code can fit. For modes that don't use the 256 entry colour palette I could gain 240 longs there (need to keep 16 free for the text palette and other simpler LUT modes). Whether those 240 longs would be enough for HDMI+audio resampling, not quite sure yet. I could potentially read in some of the HDMI code after doubling and swap it out after but that burns a lot of clocks too.

@rogloh said:

Just tried patching my code so it outputs half the active pixels at half the rate in DVI mode with a setq before the active pixels begin using the divide by 20 value and restored at the end of active portion back to divide by 10 so the other sync timing stuff still all works. Couldn't get an image on screen. Might still be something I'm doing wrong or maybe this repetition only works in immediate mode...? My code is using the streamer.

Update: just verified this same approach of the extra setq instructions with VGA instead of DVI in my driver does double the pixels on screen so it would appear my patched code is okay. It's just that DVI output doesn't like it. I wonder if the streamer's NCO value still somehow affects the DVI encoder block's timing and messes with it or we have to do some special initial priming for it to work.

Yeah, this was tested before. Try deploying your 10b capture code, I'd love to see what's actually going on.

@rogloh said:

Hey Ada, I think I just found a way to save 2 instructions in the text rendering code. This means we could either do one cursor and keep the flash attribute OR two cursors (one mouse, one text) which would be great.

Clever use of MOVBYTS!

Note: I used a ## constant here in my original code, which now needs to be stored in COGRAM, otherwise the timing values I counted up before are not valid. I was assuming space for that.

I was assuming that already. It's easier to write it out with ##...

@rogloh said:

Did a little analysis of the P2 clock cycle use in my video driver during pixel doubling with thoughts of whether HDMI is still feasible while streaming out the prior scan line simultaneously, which is what my driver model does.

Here are the clocks taken by my pixel doubling loop for different colour depths at 640x480. I've focussed on the 24 bit RGB/32 bpp one which is the most resource hungry. Accounting for most of the doubling work it looks like there'd be 2752 cycles free in the active portion. There are a few other things the code might still need to do such as trigger PSRAM reading and mouse sprite stuff but it'd hopefully leave us 2200 cycles for HDMI encode+audio. I also tested the block transfers into the COG and it appears that reads & writes take approximately 25% more cycles than usual when simultaneously streaming longs into the FIFO using a 10:1 sysclk/pixelclock ratio, due to the competition for HUB window bandwidth. I measured ~648 clocks for 512 long transfers vs ~521 when not streaming and budgeted for this additional transfer overhead in the table.

Main drama is probably where this extra HDMI encoding code can fit. For modes that don't use the 256 entry colour palette I could gain 240 longs there (need to keep 16 free for the text palette and other simpler LUT modes). Whether those 240 longs would be enough for HDMI+audio resampling, not quite sure yet. I could potentially read in some of the HDMI code after doubling and swap it out after but that burns a lot of clocks too.

Disclaimer: I am not 100% on my HDMI code being as optimized as it could be. You may be able to crunch it down some at the expense of cycles.

Current size seems to be ~128 longs for CRC+encode (96 code, 16 terc symbols) and another ~128 for audio buffer management, resampling and packet building. Take some scanfunc stuff, give some variables, so 256 longs. Maybe more like 200 if you remove the resampler or downgrade to linear interpolation (cubic really uses a lot of code space).

There's also the 32 long packet buffer, but that can be moved into hub (easier to stream it out from there, too).

But oh yeah, it's kind of a lot of code. I feel like doing a 1:1 upgrade of p2videodrv might not be in the cards, some features might need to be left on the table for a new driver.

@Wuerfel_21 said:

But oh yeah, it's kind of a lot of code. I feel like doing a 1:1 upgrade of p2videodrv might not be in the cards, some features might need to be left on the table for a new driver.

If I do choose to add HDMI I sort of plan to keep my existing output variant as is (possibly make use of the alternative NCO rate for pixel doubling at some point) and then have a mostly different codebase that gets read in at startup time and this would remove all remnants of PAL/NTSC sync stuff and be dedicated for HDMI output only. Even if rewritten it would try its best to keep the key features of my existing driver and understand the same data structures. Things like PSRAM, text mode, mouse, all colour modes would try to be prioritized.

If clocks are available I might be able to read in some of the HDMI code on the fly into upper LUT RAM. Last night I realized you actually only have to read the code in, you don't need to copy it back as these longs are only ever used for doubling so they get refreshed automatically by the process on the next line. So that's one bonus.

If needed I could make a couple of sacrifices that may help, though I'd try to avoid this as both of these are useful. Also doubling/pixel repetition is sort of needed anyway if you ever wanted to output interlaced SDTV over HDMI.

1) No doubling in 32bpp mode, maybe also in 16bpp mode too. Or even no doubling at all.

2) No 8bpp palette mode support with HDMI. Frees another 240 longs.

I won't like dropping either thing.

One other idea I had (will have to look into it) is to just do audio processing per scanline and save samples to a buffer. This will lag audio by one frame but let me do a lot of packet encoding work in the mostly wasted vertical blanking time. I recall when I was coding my HDMI TERC stuff way back that was my plan and I thought I'd even checked the budget for that, thinking it was doable. But that was years ago and I may have been wrong, or may have been doing part of the work on the active scan line as well. I can't remember fully.

UPDATE: looking at my old first attempt code and roughly counting instruction cycles it looks like it took in the vicinity of 2660 cycles just to TERC encode a packet - using crcbit instead of crcnib is obviously not nearly as efficient as your stuff, Ada, so that won't fly to do in the vertical interval - that burns 218 scan lines of the 525 sent if 525 packets are encoded. I think I'd probably planned to pack 4 stereo audio samples in each packet or something that would have cut down the number of packets being encoded/sent, but knowing what we do now about my TV/Amp pickiness about audio timing it probably wouldn't have worked out too well there anyway.
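The 218 scan line figure can be reproduced with a couple of lines of arithmetic. This sketch assumes the per-line budget used is the 640 active pixels at 10 sysclks per pixel (6400 cycles); that assumption is not stated in the post, but it is the value that gives 218.

```python
CYCLES_PER_PACKET = 2660          # rough count for the old crcbit-based encoder
PACKETS_PER_FRAME = 525           # one packet per scan line sent
CYCLES_PER_LINE = 640 * 10        # assumption: active pixels x sysclk/pixel ratio

total_cycles = CYCLES_PER_PACKET * PACKETS_PER_FRAME
lines_burned = total_cycles / CYCLES_PER_LINE

print(f"total encode cycles per frame: {total_cycles}")
print(f"equivalent scan lines: {lines_burned:.1f} of {PACKETS_PER_FRAME}")
```

That is over 40% of the frame spent on encoding alone, far more than the vertical blanking interval of a 525 line frame with 480 active lines can absorb.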

1) No doubling in 32bpp mode, maybe also in 16bpp mode too. Or even no doubling at all.

2) No 8bpp palette mode support with HDMI. Frees another 240 longs.

I won't like dropping either thing.

Oh those are both rather important I think.

The mouse cursor is also a rather nice feature, IMO, should defo keep that.

UPDATE: looking at my old first attempt code and roughly counting instruction cycles it looks like it took in the vicinity of 2660 cycles just to TERC encode a packet - using crcbit instead of crcnib is obviously not nearly as efficient as your stuff, Ada, so that won't fly to do in the vertical interval - that burns 218 scan lines of the 525 sent if 525 packets are encoded. I think I'd probably planned to pack 4 stereo audio samples in each packet or something that would have cut down the number of packets being encoded/sent, but knowing what we do now about my TV/Amp pickiness about audio timing it probably wouldn't have worked out too well there anyway.

mine is at 2006 for CRC+TERC - this includes 76 dummy scanfunc calls at 8 cycles a pop, so 1398 real cycles. The CRC is broken up to meet scanfunc timing, so that could be sped up a bit further.
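Spelling out that subtraction, and comparing it against the figures mentioned earlier in the thread (the ~2660 cycle old encoder and the hoped-for ~2200 cycle budget), as a rough sketch:

```python
TOTAL_WITH_SCANFUNC = 2006   # CRC+TERC including dummy scanfunc calls
SCANFUNC_CALLS = 76
CYCLES_PER_CALL = 8

real_cycles = TOTAL_WITH_SCANFUNC - SCANFUNC_CALLS * CYCLES_PER_CALL

OLD_ENCODER_CYCLES = 2660    # rogloh's rough count for his first attempt
HDMI_BUDGET = 2200           # cycles hoped to be left for HDMI encode+audio

print(f"real CRC+TERC cycles: {real_cycles}")
print(f"speed-up vs old encoder: {OLD_ENCODER_CYCLES / real_cycles:.2f}x")
print(f"left in budget for audio etc.: {HDMI_BUDGET - real_cycles}")
```

Note the remaining ~800 cycles would still have to cover audio buffering, resampling and packet building, so this only shows the CRC+TERC part fits, not the whole HDMI workload.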

Super Off-Topic: 16bpp 320x240 I think will be the nice mode for polygon rendering - can fit one (or squeezing it, two) buffer in Hub RAM comfortably, is a real color space that can do arbitrary gradients, pixel count in range for simple scenes at 60 FPS or complex scenes at 30 FPS (using fillrate estimates from that texture map experiment code I still haven't published).

Those being (at peak - narrow spans have large-ish overhead - note that my test just fills rectangles, no triangle raster processing)

~26cy/px for RGB gouraud

~26cy/px for raw texture

~37cy/px for texture + mono gouraud (lighting or fog)

~88cy/px for bilinear filtered texture + mono gouraud (lighting or fog)

(all this for 8bpp index textures with no clamp/repeat, approximate perspective correction and dithering to 16bpp)

(So if using texture+lighting on 2 render cogs at 320 MHz targeting 60 FPS, a depth complexity of ~3.4 is possible)
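A rough way to see where a depth complexity number like this comes from is to divide the cycles available per frame by the pixel count and the per-pixel cost. The sketch below uses only the peak fill rate, so it gives an upper bound (about 3.75); the ~3.4 quoted above presumably allows for span setup or other overhead that is not modelled here.

```python
RENDER_COGS = 2
SYSCLK_HZ = 320_000_000
TARGET_FPS = 60
WIDTH, HEIGHT = 320, 240
CYCLES_PER_PIXEL = 37          # peak rate for texture + mono gouraud

cycles_per_frame = RENDER_COGS * SYSCLK_HZ / TARGET_FPS
cycles_per_screen_pixel = cycles_per_frame / (WIDTH * HEIGHT)
depth_complexity_bound = cycles_per_screen_pixel / CYCLES_PER_PIXEL

print(f"cycles per screen pixel per frame: {cycles_per_screen_pixel:.1f}")
print(f"upper bound on depth complexity: {depth_complexity_bound:.2f}")
```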

Ultra Off-Topic: My KDE plasma shell and VSCodium crashed while trying to write this post. I don't even...

1) No doubling in 32bpp mode, maybe also in 16bpp mode too. Or even no doubling at all.

2) No 8bpp palette mode support with HDMI. Frees another 240 longs.

I won't like dropping either thing.

Oh those are both rather important I think.

Yeah I know, that's the problem. 640x480 8bpp LUT mode fits very nicely in HUB for those without PSRAM, and pixel doubling is good for game emulator/sprite driver COG stuff - although if these COGs did the repetition work into 640 pixel linebuffers, that'd still work. I can still do 40 column text in my code.

The mouse cursor is also a rather nice feature, IMO, should defo keep that.

Yep. Mouse sprite for GUI is slick.

mine is at 2006 for CRC+TERC - this includes 76 dummy scanfunc calls at 8 cycles a pop, so 1398 real cycles. The CRC is broken up to meet scanfunc timing, so that could be sped up a bit further.

Yeah was looking at your code a moment ago. Scanfunc stuff goes away. It's probably something that fits ok in my budget. Instruction space is the biggest issue IMO but I might be able to read in 240 longs dynamically and that'd take about 300 or so clock cycles.
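The "300 or so" estimate lines up with the block transfer measurements quoted earlier in the thread (~648 clocks for 512 longs while streaming, ~521 when not). A small sketch of that scaling, assuming the cost is roughly linear in the number of longs:

```python
LONGS_MEASURED = 512
CLOCKS_STREAMING = 648     # measured while the FIFO is streaming at 10:1
CLOCKS_IDLE = 521          # measured with no streaming

OVERLAY_LONGS = 240        # code to be read in dynamically

overlay_clocks_streaming = OVERLAY_LONGS * CLOCKS_STREAMING / LONGS_MEASURED
overlay_clocks_idle = OVERLAY_LONGS * CLOCKS_IDLE / LONGS_MEASURED

print(f"240 longs while streaming: ~{overlay_clocks_streaming:.0f} clocks")
print(f"240 longs when idle:       ~{overlay_clocks_idle:.0f} clocks")
```

Any fixed setup cost per transfer would add a little on top, so this is a lower-end estimate.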

One other thing I realized I could do if LUT space is at a premium is to separate the steps of the processing like you did, with CRC stuff first then TERC4 encode. The code for both steps could be read into the LUT at different times before being executed, then you don't need the full instruction space for all HDMI work to fit inside the COG simultaneously. That sort of more fine grained overlay approach should help allow us to keep the 256 entry palette mode with any luck. So the sacrifice might then be that this HDMI driver variant is crashable if its HUBRAM is corrupted. That's not ideal but it's still a reasonable tradeoff to make IMO as most other system code already works that way anyway. Even things like the mouse sprite code could work that way too, and free more COG RAM.

@rogloh said:

Just tried patching my code so it outputs half the active pixels at half the rate in DVI mode with a setq before the active pixels begin using the divide by 20 value and restored at the end of active portion back to divide by 10 so the other sync timing stuff still all works. Couldn't get an image on screen. Might still be something I'm doing wrong or maybe this repetition only works in immediate mode...? My code is using the streamer.

Is testing still ongoing or is hardware DVI/HDMI pixel repetition using the streamer definitely not possible?

@rogloh said:

Just tried patching my code so it outputs half the active pixels at half the rate in DVI mode with a setq before the active pixels begin using the divide by 20 value and restored at the end of active portion back to divide by 10 so the other sync timing stuff still all works. Couldn't get an image on screen. Might still be something I'm doing wrong or maybe this repetition only works in immediate mode...? My code is using the streamer.

Is testing still ongoing or is hardware DVI/HDMI pixel repetition using the streamer definitely not possible?

Well my first attempt failed. If you'd like to try it go ahead. It'd be great if this was somehow possible, although if it means underflowing the streamer's command buffer for each pixel sent in immediate mode that could be a real problem. Ideally it could work with the FIFO and data sourced from HUB RAM. Once I get my setup with the HDMI capture software all ready again I'll probably try it another time to at least see what is being output. Alternatively this can be investigated with a logic analyzer and a slowly clocked P2 outputting DVI encoded pixels.

I don't think there's a difference between command types at that layer. The TMDS encoder appears to just sit in between the 32 pin outputs from the streamer and the actual OUTA bus.

My only thought was if the streamer was too slow to fill the pipeline initially due to 1/2 NCO rate it could get into some sort of messed up state where all pixels after that were badly encoded and messed up the monitor tracking pixel boundaries or something like that. Perhaps the first pixel is special and needs to be primed first in the buffer then we switch the NCO rate to half speed. But it was only a guess and has no Verilog analysis or real evidence base to it. Theory only.

@rogloh said:

Just tried patching my code so it outputs half the active pixels at half the rate in DVI mode with a setq before the active pixels begin using the divide by 20 value and restored at the end of active portion back to divide by 10 so the other sync timing stuff still all works. Couldn't get an image on screen. Might still be something I'm doing wrong or maybe this repetition only works in immediate mode...? My code is using the streamer.

Is testing still ongoing or is hardware DVI/HDMI pixel repetition using the streamer definitely not possible?

Well my first attempt failed. If you'd like to try it go ahead. It'd be great if this was somehow possible, although if it means underflowing the streamer's command buffer for each pixel sent in immediate mode that could be a real problem. Ideally it could work with the FIFO and data sourced from HUB RAM. Once I get my setup with the HDMI capture software all ready again I'll probably try it another time to at least see what is being output. Alternatively this can be investigated with a logic analyzer and a slowly clocked P2 outputting DVI encoded pixels.

No rush, just asking as I don't have the Eval DVI board.

@Wuerfel_21 said:

I don't think there's a difference between command types at that layer. The TMDS encoder appears to just sit in between the 32 pin outputs from the streamer and the actual OUTA bus.

Different bpp settings might either work or not equally, but perhaps the one most likely to work is RFLONG -> RGB24, i.e. 1 long per pixel.

@rogloh said:

My only thought was if the streamer was too slow to fill the pipeline initially due to 1/2 NCO rate it could get into some sort of messed up state where all pixels after that were badly encoded and messed up the monitor tracking pixel boundaries or something like that. Perhaps the first pixel is special and needs to be primed first in the buffer then we switch the NCO rate to half speed. But it was only a guess and has no Verilog analysis or real evidence base to it. Theory only.

FIFO filling shouldn't be a problem as that happens at one long per clock. 1/2 NCO rate means it takes twice as long before FIFO is refilled due to half speed reading from the FIFO.

@TonyB_ said:

Different bpp settings might either work or not equally, but perhaps the one most likely to work is RFLONG -> RGB24, i.e. 1 long per pixel.

Ok, I might have been trying with text (which is 4bpp)

I wonder if there is some weird pipeline related problem where the streamer rate changes at a different time than the serializer reload. Maybe cut the blanking intervals in half and run everything at 1/20? I think that would prevent HDMI audio though.

@SaucySoliton said:

I wonder if there is some weird pipeline related problem where the streamer rate changes at a different time than the serializer reload. Maybe cut the blanking intervals in half and run everything at 1/20? I think that would prevent HDMI audio though.

Good idea, run streamer at sysclk/20 all the time to test pixel repetition and worry about HDMI audio later.

@Wuerfel_21 said:

@rogloh scanfunc text proof-of-concept

(Haven't actually checked if the audio is still working...)

Well done! I knew you'd do this. A nice little challenge, wasn't it?

Without freeing two more instructions by retiming everything, the second (mouse) text cursor could be made a choice to have at setup time or even dynamically. So whenever you disable the text flashing option on the screen you gain the second cursor instead. That could work out nicely actually. It just means that any text based UI's that need a mouse cursor would have no flashing text. Can't recall if the old Borland/Turbo C style IDEs used flashing text much - I don't think they did as they might have used brighter background colours which disabled flashing.

EDIT: if you do a second mouse cursor I think it needs to be the second one applied to fully cover the solid block over the text and any text cursor so some co-ordinate or code reordering may be required, if this is dynamic.

So with flashing enabled:

do flashing text

do cursor 1 = text

With flashing disabled:

do cursor 2 = text

do cursor 1 = mouse

@Rayman said:

@Wuerfel_21 What are you up to here?

Doubling horizontal pixels so can show QVGA at VGA resolution?

She has basically taken my text rendering code and integrated it into her HDMI driver. This capture above is 80 column text at VGA 640x480 resolution being output over HDMI (and in theory audio will work with this too). I think the font is double high if she used my p2font16 or could be something shrunk from the P1 font. 15 rows x 32 pix font = 480 lines or the capture interface was expecting interlaced material and showing one field stretched. But that's unimportant for now.

Hey I just managed to use my capture code from a couple of weeks back to capture my video driver outputting RGB24 with NCO at sysclk/20 rate in active part of the scan line. Interestingly my TM decoder program barfed on the clock. Looks like the clock is not continuing to be output at the 5 high/5 low levels after this switchover. I think the individual bits are being doubled or something...strange transition from 10 to 16 to 20 bits for the clock (I will have to examine this further by getting my program to output more details than just skipping over the bad data).

@SaucySoliton said:

I wonder if there is some weird pipeline related problem where the streamer rate changes at a different time than the serializer reload. Maybe cut the blanking intervals in half and run everything at 1/20? I think that would prevent HDMI audio though.

Yes, this is the sort of issue I was wondering about too - you put it more succinctly.

301 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
302 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
303 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
304 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
305 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
306 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
307 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
308 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
309 * Invalid bit count of 16 ignored
310 * Invalid bit count of 20 ignored
311 * Invalid bit count of 20 ignored
312 * Invalid bit count of 20 ignored
313 * Invalid bit count of 20 ignored
314 * Invalid bit count of 20 ignored
315 * Invalid bit count of 20 ignored
316 * Invalid bit count of 20 ignored

At the end of the active portion back to the normal NCO rate it also does this... and goes via 14 clock bits back to 10.

626 * Invalid bit count of 20 ignored
627 * Invalid bit count of 20 ignored
628 * Invalid bit count of 20 ignored
629 * Invalid bit count of 14 ignored
630 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
631 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
632 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
633 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
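To illustrate why a decoder that frames symbols on the clock channel would report a bit count of 20, here is a small Python sketch. It is not the capture/decoder program used above; `clock_periods` is a hypothetical helper, and the "every bit doubled" case is just the guess from the post modelled literally.

```python
def clock_periods(bits):
    """Return the spacing, in bit times, between successive rising edges."""
    rising = [i for i in range(1, len(bits))
              if bits[i - 1] == "0" and bits[i] == "1"]
    return [b - a for a, b in zip(rising, rising[1:])]

CLOCK_WORD = "0000011111"     # clock channel word seen in the blanking lines above

# Normal case: the 5-low/5-high clock word repeats every 10 bit times
normal = CLOCK_WORD * 4

# Guess from the post: each serialized bit is held for two bit times
stretched = "".join(bit * 2 for bit in CLOCK_WORD) * 4

print(clock_periods(normal))     # [10, 10, 10]
print(clock_periods(stretched))  # [20, 20, 20]
```

The intermediate counts of 16 and 14 seen at the switchover points are not reproduced by this simple model; they suggest the rate change lands partway through a serializer word.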

@Rayman said:

@Wuerfel_21 What are you up to here?

Doubling horizontal pixels so can show QVGA at VGA resolution?

She has basically taken my text rendering code and integrated it into her HDMI driver. This capture above is 80 column text at VGA 640x480 resolution being output over HDMI (and in theory audio will work with this too). I think the font is double high if she used my p2font16 or could be something shrunk from the P1 font. 15 rows x 32 pix font = 480 lines or the capture interface was expecting interlaced material and showing one field stretched. But that's unimportant for now.

Yes, font is rendered at double height. (and it is p2font16, just because that's already in interleaved format). Just because that was easier to hook up. It is re-doing it every line, it's just that the line counter is set up for 2x scaling. I did verify audio on my ASUS monitor, but that's the least picky out of my available sinks. Try it on your Pioneer to be sure.

This is what it should look like between the transitions (at normal NCO rate the whole time). I'm sending a test pattern that increments the blue channel by 4 each pixel.

@rogloh said:

Ok doesn't look good - here's the TMDS data when it switches NCO rates. Look at the clock bits

Oh wow, something's really broken in there. 11/9 duty clock, how come? I guess it sources it from the NCO counter somewhere? In that verilog snippet the serializer had its own counter, why not use that?

So for a very theoretical P2 rev D, this should really be fixed in some way (+ add hardware TERC4 modulation - possibly as %xxxxxxxx_xxxxxxxx_RRRRGGGG_BBBB010x ?)

The NCO switching is very simple with setq... here's my hacked up code with xxx and yyy being the two NCO rates I hacked in.

    if_z    call    #borderregion       'and go back to border generation
            setq    xxx
            xcont   m_rf, palselect     'generate the visible line
            sub     regionsize, #1 wz   'decrement the region's size count
    if_z    call    #newregion          'when 0, reload another region
            call    videomode           'create the next video scan line
            setq    yyy
            xcont   m_bs, hsync0        'generate horizontal FP blanking
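The actual xxx and yyy values are not given in the post. As a sketch, assuming the usual streamer NCO convention where a frequency value of $8000_0000 corresponds to one transfer per sysclk, and rounding up as in the common `$0CCCCCCC+1` idiom for sysclk/10, the two rates could be computed like this (`nco_value` is a made-up helper name):

```python
NCO_FULL_RATE = 0x8000_0000   # assumption: this value means one transfer per sysclk

def nco_value(divider):
    """NCO frequency value for sysclk/divider, rounded up (hypothetical helper)."""
    return -(-NCO_FULL_RATE // divider)

normal_rate = nco_value(10)   # sysclk/10, normal DVI pixel rate
half_rate = nco_value(20)     # sysclk/20, each pixel held for two pixel periods

print(f"sysclk/10: ${normal_rate:08X}")
print(f"sysclk/20: ${half_rate:08X}")
```

Which of xxx and yyy holds which rate depends on when the setq takes effect relative to the queued xcont, so that mapping is left open here.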

@rogloh said:

Ok doesn't look good - here's the TMDS data when it switches NCO rates. Look at the clock bits

Oh wow, something's really broken in there. 11/9 duty clock, how come? I guess it sources it from the NCO counter somewhere? In that verilog snippet the serializer had its own counter, why not use that?

So for a very theoretical P2 rev D, this should really be fixed in some way (+ add hardware TERC4 modulation - possibly as %xxxxxxxx_xxxxxxxx_RRRRGGGG_BBBB010x ?)

Yeah TERC4 encode would be nice, plus HW pixel replication for DVI. Once you open up another rev the requests will start flying though.

I think that Verilog might be from Rev A, the channel command formats didn't quite line up as I expected, or I was misreading it.

Comments

Can be adjusted here - https://forums.parallax.com/profile/quotes

@Wuerfel_21 ,

Hey Ada, I think I just found a way to save 2 instructions in the text rendering code. This means we could either do one cursor and keep the flash attribute OR two cursors (one mouse, one text) which would be great.

scanfunc_text rep @.endscan,#2 'do twice rfword color 'get color + char data getbyte data, color, #0 'get character rczr data 'divide by 4 rdlut pixels, data 'read pixels in font movbyts pixels, color 'make pixels in LSB of long valid testflash bitl color, #15 wcz 'test (and clear) flashing bit flash if_c and pixels, #$ff 'make it all background if flashing decmod curs_count, #511 wc 'test for cursor, wrap to large num if_c mov color, curs_color 'choose cursor color for all nibbles if_nc movbyts color, #%01010101 'colours becomes BF_BF_BF_BF mov data, color 'grab a copy for muxing step next rol data, #4 'data becomes FB_FB_FB_FB setq ##$F0FF000F 'mux mask adjusts fg and bg colours muxq color, data 'color becomes FF_FB_BF_BB movbyts color, pixels 'select pixel colours for char xcont X_IMM_8X4_LUT, color 'write colour indices into streamer as 8 nibbles .endscan ret wcz 'restore flagsNote: I used a ## constant here in my original code, which now needs to be stored in COGRAM, otherwise the timing values I counted up before are not valid. I was assuming space for that.

Did a little analysis of the P2 clock cycle use in my video driver during pixel doubling with thoughts of whether HDMI is still feasible while streaming out the prior scan line simultaneously, which is what my driver model does.

Here are the clocks taken by my pixel doubling loop for different colour depths at 640x480. I've focussed on the 24 bit RGB/32 bpp one which is the most resource hungry. Accounting for most of the doubling work it looks like there'd be 2752 cycles free in the active portion. There are a few other things the code might still need to do such as trigger PSRAM reading and mouse sprite stuff but it'd hopefully leave us 2200 cycles for HDMI encode+audio. I also tested the block transfers into the COG and it appears that for reads & writes it takes about approx 25% more cycles than usual when simultaneously streaming longs into the FIFO using a 10:1 sysclk/pixelclock ratio, due to the competition for HUB window bandwidth. I measured ~648 clocks for 512 long transfers vs ~521 when not streaming and budgeted for this additional transfer overhead in the table.

Main drama is probably where this extra HDMI encoding code can fit. For modes that don't use the 256 entry colour palette I could gain 240 longs there (need to keep 16 free for the text palette and other simpler LUT modes). Whether those 240 longs would be enough for HDMI+audio resampling, not quite sure yet. I could potentially read in some of the HDMI code after doubling and swap it out after but that burns a lot of clocks too.

Yeah, this was tested before. Try deploying your 10b capture code, I'd love to see what's actually going on.

Clever use of MOVBYTS!

I was assuming that already. It's easier to write it out with ##...

Disclaimer: I am not 100% on my HDMI code being as optimized as it could be. You may be able to crunch it down some at the expense of cycles.

Current size seems to be ~128 longs for CRC+encode (96 code, 16 terc symbols) and another ~128 for audio buffer management, resampling and packet building. Take some scanfunc stuff, give some variables, so 256 longs. Maybe more like 200 if you remove the resampler or downgrade to linear interpolation (cubic really uses a lot of code space).

There's also the 32 long packet buffer, but that can be moved into hub (easier to stream it out from there, too).

But oh yeah, it's kind of a lot of code. I feel like doing a 1:1 upgrade of p2videodrv might not be in the cards, some features might need to be left on the table for a new driver.

If I do choose to add HDMI I sort of plan to keep my existing output variant as is (possibly make use of the alternative NCO rate for pixel doubling at some point) and then have a mostly different codebase that gets read in at startup time and this would remove all remnants of PAL/NTSC sync stuff and be dedicated for HDMI output only. Even if rewritten it would try its best to keep the key features of my existing driver and understand the same data structures. Things like PSRAM, text mode, mouse, all colour modes would try to be prioritized.

If clocks are available I might be able to read in some of the HDMI code on the fly into upper LUT RAM. Last night I realized you actually only have to read the code in, you don't need to copy it back as these longs are only ever used for doubling so they get refreshed automatically by the process on the next line. So that's one bonus.

If needed I could make a couple of sacrifices that may help, though I'd try to avoid this as both of these are useful. Also doubling/pixel repetition is sort of needed anyway if you ever wanted to output interlaced SDTV over HDMI.

1) No doubling in 32bpp mode, maybe also in 16bpp mode too. Or even no doubling at all.

2) No 8bpp palette mode support with HDMI. Frees another 240 longs.

I won't like dropping either thing.

One other idea I had (will have to look into it) is to just do audio processing per scanline and save samples to a buffer. This will lag audio by one frame but let me do a lot of packet encoding work in the mostly wasted vertical blanking time. I recall when I was coding my HDMI TERC stuff way back that was my plan and I thought I'd even checked the budget for that, thinking it was doable. But that was years ago and I may have been wrong, or may have been doing part of the work on the active scan line as well. I can't remember fully.

UPDATE: looking at my old first attempt code and roughly counting instruction cycles it looks like it took in the vicinity of 2660 cycles just to TERC encode a packet - using crcbit instead of crcnib is obviously not nearly as efficient as your stuff Ada , so that won't fly to do in the vertical interval - that burns 218 scan lines of the 525 sent if 525 packets are encoded. I think I'd probably planned to pack 4 stereo audio samples in each packet or something that would have cut down the number of packets being encoded/sent, but knowing what we do now about my TV/Amp pickiness about audio timing it probably wouldn't have worked out too well there anyway.

Oh those are both rather important I think.

The mouse cursor is also a rather nice feature, IMO, should defo keep that.

Mine is at 2006 for CRC+TERC. This includes 76 dummy scanfunc calls at 8 cycles a pop, so 1398 real cycles. The CRC is broken up to meet scanfunc timing, so that could be sped up a bit further.
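For reference, the "real cycles" number is just the total minus the dummy scanfunc overhead. A minimal sketch of that subtraction, with illustrative names:

```python
# Strip the dummy scanfunc overhead from the measured CRC+TERC total.
TOTAL_CYCLES = 2006          # measured CRC+TERC cost, scanfunc calls included
DUMMY_SCANFUNC_CALLS = 76    # placeholder calls made while encoding
CYCLES_PER_DUMMY_CALL = 8    # cost of each dummy call


def real_cycles(total, calls, cycles_per_call):
    """Return the cycles left once the dummy call overhead is removed."""
    return total - calls * cycles_per_call


encode_cycles = real_cycles(TOTAL_CYCLES, DUMMY_SCANFUNC_CALLS, CYCLES_PER_DUMMY_CALL)
print(encode_cycles)
```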

Super Off-Topic: 16bpp 320x240 I think will be the nice mode for polygon rendering - can fit one (or squeezing it, two) buffer in Hub RAM comfortably, is a real color space that can do arbitrary gradients, pixel count in range for simple scenes at 60 FPS or complex scenes at 30 FPS (using fillrate estimates from that texture map experiment code I still haven't published).

Those being (at peak - narrow spans have large-ish overhead - note that my test just fills rectangles, no triangle raster processing)

(all this for 8bpp index textures with no clamp/repeat, approximate perspective correction and dithering to 16bpp)

(So if using texture+lighting on 2 render cogs at 320 MHz targeting 60 FPS, a depth complexity of ~3.4 is possible)

Ultra Off-Topic: My KDE plasma shell and VSCodium crashed while trying to write this post. I don't even...

Yeah I know, that's the problem. 640x480 8bpp LUT mode fits very nicely in HUB for those without PSRAM, and pixel doubling is good for game emulator/sprite driver COG stuff - although if these COGs did the repetition work into 640 pixel linebuffers, that'd still work. I can still do 40 column text in my code.

Yep. Mouse sprite for GUI is slick.

Yeah was looking at your code a moment ago. Scanfunc stuff goes away. It's probably something that fits ok in my budget. Instruction space is the biggest issue IMO but I might be able to read in 240 longs dynamically and that'd take about 300 or so clock cycles.
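The "about 300 or so" estimate lines up with the block transfer timings measured earlier in the thread (~648 clocks per 512 longs while streaming, ~521 when not). A rough sketch, assuming the cost scales linearly with the number of longs and ignoring any fixed setup overhead:

```python
# Estimate the cost of reading a 240-long code overlay into the COG/LUT.
OVERLAY_LONGS = 240
CLOCKS_PER_512_STREAMING = 648   # measured while the streamer competes for hub
CLOCKS_PER_512_IDLE = 521        # measured with no streaming


def load_cycles(longs, clocks_per_512_longs):
    """Scale a measured 512-long transfer time to a different block size."""
    # Assumption: purely linear scaling, no fixed per-transfer overhead.
    return longs * clocks_per_512_longs / 512


streaming = load_cycles(OVERLAY_LONGS, CLOCKS_PER_512_STREAMING)
idle = load_cycles(OVERLAY_LONGS, CLOCKS_PER_512_IDLE)
print(round(streaming), round(idle))
```

So each overlay load would cost roughly 300 clocks while the streamer is active, which has to be paid every time a code segment is swapped in.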

One other thing I realized I could do if LUT space is at a premium is to separate the steps of the processing like you did, with CRC stuff first then TERC4 encode. The code for both steps could be read into the LUT at different times before being executed, then you don't need the full instruction space for all HDMI work to fit inside the COG simultaneously. That sort of more fine grained overlay approach should help allow us to keep the 256 entry palette mode with any luck. So the sacrifice might then be that this HDMI driver variant is crashable if its HUBRAM is corrupted. That's not ideal but it's still a reasonable tradeoff to make IMO as most other system code already works that way anyway. Even things like the mouse sprite code could work that way too, and free more COG RAM.

Is testing still ongoing or is hardware DVI/HDMI pixel repetition using the streamer definitely not possible?

Well, my first attempt failed. If you'd like to try it, go ahead. It'd be great if this was somehow possible, although if it means underflowing the streamer's command buffer for each pixel sent in immediate mode that could be a real problem. Ideally it could work with the FIFO and data sourced from HUB RAM. Once I get my setup with the HDMI capture software all ready again I'll probably try it another time to at least see what is being output. Alternatively this can be investigated with a logic analyzer and a slowly clocked P2 outputting DVI encoded pixels.

I don't think there's a difference between command types at that layer. The TMDS encoder appears to just sit in between the 32 pin outputs from the streamer and the actual OUTA bus.

My only thought was if the streamer was too slow to fill the pipeline initially due to 1/2 NCO rate it could get into some sort of messed up state where all pixels after that were badly encoded and messed up the monitor tracking pixel boundaries or something like that. Perhaps the first pixel is special and needs to be primed first in the buffer then we switch the NCO rate to half speed. But it was only a guess and has no Verilog analysis or real evidence base to it. Theory only.

No rush, just asking as I don't have the Eval DVI board.

Different bpp settings might either work or not equally, but perhaps the one most likely to work is RFLONG -> RGB24, i.e. 1 long per pixel.

FIFO filling shouldn't be a problem as that happens at one long per clock. 1/2 NCO rate means it takes twice as long before FIFO is refilled due to half speed reading from the FIFO.

Ok, I might have been trying with text (which is 4bpp)

I wonder if there is some weird pipeline-related problem where the streamer rate changes at a different time than the serializer reload. Maybe cut the blanking intervals in half and run everything at 1/20? I think that would prevent HDMI audio though.

@rogloh scanfunc text proof-of-concept

(Haven't actually checked if the audio is still working...)

Good idea, run streamer at sysclk/20 all the time to test pixel repetition and worry about HDMI audio later.

Well done! I knew you'd do this. A nice little challenge, wasn't it?

Without freeing two more instructions by retiming everything, the second (mouse) text cursor could be made a choice at setup time or even dynamically. So whenever you disable the text flashing option on the screen you gain the second cursor instead. That could work out nicely actually. It just means that any text-based UIs that need a mouse cursor would have no flashing text. Can't recall if the old Borland/Turbo C style IDEs used flashing text much. I don't think they did, as they might have used brighter background colours, which disabled flashing.

EDIT: if you do a second mouse cursor I think it needs to be the second one applied to fully cover the solid block over the text and any text cursor so some co-ordinate or code reordering may be required, if this is dynamic.

So with flashing enabled:

do flashing text

do cursor 1 = text

With flashing disabled:

do cursor 2 = text

do cursor 1 = mouse

@Wuerfel_21 What are you up to here?

Doubling horizontal pixels so can show QVGA at VGA resolution?

She has basically taken my text rendering code and integrated it into her HDMI driver. The capture above is 80 column text at VGA 640x480 resolution being output over HDMI (and in theory audio will work with this too). I think the font is double height if she used my p2font16, or it could be something shrunk from the P1 font. 15 rows x 32 pix font = 480 lines, or the capture interface was expecting interlaced material and showing one field stretched. But that's unimportant for now.

Hey, I just managed to use my capture code from a couple of weeks back to capture my video driver outputting RGB24 with the NCO at sysclk/20 rate in the active part of the scan line. Interestingly my TMDS decoder program barfed on the clock. It looks like the clock does not continue to be output at the 5 high/5 low levels after this switchover. I think the individual bits are being doubled or something... there's a strange transition from 10 to 16 to 20 bits for the clock (I will have to examine this further by getting my program to output more details than just skipping over the bad data).

Yes, this is the sort of issue I was wondering about too - you put it more succinctly.

    301 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    302 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    303 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    304 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    305 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    306 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    307 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    308 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    309 * Invalid bit count of 16 ignored
    310 * Invalid bit count of 20 ignored
    311 * Invalid bit count of 20 ignored
    312 * Invalid bit count of 20 ignored
    313 * Invalid bit count of 20 ignored
    314 * Invalid bit count of 20 ignored
    315 * Invalid bit count of 20 ignored
    316 * Invalid bit count of 20 ignored

At the end of the active portion back to the normal NCO rate it also does this... and goes via 14 clock bits back to 10.

    626 * Invalid bit count of 20 ignored
    627 * Invalid bit count of 20 ignored
    628 * Invalid bit count of 20 ignored
    629 * Invalid bit count of 14 ignored
    630 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    631 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    632 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    633 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02

Ok doesn't look good - here's the TMDS data when it switches NCO rates. Look at the clock bits

    Decoding TMDS using channel bits:
    Sample Interval Type Red(ch2) Green(ch1) Blue(ch0) Clock RRGGBB TMDS/CTS 128*Fs FsClk FifoSize Action
    ...
    302 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    303 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    304 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    305 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    306 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    307 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    308 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    309 Data:-> RGB (00_00_00) 0000000100000000 0000000100000000 0000000100000000 0000000000011111 000000
    310 Data:-> RGB (11_11_41) 00000011111111110000 00000011111111110000 00000001111111000000 00000000000111111111 111141
    311 Data:-> RGB (EF_EF_95) 00000001000000001111 00000001000000001111 00000001111110001100 00000000000111111111 efef95
    312 Data:-> RGB (11_11_C9) 00000011111111110000 00000011111111110000 00000011111110111000 00000000000111111111 1111c9
    313 Data:-> RGB (EF_EF_1C) 00000001000000001111 00000001000000001111 00000001111100001011 00000000000111111111 efef1c
    314 Data:-> RGB (11_11_BE) 00000011111111110000 00000011111111110000 00000001000011000000 00000000000111111111 1111be
    315 Data:-> RGB (EF_EF_6A) 00000001000000001111 00000001000000001111 00000001000010001100 00000000000111111111 efef6a
    316 Data:-> RGB (11_11_D9) 00000011111111110000 00000011111111110000 00000001111101001000 00000000000111111111 1111d9
    317 Data:-> RGB (EF_EF_1C) 00000001000000001111 00000001000000001111 00000011000111110100 00000000000111111111 efef1c
    318 Data:-> RGB (FE_FE_51) 00000001000000000000 00000001000000000000 00000001000111001111 00000000000111111111 fefe51
    319 Data:-> RGB (11_11_94) 00000011111111110000 00000011111111110000 00000001000110001100 00000000000111111111 111194

Here's it switching the NCO back

    146 Data:-> RGB (EF_AB_BE) 00000001000000001111 00000011000000110011 00000010111110010101 00000000000111111111 efabbe
    147 Data:-> RGB (11_44_6A) 00000011111111110000 00000001111111000011 00000010111111011001 00000000000111111111 11446a
    148 Data:-> RGB (EF_BA_D9) 00000001000000001111 00000011000000111100 00000010000000011101 00000000000111111111 efbad9
    149 Data:-> RGB (C0_C5_2C) 11010101000000 11010101000011 10101010110001 00000111111111 c0c52c
    150 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    151 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    152 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    153 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02

Yes, font is rendered at double height. (and it is p2font16, just because that's already in interleaved format). Just because that was easier to hook up. It is re-doing it every line, it's just that the line counter is set up for 2x scaling. I did verify audio on my ASUS monitor, but that's the least picky out of my available sinks. Try it on your Pioneer to be sure.

This is what it should look like between the transitions (at normal NCO rate the whole time). I'm sending a test pattern that increments the blue channel by 4 each pixel.

    534 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    535 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    536 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    537 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    538 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    539 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    540 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    541 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    542 Data:-> RGB (00_00_00) 0100000000 0100000000 0100000000 0000011111 000000
    543 Data:-> RGB (00_00_04) 1111111111 1111111111 0111111100 0000011111 000004
    544 Data:-> RGB (00_00_08) 0100000000 0100000000 0111111000 0000011111 000008
    545 Data:-> RGB (00_00_0C) 1111111111 1111111111 1111111011 0000011111 00000c
    546 Data:-> RGB (00_00_10) 0100000000 0100000000 0111110000 0000011111 000010
    547 Data:-> RGB (00_00_14) 1111111111 1111111111 0100001100 0000011111 000014
    548 Data:-> RGB (00_00_18) 0100000000 0100000000 0100001000 0000011111 000018

and

    1176 Data:-> RGB (00_09_E8) 0100000000 0100000111 1000001101 0000011111 0009e8
    1177 Data:-> RGB (00_09_EC) 1111111111 1111111000 1011110001 0000011111 0009ec
    1178 Data:-> RGB (00_09_F0) 0100000000 0100000111 1000000101 0000011111 0009f0
    1179 Data:-> RGB (00_09_F4) 1111111111 0100000111 1011111001 0000011111 0009f4
    1180 Data:-> RGB (00_09_F8) 0100000000 1111111000 1011111101 0000011111 0009f8
    1181 Data:-> RGB (00_09_FC) 0100000000 0100000111 1000000001 0000011111 0009fc
    1182 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    1183 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    1184 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    1185 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    1186 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    1187 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02
    1188 Ctrl:-> Blanking VH=3 +V +H 1101010100 1101010100 1010101011 0000011111 fdfd02

Oh wow, something's really broken in there. 11/9 duty clock, how come? I guess it sources it from the NCO counter somewhere? In that verilog snippet the serializer had its own counter, why not use that?
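The odd clock shapes can be read straight off the captured clock words by counting low and high bits. A small sketch: the bit strings are copied from the captures in this thread, while the labels and the helper name are made up for illustration.

```python
# Count low/high bits in the captured TMDS clock words.
def clock_shape(word):
    """Return (total bits, low bits, high bits) for one captured clock word."""
    return len(word), word.count("0"), word.count("1")


# Clock column values copied from the decoder output.
captured = {
    "normal NCO rate": "0000011111",
    "first pixel after slowing": "0000000000011111",
    "steady half rate": "00000000000111111111",
    "last pixel before speeding up": "00000111111111",
}

for label, word in captured.items():
    total, low, high = clock_shape(word)
    print(f"{label}: {total} bits, {low} low / {high} high")
```

A clean pixel-doubled clock would presumably show 10 low / 10 high at half rate. The capture shows 11/9 instead, with the one-bit offset appearing at the 16-bit transition and disappearing again at the 14-bit one.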

So for a very theoretical P2 rev D, this should really be fixed in some way (+ add hardware TERC4 modulation - possibly as %xxxxxxxx_xxxxxxxx_RRRRGGGG_BBBB010x ?)

The NCO switching is very simple with setq... here's my hacked up code with xxx and yyy being the two NCO rates I hacked in.

    if_z    call    #borderregion           'and go back to border generation
            setq    xxx
            xcont   m_rf, palselect         'generate the visible line
            sub     regionsize, #1 wz       'decrement the region's size count
    if_z    call    #newregion              'when 0, reload another region
            call    videomode               'create the next video scan line
            setq    yyy
            xcont   m_bs, hsync0            'generate horizontal FP blanking

Yeah TERC4 encode would be nice, plus HW pixel replication for DVI. Once you open up another rev the requests will start flying though.

I think that Verilog might be from Rev A, the channel command formats didn't quite line up as I expected, or I was misreading it.

Oh, you think there was significant change there from A to B?

Yeah the whole streamer was rewritten IIRC.

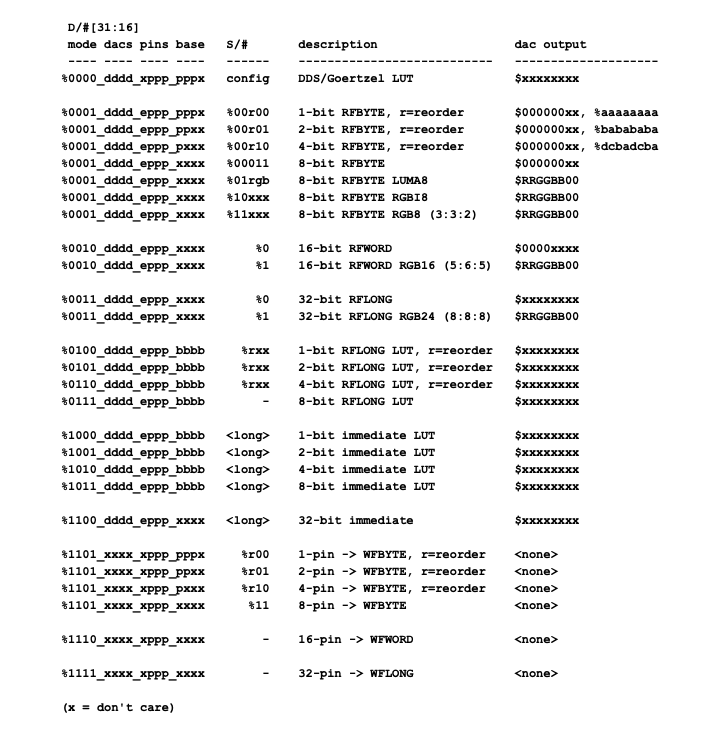

From the old rev A doc: