CLKSET(NewCLKMODE, NewCLKFREQ) syntax question

CLKSET(NewCLKMODE, NewCLKFREQ)

Safely establish new clock settings, updates CLKMODE and CLKFREQ

CLKMODE - The current clock mode, located at LONG[$40]. Initialized with the 'clkmode_' value.

CLKFREQ - The current clock frequency, located at LONG[$44]. Initialized with the 'clkfreq_' value.

For Spin2 methods, these variables can be read and written as 'clkmode' and 'clkfreq'. Rather than write these variables directly, it's much safer to use: CLKSET(new_clkmode, new_clkfreq)

This way, all other code sees a quick, parallel update to both 'clkmode' and 'clkfreq', and the clock mode transition is done safely, employing the prior values, in order to avoid a potential clock glitch.
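As far as I can tell from the P2 silicon documentation, the "safe" transition described above comes down to three HUBSET writes. First, switch to RCFAST using the prior mode bits. Second, program the new oscillator/PLL bits while still on RCFAST and wait for them to settle. Third, select the new source. Here's a minimal C sketch of that ordering. The function name is hypothetical, and it only computes the mode words. It doesn't write anything to hardware.

```c
#include <stddef.h>
#include <stdint.h>

#define CLK_SS_MASK 0x3u /* SS field, bits 1:0; %00 selects RCFAST */

/* Hypothetical helper: writes the mode words a safe switch would pass to
 * HUBSET, in order, into out[] and returns how many there are.
 * It only computes values; it does not touch any hardware. */
size_t clkset_sequence(uint32_t old_mode, uint32_t new_mode, uint32_t out[3])
{
    /* 1. Drop to RCFAST, keeping the prior oscillator/PLL bits intact. */
    out[0] = old_mode & ~CLK_SS_MASK;
    /* 2. Configure the new oscillator/PLL while still running on RCFAST. */
    out[1] = new_mode & ~CLK_SS_MASK;
    /* Real code waits here (on the order of 10 ms) for the crystal/PLL
     * to settle before the final switch. */
    /* 3. Select the new clock source. */
    out[2] = new_mode;
    return 3;
}
```

For example, going from 0x0100070B to 0x010000F7 yields 0x01000708, 0x010000F4, then 0x010000F7.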

Where do you find the CLKMODE/CLKFREQ settings? From the above (CLKSET), I think the compiler will actually generate the code to switch over. Normally I would assume the fastest rate is best, but there may be some use in switching to a slower speed (RCFAST/RCSLOW). I just want to include this in my notes for completeness.

Regards and Thanks

Bob (WRD)

Comments

clkmode and clkfreq are global hub variables, so they can be set or read at will. But as the description says, it's best to set them using clkset().

The compile-time features for setting the initial clock mode (_clkfreq, _xtlfreq, _xinfreq, _errfreq, _rcfast, _rcslow) are constants you define in the main source file. They are effectively build parameters, and may be all you want if you have no desire to change the clock frequency while the program runs.

I've used run-time setting, with clkset(), to record multiple runs of behaviour for analysis. When using the comport for the logging, the baud timing has to be recomputed for each new sysclock frequency, since the hardware pacing of bits is ratioed to sysclock.
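The baud recompute mentioned above can be sketched like this. It assumes the async-serial smart-pin X-register layout from the P2 silicon documentation: whole clocks per bit in X[31:16], 1/64ths of a clock in X[15:10] (only used for bit periods under 1024 clocks), and word length minus one in X[4:0]. The function name is hypothetical.

```c
#include <stdint.h>

/* Hypothetical helper: X value for an async-serial smart pin (WXPIN).
 * Must be recomputed whenever sysclock changes.
 * Assumes sysclk_hz / baud < 65536 so the 16.16 result fits in 32 bits. */
uint32_t async_x_value(uint32_t sysclk_hz, uint32_t baud, uint32_t bits)
{
    /* Clocks per bit as 16.16 fixed point. */
    uint64_t period = ((uint64_t)sysclk_hz << 16) / baud;
    /* Keep the 16.6 part (X[31:10]) and put word length - 1 in X[4:0]. */
    return ((uint32_t)period & 0xFFFFFC00u) | ((bits - 1u) & 0x1Fu);
}
```

At 200 MHz and 115200 baud this gives 1736 whole clocks per bit. After each clkset(), the value would have to be recomputed and rewritten to the pin.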

The debug system doesn't handle this at run time, though, so it can't be used together with clkset(). The two features are currently incompatible with each other.

The impression I get from the documents is that if you run CLKSET(newCLKMODE, newCLKFREQ), the compiler will generate the group of instructions using HUBSET(value) to make a smooth transition (which would be great). I am also under the impression that this should be done with only the boot cog (cog 0) running and essentially no activity (no events or interrupts). It would make sense that the debug comm settings would need changing if you wanted to use debug with a different system clock frequency.

What is missing is a list of the possible newCLKMODE values with the corresponding newCLKFREQ. I have looked in the hardware document and the Spin document (I might have missed the info, but I scanned them twice). You would think there is a set table of compiler-recognised values (newCLKMODE/newCLKFREQ). Possibly the compiler could also take the comm speed changes for DEBUG into account in the future, since it appears the compiler is responsible for all the changes from CLKSET(). For now, I am essentially looking for the acceptable values for CLKSET(newCLKMODE, newCLKFREQ). PS: The XTAL1 frequency would be a parameter in the table, notably the standard 20 MHz.

Regards and Thanks

Bob (WRD)
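On the question of acceptable CLKSET() values: the mode word is a set of bit fields, so rather than a fixed table there is a range for each field. Not every combination gives a PLL frequency the chip can actually run at. The sketch below decodes a mode word into the resulting sysclock. It assumes the %0000_000E_DDDD_DDMM_MMMM_MMMM_PPPP_CCSS layout from the P2 silicon documentation, and the function names are hypothetical.

```c
#include <stdint.h>

/* Post divider from the PPPP field: %0000 = /2, %0001 = /4, ...,
 * %1110 = /30, %1111 = /1. */
static uint32_t post_divider(uint32_t p)
{
    return (p == 15u) ? 1u : 2u * (p + 1u);
}

/* Hypothetical helper: sysclock in Hz implied by a clock mode word,
 * given the XI/crystal frequency. RCFAST/RCSLOW are nominal values only. */
uint32_t clkmode_to_hz(uint32_t mode, uint32_t xin_hz)
{
    uint32_t ss = mode & 3u;                    /* clock source select */
    uint32_t e  = (mode >> 24) & 1u;            /* PLL enable */
    uint32_t d  = ((mode >> 18) & 0x3Fu) + 1u;  /* XI divider, 1..64 */
    uint32_t m  = ((mode >> 8) & 0x3FFu) + 1u;  /* VCO multiplier, 1..1024 */
    uint32_t p  = (mode >> 4) & 0xFu;           /* post divider code */

    switch (ss) {
    case 0:  return 20000000u;   /* RCFAST, nominally 20 MHz */
    case 1:  return 20000u;      /* RCSLOW, nominally 20 kHz */
    case 2:  return xin_hz;      /* XI straight through, PLL bypassed */
    default:                     /* %11: PLL output */
        if (!e)
            return 0;            /* this sketch's choice: PLL selected but disabled -> 0 */
        return (uint32_t)((uint64_t)xin_hz * m / d / post_divider(p));
    }
}
```

As a check, evanh's debug output later in the thread (clkmode = $100_070B, assuming a 20 MHz crystal) decodes to 80 MHz, which matches its reported clkfreq.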

Yep. That part is sorted.

All cogs are equal.

The HUBSET instruction is a hub op, so it affects the whole Prop2. The clock tree circuits are a single global structure. Any cog calling clkset() changes the whole chip to a new frequency.

Yeah, but it's locked in the existing debug routines and can't be overridden by your program. That area of hubRAM is protected when using debug features. Chip would have to make changes to PNut/Propeller Tool to allow use of clkset() with debug.

There's nothing from Parallax so far. Chip posted his compile-time routine used to calculate the mode word, but that's all there is from him.

I've done my own coding of this as I needed it. I've posted it a number of ways and times, going back to revA silicon...

Here's an actual topic I started in the latter days of my assembly-only coding - https://forums.parallax.com/discussion/170414/dynamic-system-clock-setting-and-comport-baud-setting/p1

Chip's calculation of the clkmode_ constant symbol at compile time - https://forums.parallax.com/discussion/comment/1486815/#Comment_1486815

Run-time versions by Roger - https://forums.parallax.com/discussion/comment/1506393/#Comment_1506393

and myself - https://forums.parallax.com/discussion/comment/1529415/#Comment_1529415

PS: You'll note I've used send() instead of debug() in my test code, so that the comport baud can be set on each test loop.

Thanks Evanh. I can see that this is getting out of my range of understanding, and that considerable work has been done. I tried to run the PASM program, but the first line "qmul xtalmul, ##(XTALFREQ / XDIV / XDIVP)" came up with the error "Expected Unique constant name or #", and qmul was highlighted as the culprit. Best I move on from the CLKSET() instruction for the time being (pun intended). I think most of the time _clkfreq would not really be changed.

Regards

Bob (WRD)

If you're compiling the code from the early assembly-only link, then it's probably too old. Err, it's only a routine, not a full program. Try the code at the last link instead; it has a complete test program included.

Still no description of CLKSET() parameters for P2 anywhere?

Will I have more luck using HUBSET ##%0000_000E_DDDD_DDMM_MMMM_MMMM_PPPP_CCSS in PASM?

(It's my next test tomorrow. It doesn't seem to work in Spin2 even though the compiler accepts it: just like CLKSET(), both compile without error, but the Object Info window reports false data for Clock Mode, Clock Freq and XIN Freq.)

The new Propeller 2 Assembly Language (PASM2) Manual - 20221101 doc by Jeff Martin is SOOOO great, but there's no mention of HUBSET in the detailed part. I hope the info from the older docs will work.

I want to use my 200MHz clock oscillator with no PLL.
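For the HUBSET route, the mode word can be packed from its fields like this. It's a minimal sketch assuming the %0000_000E_DDDD_DDMM_MMMM_MMMM_PPPP_CCSS layout quoted above. The helper name is hypothetical, and it masks rather than range-checks its inputs.

```c
#include <stdint.h>

/* Hypothetical helper: packs a P2 clock mode word.
 * div and mul are the real ratios (1..64 and 1..1024); the word stores
 * them minus one. p is the raw PPPP code, cc and ss the raw 2-bit fields. */
uint32_t hubset_clkmode(uint32_t e, uint32_t div, uint32_t mul,
                        uint32_t p, uint32_t cc, uint32_t ss)
{
    return ((e & 1u) << 24)              /* PLL enable */
         | (((div - 1u) & 0x3Fu) << 18)  /* XI divider */
         | (((mul - 1u) & 0x3FFu) << 8)  /* VCO multiplier */
         | ((p & 0xFu) << 4)             /* post divider code */
         | ((cc & 3u) << 2)              /* XI/XO pin config */
         | (ss & 3u);                    /* clock source select */
}
```

With e=1, div=1, mul=1, P=%1111, CC=%01, SS=%11 this gives 0x010000F7 (XI through the PLL at x1). A PLL-bypassed XI source with no pin loading is just CC=%01, SS=%10, i.e. 0x6. Note that writing a new source directly with a single HUBSET skips the RCFAST/settle steps that CLKSET() performs.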

If you use PNut.exe and press Ctrl-L to get a list file, it will show you the clkmode value and the derived frequency.

You can set up whatever _clkfreq value you want and it will compute the clkmode value, which can later be used in a CLKSET instruction.
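The idea of computing a mode word from a target frequency can be sketched as a brute-force search over the divider, multiplier and post divider. This is NOT Chip's algorithm. The function name is hypothetical, and CC is fixed at %01 (XI input). The VCO ceiling is a caller-supplied assumption, because the real tools apply their own VCO/PFD limits, which aren't quoted here.

```c
#include <stdint.h>

/* Hypothetical brute-force search: returns the first mode word (CC = %01,
 * SS = %11 PLL) whose output is closest to target_hz, with the VCO kept at
 * or below vco_max_hz. Returns 0 if nothing fits. */
uint32_t find_pll_mode(uint32_t xin_hz, uint32_t target_hz, uint32_t vco_max_hz)
{
    uint32_t best_mode = 0;
    uint64_t best_err = UINT64_MAX;

    for (uint32_t d = 1; d <= 64; d++) {
        for (uint32_t p = 0; p <= 15; p++) {
            uint32_t post = (p == 15) ? 1 : 2 * (p + 1);
            for (uint32_t m = 1; m <= 1024; m++) {
                uint64_t vco = (uint64_t)xin_hz * m / d;
                if (vco > vco_max_hz)
                    break;                 /* larger m only raises the VCO */
                uint64_t f = vco / post;
                uint64_t err = (f > target_hz) ? f - target_hz : target_hz - f;
                if (err < best_err) {      /* strict: keep the first best */
                    best_err = err;
                    best_mode = (1u << 24) | ((d - 1) << 18) | ((m - 1) << 8)
                              | (p << 4) | (1u << 2) | 3u;
                }
            }
        }
    }
    return best_mode;
}
```

For a 200 MHz XI source and a 320 MHz target, with an assumed 400 MHz VCO ceiling, it settles on divide-by-5, multiply-by-8, post /1.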

The good news is that all you need to worry about are the least significant four bits: %CC and %SS. I'd go with a mode value of %01_10 for no pin loading and XI as the selected source. So that's:

```
clkset( %01_10, 200_000_000 )
```

I haven't tested it, but you may alternatively be able to use the compiler built-ins to get the same effect:

```
CON
    _xinfreq = 200_000_000
    _clkfreq = 200_000_000
    ...
```

Thanks so much for the very quick answers!

Great! I just found PNut using Bing instead of Google (I'm dropping Google right now); I'll try that.

Update: CLKMODE = $010000F7 (%0001_0000_0000_0000_0000_1111_0111 ) in the listing, even though I entered clkset(%01_10, 200_000_000). Info window shows Clock Mode: XINPUT+PLL, Clock Freq: 200MHz, XI Input Freq: 200MHz.

I need the PLL off to take advantage of my very low jitter oscillator for very high speed serial (50-100Mbaud).

The clkset() and constants still show erroneous info in the Object Info window:

clkset(): Clock Mode: RCFAST, Clock Freq: ~12MHz, XIN Freq:

CONs with or without clkset(): Clock Mode: XTAL2 + PLL16X, Clock Freq: 200,000,000 Hz, XIN Freq: 12,500,000 Hz

Only _clkfreq with or without clkset(): Clock Mode: XTAL3 + PLL1X, Clock Freq: 200,000,000 Hz, XIN Freq: 200,000,000 Hz

Is it possible that the only problem is with Object Info and that my board is actually running ok (needing more debugging)?

I still haven't connected my logic analyzer to my microscopic PCB (without any test points, damn asshole I am).

PNut provides the correct info.

And since I generally compile with flexspin from the shell, I often reuse code that emits a startup status to the terminal on each run. This includes, as a minimum, the system clock mode and frequency, e.g.:

```c
#include <stdio.h>

void main(void)
{
    _waitms(200);
    printf("\n clkfreq = %d clkmode = 0x%x\n", _clockfreq(), _clockmode());
    ...
}
```

And a rough equivalent for Spin2:

```
PUB do_test() | div, divisor
    waitms( 200 )
    debug( 10," Defaults: ",sdec(clkfreq)," ",uhex(clkmode),13,10 )
    ...
```

```
Cog0 INIT $0000_0000 $0000_0000 load
Cog0 INIT $0000_0404 $0000_0000 load
Cog0 Defaults: clkfreq = 80_000_000 clkmode = $100_070B
```

I soldered some test points onto my micro-board and managed to output 3.13ns (?) wide pulses (there must be a scope error, I need a 10GHz one), running @ 320MHz simply with...

```
_xinfreq = 200_000_000
_clkfreq = 320_000_000
```
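For reference, one sysclock period at 320 MHz works out as follows. A 3.13 ns pulse is therefore consistent with a pulse exactly one clock wide, assuming that's what the test code emits:

$$T_{\text{sys}} = \frac{1}{f_{\text{sys}}} = \frac{1}{320 \times 10^{6}\ \text{Hz}} = 3.125\ \text{ns}$$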

Time for a cold beer!

I love Motorola's over-spec chips; in the 80s I did a board with a 10MHz MC68000 surrounded by 74F logic chips, and it only crashed at 17MHz, so I ran it for years @ 12MHz very safely.

I used to like P1 a lot (wrote my own multi-CPU OS and com protocol on it), now I love the P2 after my first experience on it. Thanks Chip for this gem!

Even though it goes through the PLL, I just can't measure the jitter since it's too low for my instruments, and as a nice bonus I can change the clock frequency.

Because of you, I now need a new scope and logic analyzer.

In practice you can run stably at up to 340 MHz. My NeoGeo emulator uses 338 MHz and can run for days without crashing, despite tight IO timings. 350+ MHz also works, but beyond that stability drops off.

Be aware that even 320 MHz can be too fast if the die gets hot, either through excessive data wrangling, using all cores, or high ambient temperature. The first thing to bail is the hubRAM interface. It'll corrupt both reads and writes to/from hubRAM.

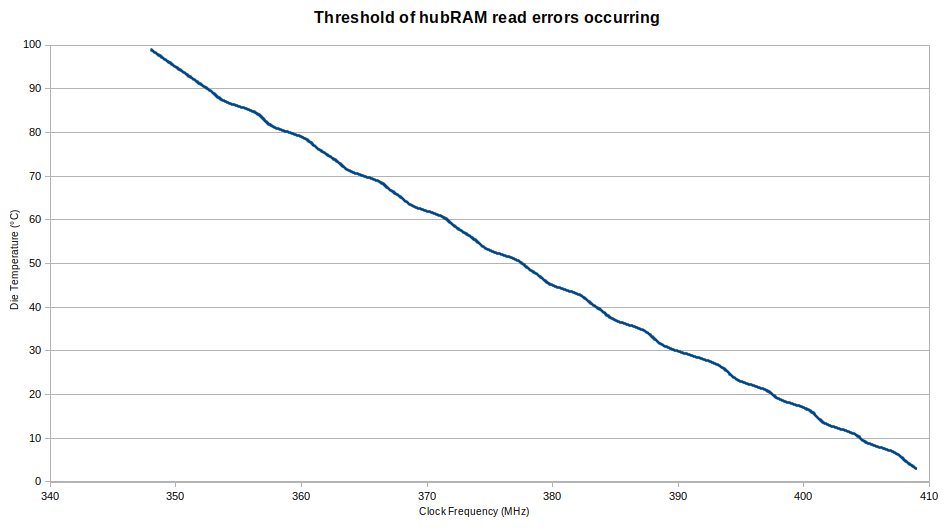

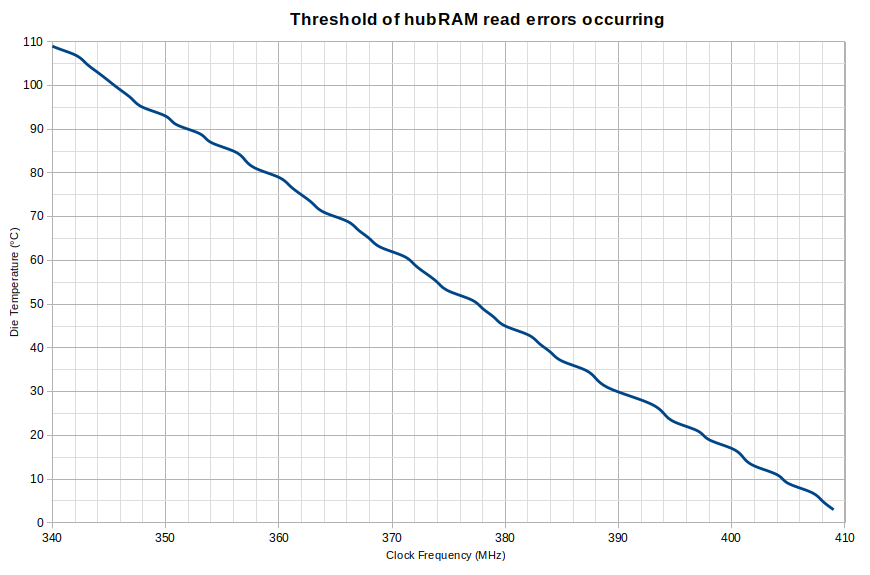

I have made a set of temperature vs frequency points in the past but never turned it into a graph ... mainly because it's hard to equate a reliable die temperature with ambient without also understanding the wattage gradient of a particular application. Experience through trial and error seems good enough.

PS: Using some ice packs I've had 410 MHz cogs running tests at -10 °C.

EDIT: Uh-oh, something else happens around 100 °C. My verify routine stops working correctly above that. It thinks all is good. Maybe the burst copy stops writing altogether and the last good data block sticks. Either that or the ALU is breaking.

It has to be a data copy problem, I'd say. Or maybe a RDLUT limitation. It's not actually crashing: the print to terminal carries on fine right up to the PLL self-limit, and the executable is contained completely within cogRAM.

I removed both the burst copy and the lutRAM usage, and now use the FIFO instead. Behaviour is as expected again. I decided not to go much higher and toast that poor Eval Board yet again.

Huh, found a flaw in my old high-power tester program. I was blindly COGINIT'ing all cogs at very high overclocking not realising that quite often those individual cogs had a reasonable probability of crashing due to hubRAM read errors. Time to double check things ...

@evanh

Have you run these tests on a KISS board? How about on a RetroBlade2? @Cluso99 has pointed out that one of the limiting factors for stability at a given frequency can be lack of bypass capacitance close to the chip.

That's a different kettle of fish. If the caps were a problem when doing this temperature testing then I'd be adding more capacitors until the testing worked as above.

How would you know if your stability at a given frequency is limited by temperature, or by stability of power at that frequency?

Another thing that might be useful, but would take a lot of work is testing different voltage inputs from 1.7 to 1.9V, and maybe even IO voltage might make a difference if you lowered the VIO to the lower threshold which would make it produce less heat.

A) I used the Eval Board for testing. It has substantial decoupling on the power rails.

B) I explicitly coded the program for minimal peak power draw. I could have gone a step further to reduce overall heating but didn't think of it at the time. The demands on the power supply were minor, especially compared to other testing where I went for maximum power draw to see how hungry the Prop2 could be. Big contrast in wattage!

C) Temperature response follows a consistent linear ratio of 1.6 °C per MHz... and when it didn't, I investigated and eliminated the cause.

One of the "other" tests - https://forums.parallax.com/discussion/comment/1528469/#Comment_1528469

Thanks a lot for the graph, it's very useful.

Just to update on my initial problem: I just realized the latest online Hardware Manual and the 2022/11/01 Datasheet show two different maximum external clock oscillator frequencies: Hardware Manual = 180MHz, Datasheet = 200MHz. I believe it's my first (and last) time ever using maximum specs, especially on a product still under development. I wanted the 200MHz low-jitter oscillator to have fewer problems with very high-speed serial comms, and to avoid the PLL and its high jitter specs. I will change my oscillator to a 20MHz one and go with the PLL, as I was forced to do anyway to make it work with the 200MHz osc.

Btw, do any of you know if the P2 ROM code has the capability to send a copy of the NVRAM back to the PC over the serial port? And if so, is there a way to prevent this?

The P2 has no NVRAM. Are you referring to an external flash chip? Nothing's preventing anyone from loading his own program into RAM that reads the flash and sends the data back to the PC, or indeed from just desoldering the chip and reading it.

Yes, I was referring to the flash chip. All I need is to prevent the firmware (on the flash chip connected to P58-P61) from being read by the PC connected to it. If I can't, I will have to add complex code protection. That would be bad, as the whole project is already finished and starting its beta phase. The only possible connection is via the USB connector.

I just want to be sure there is no code in the P2 ROM that enables it to read the whole (or part of the) firmware and send it back to the IDE for verification or debugging of some sort. I'm a bit paranoid.

Several more or less stupid ideas to make copying/reading firmware harder:

Cut off P62 and P63. Make a protocol to communicate with a non-standard, custom made programming contraption on other pins so you can still update the software. Put the real binary, scrambled, somewhere in the flash, but not at the start of it, while at the start you can put a descrambling loader. Make debugging harder when descrambling: use a second cog that measures time, so when someone tries to debug the main program, there will be a timeout and then the "guard" cog will clear the flash.
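The "scrambled binary plus descrambling loader" idea can be sketched with a simple XOR keystream. This is a hypothetical example using a 32-bit xorshift generator, not a recommendation of a specific scheme. It's obfuscation, not encryption: anyone who dumps the loader also gets the key and the algorithm.

```c
#include <stddef.h>
#include <stdint.h>

/* Marsaglia xorshift32 step (shifts 13, 17, 5); state must be nonzero. */
static uint32_t xorshift32(uint32_t *state)
{
    uint32_t x = *state;
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    *state = x;
    return x;
}

/* Hypothetical scrambler: XORs each byte with the low byte of the
 * keystream. Running it twice with the same seed restores the data,
 * so the same routine serves as the descrambling loader. */
void scramble(uint8_t *buf, size_t len, uint32_t seed)
{
    uint32_t state = seed ? seed : 1u;   /* xorshift must not start at 0 */
    for (size_t i = 0; i < len; i++)
        buf[i] ^= (uint8_t)xorshift32(&state);
}
```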