My Advanced Realistic Humanoid Robots Project

artbyrobot

Posts: 89

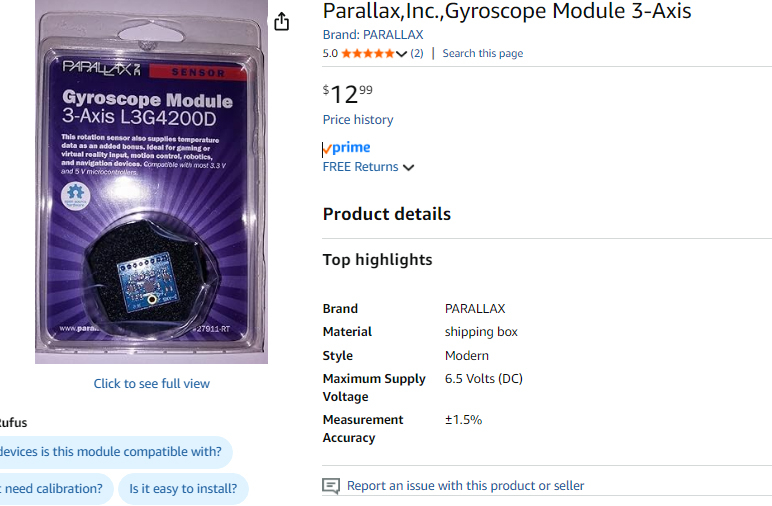

I am planning to use the Parallax Inc. 3-axis gyroscope for the balance system.

So I did manage to add a pair of braided solder wick wires as an added layer over the nickel strips of the highside mosfet setup, and I insulated that with red electrical tape folded over it. I also insulated almost everything else in sight. I lost the original control circuit, so I made the replacement in flat flex PCB style, which should be more robust. I also added a yellow 30ga wire for the 20V input line to the gate pin of the main mosfet. I also got my fiberglass window screen mesh ready to insulate the solder wick wires that act as heatsinks. So this setup is getting close to install-ready, but I want to test it again to make sure it's still working after all the major changes and messing with it so much.

On another note, I noticed that stacking braided solder wick on the 0.1mm x 4mm x 100mm nickel strip to reduce resistance made the lines a bit thicker than I'd like, especially after adding tape. To resolve this, I decided to go with hand-cut 0.2mm x 6mm x 100mm strips of pure copper plate. I wasn't aware of this option before, but I found 0.2mm-thick copper in a roll on Amazon that I can use for this. With this thicker size and copper's much lower resistance, I should be able to run 30A through a full 100mm strip with only about 1.3W of waste heat (under 1W on shorter runs), which is great. This will still give me a much thinner result than what I used on this first one. It also lowers resistance by getting rid of nickel strip entirely for the high-amp paths (aside from the shunt resistor nickel strip, which I still plan to keep).
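
Here is a quick I²R check of the strip numbers above, as a sketch. It assumes textbook room-temperature resistivities (about 1.68e-8 ohm·m for copper and about 6.99e-8 ohm·m for nickel). It ignores solder joints, contact resistance, and the strip warming up. The helper names are just for illustration.

```python
# Rough I^2*R estimate for flat conductor strips at room temperature.
# Resistivity values are textbook figures (assumed); real strip and
# solder joints will add some resistance on top of this.

RHO_COPPER = 1.68e-8  # ohm*m, pure copper at ~20 C
RHO_NICKEL = 6.99e-8  # ohm*m, pure nickel at ~20 C


def strip_resistance(rho, length_mm, width_mm, thickness_mm):
    """Resistance in ohms of a rectangular strip: R = rho * L / A."""
    area_m2 = (width_mm * 1e-3) * (thickness_mm * 1e-3)
    return rho * (length_mm * 1e-3) / area_m2


def waste_heat(current_a, resistance_ohm):
    """Power dissipated in the strip, P = I^2 * R, in watts."""
    return current_a ** 2 * resistance_ohm


if __name__ == "__main__":
    cases = {
        "nickel 0.1 x 4 x 100 mm": strip_resistance(RHO_NICKEL, 100, 4, 0.1),
        "copper 0.2 x 6 x 100 mm": strip_resistance(RHO_COPPER, 100, 6, 0.2),
        "copper 0.4 x 6 x 100 mm (double stacked)": strip_resistance(RHO_COPPER, 100, 6, 0.4),
    }
    for name, r in cases.items():
        print(f"{name}: {r * 1000:.2f} mOhm, {waste_heat(30, r):.2f} W at 30 A")
```

Under these assumptions, a single 0.2mm copper layer over a full 100mm works out to about 1.4 mOhm, or roughly 1.26W at 30A. Staying under 1W therefore needs either a run shorter than about 80mm or the double-stacked version (about 0.63W). The bare nickel strip by comparison is around 15.7W at 30A, which is why it needed the solder wick on top.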

For continuity: this project began in an earlier thread here (2024 build log): https://forums.parallax.com/discussion/176010/my-advanced-realistic-humanoid-robots-project. This new thread resumes visible updates going forward.

Comments

I was randomly talking to chatgpt about how I've been feeling burdened by having to make my own BLDC motor controllers for my robot lately. It mentioned integrated half-bridge power modules as something I could use to cut down on the labor of making these motor controllers. This immediately stood out to me as something I'd never heard of and something intriguing. So far I have been working on my lowside switch and highside switch, which together form a half bridge. Many solder connections and a lot of discrete components are involved. An integrated half bridge on a single chip would be a huge reduction in both size and component count. That means two big power mosfets plus all the drive circuitry for them condensed into one package. So I researched whether any can do 8V at 30A for my 2430 BLDC motor's needs. Turns out there are some out there. At first I was looking at the Texas Instruments CSD95377Q4M half-bridge (30A), which can do 30A continuous, so perfect for me. However, I didn't want to lock myself into a single-vendor chip that may one day be discontinued. I prefer something ubiquitous, with many competitors making it, that can be purchased from aliexpress. Something commodity level. This way I future-proof it. I don't have to worry about a manufacturer discontinuing parts I'm using and prices soaring because of that, or the part simply becoming unavailable. After a bit more digging I found the CSD95481RWJ QFN chipset on aliexpress, sold by several vendors, one of them at under $1 each. So it is equivalent to two power mosfets plus all the drive circuitry for each one, all for under $1. This one also has a 60A continuous rating. It is only 5mm x 6mm in size, which to me is insane. It's so much smaller than the setup I've been working on, yet just as powerful. 
They are usually used in tiny buck converters directly on video card PCBs, in servers, in automotive PCBs, and much more. In any case, using 3 of these half-bridge chips you can drive a BLDC motor. The consolidation of so many parts into such a tiny package is truly blowing my mind. So I ordered 60 of these chips, enough to drive 20 BLDC motors. I am leaning toward using these for all my motor controllers if working with them turns out to be easier than working with discrete components like I have been. I think they are cheaper to work with too, though I'd have to run the numbers on that. They even have built-in temperature sensing we can read, which is a bonus. Their built-in current sensing will not work for BLDC motors, so I'll still need my external shunt resistor current sensing circuit, but that's ok. All in all, these appear to be a game changer. Fewer parts means fewer potential points of failure, and a smaller board footprint means I can miniaturize my electronics even more, which is very good for us. I still need to work out how I want to hook these up in terms of PCB making and any external discrete components needed to support them. They are also top cooled, which is interesting. I'm envisioning using silicone thermal adhesive to glue on a copper pad that has my braided solder wick wires already soldered to it. These will carry the heat away to my water-cooled pipe system.

I'm kind of amazed that nobody really seems to use these for BLDC motor controllers. They seem perfect for it. Maybe I'll start a trend. Assuming I don't find out the hard way why they are never used for this application!

note: the full product title: "(5pcs)100% original New CSD95481RWJ 95481RWJ CSD59950RWJ 59950RWJ QFN Chipset"

Note: my previous BLDC motor controller design needed 6 digital IO pins to drive a single motor controller's 6 power mosfets by way of their control circuitry. But a BLDC motor controller design using 3 CSD95481RWJ half-bridge chips needs only 3 digital IO pins on the microcontroller. The CSD95481RWJ has a tri-state PWM input: the signal you send it from your microcontroller can be high, low, or floating. Digital output high and low are the usual output modes. You get the floating state in code by configuring the pin as a digital input, which leaves it high-impedance (floating). These 3 input states make the chip output V+, V-, or nothing (off/floating). This corresponds perfectly to the 3 states per half bridge we'd be using with the previous discrete components design. So this savings in digital I/O pins means you can, in theory, drive more motors per microcontroller, which is pretty cool.
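
To illustrate why three tri-state pins are enough, here is a sketch of the textbook six-step (trapezoidal) commutation table. In every step, one phase is driven high, one low, and one is left floating. The step order and phase names are the standard textbook ones, not taken from any firmware in this project. Choosing which step to apply at a given moment needs rotor position sensing (Hall sensors or an encoder), which this sketch does not cover. The CSD95481RWJ's exact tri-state voltage window and timing should be checked in its datasheet.

```python
from enum import Enum


class PinState(Enum):
    HIGH = "high"    # digital output high -> half bridge drives phase to V+
    LOW = "low"      # digital output low  -> half bridge drives phase to V-
    FLOAT = "float"  # pin set as input (high impedance) -> both FETs off


# Textbook six-step commutation for phases (A, B, C).
# Each step drives current from one phase into another; the third floats.
SIX_STEP = [
    (PinState.HIGH, PinState.LOW, PinState.FLOAT),   # A -> B
    (PinState.HIGH, PinState.FLOAT, PinState.LOW),   # A -> C
    (PinState.FLOAT, PinState.HIGH, PinState.LOW),   # B -> C
    (PinState.LOW, PinState.HIGH, PinState.FLOAT),   # B -> A
    (PinState.LOW, PinState.FLOAT, PinState.HIGH),   # C -> A
    (PinState.FLOAT, PinState.LOW, PinState.HIGH),   # C -> B
]


def pin_states_for_step(step):
    """Return the three PWM-pin states for a commutation step (wraps around)."""
    return SIX_STEP[step % 6]


if __name__ == "__main__":
    for step in range(6):
        a, b, c = pin_states_for_step(step)
        print(step, a.value, b.value, c.value)
```

Each phase spends two steps high, two low, and two floating. On an Arduino-style microcontroller, HIGH and LOW would be ordinary output writes, and FLOAT would be switching that pin to input mode, as described above. In practice the HIGH phase is usually PWM-modulated to set torque; that is left out here.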

Well, I did a deep dive into the CSD95481RWJ IC route. I estimate it will cut the work roughly in half for every motor controller made. It should also cut the space taken up by about 60% compared to my previous discrete components approach.

Now, I will note that I did come across the BTN8982TA, which is rated to 40V and can handle 30A continuous and 50A peak in short bursts. But it comes in a TO-263 package, so it's about 4 times as big as the CSD95481RWJ. It also costs about $2 each, so double the price. It's not a bad option all things considered, just not quite as good as the CSD95481RWJ for the reasons mentioned. I note it here so I don't forget about it. It could be a great fallback if the CSD95481RWJ doesn't work out in the end.

Anyways, the thermal concern is my biggest concern. I plan to top cool the CSD95481RWJ using a 0.2mm copper plate fixed in place on top of the chip with thermal silicone. I'll then solder a bundle of 4 braided solder wick wires to that plate and run them off to the water-cooled copper pipe about 4" away. According to chatgpt, the top side only handles about 30% of the cooling. The most important 70% goes out through the pads on the chip's bottom.

For the bottom, I plan to use double-stacked 0.2mm copper plate (0.4mm total) soldered to the IC's pads. It will be around 2mm wide where it attaches to the pads. It will then route out from under the chip, swing upward into free space, and head over to the 8V+ and 8V- buses coming from the 8V motor battery banks in the robot's lower torso. Right near the IC, I will fork off these thick copper traces with braided solder wick wire for thermal conductivity reasons, a bundle of 4 per trace. This braided solder wick wire will be live, so I will wrap it in fiberglass window screen so nothing can touch it and cause a short circuit. Where it connects to the water-cooled copper pipe, it will be electrically isolated from the pipe with thermally conductive tape.

I will connect the various decoupling capacitors this chip calls for to its pins using a hand-made DIY flat flex PCB. I'll attach this PCB first. Then I'll attach the thick copper traces to the underside pads as a separate layer underneath the flat flex PCB layer. The flat flex PCB will mostly stay around the outside of the chip, with its center cut out, since that part would get in the way of the main pads under the chip. So the flat flex PCB will hug the outside of the IC in a U shape. That leaves the center of the bottom of the chip free for my thick copper traces to solder to.

Note: the thick copper traces will be cut out with scissors from a roll of 0.2mm copper sheeting I bought on Amazon, which I mentioned a few posts back. Double stacking it will double its thickness, which doubles its electrical and thermal conductance.

Note: in a usual setup with the CSD95481RWJ, a multilayer board with an array of vias carries the heat down off the chip and into a lower layer. There it spreads outward in every direction. In my approach, I use thicker copper than the layers of a multilayer PCB have, so I have a lot more local copper in play. The multilayer board moves heat down and then outward in all directions through very thin copper. Mine travels down and then in a single direction outward, away from the IC, along the trace. So the trace needs to get as wide as possible as soon as possible. I expect to go from 2mm width (the width of the pad) to 5mm within a few mm. This rapid widening, combined with copper much thicker than a multilayer PCB's layers, means I should be able to exceed the thermal performance of a multilayer board. This is especially true since I also plan to fork off the main traces right away with bundles of 4 solder wick braids. Those will carry the heat to a water-cooled pipe 4" away.
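
One rough way to compare the two approaches is one-dimensional conduction along a copper strip, R_th = L / (k · t · w). For a linear taper from width w0 to w1, this integrates to L · ln(w1/w0) / (k · t · (w1 − w0)). This sketch assumes k ≈ 390 W/(m·K) for copper and a typical 1 oz (about 35 µm) PCB layer. It ignores two-dimensional spreading (which is exactly what helps a multilayer board's planes), solder and via resistance, and the thermal silicone. Treat it as an order-of-magnitude comparison, not a prediction. The function name and geometry are illustrative.

```python
import math

K_COPPER = 390.0  # W/(m*K), approximate for pure copper (assumed)


def strip_thermal_resistance(length_mm, w_start_mm, w_end_mm, thickness_mm, k=K_COPPER):
    """1-D conduction resistance (K/W) of a strip whose width tapers linearly.

    For a linear taper, integrating dx / (k * t * w(x)) gives
    L * ln(w_end / w_start) / (k * t * (w_end - w_start)).
    A constant-width strip reduces to L / (k * t * w).
    """
    L = length_mm * 1e-3
    t = thickness_mm * 1e-3
    w0 = w_start_mm * 1e-3
    w1 = w_end_mm * 1e-3
    if math.isclose(w0, w1):
        return L / (k * t * w0)
    return L * math.log(w1 / w0) / (k * t * (w1 - w0))


if __name__ == "__main__":
    # 10 mm run tapering from the 2 mm pad width to 5 mm (example geometry)
    thick = strip_thermal_resistance(10, 2, 5, 0.4)    # double-stacked 0.2 mm sheet
    thin = strip_thermal_resistance(10, 2, 5, 0.035)   # typical 1 oz PCB copper
    print(f"0.4 mm copper: {thick:.1f} K/W")
    print(f"35 um copper:  {thin:.1f} K/W")
```

With these assumptions, the 0.4mm strip comes out about 11 times lower in thermal resistance than a 35 µm layer of the same outline: roughly 20 K/W versus about 220 K/W over 10mm. The real comparison depends on how much area the multilayer board's planes and via array cover, which this simple model does not capture.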

Note: my attached schematic only shows a single CSD95481RWJ IC because they are all wired up exactly the same way. It's just repeated 3 times, once for each of the 3 phase wires of the BLDC motor.

Note: I will also use a single electrolytic capacitor per motor controller, which is not pictured in the schematic either.

OK, so I was thinking about using both the discrete components motor controller parts I had already made and, separately, the integrated half-bridge IC design going forward. Then it hit me that on the half-bridge IC, 8V- and arduino GND must be tied together by necessity. But this ruins the isolation my discrete components motor controller design depended on, particularly in its lowside switching portion. On the lowside switching portion, the little mosfet has 12V- and arduino GND tied to its source pin. If I also have to tie arduino GND and 8V- together for the integrated half bridge, then 12V-, 8V-, and arduino GND are all tied together, always.

That completely ruined the isolation between arduino GND/12V- and 8V- that my lowside switch setup relied on. So that was bug #1, freshly introduced, which I would then need to solve in my discrete components motor controller design. While studying the discrete design, another error hit me. Whenever any lowside switch turned on, the dedicated 12V- power supply ground and the 8V- motor supply ground became connected for as long as that switch was on. Every lowside switch always had access to 8V ground on its source pin. So even one moment of 12V ground and 8V ground being connected anywhere on the robot would cause every lowside switch in the entire robot to turn on at the same time. If any turned on, all turned on. This was a huge oversight. I only designed and focused on one half bridge at a time conceptually, so I never considered the effects one half bridge has on its neighbors. It just never occurred to me. I guess I envisioned every half bridge having its own personal 12V ground from its own personal 12V supply, electrically isolated from the rest of the robot. But of course that's not practical, even if it's technically possible. So in testing, things did work, but they would have failed as soon as I tested more than one half bridge at a time. I caught this bug before testing revealed it.

I discussed this horrible situation with chatgpt. It explained that in a complex system like a robot, you cannot rely on the grounds of your different supply rails staying isolated from one another the way I was treating them. Even if they are isolated at times, one switch or one change and suddenly they are not, and it all becomes a common system ground again. I can't safely assume the ground for any given voltage is disconnected from the grounds of the others. So I should not rely on switching a lowside switch's access to a particular ground on and off. Instead, I should shut the lowside switches off by shorting their gates to ground, rather than messing with their source pins' ground connections like I was before. The source pin stays connected to 12V- and the gate to 12V+ at all times, except when I want to shut it off. At that point I short the gate pin to ground using my logic-level mosfet.

The fix was very straightforward and minor. I just had to add a 100 ohm resistor in series with the gate pin of my big power mosfet lowside switch. Then I rerouted the drain pin of my little a09t mosfet to the big lowside mosfet's gate pin instead of its source pin. The connection must be made on the gate side of that 100 ohm resistor. That way the path from the big mosfet's gate through the little mosfet's drain and source to ground has almost zero resistance. When you turn on the little mosfet, the big mosfet's gate capacitance quickly discharges through that path to ground, which shuts the big mosfet off. When you want it back on, you turn the little mosfet off. That lets the big mosfet's gate capacitance charge up again through the 100 ohm resistor, turning the big mosfet back on. So the setup now acts like a normally-on relay.

Note: the little mosfet used to feed into the source line through a resistor on its drain line. That resistor is now removed. We want no resistance there, because it would slow the little mosfet's discharge of the big mosfet's gate capacitance. With the little mosfet on, the 100 ohm resistor and the little mosfet's very low on-resistance form a voltage divider across the 12V+ supply. Nearly all of the 12V drops across the resistor, and the current that makes it past the resistor is diverted straight to ground. So the big mosfet's gate sits near 0V. That's why it's called a "pull-down" path to ground.
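
Here is a small sketch of the arithmetic behind the pull-down fix. With the little mosfet on, the 100 ohm resistor and the little mosfet's on-resistance form a voltage divider across the 12V gate supply. Current keeps flowing through the resistor the entire time the big mosfet is held off. The 0.05 ohm on-resistance and 5 nF gate capacitance below are placeholder assumptions, not values from the actual parts; substitute the datasheet figures.

```python
def pulled_down_gate_voltage(v_supply, r_series, r_ds_on):
    """Gate voltage when the little mosfet is on: a simple voltage divider."""
    return v_supply * r_ds_on / (r_series + r_ds_on)


def pull_down_current(v_supply, r_series, r_ds_on):
    """Current flowing supply -> series resistor -> little mosfet -> ground."""
    return v_supply / (r_series + r_ds_on)


def series_resistor_power(v_supply, r_series, r_ds_on):
    """Continuous dissipation in the series resistor while the big mosfet is off."""
    i = pull_down_current(v_supply, r_series, r_ds_on)
    return i ** 2 * r_series


def turn_on_time_constant(r_series, c_gate):
    """RC time constant (s) for the gate to recharge through the series resistor."""
    return r_series * c_gate


if __name__ == "__main__":
    V, R, RDS, CG = 12.0, 100.0, 0.05, 5e-9  # RDS and CG are assumed values
    print(f"gate while off: {pulled_down_gate_voltage(V, R, RDS) * 1000:.1f} mV")
    print(f"pull-down current: {pull_down_current(V, R, RDS) * 1000:.0f} mA")
    print(f"100 ohm resistor dissipation: {series_resistor_power(V, R, RDS):.2f} W")
    print(f"turn-on tau: {turn_on_time_constant(R, CG) * 1e9:.0f} ns")
```

The number worth checking is the resistor power. At 12V, about 120 mA flows and the 100 ohm resistor dissipates roughly 1.4W continuously whenever the big mosfet is held off. It needs a suitably rated part. A larger resistor would reduce that, at the cost of a slower turn-on time constant.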

Attached is a photo of my schematic before and after the fix.

Attached is a photo of full updated schematic with the changes in place.

That all having been said, this original discrete components BLDC motor controller design, now fixed, is worth keeping archived. But it is basically abandoned now that I have the time-, money-, and space-saving shortcut of my integrated half-bridge IC based design. It's kind of sad to abandon something I spent so much time on. But who knows, I may still use it if I ever come across a motor I can't find a cheap integrated half-bridge IC for. It's a great schematic to have ready for that scenario.

OK, so each half bridge is now just a tiny IC rather than a pair of hefty power mosfets. That means my entire BLDC motor controller is going to take up only about 3cm x 1cm x 2mm, which is insanely small for an 8V, 30A continuous motor controller! My original intention for the discrete components design was a single motor controller cluster that sits like a horse saddle on the side of my BLDC motor. This realization made me reconsider whether I even need that. Things are now so small that I can simply build the half bridge for each phase as an inline element, nested inside the cable run leading to that phase wire of the BLDC motor. So instead of a dedicated spot for each motor controller, I'll just have a slight bulge in each phase wire leading out from the BLDC motor. That bulge will contain the half bridge that handles that phase. All nested inline. I think this is the easiest and most streamlined way to implement it. It also means the whole motor controller will just be "floating" in midair, not mounted to any motor or anything at all, just part of the wire harness. This is a radical approach IMO, only made reasonable by how drastically we miniaturized the design.

So the previous version of the schematic was meant to be mounted to the side of the motor like a horse saddle, and it had an L shape. Inputs came up from the bottom and outputs went out the left side toward the motor phase wires. These L-shaped half-bridge setups would be stacked side by side. In the new variation everything is inline: inputs come in from the right and outputs exit the left side to the motor.

Here's the updated inline variation of the schematic (no longer L shaped flow like before).

This young YouTuber goes into lots of detail about motor control for robots. That said, he uses the ESP32, not the Propeller.

-- https://www.youtube.com/@h1tec/videos

Wow, great link! I binged most of his channel after I clicked it.

Motor control should have been the first step. It is far from easy. It needs absolute encoder feedback and many Prop-2 processors for coordination/kinematics.

I saw something on a drawing suggesting back-EMF for tracking rotor angle. Not happening.

OK, so I realized I don't have to feed in 8V+ from an external wire when I can just pull it from a neighboring pin on the chip. One of the big underside 8V+ pads, which my big 8V+ copper traces attach to, connects directly to one of the side pins. So that side pin already has 8V+ on it. It can then be routed to any pins that require 8V+, as long as I can find a path for the routing, which I did. So that's one less external wire input. It brings the total number of 30ga external input wires I need to attach down from 4 to 3: 5V+ from the arduino, GND from the arduino, and PWM from the arduino. This saves work and simplifies the wiring, so it's a great improvement.

Oh, and I also did the same thing for the 8V- feed, pulling it from a local pad rather than an external wire.

Also, I have separated out the PCB traces themselves and made them black instead of blue. That's for printing them onto the PCB transfer paper and laminating it onto the blank Pyralux flex PCBs for etching. I also mirrored the image, since it prints and laminates backwards onto the PCB.

So I ran into some issues making the DIY flex PCB for my integrated half-bridge IC. This chip has an extremely fine 0.4mm pin pitch, so the PCB has to be insanely accurate. My previous discrete components BLDC motor controller let me get away with much cruder, less dialed-in flex PCBs, and things still worked. But this flex PCB has to be very dialed in and executed with very high precision. This is no joke. The first issue is that my laser printer's toner does not adhere well to the PCB transfer paper I bought on Amazon. Some of the toner shows up mirrored an inch or so away from where the print originally lands on the transfer paper.

This means the toner isn't fusing onto the transfer paper well enough. It is coming off onto the fuser roller or something, contaminating it. Over time that can ruin my printer's performance and cause improper fusing on all prints going forward, even for normal office use. That could mean addresses I print on envelopes smearing off in transit and envelopes getting lost in the mail. This is VERY VERY bad. So I had to ditch this transfer paper.

Thankfully chatgpt recommended glossy magazine paper, so I gave that a try. I used Psychology Today magazine paper and the print went onto it PERFECTLY. I used the 600 dpi setting with the paper type set to heavy. I prepped my copper flex PCB blank (Pyralux) with 400 grit sandpaper followed by alcohol prep pad wipes. Next, I laminated the glossy magazine print onto the blank with a few passes through my laminator. Then I soaked the magazine and flex PCB sandwich in 110F water for around 30 minutes, which turned the glossy magazine paper mushy/pulpy. I then rubbed the paper repeatedly with my fingers, working in from the outside edges, and was able to roll it off gradually and gently. It came off in two layers. It leaves a bit of pulp residue on the pads, but that's ok. It doesn't affect the etching later, so you can leave it. With this method I got the cleanest traces EVER.

But when I went to etch this clean PCB with the toner in place, things fell apart again. I used room temperature water (68F) in my ammonium persulfate crystal and water mixture, and I didn't agitate the etchant much. The etching took about 2.5-3 hours! That is horrible etching speed. The larger copper areas took their sweet time to dissolve. Meanwhile, the finer traces got undercut so badly that all the copper under the toner dissolved away. The toner came off with nothing left to stick to, and those sections were lost. Total etching time is supposed to be no more than 5-15 minutes; 3 hours is totally unacceptable. I found out from chatgpt why it took forever: I failed to heat the etchant to 110-120F, and I failed to agitate the mix (stirring, vibrating, or whatever helps). The usual rule of thumb is that the etch rate roughly doubles for every 10C (18F) rise. So heating from 68F to 110F should make it about 5 times faster on its own, and good agitation should cut the time further toward the expected range. The instructions on the container of etchant crystals said room temperature with no agitation is fine. THEY WERE WRONG for SURE on that.
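
Here is a sketch of that temperature estimate. It assumes the common chemistry rule of thumb that reaction rate roughly doubles for every 10C (18F) rise. It only models temperature. Agitation and fresh etchant usually help a lot on top of this, and the rule itself is approximate.

```python
def f_to_c_delta(delta_f):
    """Convert a temperature *difference* from Fahrenheit to Celsius degrees."""
    return delta_f * 5.0 / 9.0


def estimated_etch_minutes(base_minutes, base_temp_f, new_temp_f, doubling_c=10.0):
    """Scale an observed etch time assuming the rate doubles every `doubling_c` C."""
    delta_c = f_to_c_delta(new_temp_f - base_temp_f)
    speedup = 2.0 ** (delta_c / doubling_c)
    return base_minutes / speedup


if __name__ == "__main__":
    observed = 165.0  # ~2.75 h at 68 F, unagitated (midpoint of 2.5-3 h)
    for temp in (110, 120):
        minutes = estimated_etch_minutes(observed, 68, temp)
        print(f"{temp} F: ~{minutes:.0f} min (temperature only)")
```

Under this rule, heating alone brings the 2.5-3 hour etch down to roughly 20-35 minutes across 110-120F. Getting into the 5-15 minute range would rely on agitation as well.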

So to perfect the etching process, I plan to use a temperature-adjustable AC hot plate I already own, the kind for heating pans or kettles. I also decided the easiest way to agitate would be to put the etchant and PCB in a small container. Then I'll build an apparatus that rhythmically lifts and lowers one side of the container, so the etchant rocks back and forth across the PCB. Below is my simple apparatus design for that. The advantage of this approach is that it's free if you have the very few parts needed. I can power it with my lab power supply. The n20 gear motor is like $1. It's super easy to make and can easily handle my 15g of etchant sloshing around. Nothing goes INSIDE the acid that would spin, crash into the PCB, and possibly cause issues there. We want just a bare minimum batch of etchant per PCB job. In that little liquid, even a magnetic stir bar setup would be hitting the PCB and tossing it around violently. I didn't want that, and I didn't want to fish an acid-covered stir bar out and clean it either. I prefer nothing going into the acid but the PCB itself. So rocking the whole container makes sense and solves that problem.

The rocker apparatus consists of an n20 gearmotor ($1 on aliexpress), a little wheel (paper and superglue composite), string, and a little block to raise the motor higher than the etchant container. As the n20 gearmotor rotates, it lifts the string, raising one end of the etchant container. By 180 degrees of rotation (6 o'clock) it has lowered the container back down. It repeats this raising and lowering over and over. That makes the etchant slosh back and forth over the PCB, speeding up the etching reaction and carrying copper ions away from the PCB surface more efficiently. I don't think a PWM motor controller is needed. You can change the rocking speed by changing the voltage on the lab power supply and/or the radius of the wheel doing the lifting and lowering.
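
Here is a sketch of the rocker's geometry and speed. It assumes the string is tied at radius r on the wheel, so each revolution lifts and lowers the container edge by 2r, with one rock cycle per revolution. It also assumes the gearmotor's speed scales roughly in proportion to supply voltage. That is a first-order approximation for a small brushed DC motor that ignores load. The 60 rpm at 6V rating, 8mm tie radius, and 100mm container length are hypothetical example values, not specs of any particular N20.

```python
import math


def lift_stroke_mm(wheel_radius_mm):
    """Peak-to-peak lift of the container edge: the tie point moves 2r vertically."""
    return 2.0 * wheel_radius_mm


def tilt_angle_deg(stroke_mm, container_length_mm):
    """Approximate max tilt if the far edge of the container stays on the table."""
    return math.degrees(math.asin(min(1.0, stroke_mm / container_length_mm)))


def motor_rpm(rated_rpm, rated_volts, supply_volts):
    """First-order estimate: brushed DC speed is roughly proportional to voltage."""
    return rated_rpm * supply_volts / rated_volts


if __name__ == "__main__":
    stroke = lift_stroke_mm(8.0)            # 8 mm tie radius (example)
    angle = tilt_angle_deg(stroke, 100.0)   # 100 mm long container (example)
    rpm = motor_rpm(60.0, 6.0, 3.0)         # hypothetical 60 rpm @ 6 V motor run at 3 V
    print(f"stroke {stroke:.0f} mm, tilt ~{angle:.1f} deg, {rpm:.0f} rocks per minute")
```

Under these assumptions, the two knobs mentioned above map directly. Wheel radius sets how far it rocks (and how fast the edge moves). Supply voltage sets how many rocks per minute.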

So I was randomly watching YouTube recently and saw a George Hotz past broadcast titled "George Hotz | Programming | Welcome to Gas Town and the future of Computer Use | Agentic AI | Part 1". It kind of shocked me to see this. For some years now I had been under the impression that vibe coding was for total noob programmers. I pictured incompetent, horrible coders happy to throw together absolute trash, bug-filled code with LLM help and call it done. I assumed that if they ever needed to go back, fix the code, and remove bugs, it would be more trouble than it was worth because of the horrible code the LLM output. Rewriting it all from scratch would be easier than fixing its bugs. I'd been watching this space closely, and that was the consensus I thought was at hand. But this video threw a monkey wrench into that consensus. Here, a world-renowned, god-tier coder in George Hotz was using agentic AI and saying it's the future of coding. Kind of blew my mind. I then deep dove into agentic AI. I looked into how agents work, what the agentic loop is, what agent tools and agent skills are, what good vibe coding practice is, good vs bad vibe coding methodologies, how much it costs, etc. I discovered tools called Claude Code, Codex, OpenCode, and OpenClaw. I also found people raving about them or warning about them and making fun of them. It is an incredibly polarizing topic, with people adamantly for and against it all. I even listened to the 11.5-hour Vibe Coding audiobook by Gene Kim and Steve Yegge. It was a huge deep dive for me.

In the end I drew several conclusions, or hypotheses, and chose the following stances. I think full-blown vibe coding of important, low-level, infrastructure-heavy, lengthy, and complex projects is not a good idea now and maybe ever. But I think LLM-assisted coding can handle them. Vibe coding means you don't even really look at the code and trust the LLM (and the agentic AI using it) completely. That is probably only doable for very simple things, and the code will be buggy and ugly in many ways. Not useful for 99% of my goals. Maybe that can work for front-end web UIs, but not for anything intensive and low level. That includes computer vision, game engines, AI dev, making an OS, speech synthesis or speech recognition engines, SLAM, etc. It would be horrible for that IMO.

So for my goals, how can I best use LLM-assisted coding? Well, my chosen LLM at this time is chatgpt. How will I use it? I can use it to co-develop plans and algorithm workflows for modules or sections of code, one step at a time, working through it all. Once that is done and saved, we can go step by step together, writing new code or editing existing code as needed. We complete the steps one at a time and test thoroughly as we go. In some ways I had already been doing some of this. A few years back I used chatgpt while coding as a stack exchange substitute or ask-a-friend type assistant and got SOME coding help from it. At that time I didn't have it producing much code for me, though. You can see videos of me coding this way on my YouTube robotics playlist. But now I plan to take LLM coding assistance to a whole other level, in a novel way I came up with. My IDE of choice, Dev C++ 4.9.9.2, has no copilot AIs or whatever to code along with you. Those are mostly autocomplete AIs anyway; they don't code for you. So that's not what I want. I also don't want to use any other IDE, for various reasons we won't go into here. So what I decided to do is make my web browser full-screen and stay on the chatgpt chat interface THE WHOLE TIME I am coding. No alt-tabbing, no terminal use, no IDE. Just the chatgpt web page, for the most part. That's the aim. So how can I code like this and still test the code and whatnot? 
Well, basically chatgpt and I came up with the idea of a program we called "The Orchestrator." It would run on my PC and read the chat I'm having with chatgpt, including the code chatgpt writes or wants to change in my codebase. It would then validate that code change request or snippet addition. It would make sure the code fits our desired formatting and coding style and doesn't break any rule or screw anything up. It might also query another LLM with the proposed change plus a copy of the surrounding code module, asking whether it will break anything, whether it will do what it claims, etc. After an extensive series of validation steps, the Orchestrator would either report back that the code was faulty, with a reason why, or determine that the code is good and passed its checks. It would report back via a popup window message and/or optionally text to speech, so I hear it talking to me, which might be kind of cool. It would put its response on my clipboard and tell me to paste it into chatgpt, which I would then do and hit enter to submit. Chatgpt would then see what it screwed up and rewrite the code after apologizing or whatever. This would repeat until the Orchestrator approved the code. Upon approval, it would bring up a popup window that acts as an IDE. It would contain the codebase file we are editing and let me scroll up and down to read my code, etc. New code chatgpt recommends adding would be highlighted with a green background within my codebase. If it's a change to existing code chatgpt had written, the screen for that section would split into two halves. The left half would show the original code being modified. The right half would show the proposed code, with the actual changes highlighted with a red background. 
I would read both at that point, make any modifications I want right there in that window, and hit OK if I approve. Besides co-planning with chatgpt and talking back and forth until I like the plan and the code steps, my job is to read and approve all code changes before they go in and make any final tweaks. Ideally I no longer actually write the code; I just help plan and give approvals. I have to understand the codebase deeply the whole time, I give all approvals, and at no point do I let the LLM go haywire on my codebase. Compared to full automation with a local AI agent, it's a slow and methodical process where I retain full understanding and control the whole time. This I feel comfortable with. This setup is my planned coding workflow. It doesn't have me using the terminal or an IDE. It's a custom, kind of weird LLM-chat-plus-popup-windows IDE kind of "thing".
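
Here is a minimal sketch of the review step, under stated assumptions. It uses Python's standard `difflib.SequenceMatcher` to find which lines a proposed change touches. An "insert" region would be the green new-code view, and a "replace" region the red side-by-side view. It also runs one toy validation rule (brace balance) of the kind the Orchestrator might run. The function names and the rule are hypothetical illustrations, not actual Orchestrator code. A real checker for C++ would need to skip braces inside strings and comments.

```python
import difflib


def changed_regions(original, proposed):
    """Return (tag, orig_range, new_range) for every changed region.

    Ranges are half-open (start, end) line indices. 'insert' regions are pure
    additions (green view); 'replace' regions are modifications that would
    get the left/right split view.
    """
    a = original.splitlines()
    b = proposed.splitlines()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    return [
        (tag, (i1, i2), (j1, j2))
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


def braces_balanced(code):
    """Toy check: every '{' has a matching '}' (ignores strings/comments)."""
    depth = 0
    for ch in code:
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth < 0:
                return False
    return depth == 0


def review(original, proposed):
    """Return a short report the Orchestrator could put on the clipboard."""
    if not braces_balanced(proposed):
        return "REJECTED: unbalanced braces in proposed code"
    changes = changed_regions(original, proposed)
    return f"OK: {len(changes)} changed region(s) to review"


if __name__ == "__main__":
    old = "int f() {\n  return 1;\n}\n"
    new = "int f() {\n  return 2;\n}\n"
    print(review(old, new))
    print(changed_regions(old, new))
```

A rejection string like this is what would get pasted back into chatgpt. An "OK" would open the approval view at the listed line ranges.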

The advantages of this approach are that it takes much less brainpower, since I'm not looking up APIs to find what I want to use or manually typing all the code. It also saves keystrokes, which keeps my fingers from overworking and should reduce the risk of carpal tunnel and whatnot. It should greatly speed up my coding compared to manual typing, especially once all the features I want the Orchestrator to have are working.

Note: when a new session starts, I will give ChatGPT a big context dump telling it its role, our plans, our short-term and long-term goals, and the keyword trigger commands it can give the Orchestrator to get things rolling, such as having it go out and find files or directory structures in the codebase to help ChatGPT navigate. Working together with ChatGPT, the Orchestrator will then provide a context snapshot that catches ChatGPT up on what the code does so far for the module we are working on, what steps were done recently, what the next steps are, etc.
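The trigger keywords could be as simple as one command per line in ChatGPT's reply. Here's a minimal sketch of a parser, assuming a made-up "@ORCH COMMAND argument" syntax and a hypothetical whitelist of commands; the real keyword list would come from the context dump. Anything not on the whitelist gets reported back instead of executed, which fits the "never let the LLM go haywire" rule.

```python
import re

# Hypothetical syntax: one command per line, e.g. "@ORCH LIST_DIR src/balance".
COMMAND_RE = re.compile(r"^@ORCH\s+([A-Z_]+)(?:\s+(.*\S))?\s*$")

# Hypothetical whitelist; anything else is reported back to ChatGPT, never run.
KNOWN_COMMANDS = {"LIST_DIR", "READ_FILE", "SHOW_TODO"}


def parse_commands(chat_text):
    """Return (commands, errors) found in a pasted ChatGPT reply.

    commands is a list of (name, argument) tuples in the order they appear;
    argument is "" when the command has none. Ordinary prose lines are ignored.
    """
    commands = []
    errors = []
    for line in chat_text.splitlines():
        match = COMMAND_RE.match(line.strip())
        if match is None:
            continue
        name, arg = match.group(1), match.group(2) or ""
        if name in KNOWN_COMMANDS:
            commands.append((name, arg))
        else:
            errors.append("Unknown command: " + name)
    return commands, errors


reply = "Sure, let's look around.\n@ORCH LIST_DIR src/balance\n@ORCH SHOW_TODO\n@ORCH DELETE_ALL\n"
print(parse_commands(reply))
# ([('LIST_DIR', 'src/balance'), ('SHOW_TODO', '')], ['Unknown command: DELETE_ALL'])
```

The errors list is what would go back on the clipboard for pasting into ChatGPT, so a typo'd or made-up command costs one paste instead of doing something unexpected.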

Note: right now, the way ChatGPT talks to the Orchestrator is that I manually copy ChatGPT's text output from the browser. The Orchestrator polls the clipboard to read these text inputs, parses them, and acts on them accordingly. Soon I hope to develop a way for the Orchestrator to read ChatGPT's output directly: by scraping the browser's DOM, by creating a browser extension that writes the output to a file or sends it to the Orchestrator over a WebSocket, or perhaps eventually by having the Orchestrator use computer vision to literally read the pixels on the screen, just as my own eyes do. I'm not sure which direction I'll go on that front yet, but I feel that's coming soon. It will make the system even easier to use.
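The clipboard polling boils down to "read, compare with the last value, act on changes". Here's a minimal sketch, assuming the clipboard reader is passed in as a function so the loop can be tested without a real clipboard; poll_clipboard and its parameters are hypothetical names, not the existing orchestrator.py code. It records whatever is already on the clipboard at startup so stale text doesn't fire a command.

```python
import time


def _safe_read(read_clipboard):
    """Return clipboard text, or None if it can't be read (empty, non-text, busy)."""
    try:
        return read_clipboard()
    except Exception:
        return None


def poll_clipboard(read_clipboard, handle_text, interval_s=0.5, max_polls=None):
    """Call handle_text(text) every time the clipboard text changes.

    read_clipboard: zero-argument function returning the current clipboard text.
    max_polls: number of reads after startup before returning (None = run forever).
    Returns how many changes were handled.
    """
    last = _safe_read(read_clipboard)  # ignore whatever was already copied at startup
    handled = 0
    polls = 0
    while max_polls is None or polls < max_polls:
        time.sleep(interval_s)
        polls += 1
        text = _safe_read(read_clipboard)
        if text is not None and text != last:
            last = text
            handle_text(text)
            handled += 1
    return handled
```

For a real reader with nothing beyond the standard library, one option is tkinter: create a hidden window with root = tkinter.Tk() followed by root.withdraw(), then pass root.clipboard_get, which raises tkinter.TclError when the clipboard holds no text (the sketch treats that as "no change"). How reliably that picks up new clipboard contents without a running Tk main loop is an assumption worth testing on the specific Python version in use.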

Note: most local AI agents either have to use a local LLM (a good one costs a lot in hardware, which is super inflated in price right now as well) or a cloud LLM API (charged by tokens used or a monthly subscription; heavy use gets extremely expensive, with guys like Yegge saying they spend around $3k/month on tokens, WOW!). Unlike them, I chose to just use free ChatGPT as my LLM hookup, and that's good enough. Going that route, without an API, I can't brute force problems as fast or as parallelized as some AI agents do, but that's fine. I'm not having AI write my entire program and beat its head against a wall for ages trying to fix its own bugs, wading through its own spaghetti code and making stupid mistakes until, after a million tokens, it eventually finds its way like a blind man bumping into stuff in the dark. Since I'm co-coding myself, reading everything and preventing bad code changes from making it into my codebase, I don't need that kind of brute forcing, massive token use, or fast calls. My usage can still fall into the normal expected-use category and not get rate limited or get me banned. I'm still the only one interacting with ChatGPT through my mouse and keyboard; all inputs are done manually by a human user. So yeah, with my system the LLM co-piloting is FREE, which is important for me on my tight budget.

So anyways, ChatGPT and I developed this system of using the Orchestrator as a third copilot: ChatGPT is my copilot and the Orchestrator is our third copilot. The Orchestrator acts as ChatGPT's assistant, extending its abilities beyond the browser so it can do things online or with my file system, just like local AI agents do. So it is loosely inspired by the same concept as a local AI agent. I'm excited about this tool and workflow. I think it will vastly speed up my overall development while still giving me fine-grained control. The downsides of automated code production associated with vibe coding don't really apply here, since I'm not taking myself out of the loop the way people warn about.

To make the Orchestrator, ChatGPT highly recommended we use Python. I'd never coded in Python before and was under the impression it's much slower than C/C++, so no good for anything in my robotics projects. However, since this is basically just a weird LLM-assisted coding assistant we're making, I figured it's probably fine for that use case. So I gave in and downloaded an old version of Python that runs on my machine. Within a few days, with ChatGPT's help, I got Python installed and working and learned to write my Python code in a plain text file that I edit in Notepad on Windows. I use a .bat file to launch orchestrator.py, and it launches and works. We've got it polling the clipboard, taking action when valid clipboard changes come in, and successfully doing some simple things ChatGPT tells it to do.

I'm also working on a boot sequence: ChatGPT commands the Orchestrator to run it, and it opens a window asking me to select which coding project I want to work on today from a list stored in a .txt file. I press a letter on my keyboard to pick a project from that list. The Orchestrator then pulls up that project's .txt context file from its directory of project-specific context files, puts that file plus a list of trigger keywords and instructions ChatGPT can use on the clipboard, and tells me to paste in the context. I paste it in, and ChatGPT is instructed to use a series of command trigger words to open up the codebase for that project, explore its files, directory structure, and various sections of code, find out where we left off, and see what the next steps are on that project's coding to-do list, if any. If there are none, it works with me to create the next steps of the to-do list. Then we can start coding.
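The project picker and context paste in that boot sequence could look roughly like this. It's a sketch under assumptions: the project list is one name per line, letters a-z map to projects in list order, and each project's context lives in a hypothetical contexts/<project>.txt file. None of these names or file layouts come from the actual Orchestrator.

```python
import os
import string


def load_projects(list_text):
    """One project name per line; blank lines and '#' comment lines are skipped."""
    names = []
    for line in list_text.splitlines():
        name = line.strip()
        if name and not name.startswith("#"):
            names.append(name)
    return names


def build_menu(project_names):
    """Map 'a', 'b', 'c', ... to project names in list order."""
    if len(project_names) > 26:
        raise ValueError("a single-letter menu supports at most 26 projects")
    return dict(zip(string.ascii_lowercase, project_names))


def choose_project(menu, key):
    """Return the project for a pressed key, or None if the key isn't on the menu."""
    return menu.get(key.lower())


def load_context_file(folder, project):
    """Read the hypothetical per-project context file, e.g. contexts/vision_sim.txt."""
    path = os.path.join(folder, project + ".txt")
    with open(path, encoding="utf-8") as f:
        return f.read()


def build_context(project, context_text, trigger_help):
    """Assemble the text the Orchestrator puts on the clipboard for pasting into ChatGPT."""
    return "\n\n".join([
        "PROJECT: " + project,
        context_text.strip(),
        "AVAILABLE COMMANDS:\n" + trigger_help.strip(),
    ])


menu = build_menu(load_projects("robot_firmware\n\n# retired\nvision_sim\n"))
print(menu)                       # {'a': 'robot_firmware', 'b': 'vision_sim'}
print(choose_project(menu, "B"))  # vision_sim
```

Keeping the list and context files as plain .txt means they stay editable in Notepad, the same way orchestrator.py itself is edited.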

So far orchestrator.py is about 500 lines of Python. It's all bug-free and working great. I took the time to carefully read and understand all of it, and I now carefully read and understand every change ChatGPT proposes for it. Only then do I add it and test it. Eventually, though, my aim is to have the Orchestrator read the original code and the proposed changes or additions and validate and filter them before I personally read and approve them. The idea is that my brainpower is limited and I only have so much mental fuel in the tank for a given session. So the Orchestrator will check whether ChatGPT is doing something clearly dumb in its change or addition request, catch it, and have me paste the problem back to ChatGPT by bringing up a popup window that says "paste this to chatgpt", and I just paste it. No thinking needed. ChatGPT corrects itself and tries again. Only once the Orchestrator approves do I get a popup window showing the old code and the change, with the changes or additions highlighted, for me to read carefully and approve or reject. By taking my brain out of the loop for the dumb mistakes, the Orchestrator mostly shields me from petty code corrections and filters out the obvious LLM blunders so I don't have to. That saves me frustration and brainpower, since I only see the good stuff that makes it through the filter, and it makes the system more frictionless, with less time-wasting nonsense to deal with. And the idea of possibly sending a complicated change off to Gemini or whatever, using their free API tier, when the Orchestrator wants a second opinion should be icing on the cake. All of this happens behind the scenes while I wait.
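The "catch the obviously dumb stuff" filter could start with checks that need no second LLM at all. Below is a minimal sketch, assuming proposed snippets are Python: it uses the standard ast module to confirm the snippet parses and to flag calls from a hypothetical blacklist, plus a tab-character check standing in for the project's real style rules. The rule set and the wording of the feedback text are made up for illustration.

```python
import ast

# Hypothetical blacklist standing in for the project's real coding rules.
FORBIDDEN_CALLS = {"eval", "exec"}


def check_snippet(code):
    """Return a list of problems in a proposed Python snippet; empty means it passed."""
    problems = []
    if "\t" in code:
        problems.append("Uses tab characters; the codebase uses spaces.")
    try:
        tree = ast.parse(code)
    except SyntaxError as err:  # IndentationError is a subclass, so it lands here too
        problems.append("Syntax error on line %s: %s" % (err.lineno, err.msg))
        return problems
    for node in ast.walk(tree):
        # Only plain-name calls like eval(...) are checked, not obj.method(...).
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in FORBIDDEN_CALLS:
                problems.append("Line %d calls forbidden function %s()."
                                % (node.lineno, node.func.id))
    return problems


def feedback_for_chatgpt(problems):
    """Text the Orchestrator would put on the clipboard with a 'paste this to chatgpt' popup."""
    lines = ["The Orchestrator rejected your code change:"]
    lines.extend("- " + p for p in problems)
    lines.append("Please fix these issues and resend the full change.")
    return "\n".join(lines)


issues = check_snippet("x = eval('1 + 1')\nprint(x)\n")
print(issues)  # ['Line 1 calls forbidden function eval().']
if issues:
    print(feedback_for_chatgpt(issues))
```

One catch: a snippet that is an indented fragment of a larger function won't parse on its own, so it would need textwrap.dedent first, or the check could run on the whole file with the change applied.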

I will say this: getting as far as I did with the Orchestrator in just a few days is a marvel for me. I'm not that fast, but with ChatGPT's guidance I'm so much faster. I found a 5.5-hour tutorial on how to install Python, get it going in an IDE, create projects, etc., watched some of it, and realized I didn't need to do any of that. ChatGPT had me up and running Python in under an hour, as someone who had never even used it before, and we had working code within that hour. It was amazing. Super fast for me. ChatGPT still often makes dumb mistakes and writes bad code, but with the Orchestrator's guardrails, a tightly designed context window and guidance, plus all the filtering and validation steps, this can be an amazing semi-automated coding workflow.

So that's where I'm at. It's exciting stuff. This doesn't mean I'm stopping the electronics development. I plan to do this in parallel with that, so electronics updates will still come. But I just couldn't stick to the plan of waiting to do ANY coding until all the electronics are done. It's been about 3-4 years since I last worked on coding for the robot project, and I couldn't hold off anymore. I think coding some, then working on electronics some, then coding some more is fine. A bit all over the place, but so be it. I got caught up in the AI-assisted coding hype and couldn't help jumping in to figure out how I can best use AI in my coding adventures in a way that fits my needs. PLUS, if I spend many more years NOT coding, I'm somewhat concerned that what I've already coded and learned and still remember would fade more and more, and I'd basically have no clue what I was trying to do. I wanted to get back into my code before the mental skeleton of its architecture is gone from memory, which would make getting back into it harder. It's a lot to keep in my head for so long without actively spending time in the codebase. So yeah, that's my other excuse for going back into it "prematurely."

Note: my first impressions of Python were very, very good. I was amazed at how much it achieves with so few lines of code. I feel the 500 lines it has so far would have taken something like 3,000 lines of C/C++. It's crazy. It's very intuitive to use, and I already just get it. Granted, ChatGPT is doing the heavy lifting, so I'm not actually typing it out myself. But that doesn't matter as long as I understand what it's all doing and how, so I can keep its architecture clear and consistent and avoid bugs.