"50% volume" is totally meaningless. That's how you recognize serious audio hard/software, everything's in dB scale

If you just want to smoothly fade in/out, you can strength-reduce a power calculation as multiplying the volume by a value near 1 every sample. For setting the constant volume, just use the raw gain factor, anything else is unnecessary and confusing
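As a rough illustration of the strength-reduction idea, here is a small Python model (not driver code). The function name and the 48 kHz / 60 dB figures are just assumptions for the example. It computes the per-sample ratio once, then uses a single multiply per sample instead of a power calculation.

```python
def fade_gains(start_gain, end_gain, num_samples):
    """Yield one gain per sample, moving exponentially from start_gain towards end_gain.

    Both gains must be > 0; an exponential fade can approach zero but never reach it.
    """
    # One pow() up front; after that the fade costs a single multiply per sample.
    ratio = (end_gain / start_gain) ** (1.0 / num_samples)
    gain = start_gain
    for _ in range(num_samples):
        yield gain
        gain *= ratio


# Example: fade from unity down by about 60 dB over one second at 48 kHz.
gains = list(fade_gains(1.0, 0.001, 48000))
```

The ratio ends up very close to 1 (about 0.99986 here), which is the "value near 1" being multiplied in every sample.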

@Wuerfel_21 said:

"50% volume" is totally meaningless. That's how you recognize serious audio hard/software, everything's in dB scale

If you just want to smoothly fade in/out, you can strength-reduce a power calculation as multiplying the volume by a value near 1 every sample. For setting the constant volume, just use the raw gain factor, anything else is unnecessary and confusing

The driver just uses the raw SCAS factor yeah, was just thinking about some API to set volume from the user side which was easy to use. I could do two versions, one with percent the other with raw factor from 0-4096.

    PUB setHdmiVolumePercent(display, lvol, rvol) ' lvol, rvol from 0-100
    PUB setHdmiVolumeLevel(display, lvol, rvol) ' lvol, rvol from 0-4096

Came up with this for now. It's sounding okay I guess. I tweaked it so the final step from 1 to 0 is less discernible. 50% is now about (1/sqrt(2))^7 of full amplitude, roughly -21dB, which is slightly more attenuation than -20dB.

    PUB setHdmiVolumePercent(display, lvol, rvol) : result
        lvol #>= 0
        rvol #>= 0
        lvol <#= 100
        rvol <#= 100
        if rvol
            rvol := qexp($70000000 - muldiv64(700 - 7*rvol, 1<<27, 100)) & $7fff
        if lvol
            lvol := qexp($70000000 - muldiv64(700 - 7*lvol, 1<<27, 100)) & $7fff
        result := lvol<<16 | rvol
        long[display][6] := result

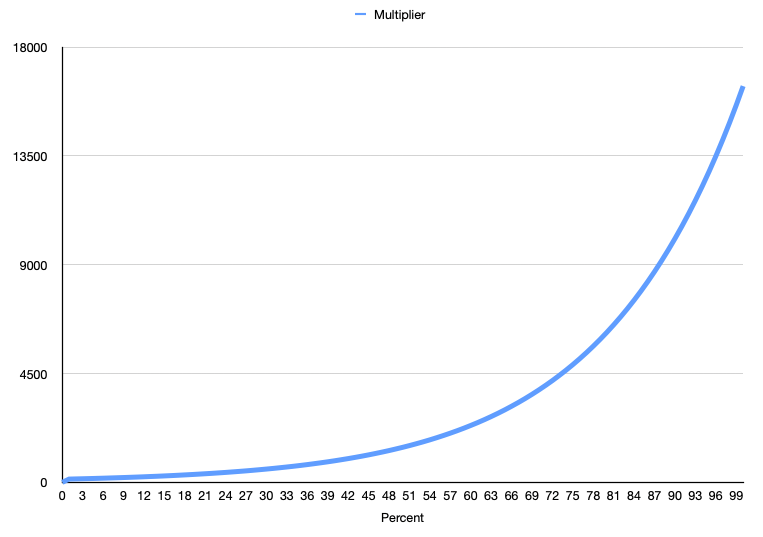

Here's the volume taper as a function of percentage passed to the API - it goes up to 16384 ($4000) at 100%, for the SCAS instruction:

The aim of this is to just use it for application level muting/ramping during start/stop of audio etc. Ideally you don't want to set this level for your master volume all the time because you are losing resolution in the audio. Best to leave it set to 100%.
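For anyone who wants to check the taper numbers, here is a Python model of the percent-to-gain mapping. It assumes QEXP returns 2^(x / 2^27), i.e. a 5-bit integer exponent over a 27-bit fraction, and it truncates the result, so individual values may differ by a count or so from what the CORDIC actually produces. The function name is made up for this sketch.

```python
import math


def hdmi_volume_percent(vol):
    """Model of the percent -> SCAS gain taper for one channel (0..100 in, 0..16384 out)."""
    vol = max(0, min(100, vol))          # same clamping as #>= 0 and <#= 100
    if vol == 0:
        return 0                         # 0% is a hard mute, the exponential is skipped
    # muldiv64(700 - 7*vol, 1<<27, 100): a 0..6.93 octave cut in 5.27 fixed point
    cut = ((700 - 7 * vol) * (1 << 27)) // 100
    exponent = 0x70000000 - cut          # $70000000 is 14.0, so 100% gives 2^14
    return int(2.0 ** (exponent / (1 << 27))) & 0x7FFF


full_scale = hdmi_volume_percent(100)    # 16384 ($4000)
half = hdmi_volume_percent(50)           # 2^10.5, about 1448
half_db = 20 * math.log10(half / full_scale)   # about -21 dB
```

Each 1% step works out to 0.07 of an octave, or about 0.42 dB, so the whole 1..100 range spans roughly 42 dB before the final jump to mute.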

Here's a test binary demo that plays back a WAV file audio out of the HDMI/DVI breakout board and VGA/AV breakout board's headphone port, as well as doing VGA and HDMI compatible video.

To play along and see if it works with your equipment grab your favourite WAV file and name it TESTWAV.WAV and put it in a FAT32 formatted uSD card's root directory and download this binary to the P2. It will boot up and automatically play this file. Needs to be a standard stereo 16 bit WAV file at 44100Hz sample rate.

Fit HDMI breakout on P32

Fit VGA/AV breakout on P24

It should still work if only one board is fitted too.

I've included just the source of the top level SPIN2 file for now so you can see what it does.

Updated ZIP with more interactive keyboard controls during operation. This is not meant to be a real WAV player or anything like that but it's nice to exercise it a bit more.

On the 115200 bps serial port press:

space - toggles play/pause

ESC - quit & restart

] - volume up

[ - volume down

, - seek -5sec

. - seek +5sec

I've managed to get the Audio & Video infoframe and other optional control packets TERC4 encoded in real time in this driver instead of just done once at startup. This change makes more use of chained overlays that can be read in and executed during different stages of the video signal being output (during VFP/VSync/VBP etc). It was a little unwieldy to achieve this but it's seemingly working now and only took two additional COG RAM longs, and four still remain.

For each of the vertical front porch lines generated during blanking, the driver now encodes up to two additional control frames into the data island buffer which gets sent out in a burst on the first vertical sync line. I also limit this additional burst to a maximum of 10x32 pixels to keep the transmission of these packets completing before about 1/2 way through the 640 pixel line which should provide sufficient remaining time for the next audio packet to also be encoded before the line ends.

This means creating the video AVI + audio infoframe would only need a single VFP line (the bare minimum one could set in a heavily reduced blanking scenario). Additional VFP lines allow up to 10 packets to be encoded. I just tested this with my plasma TV using a General Control Packet frame configured to alternate the A/V muting control bits every 2 seconds and noticed it would blank the TV screen and mute the sound output every 2 seconds as expected. I doubt we'd need to send very many different control packets unless later specs have lots of additional packets defined. For now 10 should be plenty and far more than is required.
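The packet budget described above can be sanity checked with a few lines of Python. The constant and function names are only for this sketch; the numbers (two packets per VFP line, a cap of ten 32-pixel packets, a 640 pixel line) come from the description.

```python
PIXELS_PER_PACKET = 32      # one data island packet occupies 32 pixel clocks
MAX_BURST_PACKETS = 10      # cap on the extra burst sent on the first vsync line
PACKETS_PER_VFP_LINE = 2    # control frames encoded during each front porch line
LINE_PIXELS = 640           # active line width used in the description


def burst_packets(vfp_lines):
    """Number of extra packets that can be queued for the first vsync line."""
    return min(vfp_lines * PACKETS_PER_VFP_LINE, MAX_BURST_PACKETS)


def burst_pixels(vfp_lines):
    """Pixel clocks the burst occupies on the first vsync line."""
    return burst_packets(vfp_lines) * PIXELS_PER_PACKET
```

With a single VFP line there is room for exactly two packets (AVI plus audio infoframe), and five or more VFP lines reach the 10 packet cap, which takes 320 pixels or half of the 640 pixel line.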

This feature also means that AV infoframe data can be altered on the fly if you wanted to change between YCbCr and RGB colour format etc. You just need to update the HUB RAM infoFrame data before the next vertical sync time and it will be sent out on the next (first) vertical sync line. A method to sync the change to occur just after a Vsync pulse is provided.

I'm now going to look at using the Vsync scan line time (or perhaps VBP) to create an updated clock regen packet if the sample rate ever changes dynamically. How quickly and well HDMI sinks react to these changes will be device dependent but hopefully it should allow the sample rate to be adjusted after HDMI source startup. I don't think I'll go as far as allowing REPO vs FIFO mode to be altered dynamically though. Once setup the push/pull sampling model will remain fixed in place and only the sample rate will change. From what I can tell I can probably just recompute the N&CTS values from the new sample period, re-encode the two Clock Regeneration Packet formats (one for Vsync lines, and the other for non-Vsync lines), and adjust the SPDIF channel status values and internal COG (re)sampling parameters and it should continue processing. There may be a brief drop out at the sink while it sorts itself out.
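For reference, the N and CTS relationship is 128 * fs = f_TMDS * N / CTS. Below is a small Python sketch of recomputing CTS for a new sample rate. It works from the sample rate rather than the sample period the driver uses, the N values are the commonly recommended ones for these rates, and the 25.2 MHz TMDS clock is just an assumed example (a P2 running at 252 MHz).

```python
# Commonly recommended N values per sample rate (assumed here, not read from the driver).
RECOMMENDED_N = {32000: 4096, 44100: 6272, 48000: 6144}


def clock_regen(tmds_clock_hz, sample_rate_hz):
    """Return (N, CTS) so that 128 * fs = tmds_clock * N / CTS, with CTS rounded."""
    n = RECOMMENDED_N[sample_rate_hz]
    divisor = 128 * sample_rate_hz
    cts = (tmds_clock_hz * n + divisor // 2) // divisor
    return n, cts


n_48k, cts_48k = clock_regen(25_200_000, 48000)
n_44k, cts_44k = clock_regen(25_200_000, 44100)
```

At 25.2 MHz both rates happen to give an exact integer CTS (25200 and 28000), so the sink sees no rounding error there. Other pixel clocks generally won't divide so cleanly.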

So today I have been able to get the HDMI audio sample rate to change on the fly and my plasma TV still recognizes it after the change. It didn't seem to need much special to be done when transitioning between sample rates apart from latching the next SPDIF channel status nibble which gets updated and reloaded every 192 audio samples (so it's not inconsistent temporarily if it happens to get changed right in the middle of the outgoing nibble itself). All other variables are just updated in an instant between generating audio packets and samples continue to be issued throughout. I did reset the internal FIFO as well just in case that might fill up due to changing the drain rate. Audio seemed fine on the LCD HDMI device I have as well, plus the analog DAC pin sample rates are changed on the fly too when configured to be present.
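The latching behaviour can be modelled roughly like this in Python. It is only a sketch of the idea, not the driver's code: the class name is invented and the nibble values in the usage lines are placeholder codes. A requested change is parked in a pending slot and only becomes active when the 192-sample channel status block restarts.

```python
class ChannelStatusLatch:
    """Applies a new channel status nibble only at a 192-sample block boundary."""

    BLOCK_SAMPLES = 192

    def __init__(self, nibble):
        self.active = nibble       # value currently being shifted out
        self.pending = nibble      # value to pick up at the next block start
        self.sample_index = 0

    def request(self, nibble):
        """Called at any time, e.g. from the sample rate change API."""
        self.pending = nibble

    def next_sample(self):
        """Return the nibble in effect for this audio sample."""
        if self.sample_index == 0:
            self.active = self.pending   # reload happens only at the block start
        self.sample_index = (self.sample_index + 1) % self.BLOCK_SAMPLES
        return self.active


latch = ChannelStatusLatch(0x0)
before = [latch.next_sample() for _ in range(100)]
latch.request(0x2)                       # change arrives mid-block
rest_of_block = [latch.next_sample() for _ in range(92)]
first_of_next_block = latch.next_sample()
```

The samples remaining in the current block still carry the old value, so the sink never sees a block with a half-updated status word.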

The TV mutes out for about 1/4 of a second or so during the audio transitions both to higher and to lower sample rates. Thankfully no crackles / pops were heard although it may be content dependent (I was just listening to a square wave fixed tone on one channel plus a sinusoidal wave on the other channel and hearing the pitch alternate as it swapped between 44.1/48). The HDMI LCD panel doesn't seem to have much if any resync delay and the transitions there were okay too.

Most of the LUTRAM space was used with the overlays and I still had around 5 free LUTRAM locations left over in the worst case. The overlay code wasn't extensively optimized space wise - it didn't need to be as it all still fits in fine and there is sufficient time to compute and encode two clock regen packets and still reload the overlay and encode an audio packet in time for the next scan line.

This was tested in FIFO mode, where the HDMI driver effectively pulls samples from a FIFO. I still need to check whether REPO pin mode can have its sample rate changed. I'm not so sure it makes sense there if it's mainly used by fixed sample rate emulators, unless they can also be changed dynamically. But I'll take a look - it's probably possible to make that work too. Just not switching between the two modes on the fly.

There should never be any pops, the sink is supposed to avoid generating loud noises on receive errors. I certainly haven't heard any during any of this.

@Wuerfel_21 said:

There should never be any pops, the sink is supposed to avoid generating loud noises on receive errors. I certainly haven't heard any during any of this.

That's helpful if it keeps it quiet. I was wondering if I'd need to use those flat sample bits to mute anything during this change or the AV mute feature with the general control packet. But it didn't seem to need it in my setup at least. I also have that master volume control which could also perform a mute if it was necessary. The video never lost sync during this too which is nice, as HDMI links can be slow to recover at times.

Just tried changing the audio sample rate in REPO mode. Getting a far more abrupt click/pop when changing the rate using the HDMI LCD panel I have, and the TV audio cuts in/out a bit with this after the transition. Not sure which is the bare minimum of all the resampling parameters to recompute/reset so I did all of them :

recomputed with new values:

resample_ratio_int

resample_ratio_frac

resample_antidrift_period

resample_antidrift_phase

hdmi_regen_period

zeroed:

hdmi_regen_counter

resample_phase_int

resample_phase_frac

audio_inbuf_level

It's probably related to the fact that the generator COG doesn't update its own audio period at the exact same instant the video driver does (HDMI audio update gets delayed to the first VBP line after the call). Will have to try to wait in the API until then before returning to see if that helps a bit.

    'video driver API code to update the driver with the new sample rate
    PUB setSampleRate(display, rate) : audio_period
        if rate < 0
            audio_period := -rate
        elseif rate
            audio_period := (clkfreq + rate/2) / rate
        if audio_period
            long[display][15] := audio_period

    ' sound generation COG routine updates the period after each sample in case it changes
    PUB soundgen(mbpin, period_addr) | time, phase, sample, freq, inc, period
        time := getct()
        freq := 1<<20
        inc := 0
        repeat
            period := long[period_addr] ' update any new sample rate
            sample.word[1] := polxy($7FFF, phase += freq)
            sample.word[0] := (inc++ & $20) ? $7fff : $8000
            waitct(time += period)
            wxpin(mbpin, sample)

    ... ' this is the test code
    repeat
        waitms(5000)
        audio_period := video.setSampleRate(display, 44100)
        waitms(5000)
        audio_period := video.setSampleRate(display, 48000)
    ...
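The period calculation in setSampleRate is a rounded integer division, with a negative argument treated as a raw period in clocks. A quick Python model of just that arithmetic, assuming a 252 MHz clkfreq purely as an example:

```python
def audio_period(clkfreq, rate):
    """Clocks per audio sample: rate > 0 is in Hz, rate < 0 is a raw period, 0 is ignored."""
    if rate < 0:
        return -rate                          # caller supplied the period directly
    if rate:
        return (clkfreq + rate // 2) // rate  # adding rate/2 rounds to the nearest clock
    return 0                                  # 0 means no change gets written


CLKFREQ = 252_000_000                         # assumed system clock for this example
period_48k = audio_period(CLKFREQ, 48000)     # 5250 clocks, exact
period_44k = audio_period(CLKFREQ, 44100)     # 5714 clocks
actual_44k = CLKFREQ / period_44k             # rate really produced, about 44102.2 Hz
```

At this clock 48 kHz divides exactly, while 44.1 kHz lands about 2 Hz high because the period has to be a whole number of clocks.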

Changed to this to test resyncing the audio:

    PUB setSampleRate(display, rate) : audio_period
        if rate < 0
            audio_period := -rate
        elseif rate
            audio_period := (clkfreq + rate/2) / rate
        if audio_period
            waitForVsync(display) ' waits for start of Vsync
            long[display][15] := audio_period
            waitForBlanking(display) ' waits for start of VBP (when audio is updated)

Result was nicer for higher->lower sample rate transitions but I get a pop from lower->higher sample rate transitions on the HDMI LCD. Must be underflowing/overflowing and resyncing. Still happens if I don't reset the audio_inbuf_level variable too.

Based on this it'll probably be best to co-ordinate any sample rate changing in the REPO mode with a muting operation.

Here's a quick update to that WAV file demo I posted earlier. Now scans the root folder of the uSD card and plays through each WAV file found instead of requiring a hard coded file name. Best use 8.3 file names.

@Wuerfel_21 said:

I still contest that no source is clown behaviour here.

Not ready. APIs aren't done. Plus still need to find out about whether any licensing stuff applies somehow. Ideally it would not, but probably safest to check this.

Just managed to get the final piece I wanted into this driver. I've been wanting to add a capability to notify clients of the scan line changing or vertical sync occurrence using a COGATN to signal a specific group of COGs. That would be useful for syncing things like frame buffer flips without polling the status all the time and for sprite drivers which depend on waiting for scan lines to be completed before starting their next render of the small group of line buffers etc. You could do this before by polling a status long in HUB RAM but using ATN in addition can be helpful in some more synchronous situations and can speed things up with simple JATN polling instructions, or be used to trigger interrupts.

There was still room in this experimental HDMI driver variant to add this in because it only needed one COGRAM long thankfully, but the original "basic" p2 video driver had a problem as its COG RAM was totally full and I'm trying to keep the feature set the same across both drivers. The P2 video driver's hsync is coded differently for doing the PAL/NTSC colour burst stuff and I actually needed two places for the COGATN instruction to be executed from depending on vsync or hsync selection. Due to being so packed it was very hard to free anything up but today I finally managed to scavenge two longs for this feature, one from a variable that was only used in GFX mode and could therefore be shared with the font RAM area used by text mode, the other from using a single wide vs double wide text process optimization I found recently coding the HDMI variant. That was the key to finding enough space for this to be put in. This still needs to be tested but should work out okay.

With this final feature that's all the stuff I wanted to fit into this driver now. If HDMI related bugs are found there are still 4 COGRAM longs free in the HDMI variant which feels like luxury compared to the original driver. I could always free a lot of room if I migrated over to the LUT RAM overlay approach there too, but I do quite like the self-contained operation of the original driver, which keeps it solid and basically impossible to lock up.

Here are the extended flags for controlling this new feature (only the lower two flags were defined before). You can choose which COGs are to be notified in the upper 8 bits of the flags, and whether to enable only on a vsync line or all lines (at hsync time). Thinking about it more right now - perhaps the COGATN_ENABLE flag itself is redundant, as you could simply pass a 0 into the COGATN byte mask to achieve the same outcome, so that bit might be removed and freed for other future config.

    ' startup flags
    FORCE_MONO_TEXT = (1<<20) ' 1 = only output mono text, 0 = output colour if possible
    WAIT_ATN_START  = (1<<21) ' 1 = wait for ATN signal to start, 0 = start immediately
    COGATN_SYNC     = (1<<22) ' 1 = vsync only COGATN, 0 = all line COGATN
    COGATN_ENABLE   = (1<<23) ' 1 = enable COGATN, 0 = disable COGATN
    COGATN_MASK     = ($FF<<24) ' COGATN mask for ATN notification

Now there is mainly testing, cleanup and some API stuff to go.

EDIT: I changed the bits above to now mean the following. This may be more useful if you just needed to wait for the video driver to start to trigger something else but didn't want repeated ATNs after that. If you don't want any ATNs at all you simply leave %00000000 as the mask.

    ' startup flags
    FORCE_MONO_TEXT = (1<<20) ' 1 = only output mono text, 0 = output colour if possible
    WAIT_ATN_START  = (1<<21) ' 1 = wait for ATN signal to start, 0 = start immediately
    COGATN_VSYNC    = (1<<22) ' 1 = vsync only COGATN, 0 = all line COGATN
    COGATN_STARTUP  = (1<<23) ' 1 = single COGATN after starting, otherwise continuous notification
    COGATN_MASK     = ($FF<<24) ' COGATN byte mask within flags, use 00000000 for no ATNs
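As a sketch of how a client might put these flags together, here is a Python model of the bit packing. It assumes bit n of the mask byte corresponds to COG n, which is how COGATN masks normally work, and the helper name and its parameters are invented for the example.

```python
FORCE_MONO_TEXT = 1 << 20
WAIT_ATN_START = 1 << 21
COGATN_VSYNC = 1 << 22
COGATN_STARTUP = 1 << 23
COGATN_MASK = 0xFF << 24


def startup_flags(cog_ids, vsync_only=True, startup_only=False):
    """Build the flags long: which COGs get ATNs, and when."""
    mask = 0
    for cog in cog_ids:
        if not 0 <= cog <= 7:
            raise ValueError("P2 cog IDs run from 0 to 7")
        mask |= 1 << cog                   # assumed mapping: bit n notifies COG n
    flags = (mask << 24) & COGATN_MASK
    if vsync_only:
        flags |= COGATN_VSYNC              # one ATN per frame instead of per line
    if startup_only:
        flags |= COGATN_STARTUP            # single ATN once the driver has started
    return flags


frame_sync = startup_flags([1, 3])         # notify COGs 1 and 3 on every vsync
no_atn = startup_flags([])                 # empty mask, so no ATNs are ever sent
```

An empty COG list leaves the mask byte at zero, which is the "no ATNs" case, so no separate enable bit is needed.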

@evanh said:

Hehe, now you're done, I'm with Ada. I doubt polarity has mattered, even for analogue monitors, for many decades.

LOL. So I just found out today on my Dell DVI monitor that polarity might still matter - admittedly it's an older monitor although it still was from the 2000's. When I altered my timing structure today and tested it afterwards I must have been screwed up by precedence rules and the computed flags polarity value was not correct in some cases. The Dell would not sync to it in the native resolution and would just go into power saving mode. I added parentheses around the VS * SYNC_NEG | HS * SYNC_POS calculation which altered the computed polarity value and this fixed it and a video image was displayed. Similar thing happened to the 1600x1200 mode too which was all squished into the middle of the screen with the bad polarity settings and yet nicely displayed when this line got fixed. So this monitor seems to make use of sync polarity in some way.

    ' sync polarities
    SYNC_POS = 1
    SYNC_NEG = 0

    ' polarity flag masks
    VS = (1<<1)
    HS = (1<<0)

    wuxga_timing ' experimental 1920x1200@60Hz for Dell 2405FPW at 77*4 MHz YMMV
        long 0 ' Optional clock mode, also forces the operating frequency, or use 0 to auto calculate from P2 frequency next
        long 308000000 ' Desired P2 operating frequency, or 0 to preserve existing PLL settings and operating frequency
        long 2<<8 ' XFREQ or P2 pixelclk multiplier<<8 (LSB is optional fractional portion of multiplier), forced to 10<<8 for DVI
        long 0 ' CFREQ (PAL/NTSC)
        ' breezeway[31:24] | colorburst[23:16] | reserved[15:2] | polarity flags[1:0]
        long 0<<24 | 0<<16 | 0<<2 | (VS*SYNC_NEG) | (HS*SYNC_POS)
        word 16 ' horizontal front porch pixels
        word 16 ' horizontal sync pixels
        word 128 ' horizontal back porch pixels
        word 1920/8 ' horizontal displayed columns (active pixels/8)
        word 8 ' vertical front porch scan lines
        word 3 ' vertical sync scan lines
        word 23 ' vertical back porch scan lines
        word 1200 ' vertical active displayed scan lines
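To show why the parentheses matter, here is the polarity expression evaluated both ways in Python. This assumes that Spin2 binds | tighter than *, which is my reading of its operator precedence and the opposite of Python and C, so the unparenthesized Spin2 form is written out with explicit grouping.

```python
SYNC_POS, SYNC_NEG = 1, 0
VS, HS = 1 << 1, 1 << 0

# What was meant: each mask scaled by its own polarity, then the two results OR'ed.
intended = (VS * SYNC_NEG) | (HS * SYNC_POS)

# How VS * SYNC_NEG | HS * SYNC_POS would group if | is evaluated before *.
misparsed = VS * (SYNC_NEG | HS) * SYNC_POS
```

The intended value is %01 (HS positive, VS negative), while the misparsed one comes out as %10, which swaps the two polarities. That would be consistent with the monitor refusing to sync until the parentheses were added.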

Interesting observation: @MXX sent me a board with a built-in HDMI port. My HDMI driver didn't work until I added P_SYNC_IO to the drive mode: TMDS_DRIVE_MODE = P_REPOSITORY|P_OE|P_LOW_FAST|P_HIGH_1K5|P_SYNC_IO

The port is on basepin 0, maybe something weird with that group?

@Wuerfel_21 said:

Interesting observation: @MXX sent me a board with a built-in HDMI port. My HDMI driver didn't work until I added P_SYNC_IO to the drive mode: TMDS_DRIVE_MODE = P_REPOSITORY|P_OE|P_LOW_FAST|P_HIGH_1K5|P_SYNC_IO

The port is on basepin 0, maybe something weird with that group?

Probably pin timing skew related. Using P_SYNC_IO might be a good idea anyway, in order to latch the output data on a clock edge and keep the internal async delays between different pins small, especially since it's a differential signal with a neighbouring pin. I wonder if it would work without the REPO mode pin level settings? i.e., go back to using the bitDAC on that port without REPO mode enabled and see if that is good or bad.

BitDAC is fine - I think the DAC is always synchronous. Also, I only saw this issue with my ASUS monitor. VisionRGB card takes anything just fine. (May also be related to length and quality of cable)

Looking into this change to my video driver, I found that having HDMI/HDMI+VGA/DVI+VGA coded separately from the current P2 video driver (which does CVBS/S-Video/HD/SDTV component and VGA analog outputs) means I can probably remove the DVI code from the original driver, as well as the software pixel doubling it uses. DVI can be covered by this HDMI driver variant as a degenerate case, and the pixel doubling in the original driver could instead be done by changing XFREQ dynamically like Wuerfel_21 does in NeoVGA. Doing that should buy me something like 40 longs of free COG RAM, which I really like as it provides more space for different sync handling. That could clean up a few issues regarding proper interlaced sync support for VGA, and maybe leave space to do correct colour burst blanking for PAL. It should also allow features like AHD/TVI/CVI CCTV video stuff to fit using more sync overlays loaded at startup time. I'm going to try to proceed with this idea.

@TonyB_ said:

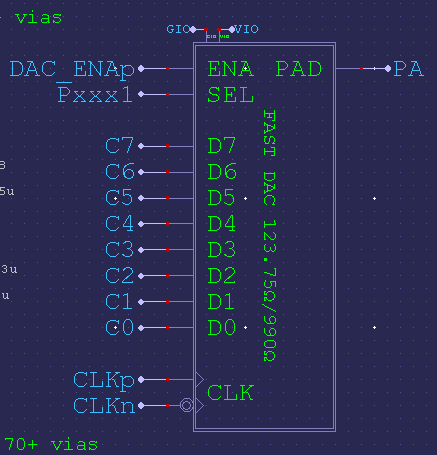

What two BitDAC levels are people using for DVI/HDMI?

So for my driver I used %1111_0111 for the DDDDDDDD bits, making 15/15ths and 7/15ths of the DAC full-scale range for 1 and 0 respectively. I also set up the pin mode with 123.75 ohm source resistance and the 3.3V output DAC.
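Decoding that setting is just a matter of splitting the byte into two nibbles. Here is a small Python sketch, where the function name is invented and the voltages are unloaded figures that ignore the 123.75 ohm source resistance and the sink's termination:

```python
def bitdac_levels(dddddddd, vref=3.3):
    """Split the BitDAC byte into (high, low) 4-bit levels and their unloaded voltages."""
    high = (dddddddd >> 4) & 0xF     # level driven for a logic 1
    low = dddddddd & 0xF             # level driven for a logic 0
    return high, low, vref * high / 15, vref * low / 15


high, low, v_high, v_low = bitdac_levels(0b1111_0111)
```

For %1111_0111 this gives levels 15 and 7, i.e. 3.3 V and about 1.54 V before any loading.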

@Rayman said:

Would be nice to have hdmi jukebox of some kind going

Yeah it's a good sample/demo app for streaming audio over HDMI. You could add some nice graphics and UI for selecting music etc to be played off SD, and add some nice visualizations, audio waveform stuff etc. I started down that road with a rudimentary WAV player just in simple text mode but stopped after I realized I had quite a bit more cleanup to do before things were going to be stable enough to code to with something releasable etc. And now I'm tied up trying to improve the original driver with PAL/NTSC fixes.


What's this BS, where's the sauce? I always post my sauce, it goes on everything!

As my mother says, all good things come to those who wait.

Let me know if it works okay with your devices Ada. Cheers.

Nah, no sauce -> no debug. You're betraying 300 YEARS OF EXCELLENCE in ALL-PURPOSE SEASONING.


The silly test:

    repeat
        waitms(5000)
        video.setSampleRate(display, 44100)
        waitms(5000)
        video.setSampleRate(display, 48000)


HDMI board on P32, VGA/AV board on P24.

Press ? to get key help @ 115200bps.

I still contest that no source is clown behaviour here.

Not ready. APIs aren't done. Plus still need to find out about whether any licensing stuff applies somehow. Ideally it would not, but probably safest to check this.

Just managed to get the final piece I wanted into this driver. I've been wanting to add a capability to notify clients of the scan line changing or vertical sync occurrence using a COGATN to signal a specific group of COGs. That would be useful for syncing things like frame buffer flips without polling the status all the time and for sprite drivers which depend on waiting for scan lines to be completed before starting their next render of the small group of line buffers etc. You could do this before by polling a status long in HUB RAM but using ATN in addition can be helpful in some more synchronous situations and can speed things up with simple JATN polling instructions, or be used to trigger interrupts.

There was still room in this experimental HDMI driver variant to add this in because it only needed one COGRAM long thankfully, but the original "basic" p2 video driver had a problem as its COG RAM was totally full and I'm trying to keep the feature set the same across both drivers. The P2 video driver's hsync is coded differently for doing the PAL/NTSC colour burst stuff and I actually needed two places for the COGATN instruction to be executed from depending on vsync or hsync selection. Due to being so packed it was very hard to free anything up but today I finally managed to scavenge two longs for this feature, one from a variable that was only used in GFX mode and could therefore be shared with the font RAM area used by text mode, the other from using a single wide vs double wide text process optimization I found recently coding the HDMI variant. That was the key to finding enough space for this to be put in. This still needs to be tested but should work out okay.

With this final feature that's all the stuff I wanted to fit into this driver now. If HDMI related bugs are found there are still 4 COGRAM longs free in the HDMI variant which feels like luxury compared to the original driver. I could always free a lot of room if I migrated over to the LUT RAM overlay approach there too, but I do quite like the self-contained operation of the original driver, which keeps it solid and basically impossible to lock up.

Here are the extended flags for controlling this new feature (only the lower two flags were defined before). You can choose which COGs are to be notified in the upper 8 bits of the flags, and whether to enable only on a vsync line or all lines (at hsync time). Thinking about it more right now - perhaps the COGATN_ENABLE flag itself is redundant, as you could simply pass a 0 into the COGATN byte mask to achieve the same outcome, so that bit might be removed and freed for other future config.

    ' startup flags
    FORCE_MONO_TEXT= (1<<20) ' 1 = only output mono text, 0 = output colour if possible
    WAIT_ATN_START = (1<<21) ' 1 = wait for ATN signal to start, 0 = start immediately
    COGATN_SYNC    = (1<<22) ' 1 = vsync only COGATN, 0 = all line COGATN
    COGATN_ENABLE  = (1<<23) ' 1 = enable COGATN, 0 = disable COGATN
    COGATN_MASK    = ($FF<<24) ' COGATN mask for ATN notification

Now there is mainly testing, cleanup and some API stuff to go.
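To make the bit layout concrete, here is a small Python model of packing a COG mask into the upper byte of the startup flags as defined above. It only illustrates the bit positions. The helper names `cogatn_flags` and `notified_cogs` are invented for the sketch, and it assumes bit n of the mask byte selects COG n, as with the mask given to COGATN.

```python
# Flag values copied from the startup flags listed above.
FORCE_MONO_TEXT = 1 << 20
WAIT_ATN_START = 1 << 21
COGATN_SYNC = 1 << 22
COGATN_ENABLE = 1 << 23
COGATN_MASK = 0xFF << 24


def cogatn_flags(cogs, vsync_only):
    """Build the COGATN part of the startup flags (hypothetical helper)."""
    mask = 0
    for cog in cogs:
        if not 0 <= cog <= 7:
            raise ValueError("the P2 has COGs 0..7")
        mask |= 1 << cog  # assumption: bit n of the mask byte = COG n
    flags = COGATN_ENABLE | (mask << 24)
    if vsync_only:
        flags |= COGATN_SYNC
    return flags


def notified_cogs(flags):
    """Decode which COGs a flags value would notify (hypothetical helper)."""
    mask = (flags & COGATN_MASK) >> 24
    return [cog for cog in range(8) if (mask >> cog) & 1]


flags = cogatn_flags([2, 5], vsync_only=True)
print(hex(flags), notified_cogs(flags))
```

Passing an empty list gives a zero mask byte, which is the "no COGs notified" case that makes a separate enable bit look redundant.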

EDIT: I changed the bits above to now mean the following. This may be more useful if you just needed to wait for the video driver to start to trigger something else but didn't want repeated ATNs after that. If you don't want any ATNs at all you simply leave %00000000 as the mask.

    ' startup flags
    FORCE_MONO_TEXT= (1<<20) ' 1 = only output mono text, 0 = output colour if possible
    WAIT_ATN_START = (1<<21) ' 1 = wait for ATN signal to start, 0 = start immediately
    COGATN_VSYNC   = (1<<22) ' 1 = vsync only COGATN, 0 = all line COGATN
    COGATN_STARTUP = (1<<23) ' 1 = single COGATN after starting, otherwise continuous notification
    COGATN_MASK    = ($FF<<24) ' COGATN byte mask within flags, use 00000000 for no ATNs

LOL. So I just found out today on my Dell DVI monitor that polarity might still matter - admittedly it's an older monitor although it still was from the 2000's. When I altered my timing structure today and tested it afterwards I must have been screwed up by precedence rules and the computed flags polarity value was not correct in some cases. The Dell would not sync to it in the native resolution and would just go into power saving mode. I added parentheses around the VS * SYNC_NEG | HS * SYNC_POS calculation which altered the computed polarity value and this fixed it and a video image was displayed. Similar thing happened to the 1600x1200 mode too which was all squished into the middle of the screen with the bad polarity settings and yet nicely displayed when this line got fixed. So this monitor seems to make use of sync polarity in some way.
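A likely explanation for the precedence surprise, assuming the Spin2 operator table where the bitwise `|` binds tighter than `*` (the opposite of C and Python): the unparenthesized expression groups as `VS * (SYNC_NEG | HS) * SYNC_POS`. The Python sketch below writes out both groupings explicitly to show the difference. It models the arithmetic only and uses the constant values from the timing code.

```python
SYNC_POS = 1
SYNC_NEG = 0
VS = 1 << 1  # vsync polarity flag, bit 1
HS = 1 << 0  # hsync polarity flag, bit 0

# How "VS * SYNC_NEG | HS * SYNC_POS" would group if | binds tighter than *
# (assumed Spin2 behaviour, written out with explicit parentheses here).
as_parsed = VS * (SYNC_NEG | HS) * SYNC_POS

# What was intended, and what the added parentheses produce.
as_intended = (VS * SYNC_NEG) | (HS * SYNC_POS)

print(f"as parsed:   %{as_parsed:02b}")
print(f"as intended: %{as_intended:02b}")
```

Under that assumption the two results have both polarity bits flipped relative to each other, which would fit a monitor refusing to sync until the parentheses went in.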

    ' sync polarities
    SYNC_POS = 1
    SYNC_NEG = 0
    ' polarity flag masks
    VS = (1<<1)
    HS = (1<<0)

    wuxga_timing ' experimental 1920x1200@60Hz for Dell 2405FPW at 77*4 MHz YMMV
        long 0          ' Optional clock mode, also forces the operating frequency, or use 0 to auto calculate from P2 frequency next
        long 308000000  ' Desired P2 operating frequency, or 0 to preserve existing PLL settings and operating frequency
        long 2<<8       ' XFREQ or P2 pixelclk multiplier<<8 (LSB is optional fractional portion of multiplier), forced to 10<<8 for DVI
        long 0          ' CFREQ (PAL/NTSC)
        ' breezeway[31:24] | colorburst[23:16] | reserved[15:2] | polarity flags[1:0]
        long 0<<24 | 0<<16 | 0<<2 | (VS*SYNC_NEG) | (HS*SYNC_POS)
        word 16         ' horizontal front porch pixels
        word 16         ' horizontal sync pixels
        word 128        ' horizontal back porch pixels
        word 1920/8     ' horizontal displayed columns (active pixels/8)
        word 8          ' vertical front porch scan lines
        word 3          ' vertical sync scan lines
        word 23         ' vertical back porch scan lines
        word 1200       ' vertical active displayed scan lines

Interesting observation: @MXX sent me a board with a built-in HDMI port. My HDMI driver didn't work until I added P_SYNC_IO to the drive mode:

    TMDS_DRIVE_MODE = P_REPOSITORY|P_OE|P_LOW_FAST|P_HIGH_1K5|P_SYNC_IO

The port is on basepin 0, maybe something weird with that group?

Probably pin timing skew related. Using P_SYNC_IO might be a good idea anyway, in order to latch the output data on a clock edge and keep the internal async delays between different pins small, especially since it's a differential signal with a neighbouring pin. I wonder if it would work without the REPO mode pin level settings? i.e., go back to using the bitDAC on that port without REPO mode enabled and see if that is good or bad.

BitDAC is fine - I think the DAC is always synchronous. Also, I only saw this issue with my ASUS monitor. VisionRGB card takes anything just fine. (May also be related to length and quality of cable)

Looking into this change to my video driver, I found that by having HDMI/HDMI+VGA/DVI+VGA coded separately from the current P2 video driver (which does CVBS/S-Video/HD/SDTV component & VGA analog outputs), I can probably remove the DVI code from the original driver as well as the software pixel doubling it uses. DVI can be covered by this HDMI driver variant as a degenerate case, and the pixel doubling in the original driver could instead be done by changing XFREQ dynamically like Wuerfel_21 does in NeoVGA. Doing that should buy me something like 40 longs of free COG RAM, which I really like as it provides more space for different sync handling. That could clean up a few issues regarding proper interlaced sync support for VGA and maybe leave space to do correct colour burst blanking for PAL. It should also allow features like AHD/TVI/CVI CCTV video stuff to fit using more sync overlays loaded at startup time. I'm going to try to proceed with this idea.

I concur. The DAC's schematic function block has a clock input.

What two BitDAC levels are people using for DVI/HDMI?

So for my driver, %1111_0111 is the value I used for the DDDDDDDD bits, making 15/15ths and 7/15ths of the DAC full-scale range for 1 and 0 respectively. I also set up the pin mode with 123.75 ohm source resistance and the 3.3V output DAC.
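As a quick sanity check on those numbers, here is a Python sketch that splits the DDDDDDDD byte into its two nibbles and converts them to ideal unloaded voltages. It assumes the high nibble sets the "1" level and the low nibble sets the "0" level, each in fifteenths of a 3.3 V range, as described above. The function name `bitdac_levels` is made up, and the source resistance and cable termination are ignored.

```python
def bitdac_levels(dddddddd: int, vref: float = 3.3):
    """Return (high_volts, low_volts) for a BitDAC level byte.

    Assumes: high nibble = level for logic 1, low nibble = level for logic 0,
    each expressed in 1/15 steps of the DAC range (ideal, unloaded).
    """
    high_code = (dddddddd >> 4) & 0xF
    low_code = dddddddd & 0xF
    return high_code / 15 * vref, low_code / 15 * vref


high_v, low_v = bitdac_levels(0b1111_0111)
print(f"1 -> {high_v:.2f} V, 0 -> {low_v:.2f} V, swing {high_v - low_v:.2f} V")
```

The actual levels at the receiver will differ once the 123.75 ohm source resistance works against the far-end termination.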

Would be nice to have hdmi jukebox of some kind going

Yeah it's a good sample/demo app for streaming audio over HDMI. You could add some nice graphics and UI for selecting music etc to be played off SD, and add some nice visualizations, audio waveform stuff etc. I started down that road with a rudimentary WAV player just in simple text mode but stopped after I realized I had quite a bit more cleanup to do before things were going to be stable enough to code to with something releasable etc. And now I'm tied up trying to improve the original driver with PAL/NTSC fixes.

Is it just stereo with hdmi? Or do we know how to do better? Wonder how that would work..