Broken tool detection with machine vision

ManAtWork

Posts: 2,292

My new automatic tool changer means I can let the machine run unattended with longer jobs and multiple tools. But this also means if something goes wrong in the middle it can get more expensive. Sometimes the cutter breaks which is no big deal if you notice it. You stop the machine, change the cutter and continue.

But if unattended, the machine keeps running without removing any material. Let's assume we want to tap pre-drilled holes. If the drill bit breaks, the machine changes to the tap and ruins it by ramming it into the solid material where the pre-drilled holes should be but aren't.

The usual fix to this is to measure the tool length with a touch probe or an optical laser barrier, once after the tool has been picked up from the tool changer and once again before it is stored back. But this takes time as the machine has to move to the location of the probe and then has to slowly advance the Z axis until the tool tip hits the probe or laser beam.

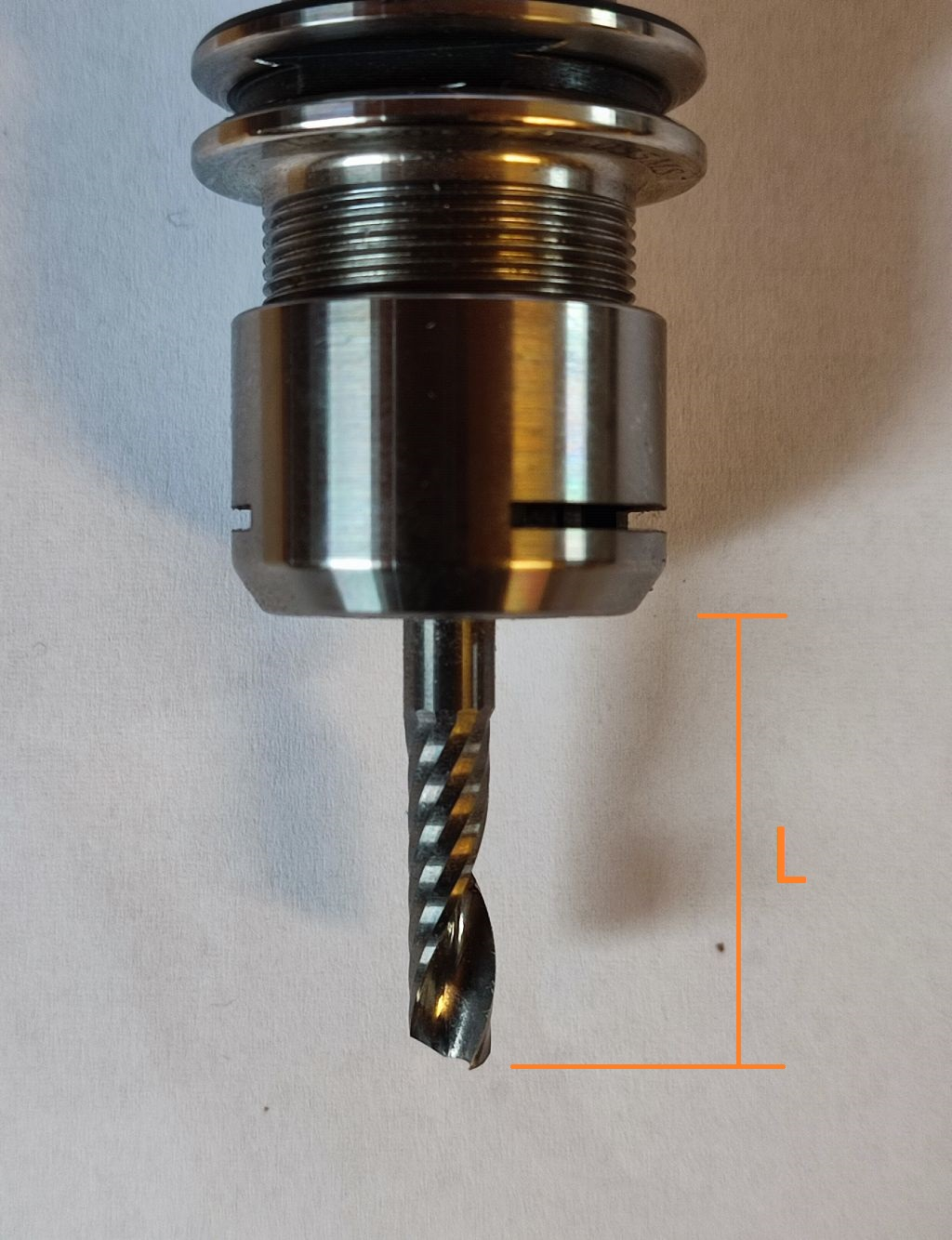

Inspired by the latest "AI & Vision with the cyber:bot and MakeCode" tutorial a better idea would be to do this with a camera and simply compare the "before" and "after" pictures. They could look something like this:

The point of interest is the distance from the collet to the lowest point of the cutter tip.

This point could be on a different side after the cutting process due to a different rotation of the tool.

But the overall length should stay the same within some small tolerance. If the length differs considerably then there's something broken.

I did some experiments with machine vision a long time ago. At that time this was usually done with a PC, an industrial camera and a frame grabber card, and you could easily spend several thousand dollars on it. Today, there are small gadgets like the HuskyLens for less than $100. But I don't know if this particular thing is suitable. It looks like a "toy" for students with a very limited set of features like face or color recognition.

Does anybody here have experience with machine vision and can recommend something that can easily be programmed and tuned?

Comments

Well, I have some experience with using the Edge Impulse machine learning platform, have written numerous tutorials for them, e.g. this project write-up.

Machine learning is however not the optimal solution for every use case, in some cases simpler machine vision algorithms might be better. I recommend you look at any of the OpenMV cams as they support both traditional machine vision as well as machine learning methods.

OpenMV has some new camera models coming soonish; here is a presentation video.

Ah thanks. That's a good starting point. I also understand that AI and "machine learning" might not be the optimal solution. At least, training an AI only with several example pictures won't work. It will be required to define as exactly as possible what parameters to focus on and what can be ignored. Otherwise a small change in illumination or dirt/chips can cause false alarms. But that's true for all projects, not just AI. The better you define what you want the better the output and the less time is spent on fixing mistakes.

Just for fun I asked ChatGPT for its opinion on using any OpenMV camera in this scenario. While I cannot comment on its correctness, it might give some ideas.

Using an OpenMV Camera for CNC Tool Integrity Monitoring

Author: Thomas Vikström (via ChatGPT)

Date: 2025-10-17 17:08 (Europe/Helsinki)

Problem description

An automatic tool changer allows long, unattended CNC operations. However, if a cutter or drill breaks during machining, the machine may continue executing toolpaths, damaging the workpiece or even destroying subsequent tools. For example, if a pre-drill breaks, a following tap might crash into solid material instead of a hole.

Traditional solutions use a touch probe or laser barrier to verify tool length before and after machining. These methods work but are slow, requiring the spindle to move to a specific station and advance slowly along the Z-axis. The goal is to achieve the same verification using a camera that compares “before” and “after” images to detect tool loss or breakage.

Solution concept with OpenMV

An OpenMV camera can quickly capture a silhouette of the tool at a fixed inspection point. By measuring the tip position or visible tool length in pixels and comparing it to a learned reference, the system determines whether the tool is intact, chipped, or missing. This process is nearly instantaneous and requires only a quick move to a fixed inspection coordinate.

Implementation details

… `CHECK TOOL` command and waits for an OK/FAIL signal.

CNC integration example

Method A – USB serial

- `LEARN,23` → stores Tool 23’s reference length
- `CHECK,23` → compares current to stored length
- `OK` or `FAIL Δ=0.3mm`

Method B – Digital I/O

Full OpenMV Python program

```python
# Program: OpenMV tool-tip silhouette length check (learn+verify) for CNC tool integrity - 2025-10-17 16:57 - Thomas Vikström
import sensor, image, time, pyb, ujson, math

PIXELS_PER_MM = 12.0
THRESHOLD = 0.25
ROI = (60, 20, 200, 200)
LED_BACKLIGHT = pyb.LED(1)
OK_PIN = pyb.Pin('P7', pyb.Pin.OUT_PP)
SER = pyb.USB_VCP()
REF_PATH = '/tool_refs.json'


def load_refs():
    try:
        with open(REF_PATH, 'r') as f:
            return ujson.loads(f.read())
    except:
        return {}


def save_refs(refs):
    with open(REF_PATH, 'w') as f:
        f.write(ujson.dumps(refs))


def setup_camera():
    sensor.reset()
    sensor.set_pixformat(sensor.GRAYSCALE)
    sensor.set_framesize(sensor.VGA)
    sensor.skip_frames(time=500)
    sensor.set_auto_gain(False)
    sensor.set_auto_whitebal(False)
    sensor.set_auto_exposure(False, exposure_us=1000)
    return time.clock()


def measure_tip_y(img):
    r = img.copy(roi=ROI)
    # binary() turns in-range pixels white; invert=True keeps the dark tool
    # black on a white background, so the "non-white row" scan below finds the tip
    r.binary([(0, 80)], invert=True)
    x0, y0, w, h = ROI
    # scan from the bottom up for the first row that is not pure background
    for j in range(h-1, -1, -1):
        if r.get_statistics(roi=(0, j, w, 1)).l_mean() < 250:
            return y0 + j
    return None


def mm_from_pixels(py):
    _, y0, _, _ = ROI
    return (py - y0) / PIXELS_PER_MM


def capture_length_mm():
    LED_BACKLIGHT.on()
    img = sensor.snapshot()
    LED_BACKLIGHT.off()
    py = measure_tip_y(img)
    if py is None:
        return None
    return mm_from_pixels(py)


def reply(msg):
    try:
        SER.write((msg + '\n').encode())
    except:
        pass


def main():
    clock = setup_camera()
    refs = load_refs()
    OK_PIN.high()
    reply('READY')
    buf = b''
    while True:
        clock.tick()
        if SER.any():
            buf += SER.read()
            if b'\n' in buf:
                line, _, buf = buf.partition(b'\n')
                try:
                    cmd = line.decode().strip()
                except:
                    continue
                parts = cmd.split(',')
                if len(parts) >= 2 and parts[0].upper() in ('LEARN', 'CHECK'):
                    action, tool_id = parts[0].upper(), parts[1].strip()
                    length = capture_length_mm()
                    if length is None:
                        OK_PIN.low()
                        reply('FAIL no_silhouette')
                        continue
                    if action == 'LEARN':
                        refs[tool_id] = round(length, 4)
                        save_refs(refs)
                        OK_PIN.high()
                        reply('OK LEARN ' + tool_id + ' {:.3f}mm'.format(length))
                    else:
                        if tool_id not in refs:
                            OK_PIN.low()
                            reply('FAIL unknown_tool')
                            continue
                        ref = refs[tool_id]
                        delta = abs(length - ref)
                        if delta <= THRESHOLD:
                            OK_PIN.high()
                            reply('OK ' + tool_id + ' Δ={:.3f}mm len={:.3f}mm'.format(delta, length))
                        else:
                            OK_PIN.low()
                            reply('FAIL ' + tool_id + ' Δ={:.3f}mm len={:.3f}mm ref={:.3f}mm'.format(delta, length, ref))
                else:
                    if cmd.upper() == 'PING':
                        reply('PONG')


main()
```

Why not full before/after image comparison?

While OpenMV supports `image.difference()` for image comparison, it’s sensitive to lighting and alignment differences. Using silhouette-based tip measurement is faster and more reliable, giving a direct numeric measurement of tool length.

Possible extensions

Conclusion

An OpenMV camera provides a cost-effective, compact, and robust vision-based solution for tool breakage detection. It can prevent expensive crashes during unattended operation and runs far faster than mechanical probing. Once calibrated, it delivers consistent accuracy and integrates easily with standard CNC control systems.

Nice! Although I intentionally asked the forum instead of ChatGPT directly to hopefully get some answers from real humans I have to admit that this is quite helpful. I don't know if I would trust the quoted code blindly but any flaws can be fixed easily if you have some basic technical understanding.

Calibration is not necessary because the actual length doesn't really matter, only the difference. OK, for the scale of the tolerance it matters, but a rough guess is enough.
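To illustrate the point about calibration, here is a minimal sketch that compares the tip row of two pictures directly in pixels. The scale and tolerance values are assumptions borrowed from the constants in the quoted program, and `tool_changed` is a hypothetical helper name, not part of any library.

```python
# Assumed values, taken from the quoted program; the scale only needs to be roughly right.
ROUGH_PIXELS_PER_MM = 12.0
TOLERANCE_MM = 0.25


def tool_changed(tip_row_before, tip_row_after,
                 pixels_per_mm=ROUGH_PIXELS_PER_MM, tolerance_mm=TOLERANCE_MM):
    """Return True if the tip moved by more than the tolerance between two pictures."""
    tolerance_px = tolerance_mm * pixels_per_mm  # the scale is used only here
    return abs(tip_row_after - tip_row_before) > tolerance_px


print(tool_changed(180, 182))  # tip moved by 2 rows
print(tool_changed(180, 120))  # tip moved by 60 rows
```

With these numbers the tolerance is 3 pixel rows. An error of 20% in the scale guess shifts that limit by less than one row, while a cutter that broke near the shaft moves the tip by dozens of rows.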

BTW, I can take the picture inside the tool changer at the lowest position of the carousel magazine. The tool stays there for a brief moment, so no extra movement of the spindle is necessary and motion blur is no issue.

Hi

In series production, analysis of the spindle motor current is sometimes used; it will tell you about a broken or blunt blade. Vibration or noise analysis is also done.

Both methods use relatively simple sensors.

I am not sure how usual the failure mode "broken shaft of tool" is. I would think it is not the typical problem.

Cheers Christof

@ManAtWork said:

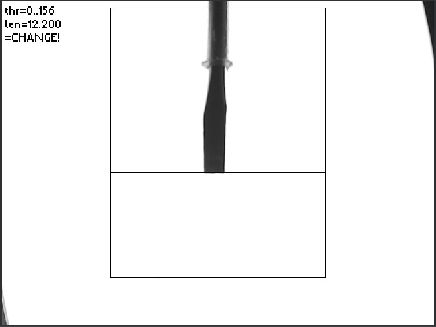

Found my RT-1062, and after a few iterations with ChatGPT I have a program that prints to the terminal window when the object length changes. It needs more work to really make it work, this was just a quick test under poor lighting conditions.

The program averages the first 18 frames as a baseline to which it later compares.

```python
# Measures tool-tip length each frame, learns a baseline, and flags CHANGE with delta - 2025-10-17 19:00 (Europe/Helsinki) - Thomas Vikström
import sensor, image, time

# ====================== USER SETTINGS ======================
PIXELS_PER_MM = 20.0      # calibrate once with a gauge pin/intact tool
ROI = (60, 20, 200, 200)  # x,y,w,h must straddle the tip (top above, bottom below)
TOOL_IS_DARK = True       # True=backlit (tool darker than background); False for front-lit
ROW_WHITE_L = 250         # after binary, l_mean >= this ≈ white row
ERODE_ITERS = 1
DILATE_ITERS = 0
USE_MANUAL_THR = True     # start True; tune MANUAL_THR; switch to False to try Otsu
MANUAL_THR = 95           # try 80..130 depending on your backlight
AUTO_EXPOSURE_US = 31800  # exposure when autos OFF (use bright backlight)
PRINT_EVERY_N = 1

# Change detection / smoothing
LEARN_FRAMES = 18         # avg these to create baseline
MA_WINDOW = 3             # moving average to stabilize
CHANGE_THRESH_MM = 0.30   # trip alarm if |Δ| > this
HYST_MM = 0.10            # hysteresis to clear alarm
# ===========================================================

# --- state ---
baseline_mm = None
learn_buf = []
ma_buf = []
alarm = False


def setup_camera():
    sensor.reset()
    sensor.set_pixformat(sensor.GRAYSCALE)
    sensor.set_framesize(sensor.VGA)
    sensor.skip_frames(time=600)
    # Lock auto* for repeatability
    try:
        sensor.set_auto_gain(False)
        sensor.set_auto_whitebal(False)
        sensor.set_auto_exposure(False, exposure_us=AUTO_EXPOSURE_US)
    except Exception:
        pass
    print("READY - fix camera, jog Z so the tip moves inside the ROI. Tune MANUAL_THR/exposure if needed.")


def to_int_threshold(thr_obj):
    # Normalize whatever get_threshold() returns to int
    try:
        return int(thr_obj)
    except Exception:
        pass
    if isinstance(thr_obj, dict):
        for k in ("value", "v", "low", "l_value", "threshold"):
            if k in thr_obj:
                try:
                    return int(thr_obj[k])
                except:
                    pass
        try:
            return int(next(iter(thr_obj.values())))
        except:
            pass
    for m in ("value", "val", "low", "l_value", "get", "threshold"):
        if hasattr(thr_obj, m):
            try:
                a = getattr(thr_obj, m)
                v = a() if callable(a) else a
                return int(v)
            except:
                pass
    if isinstance(thr_obj, (tuple, list)) and thr_obj:
        try:
            return int(thr_obj[0])
        except:
            pass
    return 128


def pixels_to_mm(py):
    _, y0, _, _ = ROI
    return (py - y0) / PIXELS_PER_MM


def moving_avg_push(x):
    ma_buf.append(x)
    if len(ma_buf) > MA_WINDOW:
        ma_buf.pop(0)
    return sum(ma_buf)/len(ma_buf)


def learn_push(x):
    learn_buf.append(x)
    if len(learn_buf) > LEARN_FRAMES:
        learn_buf.pop(0)
    if len(learn_buf) == LEARN_FRAMES:
        return sum(learn_buf)/LEARN_FRAMES
    return None


def detect_tip_y(img):
    # choose threshold
    if USE_MANUAL_THR:
        thr = int(MANUAL_THR)
    else:
        h = img.get_histogram(roi=ROI)
        thr = to_int_threshold(h.get_threshold())
    # crop and binarize with polarity
    r = img.copy(roi=ROI)
    if TOOL_IS_DARK:
        r.binary([(0, thr)])
    else:
        r.binary([(thr, 255)])
    r.invert()
    # cleanup
    if ERODE_ITERS:
        r.erode(ERODE_ITERS)
    if DILATE_ITERS:
        r.dilate(DILATE_ITERS)
    # scan from bottom up for first non-white row
    x0, y0, w, hgt = ROI
    for j in range(hgt-1, -1, -1):
        if r.get_statistics(roi=(0, j, w, 1)).l_mean() < ROW_WHITE_L:
            return y0 + j, thr
    return None, thr


def s3(v):
    """safe formatter for numbers that can be None"""
    return "{:.3f}".format(v) if (v is not None) else "--"


def draw_overlay(img, tip_y, thr, length_mm, sm_len, delta, alarm_flag):
    # ROI and tip line
    img.draw_rectangle(ROI, color=255)
    if tip_y is not None:
        img.draw_line(ROI[0], tip_y, ROI[0]+ROI[2]-1, tip_y, color=255)
    # Text lines (no formatting with None!)
    y = ROI[1] + 4
    lines = [
        "thr={} pix/mm={:.2f}".format(thr, PIXELS_PER_MM),
        "len_raw={} mm".format(s3(length_mm)),
        "len_ma={} base={}".format(s3(sm_len), s3(baseline_mm)),
        "Δ={} mm {}".format(s3(delta), ("CHANGE!" if alarm_flag else "OK")),
    ]
    for s in lines:
        img.draw_string(ROI[0]+4, y, s, mono_space=False)
        y += 12


def main():
    global baseline_mm, alarm
    setup_camera()
    frame = 0
    while True:
        img = sensor.snapshot()
        tip_y, thr = detect_tip_y(img)
        length_mm = pixels_to_mm(tip_y) if tip_y is not None else None
        sm_len = None
        delta = None
        if length_mm is not None:
            sm_len = moving_avg_push(length_mm)
            if baseline_mm is None:
                maybe = learn_push(sm_len)
                if maybe is not None:
                    baseline_mm = round(maybe, 3)
                    print("BASELINE {:.3f} mm (avg {} frames)".format(baseline_mm, LEARN_FRAMES))
            else:
                delta = sm_len - baseline_mm
                # hysteresis alarm logic
                if not alarm and abs(delta) > CHANGE_THRESH_MM:
                    alarm = True
                elif alarm and abs(delta) < (CHANGE_THRESH_MM - HYST_MM):
                    alarm = False
        draw_overlay(img, tip_y, thr, length_mm, sm_len, delta, alarm)
        frame += 1
        if frame % PRINT_EVERY_N == 0:
            if length_mm is None:
                print("NO_TIP thr={} (tune ROI/threshold/exposure or TOOL_IS_DARK)".format(thr))
            elif baseline_mm is None:
                print("LEARN len_ma={} ({}/{})".format(s3(sm_len), len(learn_buf), LEARN_FRAMES))
            else:
                print("{} tip_y={} len={} len_ma={} base={} Δ={} mm".format(
                    "CHANGE!" if alarm else "OK ",
                    tip_y, s3(length_mm), s3(sm_len), s3(baseline_mm), s3(delta)
                ))
        time.sleep_ms(5)


main()
```

I know, this works well for larger tools which put a considerable load on the spindle motor. If the cutter breaks completely, the active current ("Wirkstrom") drops to almost zero. If the cutter wears out, the load and thus the active current rises. Chipped cutting edges lead to increased vibration. To detect light loads you need to measure the actual active current and not the reactive current ("Blindstrom").

But for very small cutters this doesn't work at all. The temperature of the bearings causes more current fluctuation than the load of the cutting. And if there are some chips in the collet they cause more imbalance and vibration than the worst possible broken cutter.

@banjo Thanks a lot! I've seen that the program uses a simple statistical brightness average over each scan line. This surely works to detect the lowest point of the tip. I think I have to add detection of the lower edge of the collet. As the tool is stored in a different position than the one it was picked up from, the height tolerance of the tool holder in the magazine might be larger than the tolerance for the tool length. But as the width of the dark area changes considerably between cutter shaft and collet, that should also be no problem.
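The collet idea could be sketched like this in plain Python, independent of the camera library. It assumes a binarized silhouette given as a list of rows with 1 for tool pixels, and a tool hanging down from the top of the picture; `find_collet_and_tip`, `make_row` and the `min_step` value are hypothetical names and numbers chosen for illustration.

```python
def row_widths(silhouette):
    """Count tool pixels (value 1) in each row of a binarized image."""
    return [sum(row) for row in silhouette]


def find_collet_and_tip(silhouette, min_step=3):
    """Return (collet_row, tip_row), or None if either is not found.

    collet_row: last row before the width drops by at least min_step pixels
                (the transition from collet to cutter shaft)
    tip_row:    lowest row that still contains tool pixels
    """
    widths = row_widths(silhouette)
    collet_row = None
    for j in range(1, len(widths)):
        if widths[j - 1] - widths[j] >= min_step and widths[j] > 0:
            collet_row = j - 1
            break
    tool_rows = [j for j, w in enumerate(widths) if w > 0]
    if collet_row is None or not tool_rows:
        return None
    return collet_row, tool_rows[-1]


def make_row(width, total=10):
    """Build one test row with `width` centered tool pixels."""
    pad = (total - width) // 2
    return [0] * pad + [1] * width + [0] * (total - width - pad)


# 2 rows of collet (8 px wide), 4 rows of shaft (2 px wide), 2 empty rows
picture = [make_row(8)] * 2 + [make_row(2)] * 4 + [make_row(0)] * 2
collet_row, tip_row = find_collet_and_tip(picture)
print(collet_row, tip_row, tip_row - collet_row)

# Same tool sitting one row lower in the magazine: the length is unchanged
shifted = [make_row(0)] + picture[:-1]
collet_row, tip_row = find_collet_and_tip(shifted)
print(collet_row, tip_row, tip_row - collet_row)
```

Measuring from the collet edge instead of from the top of the region of interest makes the result independent of how high the holder sits in the magazine, which is the tolerance problem described above.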

It might take some time until I actually implement this. I need to fix some other issues and do some more tests until the ATC works reliably. But it's always good to have some "mental backup" to decide if an idea is realistic or not.

Sorry to muddy the waters further: @"Christof Eb." mentioned vibration earlier; perhaps anomaly detection could be used for your problem?

The idea with anomaly detection is that you record normal behaviour, build a model, and then define an anomaly threshold for when an action is to be triggered.
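As a very reduced illustration of that record, model and threshold sequence, the sketch below uses the mean and standard deviation of recorded samples as the "model". This is a simple statistical stand-in, not the machine learning model an Edge Impulse project would train, and the sample values are made up.

```python
from statistics import mean, stdev


def build_model(normal_samples):
    """'Train' on recordings of normal behaviour: keep mean and spread."""
    return mean(normal_samples), stdev(normal_samples)


def is_anomaly(value, model, threshold=3.0):
    """Flag a value that lies more than `threshold` standard deviations from normal."""
    mu, sigma = model
    return abs(value - mu) > threshold * sigma


# Made-up vibration amplitudes recorded during normal operation
normal = [1.0, 1.1, 0.9, 1.05, 0.95]
model = build_model(normal)

print(is_anomaly(1.1, model))  # within the normal spread
print(is_anomaly(1.5, model))  # far outside it
```

The weak point is visible even in this toy version: the model only knows the conditions it was recorded under, so every new material or job needs its own recording.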

As it happens, I've done a project about anomaly detection on a conveyor belt for Particle, this is also using the Edge Impulse platform for the AI part. If interested, see https://www.particle.io/blog/predictive-maintenance-photon-2/

If TensorFlow Lite were supported on the Parallax P2, it might, with its 8 cores, be very competitive in this type of scenario.

In keeping with the KISS principle, simply monitor the motor current.

I have been doing this for years. Vision is sexier but becomes a PITA in a production environment.

Sorry, vibration or acoustic detection doesn't work for me. Depending on what material I cut, the sound is very different. Cutting the sheet metal of an electrical cabinet rattles like hell even with a good cutter. A finishing cut in plastic runs very smoothly. You can't tell whether the cutter is there or not.

Same for current detection. For example with warm bearings and a 1mm cutter the motor current is 1.90A with load and 1.88A without. With cold bearings it's 2.4A.
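Putting those three readings side by side shows the problem. The short calculation below uses only the numbers from the post; whether the cold reading was taken with or without load is not stated, so the drift figure is an approximation.

```python
current_loaded_warm = 1.90  # A, 1 mm cutter, warm bearings, cutting
current_idle_warm = 1.88    # A, warm bearings, no load
current_cold = 2.40         # A, cold bearings

signal = current_loaded_warm - current_idle_warm  # what a broken cutter would change
drift = current_cold - current_loaded_warm        # what bearing temperature changes

print("cutting load signal: {:.2f} A".format(signal))
print("thermal drift:       {:.2f} A".format(drift))
print("drift / signal:      {:.0f}x".format(drift / signal))
```

A fixed current threshold would have to resolve a change that is a small fraction of the drift caused by bearing temperature alone.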

Perhaps so, but as mentioned: in anomaly detection you record data (sound, vibration, motor current, etc) from all nominal use cases, train a machine learning model, and set a threshold after which the monitoring device should take actions. While I haven't done this professionally, only for demonstration and teaching purposes, I can only say it's working on a conceptual level, but don't know if theory meets practice in your case.

If you can control the lighting environment, the camera option might still be an alternative. If I have the bandwidth during the weekend, I might try to better simulate your scenario using the OpenMV Cam and program I already have.

This works better and faster, still room for improvements. It was challenging to find the right exposure settings, would've been easier if I'd used a lightbox where I control the lighting conditions, but as it's a chore to set it up I left it out. Will probably leave this exercise, at least for now.

```python
# Detects the lowest edge row (tool tip) using find_edges(EDGE_SIMPLE) inside ROI.
# Prints length in mm and flags if the tip position changes. 2025-10-25 03:40 - Thomas Vikström
import sensor, image, time

# ==== USER SETTINGS ====
ROI = (10, 10, 240, 240)       # must include the full tip and a bit of background below
EXPOSURE_US = 28000            # fixed exposure for stability
PIXELS_PER_MM = 20.0           # calibrate this once
EDGE_THRESH = (5, 255)         # low/high thresholds for EDGE_SIMPLE or EDGE_CANNY
EDGE_MODE = image.EDGE_SIMPLE  # try EDGE_CANNY for smoother contours
PRINT_EVERY_N = 1
CHANGE_THRESH_MM = 3           # alert if |Δ| > this
HYST_MM = 0.5

# ==== STATE ====
baseline_mm = None
alarm = False
ma_buf = []
MA_WINDOW = 3
LEARN_FRAMES = 10
learn_buf = []


def s3(v):
    return "{:.3f}".format(v) if v is not None else "--"


def setup():
    sensor.reset()
    sensor.set_pixformat(sensor.GRAYSCALE)
    sensor.set_framesize(sensor.VGA)
    sensor.skip_frames(time=2000)
    sensor.set_gainceiling(8)
    sensor.set_auto_gain(False)
    sensor.set_auto_whitebal(False)
    sensor.set_auto_exposure(False, exposure_us=EXPOSURE_US)
    print("READY - keep camera fixed, jog Z to move the tip up/down inside ROI.")


def pixels_to_mm(py):
    _, y0, _, _ = ROI
    return (py - y0) / PIXELS_PER_MM


def moving_avg(buf, x, n):
    buf.append(x)
    if len(buf) > n:
        buf.pop(0)
    return sum(buf)/len(buf)


def learn_avg(buf, x, need):
    buf.append(x)
    if len(buf) > need:
        buf.pop(0)
    return (sum(buf)/need) if len(buf) == need else None


def find_tip_with_edges(img):
    # Run edge detection on a copy of the ROI
    r = img.copy(roi=ROI)
    r.find_edges(EDGE_MODE, threshold=EDGE_THRESH)
    x0, y0, w, h = ROI
    # Scan from bottom→top for first row that has any bright edge pixels
    for j in range(h - 1, -1, -1):
        row_stat = r.get_statistics(roi=(0, j, w, 1))
        if row_stat.l_mean() > 10:  # 10–15 works fine for EDGE_SIMPLE
            return y0 + j
    return None


def draw_overlay(img, tip_y, length_mm, sm_len, delta, alarm_flag):
    img.draw_rectangle(ROI, color=255)
    if tip_y:
        img.draw_line(ROI[0], tip_y, ROI[0] + ROI[2] - 1, tip_y, color=255)
    y = ROI[1] + 4

    def put(s):
        nonlocal y
        img.draw_string(ROI[0] + 4, y, s, scale=1.9, mono_space=False)
        y += 20

    put("EDGE thr={}..{}".format(*EDGE_THRESH))
    put("len_raw={} len_ma={} base={}".format(s3(length_mm), s3(sm_len), s3(baseline_mm)))
    put("Δ={} mm {}".format(s3(delta), "CHANGE!" if alarm_flag else "OK"))


def main():
    global baseline_mm, alarm
    setup()
    f = 0
    while True:
        img = sensor.snapshot()
        tip_y = find_tip_with_edges(img)
        if tip_y:
            length_mm = pixels_to_mm(tip_y)
            sm_len = moving_avg(ma_buf, length_mm, MA_WINDOW)
            if baseline_mm is None:
                maybe = learn_avg(learn_buf, sm_len, LEARN_FRAMES)
                if maybe is not None:
                    baseline_mm = round(maybe, 3)
                    print("BASELINE {:.3f} mm (avg {} frames)".format(baseline_mm, LEARN_FRAMES))
                delta = None
                alarm_now = False
            else:
                delta = sm_len - baseline_mm
                if not alarm and abs(delta) > CHANGE_THRESH_MM:
                    alarm = True
                elif alarm and abs(delta) < (CHANGE_THRESH_MM - HYST_MM):
                    alarm = False
                alarm_now = alarm
        else:
            length_mm = sm_len = delta = None
            alarm_now = False
        draw_overlay(img, tip_y, length_mm, sm_len, delta, alarm_now)
        f += 1
        if f % PRINT_EVERY_N == 0:
            if tip_y is None:
                print("NO_TIP (adjust EDGE_THRESH or lighting)")
            elif baseline_mm is None:
                print("LEARN len_ma={} ({}/{})".format(s3(sm_len), len(learn_buf), LEARN_FRAMES))
            else:
                print("{} tip_y={} len={} len_ma={} base={} Δ={} mm".format(
                    "CHANGE!" if alarm_now else "OK ",
                    tip_y, s3(length_mm), s3(sm_len), s3(baseline_mm), s3(delta)
                ))
        time.sleep_ms(5)


main()
```

I have no doubt that this can work very well if you train for every specific job or material. But I don't run large volume series production but rather many small jobs, often one-time prototypes. Training would take more time than the cutting itself. So I simply don't want to do it that way.

Thank you very much, @banjo! I have to buy one of those OpenMV cameras. I think I can get much better lighting conditions with an LED backlight tile reducing background noise and shadows.

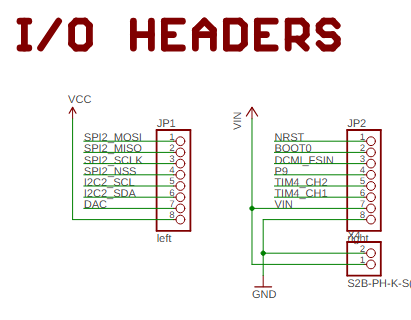

Errr, next question: which camera module would suit my application best? It would be good to have 3 IO-pins, two inputs for the commands "learn" and "check" and one output for "good/bad". This way I can interface it to the CNC controller without having to send serial data over USB.
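The three-pin interface described above could be organized as a small state machine that reacts to rising edges on the two inputs. The sketch below is pin-independent plain Python so it can be tried on a PC; on the camera the two input levels would come from reading pins and the result would drive the output pin, which is left out here. The class name, the `measure` callback and the 0.5 mm tolerance are assumptions.

```python
class ToolCheckLogic:
    """Two command inputs ("learn", "check") and one good/bad result."""

    def __init__(self, tolerance_mm=0.5):
        self.tolerance_mm = tolerance_mm
        self.reference_mm = None
        self.good = True           # level for the "good/bad" output
        self._prev_learn = False
        self._prev_check = False

    def update(self, learn_in, check_in, measure):
        """Call once per loop. measure() returns a length in mm, or None."""
        if learn_in and not self._prev_learn:      # rising edge on "learn"
            self.reference_mm = measure()
            self.good = self.reference_mm is not None
        elif check_in and not self._prev_check:    # rising edge on "check"
            length = measure()
            self.good = (length is not None
                         and self.reference_mm is not None
                         and abs(length - self.reference_mm) <= self.tolerance_mm)
        self._prev_learn, self._prev_check = learn_in, check_in
        return self.good


# Simulated sequence: learn at 25.0 mm, check an intact tool, check a broken one
lengths = iter([25.0, 25.1, 12.0])
logic = ToolCheckLogic()
logic.update(True, False, lambda: next(lengths))          # "learn" pulse
logic.update(False, False, lambda: next(lengths))         # inputs released
print(logic.update(False, True, lambda: next(lengths)))   # "check": intact
logic.update(False, False, lambda: next(lengths))
print(logic.update(False, True, lambda: next(lengths)))   # "check": broken
```

Reacting to edges instead of levels means a command signal that stays high for several loop iterations triggers only one measurement.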

Most camera modules have the two IO pin headers JP1 and JP2.

The description says the IO pins drive 3.3V and are 5V tolerant. I can connect optocouplers to interface to the 24V signals of the CNC controller. But which pins can I use as general purpose IOs?

The RT1062 has a lot more IO pins and even Ethernet and Wifi. I think I don't need that.

Ok, in this case I agree it would not be worth it to collect data and train a model.

If you are looking into OpenMV cameras, I suggest you ask this in their forum: https://forums.openmv.io/. As I understand it, the pins P0-P9 on the OpenMV Cam H7 Plus are GPIO pins, but there might be some caveats so better to ask before.

FWIW: Due to limited programming skills, I always look for the easy way (there always is one)

For this, I would take an old Android phone and use a BASIC interpreter that can easily grab and compare images. Use Bluetooth (HC-05 on the MCU) as it is just like a UART without the wire.

Could also easily record and compare sound.

Browsing the forums I found this documentation page and the quick reference for the H7 Plus board. So I think it's correct that P0..P9 can be used as 3.3V GPIO. I've just ordered an H7 and will try it out.

Hi @ManAtWork , good morning,

I had another idea for how to detect an unknown tool shape: take several pictures in a row with the tool rotating and calculate difference pictures. If the speed of rotation is known well, then you can take the pictures at optimum intervals.

Have a good day, Christof

As I already said, this is not a commercial project for customers doing mass production. There are definitely a lot of clever ways to detect wear or partially broken cutting edges. But I don't want to do that. I only want an easy solution to avoid the most likely and most expensive failures. The tools I use are mostly small tungsten carbide cutters. If they break they usually break completely and near the shaft. So detection of the overall length to a tolerance of ~0.5mm is enough for me.

Keep it simple! No rotation required. A single picture is enough.

I'm waiting for the OpenMV camera to arrive. Then I'll try out @banjo 's code. I already have a LED backlight panel.

It works! First, I've tested it with a plain white paper as background and a screwdriver.

Most of the time the measurement is correct but sometimes the line that marks the tip jumps to a false edge inside the tool, for example the end of the insulation sleeve.

With the backlight panel the background is fully saturated white and the contour recognition works much better, of course. The results are very stable. Now, I just need to figure out how to implement the learn vs. compare commands and drive the output pins accordingly. Shouldn't be too hard. There are examples of how to poll and drive pins. But I've never coded python before.

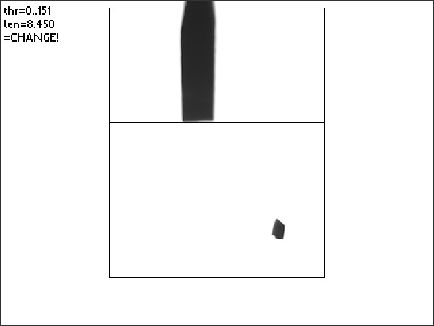

Hmm, celebrated too early. Even slight changes to the lighting conditions can cause the tip recognition to fail.

It locks onto the step where the width changes instead of the tip. I have to change the filtering.

Yeah, I remember it was sensitive, I did though not test it systematically under controlled conditions. If you don't get it to work, I suggest you ask in the OpenMV forums I linked to earlier, someone might have a better solution than I (with help of AI) created.

I think I already have an idea of how to fix it. The `find_edges()` and the `if row_stat.l_mean() > 10` favor horizontal edges. If the tip is not rectangular but triangular (like a fish tail cutter or countersink) the algorithm locks onto false edges inside the tool shape. I'll try to extract a solid area instead of edges with Otsu's method.

Bingo!

```python
def find_tip_solid(img):
    global threshold
    # Run solid area detection on a copy of the ROI
    r = img.copy(roi=ROI)
    hist = r.get_histogram()
    threshold = (0, hist.get_threshold().value())  # Otsu's method
    r.binary([threshold])  # convert to black/white using threshold
    x0, y0, w, h = ROI
    # scan from the bottom up for the first row that contains tool pixels
    for j in range(h - 1, -1, -1):
        row_stat = r.get_statistics(roi=(0, j, w, 1))
        if row_stat.l_mean() > 10:
            return y0 + j
    return None
```

My biggest problem was trying to make up Python code by trial and error. I thought this would be easy because the syntax highlighting editor of the IDE spots every mistake I make. But it wasn't. The Python interpreter crashes without warning if I pass a parameter to a library function and its data type doesn't match the expectations. The parser of the editor can't warn me because it has no idea of the data type until the code is executed.

... and searching top-down instead of bottom-up still works with some dirt on the screen.
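The difference between the two scan directions can be shown on a tiny binarized picture in plain Python. This is one possible reading of the top-down search, assuming the tool enters the picture from the top edge and tool pixels are 1; the function names are made up, and `any()` replaces the row-mean test of the camera code for brevity.

```python
def tip_row_bottom_up(rows):
    """First row from the bottom that contains any tool pixel."""
    for j in range(len(rows) - 1, -1, -1):
        if any(rows[j]):
            return j
    return None


def tip_row_top_down(rows):
    """Last tool row before the first empty row, scanning from the top."""
    last_tool_row = None
    for j, row in enumerate(rows):
        if any(row):
            last_tool_row = j
        elif last_tool_row is not None:
            break  # first gap below the tool: stop, ignore anything further down
    return last_tool_row


picture = (
    [[0, 1, 1, 0]] * 4      # rows 0..3: the tool
    + [[0, 0, 0, 0]] * 2    # rows 4..5: clean background
    + [[0, 0, 0, 1]]        # row 6: a speck of dirt on the backlight
    + [[0, 0, 0, 0]]        # row 7: clean background
)

print(tip_row_bottom_up(picture))  # stops at the dirt
print(tip_row_top_down(picture))   # stops at the real tip
```

The top-down scan is fooled only by dirt that touches the tool silhouette or lies in the same rows as the tool.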

Maybe I should use erode() and dilate() to eliminate smaller dirt particles and call find_blobs() and throw an error if more than one blob is detected.
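A sketch of that check, again in plain Python so that the logic can be tested without the camera: regions are labeled with a flood fill, and a minimum size filter stands in for the erode/dilate step. The helper names and the `min_pixels` value are assumptions; on the camera the library's own blob detection would take the place of `label_blobs`.

```python
def label_blobs(grid, min_pixels=1):
    """Return the pixel counts of all 4-connected regions of 1s
    that contain at least min_pixels pixels."""
    h = len(grid)
    w = len(grid[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    sizes = []
    for y in range(h):
        for x in range(w):
            if grid[y][x] and not seen[y][x]:
                stack = [(x, y)]
                seen[y][x] = True
                size = 0
                while stack:
                    cx, cy = stack.pop()
                    size += 1
                    for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                        if 0 <= nx < w and 0 <= ny < h and grid[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            stack.append((nx, ny))
                if size >= min_pixels:
                    sizes.append(size)
    return sizes


def check_single_tool(grid, min_pixels=3):
    """Return the tool's pixel count, or raise if the picture is ambiguous."""
    blobs = label_blobs(grid, min_pixels)
    if len(blobs) != 1:
        raise ValueError("expected exactly one blob, found {}".format(len(blobs)))
    return blobs[0]


small_dirt = [
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 1, 0],   # single-pixel speck, filtered out
]
print(check_single_tool(small_dirt))

large_dirt = [row[:] for row in small_dirt]
large_dirt[4] = [0, 0, 0, 1, 1, 1]   # three-pixel chip, counted as a second blob
try:
    check_single_tool(large_dirt)
except ValueError as err:
    print("rejected:", err)
```

Raising an error instead of guessing lets the CNC controller stop and ask for help when the picture contains more than the tool.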

If you prefer static typing, you can put type annotations in your Python code and run it through a type-checker program and get something analogous to compile-time errors.
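As a small illustration, here is the `pixels_to_mm` helper from the programs above with type annotations added and the region of interest passed in as a parameter. The `Roi` alias is a made-up name, and whether the camera firmware provides a `typing` module is not something this thread confirms, so the annotated version may have to be checked on the PC only.

```python
from typing import Optional, Tuple

Roi = Tuple[int, int, int, int]  # x, y, width, height


def pixels_to_mm(tip_row: Optional[int], roi: Roi, pixels_per_mm: float) -> Optional[float]:
    """Convert a tip row inside the ROI to a length in mm; None passes through."""
    if tip_row is None:
        return None
    _, y0, _, _ = roi
    return (tip_row - y0) / pixels_per_mm


print(pixels_to_mm(120, (10, 10, 240, 240), 20.0))
```

A type checker such as mypy, run on the PC before uploading, would report a call like `pixels_to_mm("120", ...)` or a three-element ROI tuple as an error instead of leaving it to crash at run time.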