PropCAM: A Propeller imaging camera AVAILABLE NOW!

Phil Pilgrim (PhiPi)

Posts: 23,514

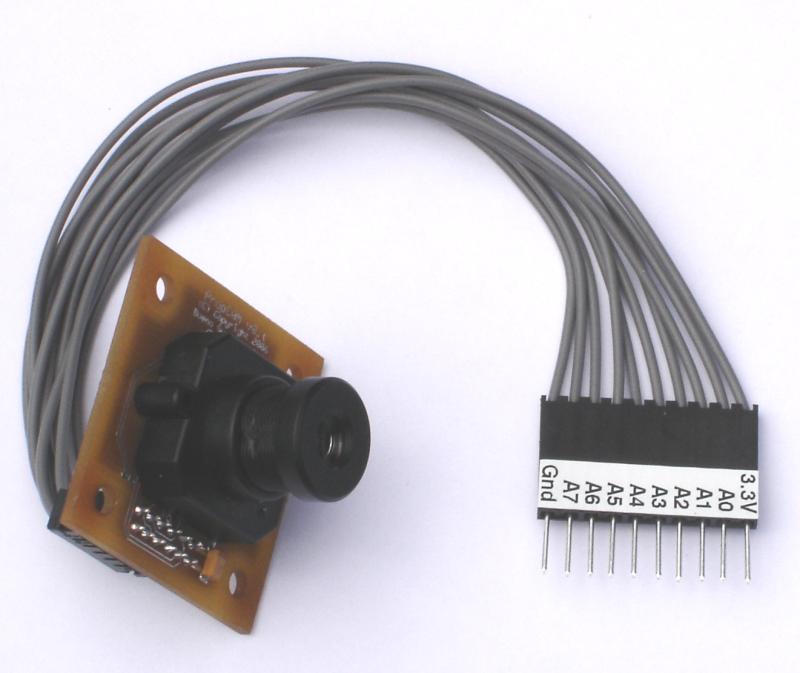

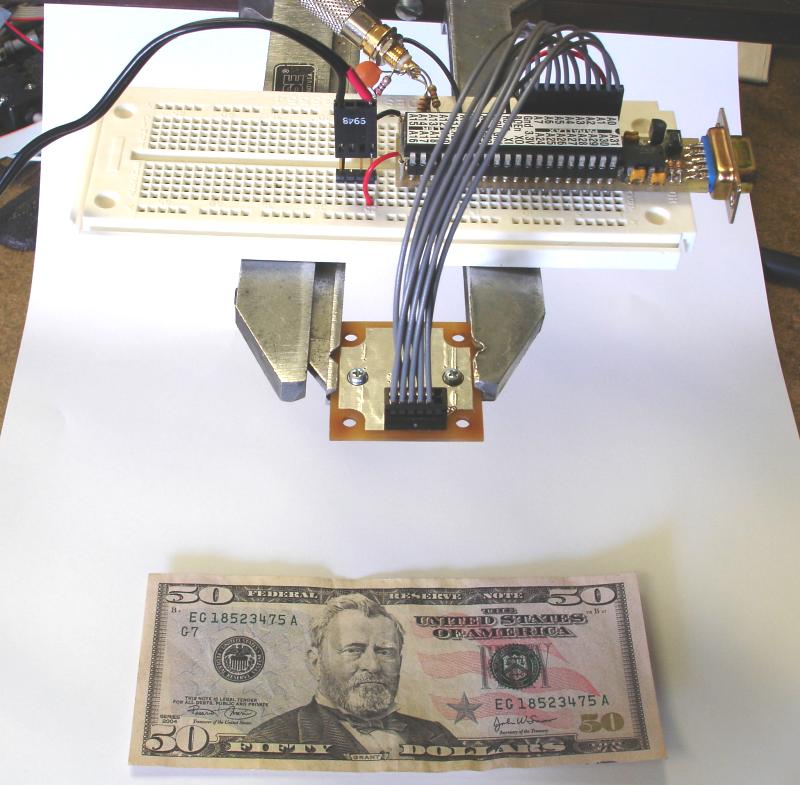

Attached are some photos taken of (and with) an image capture device I've developed for the Propeller. I call it the PropCAM. It's a black-and-white video camera having 128x96-pixel resolution and capable of acquiring up to 30 fps of 4-bit grayscale image data. It interfaces to the Propeller via ten pins: +3.3V, Gnd, and A0-A7.

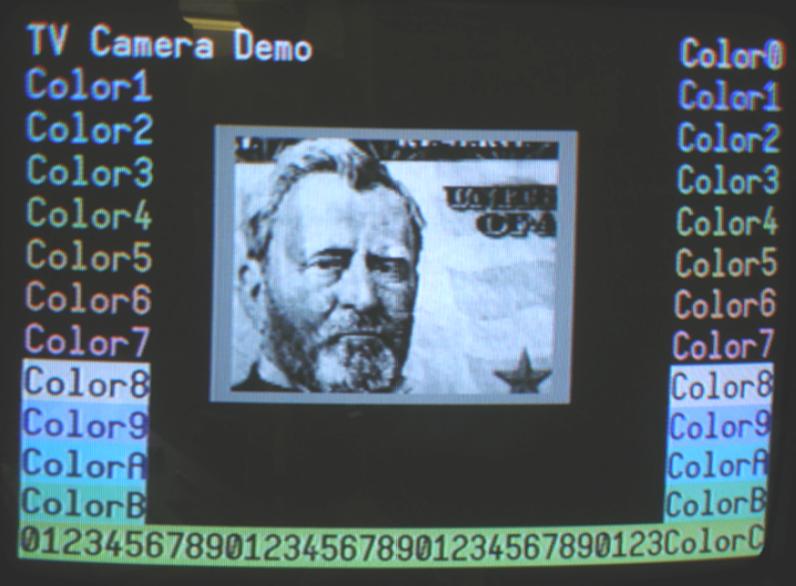

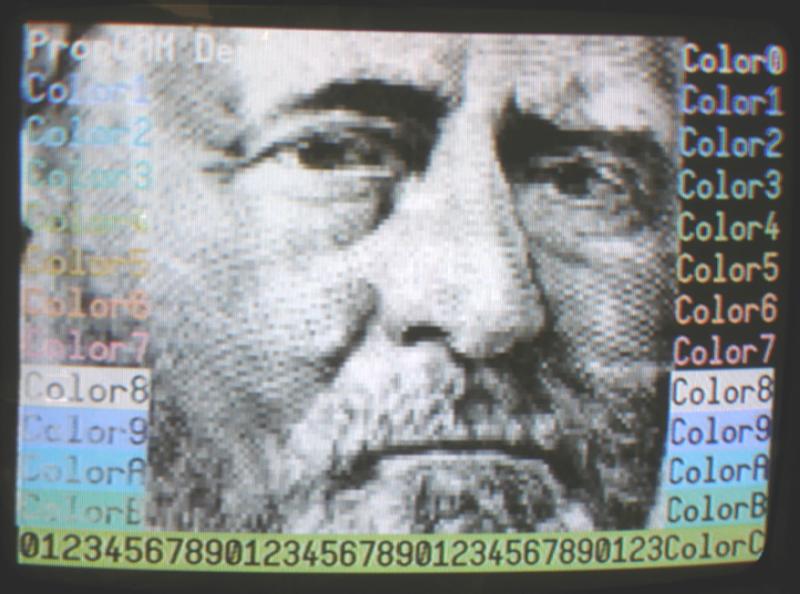

With the Spin and assembly code I'm developing for it, it is capable of capturing images in the following modes:

- Single snapshot

- Frame grab from a continuous exposure sequence

- Capture at a fixed time interval (1/30 sec. or more)

- Capture synchronized to video output (for a live, flicker-free display)

- Fixed exposure time and gain

- Auto exposure time and fixed gain

- Fixed exposure time and auto gain

- Auto exposure time and gain
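As a rough illustration of what an auto-exposure mode like those in the list above might do, here is a minimal Python sketch. It is not the PropCAM's actual algorithm, which the post does not describe. The function name `next_exposure`, the mid-scale target of 7.5, and the exposure limits are all assumptions made for the example.

```python
def next_exposure(exposure_us, pixels, target=7.5, lo=50, hi=33_000):
    """Scale the exposure time so the mean 4-bit gray level moves toward target.

    exposure_us, target, lo and hi are hypothetical parameters for this sketch.
    """
    mean = sum(pixels) / len(pixels)
    # An all-black frame gives no ratio to work with, so just double the exposure.
    scale = target / mean if mean > 0 else 2.0
    # Clamp to the assumed range (hi is roughly one 1/30 s frame, in microseconds).
    return max(lo, min(hi, round(exposure_us * scale)))


# A dark frame (mean level 3) asks for a longer exposure on the next capture.
print(next_exposure(1000, [3] * 10))
```

Calling this once per frame gives a simple proportional loop. An auto-gain mode could follow the same pattern with gain in place of exposure time.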

For display, the software uses a grayscale buffer which is overlaid upon output from another video driver, from which it derives its sync. The attached photos show capture output on top of text from Chip's TV_text object. Any video driver can theoretically be used, but memory usage will probably constrain one to the text display objects. In order to facilitate additional graphics, such as intensity histograms, the grayscale overlay area can be made larger than the camera's image size. The camera image can then be placed anywhere inside this grayscale "window", with the margin areas being used for additional graphics. It will also be possible to draw on top of the camera image itself.
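The window idea can be sketched in a few lines of Python. This only illustrates the layout described above and is not the Spin/assembly driver. The function name `place_in_window`, the 160x120 window size, and the fill level are assumptions.

```python
CAM_W, CAM_H = 128, 96  # PropCAM image size from the post


def place_in_window(image, win_w, win_h, x0, y0, fill=8):
    """Return a win_h x win_w gray buffer with image copied in at (x0, y0).

    Pixels outside the image keep the fill level (a mid-gray surround here).
    """
    if x0 < 0 or y0 < 0 or x0 + len(image[0]) > win_w or y0 + len(image) > win_h:
        raise ValueError("image does not fit inside the window")
    window = [[fill] * win_w for _ in range(win_h)]
    for row, line in enumerate(image):
        window[y0 + row][x0:x0 + len(line)] = line
    return window


# A synthetic test pattern stands in for a captured frame.
image = [[(x + y) % 16 for x in range(CAM_W)] for y in range(CAM_H)]
window = place_in_window(image, 160, 120, 16, 12)  # centered, 16/12-pixel margins
```

The margin rows and columns stay free for extra graphics such as a histogram.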

The grayscale display can be at its native size or double its native size (line-doubled and stretched). The attached photos show one example of each. In the native-scale display, the captured image is shown centered in a larger window with a gray surround. Grayscale display uses a separate cog utilizing CTRA in its DUTY mode, and whose output is ORed onto one of the video pins. (Thanks to Chip for the suggestion!) This will work with either the PropSTICK or Demo Board and requires only the addition of a 220pF cap across the video output to filter the high-frequency hash from CTRA. The aspect ratio has been adjusted to come as close to square pixels as possible.
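To see why a duty-mode counter plus a small capacitor yields gray levels, here is a small Python simulation of a 32-bit phase accumulator whose carry bit drives the pin, which is how the Propeller's DUTY counter mode behaves. The linear mapping from a 4-bit gray level to FRQA in `gray_to_frqa` is an assumption for illustration. The post does not say how the driver scales its values.

```python
def duty_output(frqa, clocks, phsa=0):
    """Simulate a 32-bit phase accumulator; return the carry bit for each clock."""
    bits = []
    for _ in range(clocks):
        total = phsa + frqa
        bits.append(total >> 32)       # carry out of bit 31 drives the pin
        phsa = total & 0xFFFF_FFFF     # accumulator wraps at 32 bits
    return bits


def gray_to_frqa(level):
    """Assumed linear mapping of a 4-bit gray level (0-15) onto FRQA."""
    return level * (0x1_0000_0000 // 16)


bits = duty_output(gray_to_frqa(4), 1600)
print(sum(bits) / len(bits))  # fraction of clocks the pin is high
```

The pin is high for a fraction FRQA/2^32 of the clocks, and the capacitor averages those fast pulses into a steady level, which is the "high-frequency hash" being filtered.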

Yet to be written are the image processing routines that will turn the PropCAM+Propeller into a real machine vision system. They're coming, but it'll take time.

My plans are to have these units produced for sale, if there's sufficient demand. (Unlike the PropSTICK, they use SMD devices, so can't be made into a kit.) The boards are designed to plug into a product yet to be announced, but they'll be available prior to that with a cable similar to the one shown in the photos for easy interface to either the Demo Board or PropSTICK.

Cheers!

Phil

Comments

Michael

PhiPi:

That's really, really good work.

Keep us posted on further development.

Please keep me on the list to buy your product.

Awesome!

▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔

Just tossing my two bits worth into the bit bucket

KK

This is very neat!!! This will lead to a whole new class of applications. I remember the old thread "Can the Propeller show a human face on a TV?" (or something like that). Well, it can now. And it takes only 6KB of RAM per picture!
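The 6KB figure follows directly from the stated resolution: 128 x 96 pixels at 4 bits each is 6,144 bytes. A quick Python check, packing two pixels per byte (the nibble order is an assumption, since the post does not specify the buffer layout):

```python
WIDTH, HEIGHT = 128, 96  # resolution from the original post


def pack_frame(pixels):
    """Pack a flat list of 4-bit gray levels two to a byte (low nibble first)."""
    if len(pixels) % 2:
        raise ValueError("expected an even number of pixels")
    return bytes(
        (pixels[i] & 0xF) | ((pixels[i + 1] & 0xF) << 4)
        for i in range(0, len(pixels), 2)
    )


frame = pack_frame([0] * (WIDTH * HEIGHT))
print(len(frame))  # 6144 bytes = 6 KB
```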

▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔

Chip Gracey

Parallax, Inc.

Put me on the list for at least one!

Thx!!!

▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔

Andrew

Wow!

Nice and simple.

I've been looking for a CMOS or CCD imaging device/IC to do visual processing. Stereo vision is what I've been hoping for, so!

Sign me up for TWO (2).

But I have a few Questions:

Can more than one Propcam be interfaced at the same time?

Are two or more CMOS devices too much for system resources?

How many Cogs are needed to get the Data? (or involved in the I/O)

Would I just need to start a new cog and run the same code using a second set of seven pins, say A8-A12? (If I were using two PropCams?)

Once the image data is loaded into the Propeller, can the same cog(s) doing the I/O also do the image processing, or would additional cogs be needed?

How critical is the timing, in terms of sync?

But most of all: When? How much?

Did I say when????? When!

Oh yeah! What about color? Would a color version be lurking just over the next hill? Maybe with enough Propellers we can fly there!

▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔

IAI (Indigenous Alien Intelligence),

The New View on Machine Intelligence.

Because There is nothing Artificial about it!

Just as interesting as capturing stereo images is displaying them. Using the gray-level display techniques developed so far, it would be rather easy to drive a pair of LCD shutter glasses with alternate interlaced video fields — without losing any resolution, since the data are already duped between fields. A red/blue or red/cyan anaglyphic display would take more work, since the CTRA method of displaying grayscale images can't generate individual colors. But other display methods are under consideration and could yield results. Stay tuned!

-Phil

Post Edited (Phil Pilgrim (PhiPi)) : 6/18/2006 7:30:12 PM GMT

Keep me on your list for the PropCam! I have just the application for several of these.

Thanks!

Michael

I've been playing with 3D on the Propeller for a few days now and I've come to the conclusion that red/blue anaglyph is the way to go for a few reasons:

Shutter glasses are expensive and require CRT monitors, which are fast disappearing. LCD monitors buffer data and re-scan it at their own rate, so shutter glasses don't work on these. TVs are mainly CRTs, but you can only get 30Hz per eye, which is very flickery.

Anaglyph works on all monitors, and even printed materials. It uses red and blue lenses to block blue and red light, respectively. There are also red/cyan anaglyphic combos which let more RGB color through to the brain (the brain sums the left and right eyes' colors). These are good for color, but if you want the optimal anaglyph experience, use red and blue in monochrome. That's the tack I'm taking with the Propeller. Red and blue plus dark grey, along with a white background, makes for some dramatic depth effects (minus the color). Since the Propeller has hardware support for 4-color mode (2 bits per pixel, where you can pick the color for each combo), we've got exactly what we need. It goes like this:

%00 = white (background)

%01 = blue (dark grey to left eye with red lens, invisible to right eye with blue lens)

%10 = red (dark grey to right eye with blue lens, invisible to left eye with red lens)

%11 = dark grey (dark grey to both eyes)

Notice that to draw a 3D image, you just clear all pixels to %00, draw the left eye image by OR'ing %01 into pixels, then draw the right eye image by OR'ing %10 into pixels. The pixels that wind up with both bits set appear as dark grey to both eyes. Pretty simple!
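The OR'ing scheme above can be sketched in Python as follows. This is only a model of the 2-bit pixel logic, not Propeller code. The function name `compose_anaglyph` and the point-list inputs are assumptions for the example.

```python
# 2-bit pixel codes from the table above
WHITE, BLUE, RED, DARK_GREY = 0b00, 0b01, 0b10, 0b11


def compose_anaglyph(width, height, left_pixels, right_pixels):
    """Clear to white, OR %01 in for the left-eye image and %10 for the right."""
    frame = [[WHITE] * width for _ in range(height)]
    for x, y in left_pixels:
        frame[y][x] |= BLUE   # seen as dark grey through the red (left) lens
    for x, y in right_pixels:
        frame[y][x] |= RED    # seen as dark grey through the blue (right) lens
    return frame


# Two overlapping horizontal runs: the shared pixel ends up %11 (dark grey).
frame = compose_anaglyph(4, 1, [(0, 0), (1, 0)], [(1, 0), (2, 0)])
print(frame)
```

Because OR is order-independent, the two eye images can be drawn in either order, or interleaved, without changing the result.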

Parallax will probably start selling good plastic red/blue 3D glasses for $5 a pair. If you have any glasses already, check out this site:

www.anachrome.com

I called there last Saturday at 10:00pm and the guy who runs the site, Allan Silliphant, talked to me for over three hours about anaglyphic 3D. He's made some novel strides to make 3D viable for theatres in the near-term, like sprocket refits for 35mm projectors and special glasses which are inexpensive and have slight positive diopters on the red lenses to help your brain focus the red better. Also, he uses cyan which lets both green and blue through, resulting in better color conveyance to the viewer's brain. He is a super nice and down-to-earth guy. He even offered to come to Parallax and teach us how to get into 3D so that we could have 3D product shots on our website. He's on a 3D mission!

The long-term 3D solution for theatres is dual digital projectors with complementary polarized filters, and matching glasses for the viewers. This would look like the Captain EO show at Disneyland, but all digital, with 4k pixels per line. Allan thinks this is 7-10 years away, yet.

Anyway, I hope to have some simple, but stunning, 3D demo on the Propeller soon. The longer-term goal will be to make 3D world transforms so that you could animate 3D coordinates in real-time and view them in 3D (with red/blue glasses). What is the point of all this? To have fun and be able to explore sensor visualization in 3 dimensions! A dual PropCam would be a great testbed, but also you could explore 'seeing' ultrasound (or RF?) in 3D and who knows what else. Our eyes are the biggest inputs to our brains. Like our ears, they're wired straight in, but receive lots more data. We should use their 3D capacity as well, to perceive phenomena.

▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔

Chip Gracey

Parallax, Inc.

Post Edited (Chip Gracey (Parallax)) : 6/18/2006 8:19:46 PM GMT

I'm glad to see you've come so far with the stereo 3D stuff! Oddly enough, a couple years ago I bought a set of plastic anaglyph glasses from another company I found on the web, Berezin Stereo Photography Products (www.berezin.com/3d/3dglasses.htm). They might be the same glasses that Anachrome is offering; I'm not sure. They're quite comfortable to wear over my regular glasses, and I keep a pair near each of my PCs -- mostly for looking at 3D photos from Mars (www.lyle.org/mars/synth/). One thing I did notice, though, is that the filtering on these glasses is not quite as good as on the cheap cardboard ones I got from the same company. The pricier ones have more ghosting, due to the wrong color getting through. It's most noticeable in high-contrast, computer-generated images and less so with low-contrast photos. I also prefer the red/cyan glasses to the red/blue ones. The eye is relatively insensitive to blue. By adding a bit of green to the non-red side, the left/right intensities seem to balance a little better than with blue alone. But that's an entirely subjective observation and more a matter of personal preference than anything else.

I've also got some LCD shutter glasses that work on my TV's video out, switching between even and odd fields. You're right: they are headache-inducing. But I've also attended 3D Imax showings that use these with no discomfort, likely the benefit of a much higher frame rate.

What I'd really like to be able to do is display a grayscale image in 3D. I've figured out a way to do it anaglyphically using the ctra grayscale method, but it requires alternating fields. It relies on the fact that tv_text uses a lookup table for character and background colors. All you have to do is set the background color underlying the grayscale window to dark red for one field and change it in the lookup table to dark blue or cyan for the alternate field. Unfortunately, you'd end up with a double dose of headache inducement. If the color separation doesn't cause a migraine, the flicker certainly will!

I think the best approach might stem from your earlier suggestion to use the video circuitry for generating both the duty pulses that come from ctra now, along with some chroma modulation. OTOH, there's no reason ctra can't be used for intensity in the high-order bit, with the video circuitry doing the chroma in the two low-order bits -- in the same cog. It'd take some fancy scheduling to coordinate waitvids with writes to frqa, but it might work.

So much to try!

-Phil

▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔

OS-X: because making Unix user-friendly was easier than debugging Windows

links:

My band's website

Our album on the iTunes Music Store

I'm working on interfacing the commercially-available 3088 module; since I'm not interested in selling hardware (and my jealous employer wouldn't take kindly to it anyway), I'm hoping to provide a mostly-software solution for the off-the-shelf modules. Depending on how much of your code you're interested in open-sourcing, perhaps we could share image processing code.

Thanks!

I'm working with a lower-resolution sensor than the 6620; and it's B/W, not color. But it has the advantage of true frame mode sensing without needing a mechanical shutter during pixel transfer, which is critical for certain machine vision apps. The lens assembly came from my Korean lens supplier and is one I bought for a different project. It's not optimum for this camera, though, and I shall have to find a lens with a shorter focal length for best performance.

Good luck on your 3088 project!

-Phil

I guess these lenses are pretty much stock. Say, do you know if yours has an IR cut filter? The cheap Omnivision lenses have an IR-reflective coating on the frontmost element, which in my case is bad.

Yeah, for B/W imaging the IR-cut filters can be a pain if you need high sensitivity. The lenses I've been testing all have them, but I'm going to try finding one that doesn't. OTOH, cheap S-mount (M12x0.5) lenses don't come with an adjustable aperture. Using them in bright, IR-rich sunlight without an IR filter may swamp the sensor no matter how short the integration time. So there's definitely a tradeoff.

-Phil

This is exactly the product I've been looking for, sign me up for at least two!

-Mike

Just my two bits... Looks like a wonderful product that I'd be interested in too!

Tim

Is that model available with infrared? Or possibly as an option for your baseline model?

The half-max sensitivity point on the sensor's spectral response curve is around 800nm. At 900nm it drops to 10%, and at 950nm to zero.

-Phil

The camera project is something my boss is going to send me to school for, so your reply really exposed my ignorance of the subject matter.

However, if I can glean some proof that the Propeller will do the job, then he will likely get all the Propeller equipment I can handle and I won't have to sweat it.

But if I have to wait till Christmas, I'm getting one of these puppies!

Way over my head, but I know the boss is going to throw me in head first!

Thanks for the reply! 10 microns would be good for me, or maybe just a good algorithm to distinguish humans from inanimate objects blown by the wind!

10 microns (10,000nm) is well beyond the reach of most image sensors and ordinary optics. I'm not sure what to recommend, except perhaps one of those IR motion detectors.

Good luck with your project!

-Phil

I got that bit of info from (http://imagers.gsfc.nasa.gov/ems/infrared.html).

Quite possibly I'm comparing apples to oranges.

I have the IR motion detectors; they're great. I just need the camera to confirm false alarms.

Thanks for straightening me out!

Keep up the good work.

I can certainly sympathize with the false alarm problem. My shop has a burglar alarm equipped with IR motion detectors. I kept getting alarms in the middle of the night. I'd go out to investigate, and nothing. Turns out, a squirrel was getting in through an unused flue opening and scampering out when he heard me unlock the door!

It may be possible for a camera to disambiguate something like this, based on the number of pixels that change between exposures. It would require either a double buffer or a capture mode that made the comparison and wrote the difference in real time. This is an interesting problem!
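A minimal Python sketch of the double-buffer approach: count the pixels whose gray level changed by more than a noise threshold between two exposures, then compare that count with a size limit. The function names and both threshold values are assumptions for illustration and would need tuning against real images.

```python
def changed_pixels(prev, curr, threshold=2):
    """Count pixels whose 4-bit gray level differs by more than threshold."""
    return sum(1 for a, b in zip(prev, curr) if abs(a - b) > threshold)


def large_intruder(prev, curr, min_pixels=200, threshold=2):
    """Hypothetical test: True if enough pixels changed to suggest a big object."""
    return changed_pixels(prev, curr, threshold) >= min_pixels


# Two flat 128x96 frames; the second has a 10x10 patch that got brighter.
prev = [4] * (128 * 96)
curr = list(prev)
for y in range(40, 50):
    for x in range(60, 70):
        curr[y * 128 + x] = 12

print(changed_pixels(prev, curr), large_intruder(prev, curr))
```

A small animal would change few pixels and a person many, so the count gives a crude size estimate. Lighting changes that affect the whole frame would need separate handling.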

-Phil

The PropCAM uses two cogs for image acquisition: one for even lines, one for odd lines. I've added some code to select which lines to acquire in any given snapshot: even, odd, or both. This makes motion detection feasible, since it allows comparison of two successive images, interlaced into one. I'm not sure if this will help in your app, but your comments certainly inspired the program change!

-Phil

Update: Attached is an image illustrating the principle. The first exposure captured the even lines, when there was just a schematic in the field of view. The second exposure captured the odd lines, after a Sharpie pen tip had moved in. By comparing the even lines with the odd ones, the change (i.e. motion between frames) is readily apparent.
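The even/odd comparison can be sketched in Python like this. It assumes the interlaced frame is a list of rows in which even-numbered rows came from the first exposure and odd-numbered rows from the second. The function name `interlace_difference` is hypothetical.

```python
def interlace_difference(frame):
    """Compare each even row with the odd row below it.

    Returns one row of absolute gray-level differences per even/odd pair.
    """
    diffs = []
    for y in range(0, len(frame) - 1, 2):
        diffs.append([abs(a - b) for a, b in zip(frame[y], frame[y + 1])])
    return diffs


# Tiny example: only the last (odd) row saw an object move into view.
frame = [
    [5, 5, 5],  # even row, first exposure
    [5, 5, 5],  # odd row, second exposure
    [5, 5, 5],  # even row, first exposure
    [5, 0, 5],  # odd row, second exposure: dark object present
]
print(interlace_difference(frame))
```

Adjacent rows also differ wherever the scene has fine vertical detail, so a practical detector would probably want a threshold on these differences rather than treating any nonzero value as motion.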

Post Edited (Phil Pilgrim (PhiPi)) : 6/20/2006 9:09:33 PM GMT

▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔

OS-X: because making Unix user-friendly was easier than debugging Windows

links:

My band's website

Our album on the iTunes Music Store

I am interested in at least one.