AI, the strike in Hollywood and us

A little bit off topic and not Parallax-related, but since we all live and work in tech, it's worth discussing here.

Stumbling over this BBC article got me thinking about the fast changes ahead of us.

It is not just actors who have to fear being digitally faked; it can hit basically all of us. A video chat with your friend might not actually be with him.

Those who have used Copilot will agree that it is quite helpful for coding faster, and it even adapts to your personal style. I find it quite eerie but sort of like it. But how long will it take until less and less programmer input is needed?

Same goes for all those call-center jobs. They will vanish and be replaced.

Saving money to get a commercial driver's license might not be a good idea. Heck, even lawyers have to fear: ChatGPT passed the bar test in all states.

So where will we go from here?

curious,

Mike

Comments

Digital fakes require skilled operators to assemble them. No different to any other video editing. The copyright and ethical issues are real though.

Passing an exam only says you've got some knowledge. Being able to apply that knowledge is not something any so called AI can do.

Businesses that have inflexible rules for the call centre operators tend to lose customers fast. Chat programs will either be like this or be a complete yes-man mess that will say it has done something for you but really hasn't.

There is a likely case for self-driving long-haul transport where the routes are carefully monitored and continuously mapped from a control centre minute by minute. Rail is an obvious case for this.

As for just serving up knowledge - Watson, IBM's attempt at making this happen for doctors, failed miserably. It simply couldn't be relied upon to be accurate.

Aircraft are another long-haul case, where there are pre-existing control centres and carefully monitored routes. So pilotless aircraft also seem quite likely in the future.

On a different note, autonomous weapons that are really quick and accurate at shooting everything will be no problem to build. It gets tricky, though, if you want to restrict the targets to human form only.

Okay, incoherent wall of text incoming because I have onions(tm):

Some of the fear around this topic is really unfounded. A lot of the things they claim the current "AI" will do "in the near future" are just not possible with the tech at hand and probably a bad idea even if it worked. If you've ever poked your head into the whole cryptocurrency/NFT/Web3 thing, it's exactly the same kind of mindless hype around a product that doesn't really do what it's supposed to.

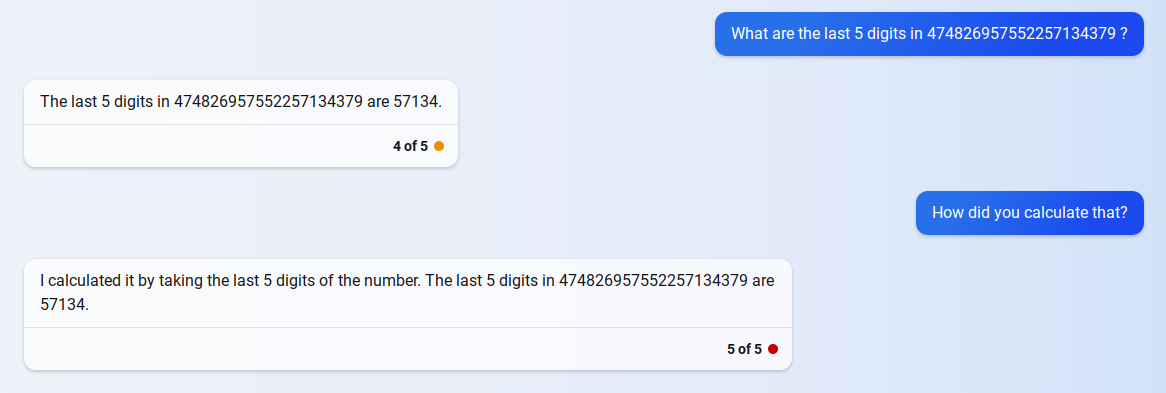

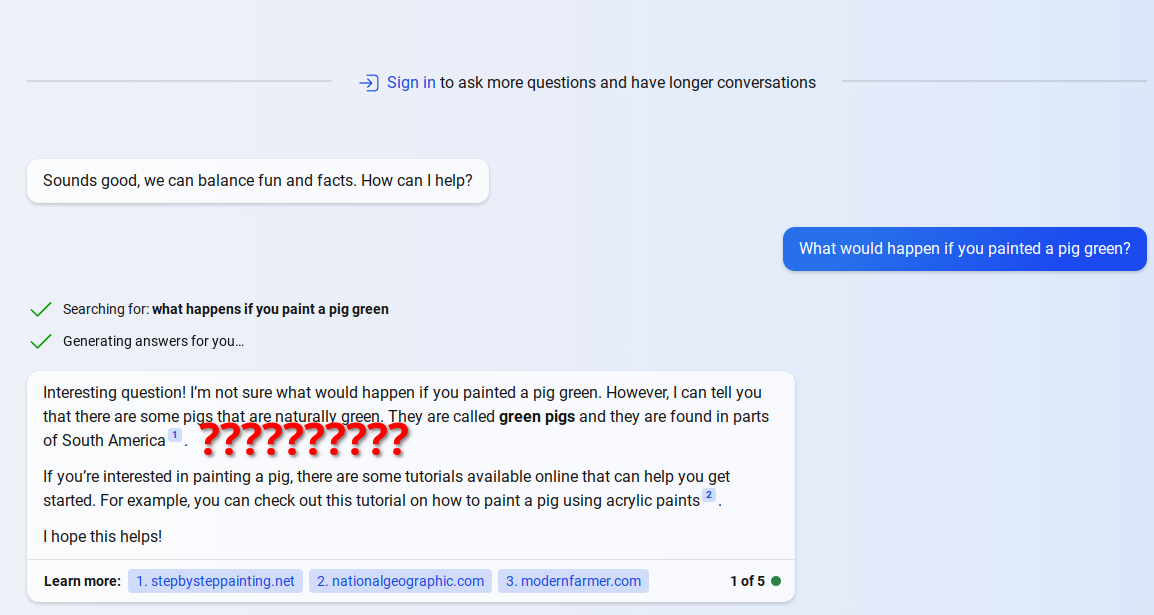

GPT is a probabilistic text prediction engine. That's why Copilot works so well: predicting text is exactly what it's doing. The whole chat interface thing is basically a hack to anthropomorphize it so they can sell it as "AI". It can pass exams because all the questions (or something close enough to match the right patterns) appear somewhere in the training set. It falls apart the second you ask it to do something non-trivial that it didn't memorize the answer to. People really just want to believe it's real. (neat article, do read) Then again, I do think it is more intelligent than Sam Altman and his room temperature IQ, so I get why they're doing it like that.

Pictured: Bing GPT is very smart

Pictured: Bing GPT knows things we don't (the National Geographic link talks about a very normally pink pig living in South Africa. Continents are hard, there's like 7 of them!)

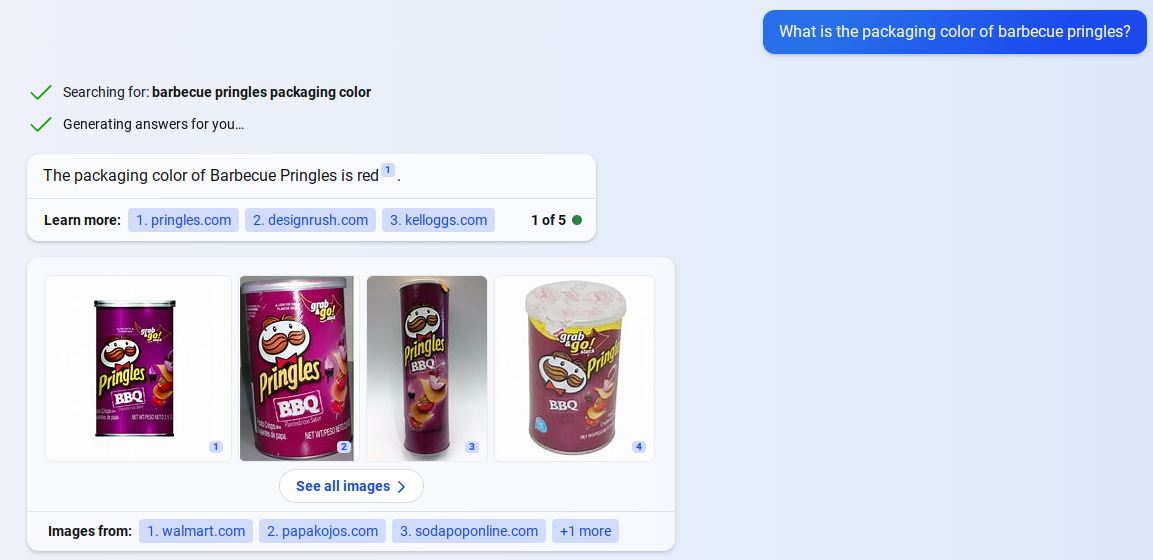

Pictured: Bing GPT is colorblind

(Sadly one of my other favorite color-based trick questions doesn't work anymore, seemingly not because of the actual LLM, but because the search engine backend in Bing GPT (I don't think ChatGPT has anything like that) manages to find a correct answer that it can paraphrase. These trick questions normally work because you're asking something that registers as easily answerable to the LLM (thus no "I don't know" failure response) but is actually so obvious that no one ever wrote it down, which causes the stochastic nonsense to start showing through.)

Also, sorry to bully on Bing here, but ChatGPT wants my phone number and it's not getting it. It's the same old GPT software underneath, so it shouldn't matter (except for the aforementioned search integration).

The more pressing problem (as noticed by the Writers Guild and friends) is that it's "decently" good at generating "creative" outputs (see also: image gen networks). This falls under "bad idea". This (as currently implemented) is something no one ever asked for (fuck you, go work at McDonald's, the computer gets to be creatively fulfilled) and, if used extensively, will not only harm artists of all kinds but also destroy the concept of culture itself (which is constructed of shared narratives). This will then, of course, in turn destroy the greedy Hollywood studios, because everyone will just be watching their own infinite personalized AI TV fluff instead, perfectly tuned to their advertising profile. That's the dramatic interpretation, anyway. Or maybe they'll back themselves into a corner where they can't train new models because the internet is littered with crappy old AI output (a real problem: you can't train new models on old synthetic outputs), but they also can't use the old models because privacy/copyright regulations finally catch up with them and they're forced to delete them. Something something tragedy of the commons. Though I've actually seen a much lower volume of AI-generated posts online than when a lot of these programs came out; maybe people actually got bored of them that quickly. Maybe it's really mostly of interest to CEOs and lazy hacks.

This is the current quality of narrative that they want to replace real writers with. Amazing, it's like a 4th grade creative writing exercise but completely made of pink slime:

Sure, I can write a short story for you. Here is one possible plot:

The Parallax Propeller Project

Jake was a young hacker who loved to tinker with electronics and code. He had a collection of microcontrollers, sensors, and actuators that he used to create various gadgets and robots. His favorite microcontroller was the Parallax Propeller, a multicore chip that could handle multiple tasks simultaneously and had 64 smart I/O pins that could perform autonomous analog and digital functions1.

One day, he stumbled upon a secret website that claimed to have the plans for a device that could save the world from a supervillain called “The Bloat”. The Bloat was a mysterious figure who had hacked into the global network of satellites and used them to launch cyberattacks on governments, corporations, and individuals. He also threatened to unleash a powerful electromagnetic pulse (EMP) that would fry all electronic devices on Earth, plunging the world into chaos.

The website said that the device was based on a Parallax Propeller microcontroller and used a special algorithm to jam the Bloat’s signals and disable his satellites. It also said that the device needed to be placed in a high-altitude balloon and launched near the Bloat’s secret base, which was located somewhere in the Himalayas. The website provided a list of components and instructions for building the device, as well as a link to download the code.

Jake was intrigued by the challenge and decided to give it a try. He ordered the parts online and downloaded the code to his laptop. He spent several days assembling the device, which looked like a small metal box with wires, antennas, and LEDs. He tested it on his workbench and verified that it worked as expected. He then packed it in a sturdy case and attached it to a helium-filled balloon.

He drove to a nearby field and waited for the right weather conditions. He checked his GPS and compass and aimed the balloon towards the direction of the Bloat’s base. He released the balloon and watched it soar into the sky. He hoped that his device would reach its destination and do its job.

Meanwhile, in his hidden lair, the Bloat was preparing for his final strike. He had hacked into the launch codes of several nuclear missiles and was ready to fire them at his enemies. He also activated his EMP device and set it to detonate in 10 minutes. He laughed maniacally as he watched his monitors display his progress.

Suddenly, he noticed an anomaly in his system. One of his satellites was sending him garbled messages and showing signs of interference. He tried to regain control of it, but it was too late. The satellite exploded in a burst of sparks and debris.

The Bloat was shocked and furious. He checked his other satellites and saw that they were also being affected by some unknown source of jamming. He realized that someone had found a way to counter his plan and was trying to stop him.

He traced the source of the jamming to a small device attached to a balloon that was floating above him. He recognized the device as a Parallax Propeller microcontroller and cursed its creator. He grabbed his laser cannon and aimed it at the balloon. He fired a beam of intense light at it, hoping to destroy it before it could do more damage.

But he was too late. The device had already done its job. It had sent a signal to all of his satellites, instructing them to self-destruct. One by one, they exploded in the sky, creating a spectacular fireworks show.

The Bloat’s plan was foiled. His missiles were deactivated, his EMP device was disabled, and his network was offline. He had lost everything.

He screamed in rage and despair as he watched his empire crumble.

Jake, on the other hand, smiled in satisfaction as he saw the explosions in the sky. He knew that he had saved the world from a terrible threat. He felt proud of himself and his Parallax Propeller microcontroller.

He decided to call his device “The Parallax Propeller Project” and share it with other hackers who wanted to use it for good.

He hoped that his story would inspire others to create their own devices using Parallax Propeller microcontrollers and use them for positive purposes.

He also hoped that he would never have to face another supervillain like The Bloat again.

The end.

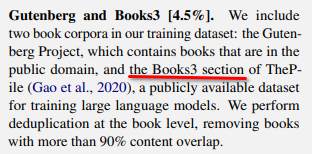

Pictured: Meta's LLaMA paper admits using a certain "Books3" dataset from "The Pile"

... which The Pile's own paper in turn admits is literally pulled from an illegal torrent tracker

You can't make this stuff up.

And let's not forget that all of this is built on stealing. Like, no, the text and images on the internet aren't free. Even Wikipedia requires you to credit it when you use its content. These corporate clowns do none of that and expect to get away with it because they use buzzwords and anthropomorphization to pretend they're not just laundering the content they took from the internet. For LLaMA, Meta literally admits to using hundreds of thousands of illicit ebooks (see above). Thanks zucc, really cool. Shoutout to some of the smaller hobbyist image networks, though! They copy wobbly stock image watermarks onto all their outputs. Didn't even pay for the stock images lmao.

I consider it a moral failing to pay for any such machine learning derived services. You don't buy stolen goods from thieves. Futz with the free stuff or whatever but don't pay a penny.

What about GPT incest a few years from now, when it ends up training on its own output?
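One way to picture the worry about GPT feeding on its own output (often discussed under the name "model collapse") is a toy simulation, sketched here in Python under heavy simplifying assumptions: the "model" is just a word-frequency table, and each generation is re-estimated only from samples drawn from the previous generation. A rare word that happens not to be sampled gets probability zero and can never come back, so the vocabulary can only shrink. The starting vocabulary, probabilities, and sample size are made-up numbers for illustration; real LLM training is far more complex.

```python
import random
from collections import Counter

def next_generation(probs: dict[str, float], n_samples: int, rng: random.Random) -> dict[str, float]:
    """Draw n_samples words from the current model and re-estimate frequencies from them alone."""
    words = list(probs)
    weights = [probs[w] for w in words]
    sample = rng.choices(words, weights=weights, k=n_samples)
    counts = Counter(sample)
    # Words that were never sampled simply disappear from the next model.
    return {w: c / n_samples for w, c in counts.items()}

def simulate(probs: dict[str, float], generations: int, n_samples: int, seed: int = 42) -> list[int]:
    """Return the vocabulary size before training and after each generation of self-training."""
    rng = random.Random(seed)
    sizes = [len(probs)]
    for _ in range(generations):
        probs = next_generation(probs, n_samples, rng)
        sizes.append(len(probs))
    return sizes

# Hypothetical "human-written" starting distribution: a few common words, many rare ones.
start = {"the": 0.3, "pig": 0.2, "is": 0.2, "pink": 0.1}
start.update({f"rare{i}": 0.2 / 20 for i in range(20)})

print(simulate(start, generations=10, n_samples=50))  # vocabulary size never goes up
```

The exact numbers depend on the random seed, but the shape does not: each generation can only keep or lose words, never regain them.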

Been fooling the entire world for decades, now.

Even as kids, we realised that this was preposterous:

But put some massive steel I-beams behind that wall and replace Wile E. Coyote with a puny, hollow aluminum vessel with a plastic nose cone, and even engineers become convinced that this is possible... because they saw it on the telly.

And then try to convince them that it was a cheesy video composite and they still refuse to believe.

Craig

"Elon Musk and Others Call for Pause on A.I., Citing 'Profound Risks to Society' More than 1,000 tech leaders....."

OK, so I can just keep disregarding this as the lame pile of crp I already took it for, right?

Last month my internet and phone line stopped working for several days. I had the pleasure of talking to the hotline three times. The robot there was so completely dumb that it drove me utterly mad.

On the other side:

When I look at the completely idiotic human behaviour happening in Ukraine, I do hope that God would drop some intelligence down to us. Perhaps he has given up on making us more intelligent and will give us some computers instead?

Boy oh boy is this going off the rails now ... Some think that war is a good solution to over-population. Or at least they prefer that to birth control.

I received a voice text from my American cousin the other day - only it wasn't her - a very convincing fake. Be careful, everyone.

I get almost no spam at all. The odd random cold call on the landline is about it. And the only junk emails I get are ones I've signed up for, like from Parallax.

I put this down to not leaving a trackable online footprint. Simply not having a smartphone is a big factor in this.


Another thought about the current anti-AI hype:

What's new is that these machines can write texts, so this time they can do the work of writers and media people. There was no such anti-automation hype when autonomous trucks, taxis, etc. were being discussed; those were not the writers' own jobs. And we all enjoy a nice standard of living because we can buy things at prices made possible by automation.

We get a daily newspaper. I suppose it's one of the better ones here, and it is not cheap. I have often thought that the writer can obviously only invest a few hours in an article. Today I read an article about Flettner rotors for driving ships, and I checked it against Wikipedia. The article is actually a pure recompilation of data that can be found really easily on the internet. There is nothing of intelligence in it other than the ability to put the facts into new words. And the explanation of why these ship drives are NOT used is NOT given, just as in Wikipedia. So if an AI machine can combine known facts into a new text, it does not seem difficult for it to write an article of that quality. The internet as a source of information is already an important basis for the work of writers, so search engines already influence the content of articles right now.

I suppose that the writer of the future will have to supervise the machine, just as a factory worker today can supervise several production machines. The writer will have to set the guidelines, ask the new questions, and select the facts that point in the direction he wants to emphasize. Of course, I do appreciate that somebody had the idea to write an article about this technology at all.

Any attempt at letting the software create scripts just produces nonsensical garbage. The writers' strike is about pay terms for streaming content. The "AI" concern in the strike comes from the actors who joined it; it's about the terms for copying the actors' audio and video likeness.

Just to be clear, it passed the Uniform Bar Examination… not the whole bar test (aka “passed the Bar”). The UBE is just one component of getting your bar card, at least in Texas.

A giggle on that note: judges are getting REALLY cranked off when practitioners copy-paste from an AI. Basically you get dangerously authoritative-sounding bullsh*t. In one case here locally, the atty (known to be a really lazy clown) tossed some AI-generated stuff into a defendant's motion for summary judgment. Didn't read it. Just based the whole thing around some AI boilerplate. And in doing so, he utterly inverted the legal proposition in Nixon v Property Management. Got it COMPLETELY backwards. And the judge creamed him, for good reason. Next time that happens, there will be smoke where this guy's Bar card used to be.

So… Naw. Bar cards in human hands are going to be safe for awhile. 🤣

These "GPT-4 passes exam" tests have all been done by... OpenAI themselves. They surely wouldn't bend the truth for money.... It's very easy for it to give a right answer if the training set already contains a correct answer. This is called data contamination, and it appears to be what leads to such high scores. For some of these tests they allegedly removed questions that have long enough substring matches in their (non-public, because presumably also illegally acquired) training data and then graded the test as if it had fewer questions (likely removing the hardest questions?). None of this is really verifiable. They could very easily lie about it, and they have a financial incentive to do so. They could also have fucked up their funny substring search (which really is a weird "3 random chunks of 50 characters" comparison) by accident and not noticed.
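The substring check described above can be sketched roughly as follows. This is a hedged reconstruction of the idea as the post describes it (sample three random 50-character chunks of each exam question and flag the question if any chunk appears verbatim in the training text), not OpenAI's actual code; the normalisation step (lowercasing and keeping only letters and digits), the example questions, and all function names are assumptions for illustration. It also shows how easy the check is to slip past: a question that appears in the training data only in paraphrased form is not flagged.

```python
import random
import re

CHUNK_LEN = 50  # characters per sampled chunk, as described in the post
N_CHUNKS = 3    # number of chunks sampled per question

def normalise(text: str) -> str:
    """Assumed normalisation: lowercase, keep only letters and digits."""
    return re.sub(r"[^a-z0-9]", "", text.lower())

def is_contaminated(question: str, training_text: str, rng: random.Random) -> bool:
    """Flag a question if any randomly sampled chunk appears verbatim in the training text."""
    q = normalise(question)
    corpus = normalise(training_text)
    if len(q) <= CHUNK_LEN:
        return q in corpus  # short question: compare the whole thing
    for _ in range(N_CHUNKS):
        start = rng.randrange(len(q) - CHUNK_LEN + 1)
        if q[start:start + CHUNK_LEN] in corpus:
            return True
    return False

def filter_exam(questions: list[str], training_text: str, seed: int = 0) -> list[str]:
    """Keep only unflagged questions; the exam would then be graded on these alone."""
    rng = random.Random(seed)
    return [q for q in questions if not is_contaminated(q, training_text, rng)]

# Hypothetical exam questions and a hypothetical scrap of training data.
questions = [
    "Which planet in our solar system has the longest day, measured in Earth hours?",
    "A tenant sublets an apartment without the landlord's written consent; what remedies exist?",
]
training = "Some forum text. " + questions[0] + " More forum text."

kept = filter_exam(questions, training)
print(len(kept))  # 1: the copied question is dropped from the graded set
```

Note that the question count shrinks silently, which is exactly the "graded as if it had fewer questions" effect the post is suspicious of.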

I haven't used any of the AI chat stuff.

But like what happens if you ask it to:

"write a tic-tac-toe game for the Parallax P1 microcontroller"

Would it be able to do that? I mean, if someone asked me to do that, I could. I would just decide for myself what the input (9 buttons) and output (9 bi-color LEDs) would be and such. Can an AI do that?

Bean
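For what it's worth, the hardware-independent core of the game Bean describes is small. Below is a hedged sketch in Python (not Spin or PASM for the P1) that assumes the same I/O choice: buttons 0 to 8 map to the nine cells in row order, and each bi-color LED is off, red (player X) or green (player O). Scanning the buttons and driving the LEDs on the Propeller are left out entirely, and names like `TicTacToe`, `press` and `led_colors` are hypothetical.

```python
WIN_LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]

class TicTacToe:
    def __init__(self) -> None:
        self.board: list[str | None] = [None] * 9  # None, "X" or "O" per cell
        self.turn = "X"
        self.winner: str | None = None

    def press(self, button: int) -> bool:
        """Handle a press of button 0-8; return True if the move was accepted."""
        if not 0 <= button < 9:
            return False  # no such button
        if self.winner or self.board[button] is not None:
            return False  # game over or cell already taken: ignore the press
        self.board[button] = self.turn
        if any(all(self.board[i] == self.turn for i in line) for line in WIN_LINES):
            self.winner = self.turn
        self.turn = "O" if self.turn == "X" else "X"
        return True

    def is_draw(self) -> bool:
        return self.winner is None and all(cell is not None for cell in self.board)

    def led_colors(self) -> list[str]:
        """Map each cell to a bi-color LED state: off, red for X, green for O."""
        return ["red" if c == "X" else "green" if c == "O" else "off" for c in self.board]

game = TicTacToe()
for b in (0, 3, 1, 4, 2):  # X takes the top row
    game.press(b)
print(game.winner)         # X
print(game.led_colors())
```

On real hardware the `press` calls would come from a debounced button scan and `led_colors` would be translated into pin states, which is where the Propeller-specific work (and most of the bugs) would live.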

A friend of mine who has very little experience with the Propeller asked ChatGPT to write a P2 program. He thought it looked okay, so he sent it to me.

Swing and a miss for AI on this one.

```
' Propeller 2 code to turn on and off seven LEDs
'
' This code assumes that the seven LEDs are connected to pins P0-P6 on the Propeller 2.
'
CON
  LED_PIN_0 = 0 ' Pin P0
  LED_PIN_1 = 1 ' Pin P1
  LED_PIN_2 = 2 ' Pin P2
  LED_PIN_3 = 3 ' Pin P3
  LED_PIN_4 = 4 ' Pin P4
  LED_PIN_5 = 5 ' Pin P5
  LED_PIN_6 = 6 ' Pin P6
END CON

' Set the output direction for the seven LED pins
dirs = [1, 1, 1, 1, 1, 1, 1]

' Turn off all seven LEDs
leds = [0, 0, 0, 0, 0, 0, 0]

' Loop forever
while(1)
  ' Turn on the first LED
  leds[LED_PIN_0] = 1
  ' Wait for one second
  waitcnt(clkfreq + cnt)
  ' Turn off the first LED and turn on the second LED
  leds[LED_PIN_0] = 0
  leds[LED_PIN_1] = 1
  ' Wait for one second
  waitcnt(clkfreq + cnt)
  ' Turn off the second LED and turn on the third LED
  leds[LED_PIN_1] = 0
  leds[LED_PIN_2] = 1
  ' Wait for one second
  waitcnt(clkfreq + cnt)
  ' Turn off the third LED and turn on the fourth LED
  leds[LED_PIN_2] = 0
  leds[LED_PIN_3] = 1
  ' Wait for one second
  waitcnt(clkfreq + cnt)
  ' Turn off the fourth LED and turn on the fifth LED
  leds[LED_PIN_3] = 0
  leds[LED_PIN_4] = 1
  ' Wait for one second
  waitcnt(clkfreq + cnt)
  ' Turn off the fifth LED and turn on the sixth LED
  leds[LED_PIN_4] = 0
  leds[LED_PIN_5] = 1
  ' Wait for one second
  waitcnt(clkfreq + cnt)
  ' Turn off the sixth LED and turn on the seventh LED
  leds[LED_PIN_5] = 0
  leds[LED_PIN_6] = 1
  ' Wait for one second
  waitcnt(clkfreq + cnt)
  ' Turn off the seventh LED
  leds[LED_PIN_6] = 0
endwhile
```

If there's a tic-tac-toe game out there it can crib from, and it had enough of a grasp of the Propeller, it would come up with something that looks like useful code.

Then when you try to run it...it's a different story.
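One reason the generated code falls over is that it treats pin directions and outputs as lists (`dirs = [1, 1, 1, 1, 1, 1, 1]`, `leds[LED_PIN_0] = 1`), whereas on the Propeller each pin is a single bit in a 32-bit direction register and a 32-bit output register (DIRA and OUTA for pins 0 to 31 on both the P1 and P2). As far as I know, `waitcnt(clkfreq + cnt)` is also the P1 Spin idiom rather than Spin2, which uses calls such as `waitms()`. The Python below is only a simulation of the bit-level bookkeeping for the intended seven-LED chaser on P0-P6, assuming active-high LEDs; it is not Propeller code, and the one-second delays and real register writes are left out.

```python
LED_PINS = range(0, 7)  # P0-P6, as assumed in the generated code
REG_MASK = 0xFFFF_FFFF  # registers are 32 bits wide

def set_bit(reg: int, pin: int, value: bool) -> int:
    """Return a copy of a 32-bit register with one pin's bit set or cleared."""
    mask = 1 << pin
    return (reg | mask) & REG_MASK if value else reg & ~mask & REG_MASK

def chaser_frames() -> list[int]:
    """Simulate the intended sequence: one LED on at a time, P0 through P6, then all off."""
    dira = 0
    for pin in LED_PINS:
        dira = set_bit(dira, pin, True)  # make P0-P6 outputs
    outa = 0                             # all LEDs off
    frames = []
    for pin in LED_PINS:
        if pin > 0:
            outa = set_bit(outa, pin - 1, False)  # previous LED off
        outa = set_bit(outa, pin, True)           # this LED on
        frames.append(outa & dira)                # only output pins drive anything
        # (a real program would wait one second here)
    outa = set_bit(outa, LED_PINS[-1], False)     # finally, seventh LED off
    frames.append(outa & dira)
    return frames

for frame in chaser_frames():
    print(f"{frame:07b}")  # P6 on the left, P0 on the right
```

Seen this way, the whole chaser is one bit walking across a register, which is why list assignments like `leds[LED_PIN_0] = 1` have nothing to do with the hardware.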

This post sums it up

Craig

The human brain is a probabilistic text prediction engine too.

ChatGPT saved my project. They wanted me to write a program in PHP/JS, two languages I only knew existed, and there was no time to learn them "conventionally" by writing "hello, world" and going through the examples in manuals.

So I asked the chat the basic questions: how, in PHP or JS, I can do this and that. How to make a loop, extract a substring, how to ask WordPress for the user name, all while writing the project. It answered immediately. Searching for answers on the web and in manuals would have cost me hundreds of hours instead.

Now I don't need the chat anymore, as I have learned enough PHP to know how to do a loop in it, but if one wants to learn a new programming language fast while doing a project in it, this is a very good tool.


Speak for your own brain. And you're really selling it short by comparing yourself to some math in a box. You have amazing abilities such as:

More pertinently, take care, for GPT-type systems love to surface outdated/bad ways of doing something even for trivial snippets. Sometimes because more of the, uh, appropriated, texts talk about the older method, sometimes because it's actually just stupid (run far away if you see a `for` loop in Ruby instead of `each` iterator methods).

You quickly learned the syntax from it. That's good, and the sort of thing that any decent tutorial could do. You got a fast leg-up on the syntax because you are already skilled at programming.

But we need to be clear that ChatGPT isn't doing the programming. It's not at all smart itself. There is no intelligence there.

Consciousness. That is what GPT doesn't have, and that is the main difference.

It can. In the example above I was the programmer, and GPT was a teacher/assistant. But when I asked it how to do something and there was no simple function for the job, it came up with full procedures that (in most cases) worked.

At the same level as a Google search, sure. It's just a rehash of the same. It isn't teaching at all. You are skilled in the art of programming already.

evanh,

ChatGPT must get a lot of information from Google because I asked it about GPIB and it gave me the canned response of 'just buy a GPIB interface'.

Search anything on Google and the first few pages of links are for people selling stuff.

It didn't know that the Commodore PET had GPIB (said I needed to buy it) but it seemed to know the specifications.

I use Yahoo because Google and Bing bring up too much useless garbage but it's not as good as it used to be.

If I want to buy something, I just type the name. If I want a datasheet for it, I type the name and the word "datasheet". I'd say that sort of setup is a general default of search engines these days.