Rotate 16 x 16 image algorithm - ChatGPT!!!

jrullan

Posts: 178

OK, this was a WTF moment for me. About a week ago I was struggling to implement an image rotation method in Spin2 that rotates a 16x16-pixel image by any angle. Then I watched a YouTube video about this OpenAI ChatGPT thing that even produces code, so I decided to try it out...

My question to the bot:

"describe an algorithm that rotates a 16 by 16 pixels image to any degree"

The answer:

One algorithm that can be used to rotate a 16 by 16 pixels image by any degree is the following:

1. Begin by converting the angle of rotation from degrees to radians. This is necessary because most mathematical functions, including those used for image manipulation, use radians as the unit for angles. The conversion formula is: radians = degrees * (pi / 180).

2. Next, calculate the center point of the image. This is the point around which the image will be rotated. The center point can be calculated by taking the average of the x and y coordinates of the top-left and bottom-right corners of the image. For example, if the top-left corner of the image has coordinates (x1, y1) and the bottom-right corner has coordinates (x2, y2), the center point will be at coordinates ((x1 + x2) / 2, (y1 + y2) / 2).

3. Create a new, blank image that is the same size as the original image. This will be the rotated image.

4. Iterate through each pixel in the original image. For each pixel, calculate its new position in the rotated image using the following formula:

   x_rotated = (x_original - center_x) * cos(angle) - (y_original - center_y) * sin(angle) + center_x

   y_rotated = (x_original - center_x) * sin(angle) + (y_original - center_y) * cos(angle) + center_y

5. Set the color of the pixel at the new position (x_rotated, y_rotated) in the rotated image to the color of the pixel at the original position (x_original, y_original) in the original image.

6. Repeat this process for all pixels in the original image.

7. When all pixels have been processed, the rotated image will be complete.

This algorithm works by first calculating the center point of the image, which serves as the pivot point for the rotation. It then iterates through each pixel in the original image, calculating its new position in the rotated image based on the angle of rotation and the distance from the center point. Finally, it sets the color of the pixel at the new position in the rotated image to the color of the pixel at the original position in the original image.

This algorithm can be implemented using a variety of programming languages and libraries, such as Python with the Pillow library or C++ with the OpenCV library.
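Read literally, the steps above translate into roughly the following Python sketch (Python only because the answer mentions it; `rotate_forward` is a hypothetical name). The answer never says what to do with fractional or out-of-frame coordinates, so rounding to the nearest pixel and discarding pixels that leave the 16x16 frame are assumptions here. With pixel coordinates 0..15, step 2 gives a center of (0 + 15) / 2 = 7.5.

```python
import math

def rotate_forward(src, degrees):
    """Forward mapping, following the answer's steps: push each source pixel to its new spot."""
    size = len(src)
    angle = math.radians(degrees)              # step 1: degrees -> radians
    cx = cy = (0 + (size - 1)) / 2             # step 2: center of (0,0)..(15,15) is 7.5
    dst = [[0] * size for _ in range(size)]    # step 3: blank image of the same size
    for y in range(size):                      # step 4: visit every source pixel
        for x in range(size):
            xr = (x - cx) * math.cos(angle) - (y - cy) * math.sin(angle) + cx
            yr = (x - cx) * math.sin(angle) + (y - cy) * math.cos(angle) + cy
            xi, yi = round(xr), round(yr)      # assumption: snap to the nearest pixel
            if 0 <= xi < size and 0 <= yi < size:  # assumption: drop pixels that leave the frame
                dst[yi][xi] = src[y][x]        # step 5: copy the color
    return dst
```

For a 90-degree turn every source pixel lands on a distinct target, but at 45 degrees some targets are never written: rotating an all-ones image leaves pixel (9, 9), right next to the middle, at 0.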

I then implemented it in Spin2 and it worked!

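    PUB rotate_16_image(orig, rotated, angle) | xc, yc, xr, yr, x, y, xa, ya
      ' orig and rotated each point to 16 WORDs: one WORD per row, one bit per pixel
      ' angle is in degrees (QSIN/QCOS are called with TwoPi = 360)
      WORDFILL(rotated, 0, 16)                   ' start from a blank image
      xc := 8                                    ' integer stand-in for the 7.5 center
      yc := 8
      repeat x from 0 to 15
        xa := x - xc
        repeat y from 0 to 15
          ya := y - yc
          xr := QCOS(xa, angle, 360) - QSIN(ya, angle, 360) + xc
          yr := QSIN(xa, angle, 360) + QCOS(ya, angle, 360) + yc
          if xr >= 0 and xr <= 15 and yr >= 0 and yr <= 15   ' skip pixels rotated off the 16x16 frame
            WORD[rotated][yr].[xr] := WORD[orig][y].[x]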
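To see why the routine needs a bounds check, here is a rough Python model of its index arithmetic. It assumes QCOS/QSIN behave like round(length * cos) and round(length * sin) for signed lengths; the real CORDIC results may differ by one count in some cases. The helpers `qcos`, `qsin` and `out_of_range_targets` are hypothetical names for illustration.

```python
import math

def qcos(length, angle, twopi):
    # Assumed model of Spin2 QCOS: length * cos(angle / twopi * 2*pi), rounded to an integer.
    return round(length * math.cos(2 * math.pi * angle / twopi))

def qsin(length, angle, twopi):
    # Assumed model of Spin2 QSIN, with the same rounding assumption.
    return round(length * math.sin(2 * math.pi * angle / twopi))

def out_of_range_targets(angle, xc=8, yc=8):
    """List ((x, y), (xr, yr)) for source pixels whose rotated position leaves 0..15."""
    bad = []
    for x in range(16):
        xa = x - xc
        for y in range(16):
            ya = y - yc
            xr = qcos(xa, angle, 360) - qsin(ya, angle, 360) + xc
            yr = qsin(xa, angle, 360) + qcos(ya, angle, 360) + yc
            if not (0 <= xr <= 15 and 0 <= yr <= 15):
                bad.append(((x, y), (xr, yr)))
    return bad

print(len(out_of_range_targets(45)), "source pixels leave the frame at 45 degrees")
```

Under this model, at 45 degrees source pixel (0, 0) lands on row -4, and even at 90 degrees the whole source row y = 0 is pushed to column 16, because the center of a 0..15 grid is 7.5 while the routine uses 8.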

I must admit I don't know how to feel about this. It's equal parts scary and surprising.

Comments

The question

The result:

    CON
      angle_degrees LONG
      center_x LONG
      center_y LONG
      image[16][16] LONG

    PUB RotateImage(angle, center_x, center_y, image)
      angle_radians = angle * PI / 180
      FOR y FROM 0 TO 15
        FOR x FROM 0 TO 15
          new_x = x * COS(angle_radians) - y * SIN(angle_radians)
          new_y = x * SIN(angle_radians) + y * COS(angle_radians)
          IF new_x >= 0 AND new_x < 16 AND new_y >= 0 AND new_y < 16
            image[new_x][new_y] = image[x][y]

It still has to learn.

Indeed. A few weeks ago a friend of mine, who is new to Spin, asked ChatGPT to write a simple Spin program. It needed a lot of touch-ups to work.

Still... wouldn't it be interesting to provide accurate Spin and PASM training data to ChatGPT so that it could give better suggestions?

The working code

Still needs work though.

ChatGPT showed its limitations in this example. In fact, you want to loop over the pixels of the NEW image, not the original, and map each one backwards into the original image. That way you won't get any holes in the output image. ChatGPT's suggestion will probably work OK for 90-degree rotations of squares, but not so well for "odd" angles.
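A minimal Python sketch of that backward mapping: for each destination pixel, apply the inverse rotation (by -angle) about the 7.5 center to find where it came from, then copy the nearest source pixel. The name `rotate_inverse` and the nearest-neighbor choice are assumptions for illustration; bilinear interpolation would give smoother edges at extra cost.

```python
import math

def rotate_inverse(src, degrees):
    """Backward mapping: every destination pixel pulls its value from the source."""
    size = len(src)
    cx = cy = (size - 1) / 2                 # center of a 0..15 grid is 7.5
    c = math.cos(math.radians(degrees))
    s = math.sin(math.radians(degrees))
    dst = [[0] * size for _ in range(size)]
    for yd in range(size):
        for xd in range(size):
            dx, dy = xd - cx, yd - cy
            # Inverse rotation: the transpose of the forward rotation matrix.
            xs = dx * c + dy * s + cx
            ys = -dx * s + dy * c + cy
            xi, yi = round(xs), round(ys)    # nearest-neighbor sampling
            if 0 <= xi < size and 0 <= yi < size:
                dst[yd][xd] = src[yi][xi]
    return dst
```

Because every destination pixel is visited exactly once, nothing inside the rotated area is left unwritten; pixels that map back to outside the source simply stay blank.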

ChatGPT doesn't seem to know about the CORDIC. I wonder how long that will last.

Brian's 'spinning fozzie image' video (uses CORDIC queues)

https://forums.parallax.com/discussion/comment/1385954/#Comment_1385954

Y'all are missing the obvious mistake of using sin/cos within the loop. Rotation can be expressed as an affine transformation matrix, so no per-pixel trig is required.

Hi @Wuerfel_21, I'm researching affine transformations, but how are matrix operations implemented in Spin2? Can you point me to some examples or references?

Thanks

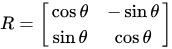

The rotation matrix is just
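For reference, this is the textbook 2D rotation by angle θ about the center (c_x, c_y), written so that it reproduces the per-pixel formulas in ChatGPT's answer term by term (an assumed standard form, not copied from the post):

$$
\begin{pmatrix} x_r \\ y_r \end{pmatrix}
=
\begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}
\begin{pmatrix} x - c_x \\ y - c_y \end{pmatrix}
+
\begin{pmatrix} c_x \\ c_y \end{pmatrix}
$$

Its inverse is simply its transpose (a rotation by -θ), which is what a backward mapping evaluates for each destination pixel.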

The given algorithm pointlessly recomputes this on every pixel. The matrix (i.e. the individual sin/cos calls) can obviously be factored out of the loop. Additionally, since the x/y space is stepped through in equal intervals, the matrix multiplication can be strength-reduced out of the loop, leaving only additions. Brain hurts right now, so no example code from me today.
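One way those two optimizations might look, sketched in Python rather than Spin2 and using backward mapping: cos and sin are computed once per image, and because stepping one pixel right or down changes the source coordinates by a constant, the loops only add. The name `rotate_incremental` is hypothetical; on the P2 you would probably do the same stepping in fixed-point integers, which is not shown here.

```python
import math

def rotate_incremental(src, degrees):
    """Backward-mapped rotation with trig hoisted and the matrix multiply strength-reduced."""
    size = len(src)
    cx = cy = (size - 1) / 2
    c = math.cos(math.radians(degrees))   # trig evaluated once per image
    s = math.sin(math.radians(degrees))
    dst = [[0] * size for _ in range(size)]
    # Source coordinates of destination pixel (0, 0) under the inverse rotation.
    row_xs = -cx * c - cy * s + cx
    row_ys = cx * s - cy * c + cy
    for yd in range(size):
        xs, ys = row_xs, row_ys
        for xd in range(size):
            xi, yi = round(xs), round(ys)  # nearest-neighbor sampling
            if 0 <= xi < size and 0 <= yi < size:
                dst[yd][xd] = src[yi][xi]
            xs += c                        # one step right: xs grows by cos
            ys -= s                        # ys shrinks by sin
        row_xs += s                        # one step down: xs grows by sin
        row_ys += c                        # ys grows by cos
    return dst
```

No trig and no multiplications remain inside the loops; the float accumulation error over 16 steps is far below half a pixel.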

Thanks!

It doesn't know whether it's correct or not. As others have noted, the response is a mash-up that adds up to a poor solution, which seems to be normal for these so-called AIs.

They're like a Google search, but a Google search is better because A) you don't expect it to be perfect, and B) it provides multiple matches for your perusal.

And you'll note that Google, who have spent megabucks on "AI" themselves, have been very quiet about what the tech can achieve.

IBM threw in the towel on this a couple of years back, BTW. The Watson engine was supposed to revolutionise medical knowledge, but it failed to impress the people who understood their own questions: the doctors! About all it did well was answer questions that had a single-word answer.