The standalone Propeller 2 XMM Demo has been removed from SourceForge since an improved (but incompatible) version of Propeller 2 XMM support is now incorporated in Catalina release 5.6.

Decided to give Catalina a try. Console says there is no P2 attached -- but there is, and Propeller Tool has no problem identifying and downloading to it. When I use "Download and Interact" I can see the P2 Eval reset, but nothing else happens. Suggestions?

@JonnyMac said:

Decided to give Catalina a try. Console says there is no P2 attached -- but there is, and Propeller Tool has no problem identifying and downloading to it. When I use "Download and Interact" I can see the P2 Eval reset, but nothing else happens. Suggestions?

Which 'Console'?

How are the DIP switches set on your P2? Also, try specifying the port explicitly. This is most easily done from the Catalina command line, using a command like (on Windows):

payload program.bin -b230400 -pX -Ivt100

or (on Linux):

payload program.bin -b230400 -pX -i

where 'program.bin' is your program (which can be a Spin binary) and X is your port.

If this works, but you prefer using Geany, you can add the -p option to the Geany download commands (see the Build->Set Build Commands menu option).

Also, when using Geany, make sure the -p2 option is included in the Catalina Options (see the Project Properties command).

I've had a bad week, I have a raging headache, I'm stressed about work -- I feel like hell. That said, I was probably being a little impatient. Since I didn't delete the installer nor the project directory, I reinstalled.

Take 2... I had to move the P2 Flash switch to OFF to get payload to work

@JonnyMac said:

I've had a bad week, I have a raging headache, I'm stressed about work -- I feel like hell. That said, I was probably being a little impatient. Since I didn't delete the installer nor the project directory, I reinstalled.

No worries. But maybe not the best time to be trying something new!

Take 2... I had to move the P2 Flash switch to OFF to get payload to work

Odd. That switch makes no difference for me - only the P59 switches should affect payload. EDIT: This may depend on the program loaded into FLASH!

Still, glad you made some progress. Let me know if you run into more issues.

I now have both the SD Card and Serial loaders working for Propeller 2 XMM programs, so Catalina XMM programs can now be used on the Propeller 2 as easily as they could on the Propeller 1 - Catalyst knows how to load them from SD Card, and to load them serially from the PC you just compile them as XMM programs (SMALL or LARGE) and then specify the XMM loader in your payload command (exactly as you did on the Propeller 1).

For example, to compile hello_world.c as an XMM SMALL program, load it into PSRAM on the P2 EDGE from the PC and execute it, you might use the following commands:

Interestingly, on the Propeller 2 the XMM serial loader is written entirely in C, whereas on the Propeller 1 it had to be written entirely in PASM. This makes life a lot easier!

This completes pretty much everything I had intended to include in the next release, so expect a new release sometime soon.

Ross.

EDIT: The catalina command to compile XMM programs on the P2 has now been simplified - if the platform supports XMM you no longer need to explicitly specify either any additional libraries or the cache - if these are required by the platform they will be added as needed. But you can override the default cache size (8kb) if you want to by explicitly specifying a cache size.

This post is a follow up to the previous one wherein we discussed the possibility (or lack thereof) of running multi-cog XMM on the Prop1:

@Wingineer19 said:

Do you anticipate any of the techniques used in the Prop2 XMM to backfeed into an enhanced Prop1 XMM? Like, for example, allowing more than one cog to execute XMM code on the Prop1?

@RossH said:

Not likely. On the Propeller 1 both Hub space and cog space are too limited. But with the Propeller, you should never say never!

@Wingineer19 said:

(Somewhere I thought I came across a writeup wherein the GCC developers claim to have enabled the Prop1 to execute XMM code on multiple cogs with as little as 2K cache for each cog).

@RossH said:

Not with the cache architecture I am currently using, which was originally the same one GCC used. But I didn't really stay in touch with the GCC progress, because Catalina was always so much more capable and GCC could never do what I needed. But I'll check to see if they later came up with a better cache architecture than the one which I adopted. If so, I could perhaps use it on both the Propeller 1 and the Propeller 2. If you or anyone else has a reference or link, please let me know.

While doing some digging I found this thread where you, David Betz, Cluso99, Heater, et al discussed Prop1 XMM multi-cog execution:

If I'm understanding this correctly, David said that it was possible to have several cogs running XMM with only a single cog dedicated as a cache driver? Or am I reading this wrong?

Multi-cog XMM with only a single caching cog would be impressive if it could provide reasonable performance. He said they tried it on more than one XMM cog and got decent results, but he didn't recommend running more than 4 XMM cogs due to hubram usage requirements. He also said that the best performance would be achieved using the Catalina equivalent of the XMM SMALL memory model.

If using a single caching cog can service multiple XMM cogs on the Prop1 then perhaps something similar could be done with the Prop2?

I think you are probably referring to this, from David Betz ...

If you give each XMM COG a 2K cache you can run four with 8K total hub memory consumed by the caches and still have three COGs left to run PASM or COGC drivers.

and also this ...

In propgcc you have a single COG managing access to external memory and up to 7 COGs running XMM code. So there is only one COG of overhead no matter how many XMM COGs your program uses.

But I think that while these statements may be true in theory, in practice things are not so easy. For instance, if you need to have a cog dedicated to managing XMM access, then each of the XMM execution cogs will need an independent cache to achieve reasonable execution speed - and that means having a cog dedicated to each cache (so a maximum of 3, not 7). Implementing a cache capable of servicing multiple XMM cogs is certainly possible, and would help slightly (in theory that might get you to 6 XMM execution cogs, but at the cost of significantly reduced execution speed) - but I am not aware this was ever done. If GCC prototyped such a solution then it was probably done using Hub RAM to emulate XMM RAM, and not using physical XMM RAM. Having 6 XMM cogs may be possible in such a simulated case, but this doesn't mean it is possible in practice with actual XMM hardware, and also with the typical need to use some cogs for other functions (HMI, SD access, clock, floating point etc). The practical limit could be as low as 2 or 3 XMM execution cogs. But I would be happy to be proven wrong on this, since it would mean I could simply adopt this as a solution!

@RossH said:

But I think that while these statements may be true in theory, in practice things are not so easy. For instance, if you need to have a cog dedicated to managing XMM access, then each of the XMM execution cogs will need an independent cache to achieve reasonable execution speed - and that means having a cog dedicated to each cache (so a maximum of 3, not 7).

Each XMM cog needs its own cache memory, but there's no reason each needs its own cache cog. If the cache misses are so common that the cache cog is a bottleneck, then the cache is too small. It should be relatively straightforward to have one cache cog serving the XMM execution cog requests into up to 7 different areas of HUB ram, and IIRC David did implement something like this. But XMM on P1 was pretty much dying by that point, so it didn't see much use.

Yes, I mentioned this would be possible - but this does not mean it is practical - such a cache could only service one cog at a time, so it potentially stalls all the XMM cogs for a significant amount of time every time a cache miss occurs for any one of them. Unless the caching cog itself can multitask. And while that might just be possible on the P2, I can't see it as being possible on the P1 - e.g. the P1 simply doesn't have enough cog RAM, there is no internal P1 stack, and P1 cog code is not re-entrant (but see footnote). Also, as you point out, XMM on the P1 is already quite slow, and implementing an even slower cache would probably kill it completely. However, I am not ruling out the possibility that David came up with a better cache architecture than the one we all used at the time, so if you (or anyone else) has a link to such code I'd be interested in looking at it, since I could probably plug it straight in to the P2 (where it might be practical).

Ross.

Footnote: Although the usual rule applies here - one should never say 'never' when it comes to the Propeller!

Maybe you will find something of interest within this repository...

Yes, I knew about that repository, but had never really investigated it since gcc died on the P1. However, I just had a quick look and it does seem that David Betz updated the cache so that a single cache cog could service multiple XMM cogs. But as I pointed out earlier, this is not a good solution - it means every XMM cog can potentially stall for as long as the single cache cog is busy - which can be a long time with slow (e.g. serial) XMM RAM. But actually it is worse than that - it also reduces the speed of the cache cog in responding to cache requests, since the cog must check 8 cache mail boxes every time it checks for a new cache request. So not only could this solution stall all XMM cogs while it was servicing each cache request, it would also take longer just to check for each new cache request than it does to service it - especially when the required data is already in the cache (which it normally is - that's the whole point of using a cache!). Finally, they also use the single cache cog to refresh SDRAM, which would slow the cache down even further, and potentially eliminate any speed advantage using SDRAM might have had.
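To make the polling cost concrete, here is a minimal sketch in plain C of a single cache server scanning one mailbox per cog. It is not code from Catalina or from the gcc repository; the mailbox_t layout, the poll_mailboxes name and the round-robin starting position are all assumptions made purely for illustration.

```c
#include <assert.h>
#include <stdint.h>

#define NUM_COGS 8  /* one mailbox per cog, as in the post above */

/* Hypothetical mailbox layout (an assumption, not the real one). */
typedef struct {
    volatile uint32_t request;  /* 0 = idle, otherwise the XMM address wanted */
    volatile uint32_t reply;    /* Hub address of the cached line, set by the server */
} mailbox_t;

/* Scan the mailboxes once, starting at *next for round-robin fairness.
 * Returns the index of the first pending request, or -1 if all are idle.
 * *checks is incremented for every mailbox examined, so the caller can
 * see how much work was done before any request was actually serviced. */
int poll_mailboxes(const mailbox_t box[NUM_COGS], int *next, unsigned *checks)
{
    assert(*next >= 0 && *next < NUM_COGS);
    for (int i = 0; i < NUM_COGS; i++) {
        int idx = (*next + i) % NUM_COGS;
        (*checks)++;
        if (box[idx].request != 0) {
            *next = (idx + 1) % NUM_COGS;  /* resume after this cog next time */
            return idx;
        }
    }
    return -1;
}
```

In this sketch an idle pass costs eight mailbox reads, and a request can wait behind up to seven other checks before it is even noticed. A cache cog dedicated to one XMM cog only ever has one mailbox to look at.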

So it is probably no wonder the gcc team saw no future for XMM on the P1. The performance of XMM under gcc must have been quite slow even if you only wanted one XMM cog.

I am guessing here, but I suspect they were thinking they could eventually get Linux running on the P1 using XMM - but in trying to do that they appear to have doomed the whole gcc initiative.

@RossH said:

Yes, I mentioned this would be possible - but this does not mean it is practical - such a cache could only service one cog at a time, so it potentially stalls all the XMM cogs for a significant amount of time every time a cache miss occurs for any one of them.

It's true that there is a slight performance gain from having multiple cache cogs, since they can multitask the administrative overhead, but they can't multitask the really time consuming part of the caching operation, which is reading the data from the external memory. For most applications the small performance gain probably isn't worth it, because no matter how many cache cogs you have, only one at a time can actually be accessing the serial RAM (or whatever the backing store is). I guess if you have multiple different XMM memories in play (flash, SD, PS RAM) then having one cache cog per external memory would make sense, but that's a pretty niche case and in any event is quite a different model to having one cache cog per XMM execution cog.

It is not a slight performance gain. It is potentially a massive performance gain. Precisely because it reduces the chance of needing "the really time consuming part of the caching operation, which is reading the data from the external memory". The whole point of caching is that for most cache requests, the data is already in the cache, and all you need is a reminder of exactly where. You don't need to read XMM to get it if it is already in Hub RAM, which it typically will be if you have recently accessed it. You only need to read XMM RAM on a cache miss, which - given a decent caching algorithm - is a rare event. And what makes it more likely that what you want is already in hub RAM is if the cache is dedicated to you, and is not polluted by requests from other cogs which are probably executing code elsewhere in memory.
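The argument here is the usual average access time calculation, which can be sketched in a few lines of C. The function name and the numbers in the note below are hypothetical and are not measurements from any Propeller system.

```c
#include <assert.h>

/* Average cost of one cache access, in cycles:
 *   hit cost + (fraction of accesses that miss) * (extra cost of a miss)
 * miss_rate is a fraction between 0.0 and 1.0. */
double avg_access_cycles(double hit_cycles,
                         double miss_penalty_cycles,
                         double miss_rate)
{
    assert(miss_rate >= 0.0 && miss_rate <= 1.0);
    return hit_cycles + miss_rate * miss_penalty_cycles;
}
```

With made-up figures of a 10 cycle hit and a 1000 cycle miss penalty, a 1% miss rate averages about 20 cycles per access, while a 10% miss rate averages about 110. That is why anything that raises the miss rate, such as other cogs evicting your lines, matters so much when the backing store is slow.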

You've totally missed my point: whether the data is in hub RAM or not is not a function of how many cache cogs you have, it's a function of how many cache areas you have in hub RAM. That is, you can certainly have multiple cache areas managed by one cog. Having multiple cache cogs to manage the cache area does very little to help with filling the cache, since only one cog at a time can actually manage that cache.

Ah, I think I may now understand why we're talking past each other: I take it that in your model the XMM execution cog has no cache logic at all, and to retrieve any data it has to talk to the cache cog? If so then yes, I see the problem. But I'm pretty sure that in David's multi-cog XMM that wasn't the case: the XMM cogs had a tiny bit of cache logic in them (enough to handle trivial cache hits) and only had to make requests to the cache cog on cache misses. This also cuts down on general inter-cog communication overhead.

@ersmith said:

Ah, I think I may now understand why we're talking past each other: I take it that in your model the XMM execution cog has no cache logic at all, and to retrieve any data it has to talk to the cache cog? If so then yes, I see the problem. But I'm pretty sure that in David's multi-cog XMM that wasn't the case: the XMM cogs had a tiny bit of cache logic in them (enough to handle trivial cache hits) and only had to make requests to the cache cog on cache misses. This also cuts down on general inter-cog communication overhead.

I'd have to check the code in more detail to make sure - it is possible that we use basically the same logic (I recognized parts of it) but that Catalina has less of that logic in the XMM cog than gcc did. But (as is always the case) there are tradeoffs to be made here - more cache logic in the XMM cog means less room for other things, which means you have to implement some common operations using code stored in Hub RAM rather than Cog RAM. So while it sounds good in theory, you have to ensure you gain enough not to just lose it all again because all you end up doing is caching common operations that could have been in the XMM cog anyway.

But that is not the main issue here. In Catalina the XMM cog goes to the cache cog whenever it needs to change page. This is a fairly trivial operation for one XMM cog, but not for eight XMM cogs. However, it does mean multiple XMM cogs can share the same cache, which means there is no problem if two cogs want to write to the same page, since there is only ever one copy of the page in Hub RAM. If each XMM cog implements its own cache logic, then doesn't that also mean that each XMM cog must have its own cache? And in that case, what happens when two XMM cogs write to the same page?

It's been a long time since I looked at the code, but I think cache writes also have to go through the central cog. I believe the code was optimized for the (fairly common) case where mostly read-only code is stored in XMM, with only occasional writes. And I think the cache data was in hub RAM and shared by all the XMM cogs, with some kind of locking mechanism so that the central cache cog would not evict cache lines that were in use for execution. But as I say it's been a while since I looked at the code, I may be misremembering. The main thing I remember is that the round-trip to a mailbox was expensive enough that keeping the fast case (cache hit for a read) in the local cog saved a significant amount of time, as well as freeing up cogs for other uses (having 2 cogs, one execution and one cache, dedicated to each hardware thread is pretty expensive).
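A rough sketch of that split, in plain C rather than PASM: the read path checks a tag locally and only falls back to a slow fill on a miss. This is not the gcc or Catalina code. The direct-mapped layout, the 64-byte line size, the 2K total and all of the names are assumptions, and the "cache cog" is reduced to a function that copies from a byte array standing in for external RAM.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define LINE_BITS 6                    /* 64-byte lines (assumed) */
#define LINE_SIZE (1u << LINE_BITS)
#define NUM_LINES 32                   /* 32 x 64 bytes = 2K cache (assumed) */

typedef struct {
    uint32_t tag[NUM_LINES];             /* XMM line number held in each slot */
    uint8_t  valid[NUM_LINES];
    uint8_t  data[NUM_LINES][LINE_SIZE]; /* stands in for the Hub RAM cache area */
    unsigned hits, misses;
} cache_t;

/* Slow path. On real hardware this would be a mailbox round trip to the
 * cache cog, which would then read the external memory. */
static void fill_line(cache_t *c, unsigned slot, uint32_t line,
                      const uint8_t *xmm)
{
    memcpy(c->data[slot], xmm + ((size_t)line << LINE_BITS), LINE_SIZE);
    c->tag[slot] = line;
    c->valid[slot] = 1;
}

/* Read one byte. The tag compare is the fast path that would live in the
 * execution cog; only a miss goes to the slow path. */
uint8_t xmm_read_byte(cache_t *c, const uint8_t *xmm, uint32_t addr)
{
    assert(c != NULL && xmm != NULL);
    uint32_t line = addr >> LINE_BITS;
    unsigned slot = line % NUM_LINES;
    if (c->valid[slot] && c->tag[slot] == line) {
        c->hits++;
    } else {
        c->misses++;
        fill_line(c, slot, line, xmm);
    }
    return c->data[slot][addr & (LINE_SIZE - 1)];
}
```

Writes are deliberately left out. As discussed above, they would still need to be coordinated through one place, and the sketch has no locking to stop a line being evicted while another cog is executing from it.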

Yes, all writes need to be coordinated, and the easiest way to do that is have them all performed by the same cog. Perhaps that's where the confusion lies - it depends whether you are looking at the write code or the read code. For Catalina both are done by essentially the same code, but for gcc perhaps reads are done by the XMM cog (except for cache misses) and writes by the cache cog. That would make some sense. And it means you could have multiple caches for reads, as long as you don't also need to write to those pages. Which really means you can have faster code access, but not data access. However, there is another downside to this solution - if you have multiple read caches then each cache would have to be very small - i.e. 1/8th of Catalina's cache size. This would reduce the performance of the multi-cache solution. So it is not clear to me that having multiple caches would necessarily end up being faster. Most likely it would depend on the code being executed.

However, this has been a useful discussion. XMM on the P1 is unlikely to be revisited now that gcc is essentially dead, but I now have a better idea of what might be the best solution for the P2. The current solution - which will be released shortly - is the Catalina P1 solution, but the P2 architecture offers many ways to improve that solution without necessarily going down the gcc path.

Ha! I just found a bug that had eluded me for a couple of days - I couldn't get the VGA and USB keyboard and mouse drivers working in XMM SMALL mode (they worked fine in all the other modes, including XMM LARGE mode). Turned out to be a missing orgh statement, and it wasn't even in my code - it was in some PASM code I imported. The program compiled but wouldn't run.

Now only some documentation updates to go before the release.

I sometimes wonder how new users ever manage to get to grips with programming the Propeller in either PASM or Spin. There are just so many 'gotchas'.

The main purpose of this release is to add XMM support to the Propeller 2. Only the PSRAM on the P2 Edge is currently supported as XMM RAM, but more will be added in future (e.g. HyperRAM).

Here is the relevant extract from the README.TXT:

RELEASE 5.6

New Functionality
-----------------

1. Catalina can now build and execute XMM SMALL and XMM LARGE programs on
   Propeller 2 platforms with supported XMM RAM. Currently the only supported
   type of XMM RAM is PSRAM, and the only supported platform is the P2_EDGE,
   but this will expand in future. To compile programs to use XMM RAM, simply
   add -C SMALL or -C LARGE to a normal Catalina compilation command.
   For example:

      cd demos
      catalina -p2 -lci -C P2_EDGE -C SMALL hello_world.c

   or

      cd demos
      catalina -p2 -lci -C P2_EDGE -C LARGE hello_world.c

   Note that a 64kb XMM loader is always included in the resulting binaries,
   so the file sizes will always be at least 64kb.

2. The payload serial program loader can now load XMM programs to the
   Propeller 2. Loading XMM programs requires a special XMM serial loader to
   be built, which can be built using the 'build_utilities' script. Currently,
   the only supported type of XMM RAM is PSRAM, and the only supported P2
   platform is the P2_EDGE. The script will build SRAM.bin (and XMM.bin,
   which is simply a copy of SRAM.bin). These load utilities can then be
   used with payload by specifying them as the first program to be loaded
   by the payload command, and the XMM program itself as the second program
   to be loaded.
   For example:

      cd demos
      catalina -p2 -lc -C P2_EDGE -C LARGE hello_world.c
      build_utilities <-- follow the prompts, then ...
      payload xmm hello_world.bin -i

3. The Catalyst SD loader can now load XMM programs from SD Card on the
   Propeller 2. Just build Catalyst as normal, specifying a supported P2
   platform to the 'build_all' script. Currently, the only type of XMM RAM
   supported is PSRAM, and the only supported P2 platform is the P2_EDGE.
   For example:

      cd demos\catalyst
      build_all P2_EDGE SIMPLE VT100 CR_ON_LF

   Note that now that Catalyst and its applications can be built as XMM
   SMALL or LARGE platforms on the Propeller 2, it is no longer possible to
   specify a memory model on the command line when building for Propeller 2
   platforms. This is because some parts of Catalyst MUST be built using a
   non-XMM memory model. However, it is possible to rebuild the non-core
   component of Catalyst as XMM SMALL or XMM LARGE programs. See the
   README.TXT file in the demos\catalyst folder for more details.

4. New functions have been added to start a SMALL or LARGE XMM kernel:

      _cogstart_XMM_SMALL();
      _cogstart_XMM_SMALL_cog(g);
      _cogstart_XMM_LARGE();
      _cogstart_XMM_LARGE_cog();
      _cogstart_XMM_SMALL_2();
      _cogstart_XMM_SMALL_cog_2();
      _cogstart_XMM_LARGE_2();
      _cogstart_XMM_LARGE_cog_2();

   These are supported on the Propeller 2 only. The '_2' variants accept
   two arguments rather than just one, to allow the passing of argc and
   argv to the program to be started.

   Refer to 'catalina_cog.h' for details of parameters etc.

5. The Cache and Cogstore cogs are now explicitly registered and can now
   therefore be listed by a C program interrogating the register (e.g. by
   the demo program ex_registry.c)

6. The p2asm PASM assembler now allows conditions to be specified as "wc,wz"
   or "wz,wc" which both mean the same as "wcz". It also does more rigorous
   checking about which instructions should allow such conditions.

7. OPTIMISE can now be used as a synonym for OPTIMIZE. Both enable the
   Catalina Optimizer when used in CATALINA_DEFINE or as a parameter to
   one of the build_all scripts. For Catalina command-line use you should
   use -C OPTIMISE or -C OPTIMIZE or the command-line argument -O5.

8. COLOUR_X can now be used as a synonym for COLOR_X, where X = 1, 4, 8 or
   24. Both specify the number of colour bits to be used in conjunction
   with the VGA HMI options. For example, on the Catalina command-line you
   could use either -C COLOUR_24 or -C COLOR_24.

9. The build_catalyst script now looks for Catalyst in the current directory
   first, then the user's home directory (for folder 'demos\catalyst') before
   finally resorting to building Catalyst in the Catalina installation
   directory.

10. The build_utilities script now looks for utilities to build in the current
    directory first, then the user's home directory (for folder 'utilities')
    before finally resorting to building the utilities in the Catalina
    installation directory.

11. There are now more pre-built demos of Catalyst. In the main Catalina
    directory you will find:

    P2_DEMO.ZIP     - compiled to suit either a P2_EVAL or a P2_EDGE without
                      PSRAM, using a serial HMI on pins 62 & 63 at 230400
                      baud. Built to use a VT100 emulator, or payload with
                      the -i and -q1 options.

    P2_EDGE.ZIP     - compiled to suit a P2_EDGE with 32 MB of PSRAM, using
                      a serial HMI on pins 62 & 63 at 230400 baud. Built to
                      use a VT100 emulator, or payload with the -i and -q1
                      options.

    P2_EVAL_VGA.ZIP - compiled to suit a P2_EVAL using a VGA HMI with VGA
                      base pin 32 and USB base pin 40.

    P2_EDGE_VGA.ZIP - compiled to suit a P2_EDGE with 32 MB of PSRAM using a
                      VGA HMI with VGA base pin 0 and USB base pin 8.

    In all cases, unzip the ZIP file to an SD Card, and set the pins on the
    P2 board to boot from the SD Card.

The XMM demos for the P2 EDGE are now included in the release, in the files P2_EDGE.ZIP (using a serial HMI) and P2_EDGE_VGA.ZIP (using a VGA HMI).

Here is the XMM.TXT from the Serial HMI version (P2_EDGE.ZIP):

This version of Catalyst contains various demo programs compiled to run in
XMM RAM on a P2 EDGE with 32 MB of PSRAM installed. They are compiled to use
a Serial HMI on pins 62 & 63 at 230400 baud using a VT100 emulator or
payload using the -i and -q1 options.

The XMM files are:

   xs_lua.bin  \
   xs_luac.bin  | the Lua interpreter, compiler and execution engine,
   xs_luax.bin /  compiled to run in XMM SMALL mode.

   xl_lua.bin  \
   xl_luac.bin  | the Lua interpreter, compiler and execution engine,
   xl_luax.bin /  compiled to run in XMM LARGE mode.

   xl_vi.bin    - the vi text editor, compiled to run in XMM LARGE mode,
                  which allows files larger than Hub RAM to be edited.

The XMM SMALL versions were compiled in the usual Catalyst demo folders,
using the command:

   build_all P2_EDGE SIMPLE VT100 CR_ON_LF USE_COLOR OPTIMIZE MHZ_200 SMALL

The XMM LARGE versions were compiled in the usual Catalyst demo folders,
using the command:

   build_all P2_EDGE SIMPLE VT100 CR_ON_LF USE_COLOR OPTIMIZE MHZ_200 LARGE

I found the issue that was holding up the Linux release, so Catalina release 5.6 is now complete and available on SourceForge for both Windows and Linux here.

The issue was to do with payload, but can be easily fixed by adjusting payload's timeouts on the command line. Now that Catalina supports XMM on the P2, uploading multiple files in one payload command is routine on the P2 (as it already was on the P1), and it turns out the default interfile timeout was not long enough. I am not sure yet whether this is just my setup or a general issue, so instead of changing it I have just added a suitable note to the README.Linux file:

Under Linux, payload's various timeouts may need to be extended, especially
when loading multiple files (e.g. when loading XMM programs). Try adding
one or more of the following to the payload command (refer to the Catalina
Reference Manual for details):

   -f 1000   <-- extend interfile timeout to 1000ms
   -t 1000   <-- extend read timeout to 1000ms
   -k 100    <-- extend interpage timeout to 100ms
   -u 100    <-- extend reset time to 100ms

For example:

   payload -f 500 -t 1000 -u 100 -k 100 SRAM hello_world -i

Once the payload command is working, adjust the timeouts one at a time
to identify the timeout(s) that need adjusting and a suitable value.

In my case, I only needed to extend the interfile delay to 500ms (i.e. adding -f 500 ) to make payload work reliably.

Ross.

P.S. While testing this, I noticed a minor issue when compiling small programs (like hello_world.c) that I had not noticed when compiling programs that were already megabytes in size (which I was doing to test the XMM!). The issue is that the code size shown by the compiler can be 512kb too large in some cases - for instance:

The answer is that the program is actually only a few kilobytes. But when compiling in LARGE mode, the addressing for the XMM RAM must start above the Hub RAM, and the compiler is reporting the program size as if it started from address zero, which includes the entirety of the 512kb Hub RAM, which is not used for code at all.

It makes no difference to the actual compiler output - the file is correct, it is only the message that is in error. I will fix this in the next release.

Just a quick note about the xl_vi demo program included in release 5.6 - xl_vi is just Catalyst's usual vi text editor, but compiled to run on the P2_EDGE with 32Mb of PSRAM using the new P2 XMM LARGE mode, which means it can handle much larger text files. I had tested it with files large enough to show it could indeed handle files that the previous versions of vi couldn't, but I had not tested it with a really big text file.

I just did so using a 2MB text file, and yes it does work ... but it takes over 3 minutes to load the file into PSRAM. Once it is loaded then editing it is pretty snappy - until you have to save it back to disk - but since there is no progress indication on the load or save operations it would be very easy to assume the program had simply crashed and the operation would never complete. But it does.

Not directly about Catalina itself, but a new version of the stand-alone VTxxx terminal emulator that Catalina also now uses has been released. This program is actually part of the Ada Terminal Emulator package, which is available here.

This will be included in the next Catalina release, but you can download it now from the link provided and just overwrite comms.exe in Catalina's bin folder with the new version in this package.

The changes are:

- Comms has an improved ymodem implementation and dialog box:

* A ymodem transfer can now be aborted in the middle of a send or

receive operation.

* The dialog box now responds to Windows messages. This stops Windows

complaining that the application is not responding during a long

ymodem transfer.

* The Abort button now attempts to abort both the local and the remote

ymodem applications if a transfer is in progress. Previously, it

could only abort the remote ymodem application and only while the

local ymodem application was not executing.

* The Start and Done buttons now check and give an error message if a

ymodem transfer is in progress. Previously, they simply performed their

respective actions, which could lead to a comms program lock up.

* Opening the dialog box no longer crashes comms if no port has been

opened.

Hello Community - I've been using SimpleIDE to program P1 boards and I'd like to try out a P2 board so I'm investigating Catalina.

I've been working my way through the Getting Started PDFs and the reference manuals, but I've hit a road block.

Using the CLI, payload hello_world_3 -i works as expected, but using Geany, Build, Download and interact, I get

Using Propeller (version 1) on port COM12 for download

Error loading program

I'm not sure what I'm missing. Guidance please.

The Geany project files included in the Catalina demo folder are mostly set up for a Propeller 1. If you have a Propeller 2, you will probably need to add -p2 to the Catalina Options (accessible via the Project->Properties menu item). You may also need to modify the Baudrate to 230400 (it is probably set to 115200 if it was originally a P1 project).

Finally, if you have built a P1 executable, be sure to do a clean and then build the project again for the P2 - payload may default to downloading the P1 .binary rather than the P2 .bin file if both exist in the same directory.

Let me clarify. I haven't started with the P2 yet. I like to make one change at a time, so moving from SimpleIDE to Catalina is my first change. The P2 will come after I'm comfortable with Catalina.

While working through the Getting Started tutorials, I hit this roadblock.

Using the CLI, payload hello_world_3 -i works as expected, but using Geany, Build, Download and interact, I get

Using Propeller (version 1) on port COM12 for download

Error loading program

The error message is kind of lacking in detail and I don't know where to start.

That message means the loader didn't get the expected response from the Propeller. It may be a timeout issue.

To increase the timeout, and also get more information about what's going on, use the Build->Set Build Commands menu item and add -d -t 1000 to the "Download and Interact" command.

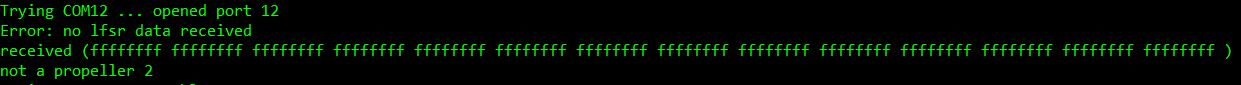

Thanks Ross, I'm using Windows 10. The modified command certainly changed things, however now I get

and at end of the message I get No Propeller found on any port. I do have a P1 Activity Board WX on that port.

I see that it is looking for a P2. From the Getting Started, on page 2 it says that P1 is the default and that I would need to add the -P2 option to each Catalina command. I checked the Build->Set Build Commands but I don't see **-P2** anywhere.

Comments

The standalone Propeller 2 XMM Demo has been removed from SourceForge since an improved (but incompatible) version of Propeller 2 XMM support is now incorporated in Catalina release 5.6.

Ross.

Decided to give Catalina a try. Console says there is no P2 attached -- but there is, and Propeller Tool has no problem identifying and downloading to it. When I use "Download and Interact" I can see the P2 Eval reset, but nothing else happens. Suggestions?

Which 'Console'?

How are the DIP switches set on your P2? Also, try specifying the port explicitly. This is most easily done from the Catalina command line, using a command like (on Windows):

payload program.bin -b230400 -pX -Ivt100

or (on Linux):

payload program.bin -b230400 -pX -i

where 'program.bin' is your program (which can be a Spin binary) and X is your port.

If this works, but you prefer using Geany, you can add the -p option to the Geany download commands (see the Build->Set Build Commands menu option).

Also, when using Geany, make sure the -p2 option is included in the Catalina Options (see the Project Properties command)

Ross.

None of that worked. Uninstalled.

Hello Jon.

A shame. Next time you try it, give me a bit more information and I am sure we can get it working. I have a P2 Eval board and everything works fine.

Also, next time be aware that you don't need to use payload. Catalina binaries on the P2 can be loaded using any P2 loader that works for you.

I've had a bad week, I have a raging headache, I'm stressed about work -- I feel like hell. That said, I was probably being a little impatient. Since I didn't delete the installer nor the project directory, I reinstalled.

Take 2... I had to move the P2 Flash switch to OFF to get payload to work

No worries. But maybe not the best time to be trying something new!

Odd. That switch makes no difference for me - only the P59 switches should affect payload. EDIT: This may depend on the program loaded into FLASH!

Still, glad you made some progress. Let me know if you run into more issues.

Ross.

Just a progress update ...

I now have both the SD Card and Serial loaders working for Propeller 2 XMM programs, so Catalina XMM programs can now be used on the Propeller 2 as easily as they could on the Propeller 1 - Catalyst knows how to load them from SD Card, and to load them serially from the PC you just compile them as XMM programs (SMALL or LARGE) and then specify the XMM loader in your payload command (exactly as you did on the Propeller 1).

For example, to compile hello_world.c as an XMM SMALL program, load it into PSRAM on the P2 EDGE from the PC and execute it, you might use the following commands:

Interestingly, on the Propeller 2 the XMM serial loader is written entirely in C, whereas on the Propeller 1 it had to be written entirely in PASM. This makes life a lot easier!

This completes pretty much everything I had intended to include in the next release, so expect a new release sometime soon.

Ross.

EDIT: The catalina command to compile XMM programs on the P2 has now been simplified - if the platform supports XMM you no longer need to explicitly specify either any additional libraries or the cache - if these are required by the platform they will be added as needed. But you can override the default cache size (8kb) if you want to by explicitly specifying a cache size.

Hi @RossH,

This post is a follow up to the previous one wherein we discussed the possibility (or lack thereof) of running multi-cog XMM on the Prop1:

While doing some digging I found this thread where you, David Betz, Cluso99, Heater, et al discussed Prop1 XMM multi-cog execution:

https://forums.parallax.com/discussion/153332/more-than-one-cogs-running-xmm-code

If I'm understanding this correctly, David said that it was possible to have several cogs running XMM with only a single cog dedicated as a cache driver? Or am I reading this wrong?

Multi-cog XMM with only a single caching cog would be impressive if it could provide reasonable performance. He said they tried it on more than one XMM cog and got decent results, but he didn't recommend running more than 4 XMM cogs due to hubram usage requirements. He also said that the best performance would be achieved using the Catalina equivalent of the XMM SMALL memory model.

If using a single caching cog can service multiple XMM cogs on the Prop1 then perhaps something similar could be done with the Prop2?

I think you are probably referring to this, from David Betz ...

and also this ...

But I think that while these statements may be true in theory, in practice things are not so easy. For instance, if you need to have a cog dedicated to managing XMM access, then each of the XMM execution cogs will need an independent cache to achieve reasonable execution speed - and that means having a cog dedicated to each cache (so a maximum of 3, not 7). Implementing a cache capable of servicing multiple XMM cogs is certainly possible, and would help slightly (in theory that might get you to 6 XMM execution cogs, but at the cost of significantly reduced execution speed) - but I am not aware this was ever done. If GCC prototyped such a solution then it was probably done using Hub RAM to emulate XMM RAM, and not using physical XMM RAM. Having 6 XMM cogs may be possible in such a simulated case, but this doesn't mean it is possible in practice with actual XMM hardware, and also with the typical need to use some cogs for other functions (HMI, SD access, clock, floating point etc). The practical limit could be as low as 2 or 3 XMM execution cogs. But I would be happy to be proven wrong on this, since it would mean I could simply adopt this as a solution!

Ross.

Each XMM cog needs its own cache memory, but there's no reason each needs its own cache cog. If the cache misses are so common that the cache cog is a bottleneck, then the cache is too small. It should be relatively straightforward to have one cache cog serving the XMM execution cog requests into up to 7 different areas of HUB ram, and IIRC David did implement something like this. But XMM on P1 was pretty much dying by that point, so it didn't see much use.

Yes, I mentioned this would be possible - but this does not mean it is practical - such a cache could only service one cog at a time, so it potentially stalls all the XMM cogs for a significant amount of time every time a cache miss occurs for any one of them. Unless the caching cog itself can multitask. And while that might just be possible on the P2, I can't see it as being possible on the P1 - e.g. the P1 simply doesn't have enough cog RAM, there is no internal P1 stack, and P1 cog code is not re-entrant (but see footnote). Also, as you point out, XMM on the P1 is already quite slow, and implementing an even slower cache would probably kill it completely. However, I am not ruling out the possibility that David came up with a better cache architecture than the one we all used at the time, so if you (or anyone else) has a link to such code I'd be interested in looking at it, since I could probably plug it straight in to the P2 (where it might be practical).

Ross.

Footnote: Although the usual rule applies here - one should never say 'never' when it comes to the Propeller!

Hi @RossH,

I found this repository for the propgcc on github.

I assume it's the latest even though it doesn't appear to have been updated for several years:

https://github.com/parallaxinc/propgcc

Just scrolling through the file descriptions on the webpage I see several mentions of multiple xmmc cogs.

Maybe you will find something of interest within this repository...

Yes, I knew about that repository, but had never really investigated it since gcc died on the P1. However, I just had a quick look and it does seem that David Betz updated the cache so that a single cache cog could service multiple XMM cogs. But as I pointed out earlier, this is not a good solution - it means every XMM cog can potentially stall for as long as the single cache cog is busy - which can be a long time with slow (e.g. serial) XMM RAM. But actually it is worse than that - it also reduces the speed of the cache cog in responding to cache requests, since the cog must check 8 cache mail boxes every time it checks for a new cache request. So not only could this solution stall all XMM cogs while it was servicing each cache request, it would also take longer just to check for each new cache request than it does to service it - especially when the required data is already in the cache (which it normally is - that's the whole point of using a cache!). Finally, they also use the single cache cog to refresh SDRAM, which would slow the cache down even further, and potentially eliminate any speed advantage using SDRAM might have had.

So it is probably no wonder the gcc team saw no future for XMM on the P1. The performance of XMM under gcc must have been quite slow even if you only wanted one XMM cog.

I am guessing here, but I suspect they were thinking they could eventually get Linux running on the P1 using XMM - but in trying to do that they appear to have doomed the whole gcc initiative.

Ross.

It's true that there is a slight performance gain from having multiple cache cogs, since they can multitask the administrative overhead, but they can't multitask the really time consuming part of the caching operation, which is reading the data from the external memory. For most applications the small performance gain probably isn't worth it, because no matter how many cache cogs you have, only one at a time can actually be accessing the serial RAM (or whatever the backing store is). I guess if you have multiple different XMM memories in play (flash, SD, PS RAM) then having one cache cog per external memory would make sense, but that's a pretty niche case and in any event is quite a different model to having one cache cog per XMM execution cog.

It is not a slight performance gain. It is potentially a massive performance gain. Precisely because it reduces the chance of needing "the really time consuming part of the caching operation, which is reading the data from the external memory". The whole point of caching is that for most cache requests, the data is already in the cache, and all you need is a reminder of exactly where. You don't need to read XMM to get it if it is already in Hub RAM, which it typically will be if you have recently accessed it. You only need to read XMM RAM on a cache miss, which - given a decent caching algorithm - is a rare event. And what makes it more likely that what you want is already in hub RAM is if the cache is dedicated to you, and is not polluted by requests from other cogs which are probably executing code elsewhere in memory.

You've totally missed my point: whether the data is in hub RAM or not is not a function of how many cache cogs you have, it's a function of how many cache areas you have in hub RAM. That is, you can certainly have multiple cache areas managed by one cog. Having multiple cache cogs to manage the cache area does very little to help with filling the cache, since only one cog at a time can actually manage that cache.

Ah, I think I may now understand why we're talking past each other: I take it that in your model the XMM execution cog has no cache logic at all, and to retrieve any data it has to talk to the cache cog? If so then yes, I see the problem. But I'm pretty sure that in David's multi-cog XMM that wasn't the case: the XMM cogs had a tiny bit of cache logic in them (enough to handle trivial cache hits) and only had to make requests to the cache cog on cache misses. This also cuts down on general inter-cog communication overhead.

I'd have to check the code in more detail to make sure - it is possible that we use basically the same logic (I recognized parts of it) but that Catalina has less of that logic in the XMM cog than gcc did. But (as is always the case) there are tradeoffs to be made here - more cache logic in the XMM cog means less room for other things, which means you have to implement some common operations using code stored in Hub RAM rather than Cog RAM. So while it sounds good in theory, you have to ensure you gain enough not to just lose it all again because all you end up doing is caching common operations that could have been in the XMM cog anyway.

But that is not the main issue here. In Catalina the XMM cog goes to the cache cog whenever it needs to change page. This is a fairly trivial operation for one XMM cog, but not for eight XMM cogs. However, it does mean multiple XMM cogs can share the same cache, which means there is no problem if two cogs want to write to the same page, since there is only ever one copy of the page in Hub RAM. If each XMM cog implements its own cache logic, then doesn't that also mean that each XMM cog must have its own cache? And in that case, what happens when two XMM cogs write to the same page?

It's been a long time since I looked at the code, but I think cache writes also have to go through the central cog. I believe the code was optimized for the (fairly common) case where mostly read-only code is stored in XMM, with only occasional writes. And I think the cache data was in hub RAM and shared by all the XMM cogs, with some kind of locking mechanism so that the central cache cog would not evict cache lines that were in use for execution. But as I say it's been a while since I looked at the code, I may be misremembering. The main thing I remember is that the round-trip to a mailbox was expensive enough that keeping the fast case (cache hit for a read) in the local cog saved a significant amount of time, as well as freeing up cogs for other uses (having 2 cogs, one execution and one cache, dedicated to each hardware thread is pretty expensive).

Yes, all writes need to be coordinated, and the easiest way to do that is have them all performed by the same cog. Perhaps that's where the confusion lies - it depends whether you are looking at the write code or the read code. For Catalina both are done by essentially the same code, but for gcc perhaps reads are done by the XMM cog (except for cache misses) and writes by the cache cog. That would make some sense. And it means you could have multiple caches for reads, as long as you don't also need to write to those pages. Which really means you can have faster code access, but not data access. However, there is another downside to this solution - if you have multiple read caches then each cache would have to be very small - i.e. 1/8th of Catalina's cache size. This would reduce the performance of the multi-cache solution. So it is not clear to me that having multiple caches would necessarily end up being faster. Most likely it would depend on the code being executed.

However, this has been a useful discussion. XMM on the P1 is unlikely to be revisited now that gcc is essentially dead, but I now have a better idea of what might be the best solution for the P2. The current solution - which will be released shortly - is the Catalina P1 solution, but the P2 architecture offers many ways to improve that solution without necessarily going down the gcc path.

Ross.

Ha! I just found a bug that had eluded me for a couple of days - I couldn't get the VGA and USB keyboard and mouse drivers working in XMM SMALL mode (they worked fine in all the other modes, including XMM LARGE mode). Turned out to be a missing orgh statement, and it wasn't even in my code - it was in some PASM code I imported. The program compiled but wouldn't run.

Now only some documentation updates to go before the release.

I sometimes wonder how new users ever manage to get to grips with programming the Propeller in either PASM or Spin. There are just so many 'gotchas'

Catalina 5.6 has been released here.

The main purpose of this release is to add XMM support to the Propeller 2. Only the PSRAM on the P2 Edge is currently supported as XMM RAM, but more will be added in future (e.g. HyperRAM).

Here is the relevant extract from the README.TXT:

RELEASE 5.6

New Functionality
-----------------

1. Catalina can now build and execute XMM SMALL and XMM LARGE programs on
   Propeller 2 platforms with supported XMM RAM. Currently the only supported
   type of XMM RAM is PSRAM, and the only supported platform is the P2_EDGE,
   but this will expand in future. To compile programs to use XMM RAM, simply
   add -C SMALL or -C LARGE to a normal Catalina compilation command.
   For example:

      cd demos
      catalina -p2 -lci -C P2_EDGE -C SMALL hello_world.c

   or

      cd demos
      catalina -p2 -lci -C P2_EDGE -C LARGE hello_world.c

   Note that a 64kb XMM loader is always included in the resulting binaries,
   so the file sizes will always be at least 64kb.

2. The payload serial program loader can now load XMM programs to the
   Propeller 2. Loading XMM programs requires a special XMM serial loader
   to be built, which can be built using the 'build_utilities' script.
   Currently, the only supported type of XMM RAM is PSRAM, and the only
   supported P2 platform is the P2_EDGE. The script will build SRAM.bin
   (and XMM.bin, which is simply a copy of SRAM.bin). These load utilities
   can then be used with payload by specifying them as the first program to
   be loaded by the payload command, and the XMM program itself as the second
   program to be loaded. For example:

      cd demos
      catalina -p2 -lc -C P2_EDGE -C LARGE hello_world.c
      build_utilities   <-- follow the prompts, then ...
      payload xmm hello_world.bin -i

3. The Catalyst SD loader can now load XMM programs from SD Card on the
   Propeller 2. Just build Catalyst as normal, specifying a supported P2
   platform to the 'build_all' script. Currently, the only type of XMM RAM
   supported is PSRAM, and the only supported P2 platform is the P2_EDGE.
   For example:

      cd demos\catalyst
      build_all P2_EDGE SIMPLE VT100 CR_ON_LF

   Note that now that Catalyst and its applications can be built as XMM SMALL
   or LARGE platforms on the Propeller 2, it is no longer possible to specify
   a memory model on the command line when building for Propeller 2 platforms.
   This is because some parts of Catalyst MUST be built using a non-XMM memory
   model. However, it is possible to rebuild the non-core component of
   Catalyst as XMM SMALL or XMM LARGE programs. See the README.TXT file in
   the demos\catalyst folder for more details.

4. New functions have been added to start a SMALL or LARGE XMM kernel:

      _cogstart_XMM_SMALL();
      _cogstart_XMM_SMALL_cog(g);
      _cogstart_XMM_LARGE();
      _cogstart_XMM_LARGE_cog();
      _cogstart_XMM_SMALL_2();
      _cogstart_XMM_SMALL_cog_2();
      _cogstart_XMM_LARGE_2();
      _cogstart_XMM_LARGE_cog_2();

   These are supported on the Propeller 2 only. The '_2' variants accept
   two arguments rather than just one, to allow the passing of argc and
   argv to the program to be started. Refer to 'catalina_cog.h' for details
   of parameters etc.

5. The Cache and Cogstore cogs are now explicitly registered and can now
   therefore be listed by a C program interrogating the register (e.g. by
   the demo program ex_registry.c)

6. The p2asm PASM assembler now allows conditions to be specified as "wc,wz"
   or "wz,wc" which both mean the same as "wcz". It also does more rigorous
   checking about which instructions should allow such conditions.

7. OPTIMISE can now be used as a synonym for OPTIMIZE. Both enable the
   Catalina Optimizer when used in CATALINA_DEFINE or as a parameter to one
   of the build_all scripts. For Catalina command-line use you should use
   -C OPTIMISE or -C OPTIMIZE or the command-line argument -O5.

8. COLOUR_X can now be used as a synonym for COLOR_X, where X = 1, 4, 8 or 24.
   Both specify the number of colour bits to be used in conjunction with the
   VGA HMI options. For example, on the Catalina command-line you could use
   either -C COLOUR_24 or -C COLOR_24.

9. The build_catalyst script now looks for Catalyst in the current directory
   first, then the user's home directory (for folder 'demos\catalyst') before
   finally resorting to building Catalyst in the Catalina installation
   directory.

10. The build_utilities script now looks for utilities to build in the current
    directory first, then the user's home directory (for folder 'utilities')
    before finally resorting to building the utilities in the Catalina
    installation directory.

11. There are now more pre-built demos of Catalyst. In the main Catalina
    directory you will find:

    P2_DEMO.ZIP     - compiled to suit either a P2_EVAL or a P2_EDGE without
                      PSRAM, using a serial HMI on pins 62 & 63 at 230400
                      baud. Built to use a VT100 emulator, or payload with
                      the -i and -q1 options.

    P2_EDGE.ZIP     - compiled to suit a P2_EDGE with 32 Mb of PSRAM, using a
                      serial HMI on pins 62 & 63 at 230400 baud. Built to use
                      a VT100 emulator, or payload with the -i and -q1
                      options.

    P2_EVAL_VGA.ZIP - compiled to suit a P2_EVAL using a VGA HMI with VGA
                      base pin 32 and USB base pin 40.

    P2_EDGE_VGA.ZIP - compiled to suit a P2_EDGE with 32Mb of PSRAM using a
                      VGA HMI with VGA base pin 0 and USB base pin 8.

    In all cases, unzip the ZIP file to an SD Card, and set the pins on the
    P2 board to boot from the SD Card.

The XMM demos for the P2 EDGE are now included in the release, in the files P2_EDGE.ZIP (using a serial HMI) and P2_EDGE_VGA.ZIP (using a VGA HMI).

Here is the XMM.TXT from the Serial HMI version (P2_EDGE.ZIP):

This version of Catalyst contains various demo programs compiled to run in
XMM RAM on a P2 EDGE with 32Mb of PSRAM installed. They are compiled to use
a Serial HMI on pins 62 & 63 at 230400 baud using a VT100 emulator or payload
using the -i and -q1 option.

The XMM files are:

   xs_lua.bin  \
   xs_luac.bin  | the Lua interpreter, compiler and execution engine,
   xs_luax.bin /  compiled to run in XMM SMALL mode.

   xl_lua.bin  \
   xl_luac.bin  | the Lua interpreter, compiler and execution engine,
   xl_luax.bin /  compiled to run in XMM LARGE mode.

   xl_vi.bin    - the vi text editor, compiled to run in XMM LARGE mode,
                  which allows files larger than Hub RAM to be edited.

The XMM SMALL versions were compiled in the usual Catalyst demo folders,
using the command:

   build_all P2_EDGE SIMPLE VT100 CR_ON_LF USE_COLOR OPTIMIZE MHZ_200 SMALL

The XMM LARGE versions were compiled in the usual Catalyst demo folders,
using the command:

   build_all P2_EDGE SIMPLE VT100 CR_ON_LF USE_COLOR OPTIMIZE MHZ_200 LARGE

I found the issue that was holding up the Linux release, so Catalina release 5.6 is now complete and available on SourceForge for both Windows and Linux here.

The issue was to do with payload, but can be easily fixed by adjusting payload's timeouts on the command line. Now that Catalina supports XMM on the P2, uploading multiple files in one payload command is now routine on the P2 (as it already was on the P1), and it turns out the default interfile timeout was not long enough. I am not sure yet whether this is just my setup or a general issue, so instead of changing it I have just added a suitable note to the README.Linux file:

In my case, I only needed to extend the interfile delay to 500ms (i.e. adding -f 500 ) to make payload work reliably.

Ross.

P.S. While testing this, I noticed a minor issue when compiling small programs (like hello_world.c) that I had not noticed when compiling programs that were already megabytes in size (which I was doing to test the XMM!). The issue is that the code size shown by the compiler can be 512kb too large in some cases - for instance:

Huh? 527324 bytes for 'hello_world' ???

The answer is that the program is actually only a few kilobytes. But when compiling in LARGE mode, the addressing for the XMM RAM must start above the Hub RAM, and the compiler is reporting the program size as if it started from address zero, which includes the entirety of the 512kb Hub RAM, which is not used for code at all.

It makes no difference to the actual compiler output - the file is correct, it is only the message that is in error. I will fix this in the next release.

Just a quick note about the xl_vi demo program included in release 5.6 - xl_vi is just Catalyst's usual vi text editor, but compiled to run on the P2_EDGE with 32Mb of PSRAM using the new P2 XMM LARGE mode, which means it can handle much larger text files. I had tested it with files large enough to show it could indeed handle files that the previous versions of vi couldn't, but I had not tested it with a really big text file.

I just did so using a 2MB text file, and yes it does work ... but it takes over 3 minutes to load the file into PSRAM. Once it is loaded then editing it is pretty snappy - until you have to save it back to disk - but since there is no progress indication on the load or save operations it would be very easy to assume the program had simply crashed and the operation would never complete. But it does.

Ross.

Not directly about Catalina itself, but a new version of the stand-alone VTxxx terminal emulator that Catalina also now uses has been released. This program is actually part of the Ada Terminal Emulator package, which is available here.

This will be included in the next Catalina release, but you can download it now from the link provided and just overwrite comms.exe in Catalina's bin folder with the new version in this package.

The changes are:

- Comms has an improved ymodem implementation and dialog box:

  * A ymodem transfer can now be aborted in the middle of a send or
    receive operation.

  * The dialog box now responds to Windows messages. This stops Windows
    complaining that the application is not responding during a long
    ymodem transfer.

  * The Abort button now attempts to abort both the local and the remote
    ymodem applications if a transfer is in progress. Previously, it
    could only abort the remote ymodem application and only while the
    local ymodem application was not executing.

  * The Start and Done buttons now check and give an error message if a
    ymodem transfer is in progress. Previously, they simply performed their
    respective actions, which could lead to a comms program lock up.

  * Opening the dialog box no longer crashes comms if no port has been
    opened.

Hello Community - I've been using SimpleIDE to program P1 boards and I'd like to try out a P2 board so I'm investigating Catalina.

I've been working my way through the Getting Started PDFs and the reference manuals, but I've hit a road block.

Using the CLI, payload hello_world_3 -i works as expected, but using Geany, Build, Download and interact, I get

Using Propeller (version 1) on port COM12 for download

Error loading program

I'm not sure what I'm missing. Guidance please.

The Geany project files included in the Catalina demo folder are mostly set up for a Propeller 1. If you have a Propeller 2, you will probably need to add -p2 to the Catalina Options (accessible via the Project->Properties menu item). You may also need to modify the Baudrate to 230400 (it is probably set to 115200 if it was originally a P1 project).

Finally, if you have built a P1 executable, be sure to do a clean and then build the project again for the P2 - payload may default to downloading the P1 .binary rather than the P2 .bin file if both exist in the same directory.
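One way to spot this situation before downloading is to look for a P1 .binary file that shares its base name with a P2 .bin file. The script below is a minimal sketch of that check. It is not part of Catalina, the function name is hypothetical, and it only reports files, leaving the actual clean to the normal build commands.

```python
from pathlib import Path


def find_stale_p1_binaries(directory: str) -> list[Path]:
    """Return P1 '.binary' files that share a base name with a P2 '.bin' file.

    Hypothetical helper for illustration; it only inspects file names.
    """
    folder = Path(directory)
    stale = []
    for p2_file in sorted(folder.glob("*.bin")):
        # A P1 executable with the same base name may be picked up instead.
        p1_file = p2_file.with_suffix(".binary")
        if p1_file.is_file():
            stale.append(p1_file)
    return stale


if __name__ == "__main__":
    for path in find_stale_p1_binaries("."):
        print(f"stale P1 executable next to a P2 build: {path}")
```

Run from the project directory, it prints one line per suspicious pair. An empty result suggests that leftover P1 output is not what payload is loading.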

Ross.

Let me clarify. I haven't started with the P2 yet. I like to make one change at a time, so moving from SimpleIDE to Catalina is my first change. The P2 will come after I'm comfortable with Catalina.

While working through the Getting Started tutorials, I hit this roadblock.

Using the CLI, payload hello_world_3 -i works as expected, but using Geany, Build, Download and interact, I get

Using Propeller (version 1) on port COM12 for download

Error loading program

The error message is kind of lacking in detail and I don't know where to start.

That message means the loader didn't get the expected response from the Propeller. It may be a timeout issue.

To increase the timeout, and also get more information about what's going on, use the Build->Set Build Commands menu item and add -d -t 1000 to the "Download and Interact" command.

i.e. modify the command to be:

payload -d -t 1000 %x "%e" -i -b%b

Also, are you running on Windows or Linux?

Ross.

Thanks Ross, I'm using Windows 10. The modified command certainly changed things, however now I get

and at the end of the message I get No Propeller found on any port. I do have a P1 Activity Board WX on that port.

I see that it is looking for a P2. Page 2 of Getting Started says that P1 is the default and that I would need to add the -P2 option to each Catalina command. I checked Build->Set Build Commands but I don't see -P2 anywhere.