[WIP] Projekt Menetekel: That game I keep talking about

Wuerfel_21

Posts: 5,799


[Very preliminary logotype. Is this readable?]

What is it?

Projekt Menetekel: [Subtitle pending...] is the P1 (and PC and likely also P2 and Wii, DOS, etc.) game I've been working on for a while now. It has been referred to as "The game(tm)", "The big game" or "PropAdv" before. You could say it started in the summer of 2018, when I first created the JET Engine graphics system. You could say it started in early 2019, when I started developing the custom tools. You could say it starts now, with the engine core being mostly functional and me having a rough idea of what the gameplay and storyline will be like.

To that:

The game will be a mix of elements, pulling mostly from the Zelda series, bullet hell shooters (well, as much as that is reasonable on limited hardware and non-linear movement) and classic graphic adventures. There will be a large overworld to explore and some number of dungeons containing tough challenges and useful treasures. There will also be a fleshed out storyline with lots of characters, although I neither can nor want to say more about it at this time.

Why do a post now?

- I have (had?) a habit of starting big projects and then not following through. This one has survived long enough that I'm confident I'll at least be able to get a demo out.

- I wanted to avoid posting before deciding on a final title, which in turn required deciding on the basic kind of story. Well, I compromised and settled on the main series title Projekt Menetekel (which will be kept if I make another similar game), but not the game's own subtitle. (FYI: "Menetekel" is a semi-obscure German word that roughly translates to "bad omen". It references a Bible story (Daniel 5, if you're so inclined), but it doesn't seem to have become a word of its own in many other languages. Also, yes, there is a reason I chose it beyond it sounding really neat.)

- Recently, I've gotten slightly sidetracked with P2 stuff.

- Idk really

When will it be done?

IDK really. Probably not soon. But I'll post more-or-less occasional updates to this thread.

What will it run on?

The specs for the P1 version are relatively final:

- At least 80 MHz clock speed

- S-Video, Composite, 256 color VGA or 64 color VGA (in order of descending preference)

- SD card socket with very fast SD card (A1 application performance class required for smooth gameplay)

- Input method with 8-way direction input and at least 4 buttons (6 highly recommended). A whole cog is reserved to read it, but no hub RAM.

- Standard audio circuit (technically optional. Stereo recommended.)

- Optional: 64K SPI RAM (reduces writing to SD card) (currently actually required because I haven't bothered writing the RAM-less memdriver yet...)

P2 version estimated requirements:

- Standard 20MHz crystal

- VGA, HDMI/DVI, YPbPr, S-Video or Composite output. They are all of relatively similar quality, but there may be issues with pixel aspect ratio and sampling on the non-analog-SDTV ones. Other outputs may be supported, too, IDK.

- Input method with 8-way direction input and at least 4 buttons (6 highly recommended). Not sure about specifics yet.

- SD card socket. Card doesn't have to be as fast as for P1, but a good card is still recommended.

The emulator currently runs on Windows only, but is designed with portability in mind.

In particular, ports for Linux, Wii and MS-DOS are planned.

What have you got so far?

Important note: Unless otherwise noted, all captures are from the PC version, which features improved graphics (more colors and high-res text).

Main "Action" engine

This is the most important part of the game code, because this is where the actual game happens.

[The engine can handle up to 24 enemy objects (enemies + projectiles) at once! (P1 video capture with CPU usage bars)]

[Complex tile animations]

[Static elements can be above the player]

[Fake multi-layer scrolling]

[Bothering birds...]

[Weapons in action...]

Random overengineered visual effects

[Opening a treasure chest...]

[What could this be for?]

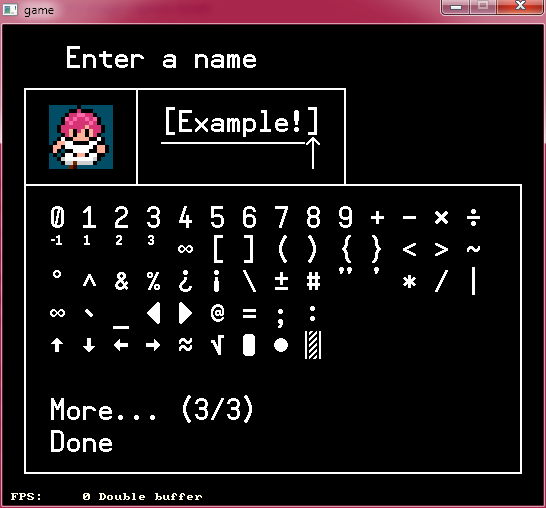

Text system

Projekt Menetekel uses a fairly advanced text printing system. It supports:

- Automatic word wrapping and pagination, including manual line breaks, non-breaking spaces (NBSP) and zero-width spaces (ZWS)

- Color text (color can be set by program or in the string itself)

- Text crawl or instant printing

- Flexible text box shapes (not fully implemented yet)

- Including graphics tiles into text

- Including dynamically generated/selected text snippets into a ROM string

- Translation support. Up to 254 languages can be defined. All ROM font characters can be used.
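The wrapping rules in that list can be made concrete with a minimal greedy word-wrap and pagination sketch in C++. This is not the game's text engine. It assumes a fixed-width font, measures width in characters, and uses two made-up control codes (kNbsp, kZws) for the special spaces. A normal space is a visible break opportunity and a ZWS is an invisible one. An NBSP draws as a space but never breaks, and '\n' forces a line break.

```cpp
#include <algorithm>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical control codes; the real string encoding is not described in the post.
constexpr char kNbsp = '\x01';  // non-breaking space: drawn as a space, never breaks
constexpr char kZws = '\x02';   // zero-width space: break opportunity, draws nothing

// Greedy word wrap, assuming a fixed-width font of `width` characters per line.
// Words longer than a whole line are left intact rather than split.
std::vector<std::string> wrapText(const std::string& text, std::size_t width) {
    std::vector<std::string> lines;
    std::string line;       // line being built
    std::string word;       // current unbreakable run
    std::string separator;  // what goes between `line` and `word` if they share a line
    auto flushWord = [&] {
        if (word.empty()) return;
        if (line.empty()) {
            line = word;
        } else if (line.size() + separator.size() + word.size() <= width) {
            line += separator + word;
        } else {
            lines.push_back(line);  // no room: wrap, dropping the separator
            line = word;
        }
        word.clear();
    };
    for (char c : text) {
        if (c == ' ' || c == kZws) {
            flushWord();
            separator = (c == ' ') ? " " : "";
        } else if (c == '\n') {  // manual line break
            flushWord();
            lines.push_back(line);
            line.clear();
            separator.clear();
        } else {
            word += (c == kNbsp) ? ' ' : c;
        }
    }
    flushWord();
    if (!line.empty()) lines.push_back(line);
    return lines;
}

// Split wrapped lines into pages (text boxes); linesPerPage must be > 0.
std::vector<std::vector<std::string>> paginate(const std::vector<std::string>& lines,
                                               std::size_t linesPerPage) {
    std::vector<std::vector<std::string>> pages;
    for (std::size_t i = 0; i < lines.size(); i += linesPerPage) {
        std::size_t end = std::min(i + linesPerPage, lines.size());
        pages.emplace_back(lines.begin() + static_cast<std::ptrdiff_t>(i),
                           lines.begin() + static_cast<std::ptrdiff_t>(end));
    }
    return pages;
}
```

For example, wrapText("the quick brown fox", 10) yields "the quick" and "brown fox". A fixed line count per page is the simplest possible pagination. The flexible text box shapes mentioned above would need a per-line width instead.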

[Language switching on-the-fly]

[Dialog system in action.]

[The name of the player character can be customized (see previous GIF)]

Somewhat related:

The characters can display many different facial expressions by combining different layers (this required creating some custom tooling to read the proprietary layered format of my favorite paint program...)

[Isn't she cute?]

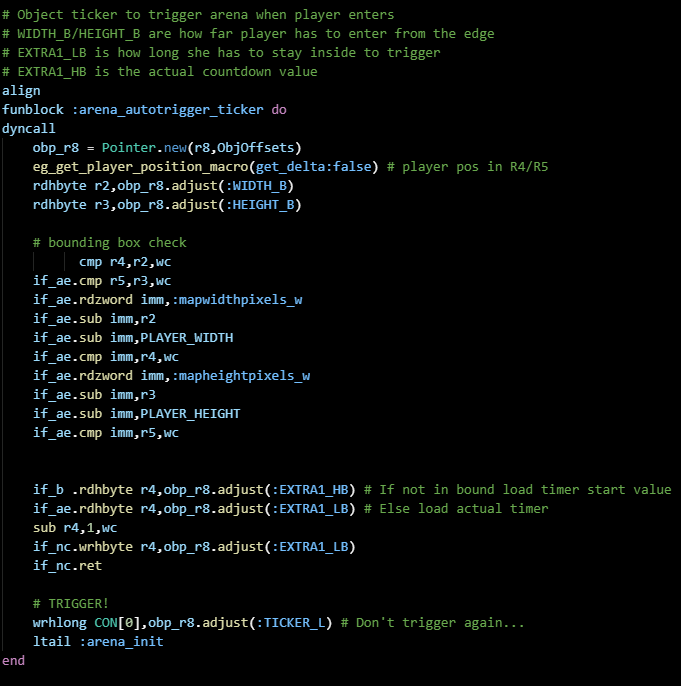

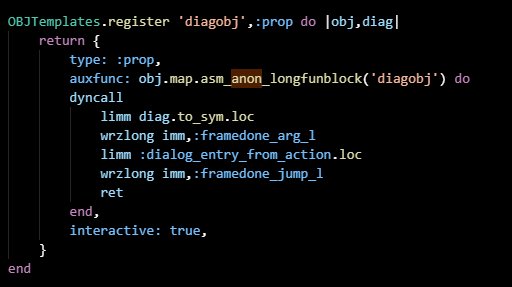

Custom macro assembler and XMM tooling

Well, it's really just some special methods defined in a Ruby interpreter, but if it quacks like an assembler...

This approach essentially gives you a powerful object-oriented macro language for free.

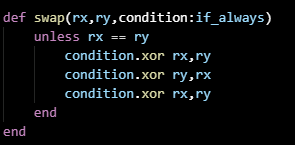

[An average function. Note the slightly odd syntax, auto-detection of immediate/register S, pointer manipulation macros and emulator-friendly instrumentation (dyncall)]

[A macro being defined]

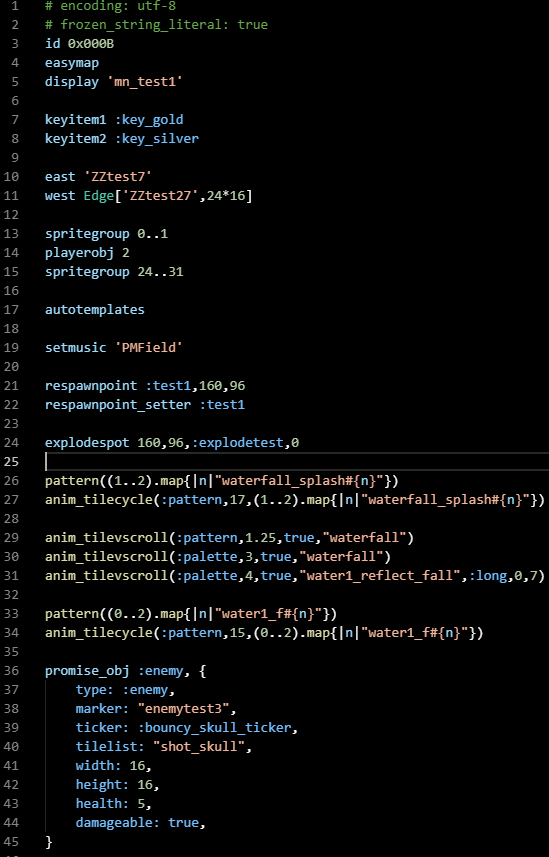

[Maps are defined in code. They use their own DSL (yes, it's a DSL inside a DSL)]

[Advanced macro magic. This method is defined for MapDSL. Map definitions are evaluated before any code is actually assembled, but the code inside the mapticker_asm block is stored away and executed later while assembling the map's ticker function]

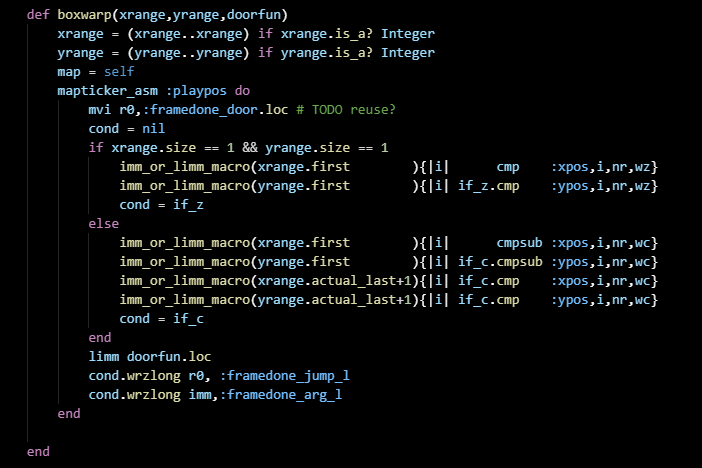

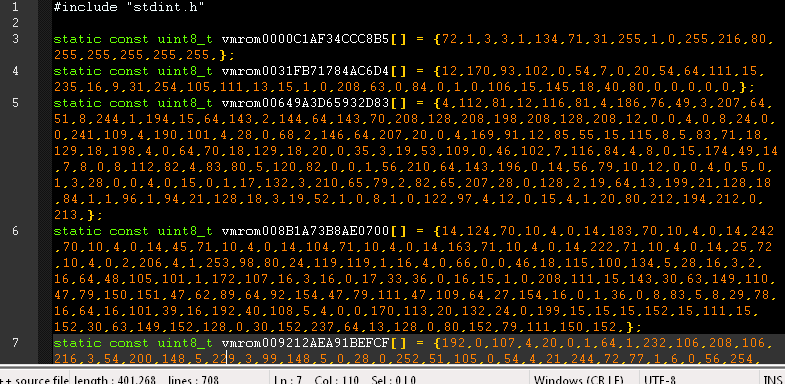

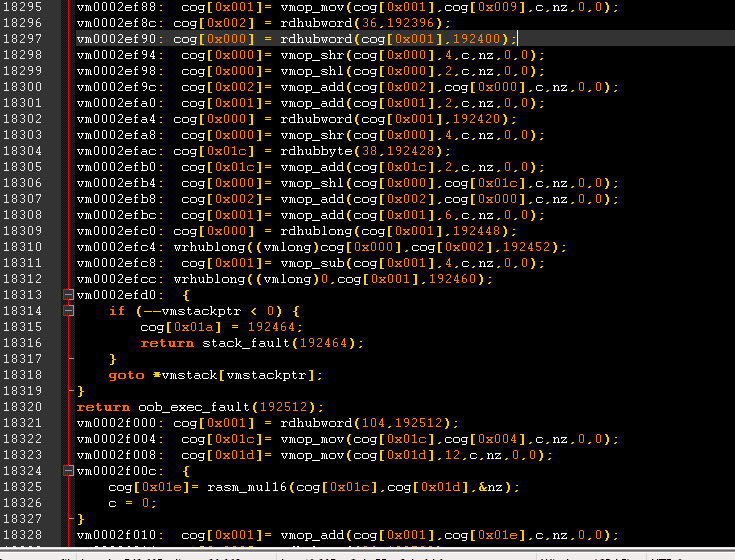

The assembler also includes a de-compilation feature, which emits C++ code equivalent to the assembled code. This is the core of the PC emulator. The code has to be specially instrumented and well-behaved (don't read or write itself, don't read inline data without a special rdcodexxxx instruction, don't read reserved memory, etc.) to make this work.
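As a rough illustration of the de-compilation idea, here is a toy C++ translator for a made-up instruction set (Mov, Add, Sub, Djnz, Ret). Each instruction becomes one labelled C++ statement, so branches turn into gotos. The instruction record and the emitted register array r[] are hypothetical, because the post does not show the real assembler's IR.

The sketch also hints at why the code must be well-behaved. The C++ is generated once at build time, so an instruction that rewrote itself at run time would not change the compiled translation.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Toy instruction record; the real assembler's internal representation is not
// shown in the post, so these fields are hypothetical.
enum class Op { Mov, Add, Sub, Djnz, Ret };

struct Insn {
    Op op;
    int dst;     // destination register index
    int src;     // source register index, or an immediate value if `imm` is set
    bool imm;
    int target;  // branch target (instruction index), used by Djnz
};

// Render the source operand as C++: either a literal or a register access.
std::string operand(const Insn& i) {
    return i.imm ? std::to_string(i.src) : "r[" + std::to_string(i.src) + "]";
}

// Emit one labelled C++ statement per instruction so that branches become gotos.
// The output assumes a register array r[] in the surrounding generated function.
std::string decompile(const std::vector<Insn>& code) {
    std::string out;
    for (std::size_t n = 0; n < code.size(); ++n) {
        const Insn& i = code[n];
        const std::string d = "r[" + std::to_string(i.dst) + "]";
        out += "L" + std::to_string(n) + ": ";
        switch (i.op) {
            case Op::Mov:  out += d + " = " + operand(i) + ";"; break;
            case Op::Add:  out += d + " += " + operand(i) + ";"; break;
            case Op::Sub:  out += d + " -= " + operand(i) + ";"; break;
            case Op::Djnz: out += "if (--" + d + " != 0) goto L" + std::to_string(i.target) + ";"; break;
            case Op::Ret:  out += "return;"; break;
        }
        out += "\n";
    }
    return out;
}
```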

[512 byte pages of ROM data are deduplicated and compressed]

[Code after being de-compiled into C++]

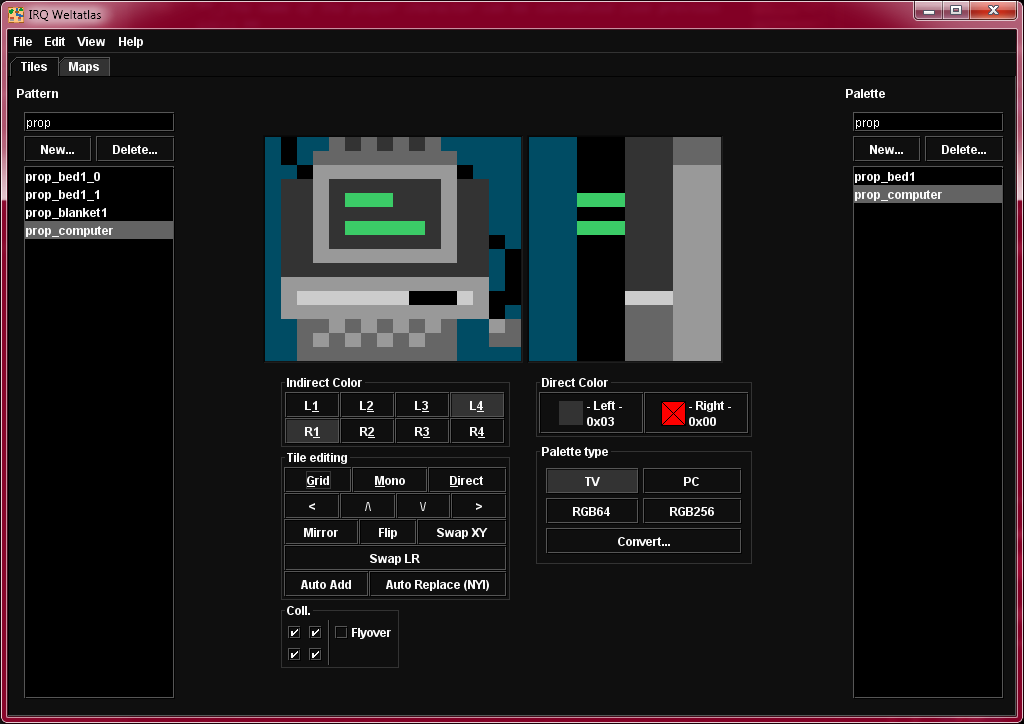

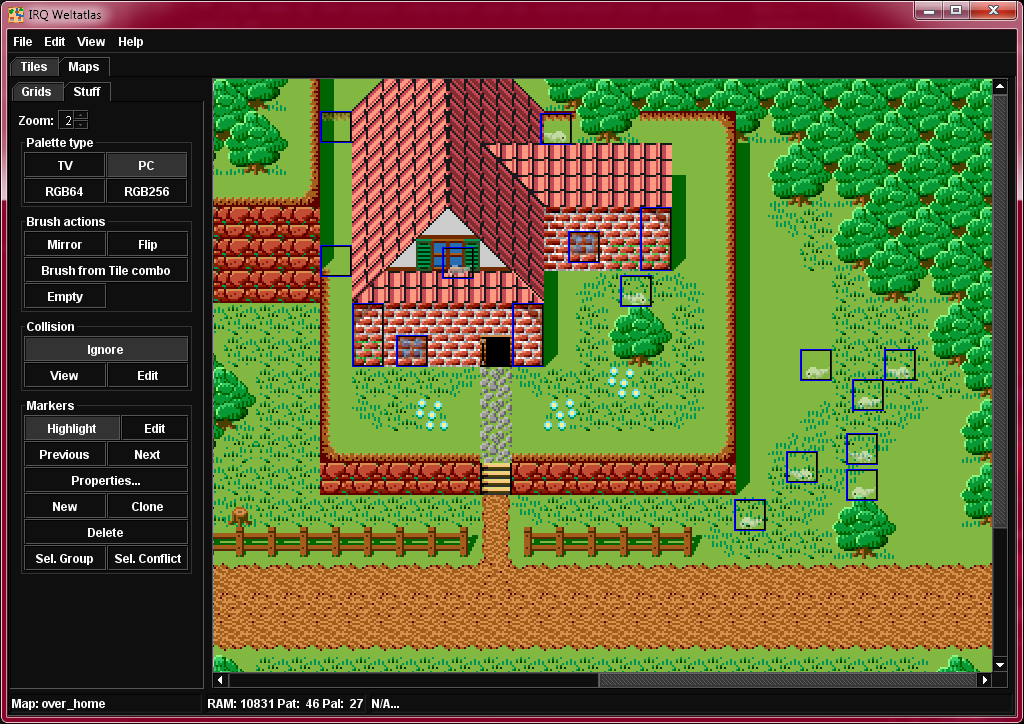

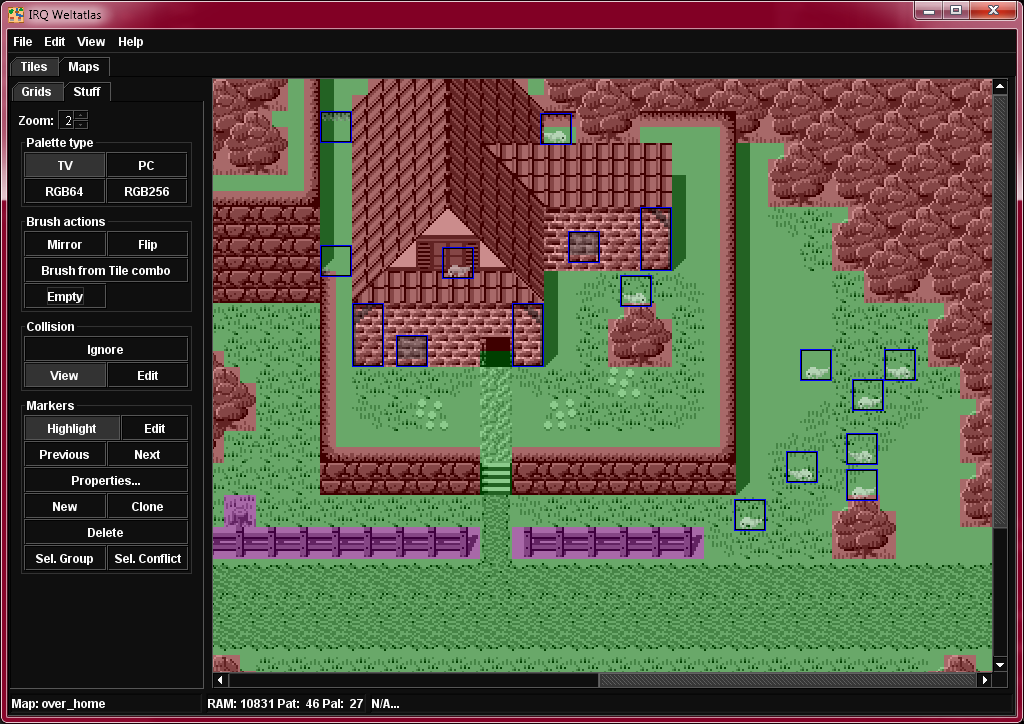

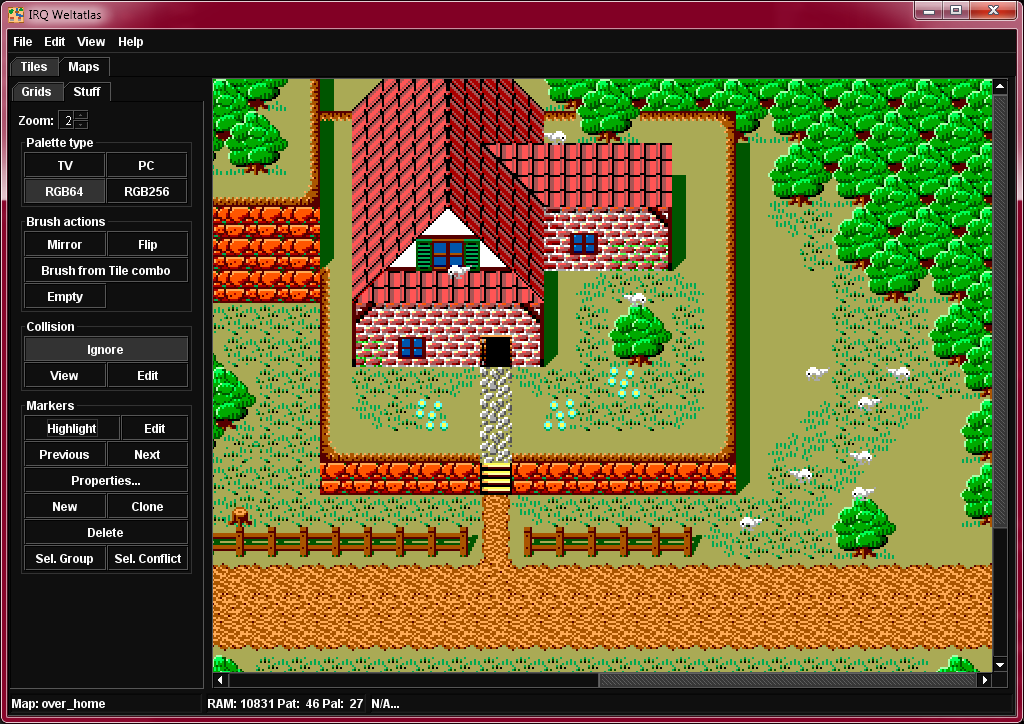

Custom Tile/Map Editor

It is called Weltatlas and is written in Java with Swing. The code is spaghetti and inefficient, but it works...

[Tile Editing. It simulates the non-square pixels seen on real hardware.]

[Map Editing]

[Viewing collision data]

[All four master palettes can be previewed with the click of a button. This is what this map looks like on 64 color VGA, yuck. Marker highlighting can also be disabled for a more aesthetically accurate preview.]

Other gunk

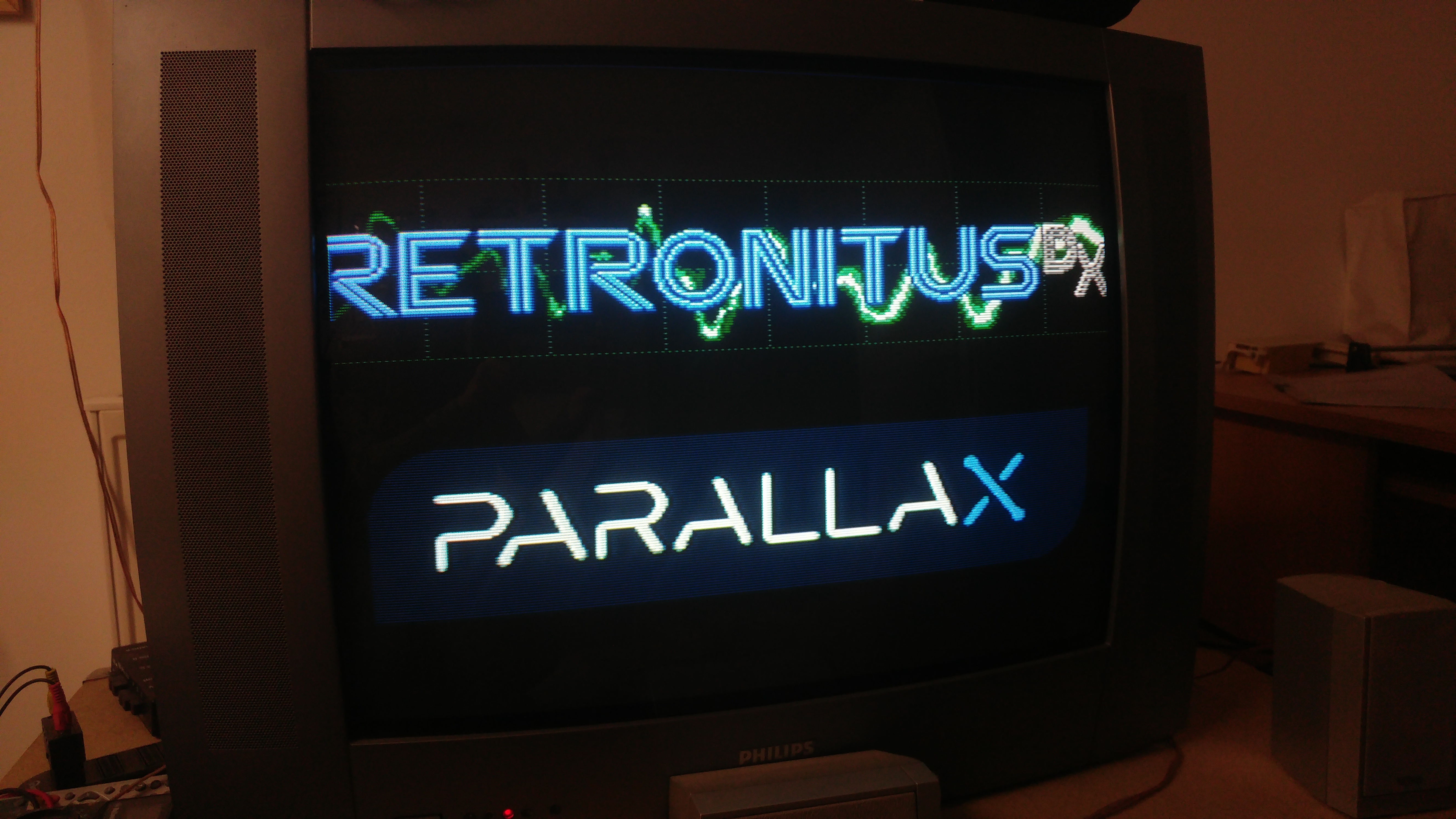

Most of the original Retronitus DX music I made may or may not end up in the game. You can read slightly more about that in the Retronitus DX thread.

As said above, I have a rough idea of what I want to do with the storyline. (Writing and coming up with good ideas, I'm not very good at)

[Random tech logo screen mockup (as displayed by PixCRUSH. JET Engine has less horizontal overscan.)]

Statistics

Lines of code

Updated 19th May 2021

Only non-blank, non-comment lines are counted.

| Part | Files | Lines |
|---|---|---|
| Main code (Assembly+macros+tables+MapDSL) | 112 | 17881 |
| Custom Assembler + Misc. Utilities (Ruby) | 23 | 4260 |
| Tile/Map and Dialog Tree Editors (Java) | 41 | 7511 |
| P1 VM/kernal/loader (Spin/PASM) | 15 | 4321 |
| P2 VM/kernal/loader (Spin/PASM) | 5 | 2973 |
| PC emulator (C++) | 34 | 3991 |
| Total | 230 | 40937 |

Other

Updated 2nd Mar. 2021

| Item | Value |
|---|---|
| Git commits | 457 |
| Total files | 556 |
| Size (source code) | 11.4 MB |
| Size (compiled XMM ROM) | 566 KB |
| Size (compiled Windows EXE) | 1003 KB |

Comments

Reserving first comment for overflow.

Looks fantastic!

Wow

That's absolutely wonderful!

I don't know if you are aware but I have a whole range of Propeller-based game consoles, one is here:

https://forums.parallax.com/discussion/164043/the-p8x-game-system

I also have a portable version with sd card and 320x240 lcd screen, and the game system II with sd card, 256 colors vga/rgb output and snes-like controller (image attached).

I would really love to see this game running on them, and would be happy to send you some PCBs if you are interested (not sure when, though; we are in lockdown until March 21st at least).

Getting it to work on a multi-propeller system will be a challenge, but one that was anticipated. The one that runs the video isn't connected to the SD card, right? Because the main VM cog interacts directly with the graphics system through memory, so those can not be split. But it also needs very fast reads from the SD card. So a custom memdriver and some special code for the middleman propeller will have to be written. Not sure if it can be done fast enough. Certainly some amount of latency will be added. However, if the middleman code was to implement a simple read cache, that would likely more than offset that (as-is, there are only 3 sectors of code cache). The input and audio interact with the main program through simple interfaces, so moving those to the other propeller shouldn't be difficult (especially if that other propeller has direct access to the SD card to load audio data from).
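The read cache suggested above could be as simple as a small least-recently-used set of 512-byte sectors kept in the middleman's hub RAM. Here is a minimal C++ sketch. The backingRead callback is a hypothetical stand-in for the real SD block driver, and the capacity is arbitrary.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <list>
#include <unordered_map>
#include <utility>

using Sector = std::array<uint8_t, 512>;

// Least-recently-used cache of whole 512-byte SD sectors, as a middleman chip
// might keep in hub RAM. `backingRead` is a hypothetical stand-in for the real
// SD block driver.
class SectorCache {
public:
    SectorCache(std::size_t capacity, std::function<Sector(uint32_t)> backingRead)
        : capacity_(capacity > 0 ? capacity : 1), backingRead_(std::move(backingRead)) {}

    const Sector& read(uint32_t lba) {
        auto hit = index_.find(lba);
        if (hit != index_.end()) {
            // Hit: move the entry to the front (most recently used) and serve it.
            entries_.splice(entries_.begin(), entries_, hit->second);
            return hit->second->second;
        }
        // Miss: evict the least recently used sector if full, then fetch from SD.
        if (entries_.size() == capacity_) {
            index_.erase(entries_.back().first);
            entries_.pop_back();
        }
        entries_.emplace_front(lba, backingRead_(lba));
        index_[lba] = entries_.begin();
        ++misses_;
        return entries_.front().second;
    }

    std::size_t misses() const { return misses_; }

private:
    using Entry = std::pair<uint32_t, Sector>;
    std::size_t capacity_;
    std::function<Sector(uint32_t)> backingRead_;
    std::list<Entry> entries_;  // front = most recently used
    std::unordered_map<uint32_t, std::list<Entry>::iterator> index_;
    std::size_t misses_ = 0;
};
```

On the real chip, the list and map bookkeeping would likely be replaced by something much cheaper, such as a handful of fixed slots. The hit/miss behaviour would be the same.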

In particular, here is what a memdriver does (right now, but I think this is reasonably final), maybe that is enough for you to prototype something. If not, I can show you the actual kernal/memdriver code.

- drv_init
- drv_readram
- drv_writeram
- drv_readsector
- drv_writesector
- drv_flushsector

For the moved audio/input, some LMM code would also need to change, but I haven't figured out how I want to do that yet.
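One way to picture a swappable memdriver is as an abstract interface with interchangeable implementations. Below is a hypothetical C++ rendering of those entry points, plus a RAM-backed fake. Only the six names come from the post. The parameter lists and semantics are assumptions made for the sketch: 64K of RAM, 512-byte sectors and a no-op flush.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical C++ view of the memdriver entry points. Only the names come
// from the post; every parameter list here is a guess.
class MemDriver {
public:
    virtual ~MemDriver() = default;
    virtual bool drv_init() = 0;
    virtual void drv_readram(uint32_t addr, uint8_t* dst, std::size_t len) = 0;
    virtual void drv_writeram(uint32_t addr, const uint8_t* src, std::size_t len) = 0;
    virtual void drv_readsector(uint32_t lba, uint8_t* dst) = 0;         // 512 bytes
    virtual void drv_writesector(uint32_t lba, const uint8_t* src) = 0;  // 512 bytes
    virtual void drv_flushsector() = 0;
};

// RAM-backed fake: 64K of "SPI RAM" plus an in-memory "SD card".
// No bounds checking, to keep the sketch short.
class FakeMemDriver : public MemDriver {
public:
    explicit FakeMemDriver(std::size_t sectors) : ram_(64 * 1024), card_(sectors * 512) {}
    bool drv_init() override { return true; }
    void drv_readram(uint32_t addr, uint8_t* dst, std::size_t len) override {
        std::memcpy(dst, ram_.data() + addr, len);
    }
    void drv_writeram(uint32_t addr, const uint8_t* src, std::size_t len) override {
        std::memcpy(ram_.data() + addr, src, len);
    }
    void drv_readsector(uint32_t lba, uint8_t* dst) override {
        std::memcpy(dst, card_.data() + static_cast<std::size_t>(lba) * 512, 512);
    }
    void drv_writesector(uint32_t lba, const uint8_t* src) override {
        std::memcpy(card_.data() + static_cast<std::size_t>(lba) * 512, src, 512);
    }
    // A real driver might buffer writes; this fake writes through, so there is nothing to flush.
    void drv_flushsector() override {}

private:
    std::vector<uint8_t> ram_;
    std::vector<uint8_t> card_;
};
```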

Also the loader needs to be modified, but that is pending a rewrite, so I can't really say much about it.

The cool thing is that all of this is invisible to the actual game code (even a compiled binary, if the kernal itself has not shifted); the only platform dependency it has is the color palette. Speaking of which, you do mean %RRRGGGBB kind of 256 colors? Because that's what I've been creating graphics for (I don't actually have any hardware to test it with, so it kind of receives less attention and thus looks a bit goofy. Better than 64 colors though.)

While I always appreciate any gifts, if you send bare PCBs it may take a very long time for me to get around to building them.

Ok, maybe it is better if I explain a bit how it works.

The Propeller on the right is the main processor where the game logic should run; it connects to the gamepad, audio and SD card. The Propeller on the left is the video processor connected to the VGA output, and its only job is to render the graphics. The two are connected with a high-speed serial line (about 10 Mbit/s, if I remember correctly). Both also have an EEPROM for boot and general "utilities" if needed, and a 128KB SPI SRAM. The code for the video processor is loaded by the main processor through the serial link and can be written either to hub RAM or to the SRAM if you need more than 32K.

The concept is that the main processor loads the graphics to the video processor through the serial link as needed, then it updates a local tilemap memory buffer that is copied through the serial link to the video processor at the frame frequency. It is not much different from having everything on a single processor, except that the video portion doesn't take much memory or processing power on the main processor; you just need a cog that updates the video processor from time to time. How the graphics data are handled is up to the programmer.

The code running on both processors is completely customizable, there aren't restrictions on that.

Yes, RRRGGGBB + sync. The video processor also has two jumpers that can be used to select the output driver (vga, pal or ntsc rgb or whatever you need). It doesn't output composite, only RGB.

I've looked through your stuff before and thus am at least vaguely aware (although I haven't seen the new one).

Well, the problem is that it doesn't work like that. The main code has to run on the same chip as the graphics render cogs; it's just written like that. Thus the SD access needs to be passed through to the left Propeller somehow. And it has to be very fast, because code is streamed in from the SD card in real time (the normal memdriver uses 20MHz SPI, but the actual max. effective data rate is probably closer to 10 Mbit/s due to pauses between read longs and access latency).

I'm a bit hazy today, so maybe I'm not explaining this very well.

Can it be done in blocks?

I mean: read a block from sd to hub (512 bytes, 1024 bytes, whatever), start the transfer to the gpu, read another block, transfer, etc.

Transfer and read can be simultaneous, and synchronization can be either with the frame frequency or with the transfer itself (the transfer doesn't start until the receiver is ready).

The only limitation is that it must be done in blocks with the current high-speed driver implementation; there is too much overhead for single-long transfers, unless it is rewritten for this task. Which, btw, is the High Speed P2P RX/TX code by Beau Schwabe; unfortunately I can't find the thread on the forum nor the code on GitHub.

Maybe we need a small example to explain the concept.

Yes, as laid out above, it is all in 512 byte units. However, I don't think reading a block from the SD and then sending it over will cut it; the transfer has to begin as soon as the data arrives from the SD. As I said, it will have to be something custom. Caching some blocks in the middleman would reduce the necessary speed, but that can only do so much.

I do realize I haven't really explained the technical basis at all, so maybe the following poorly created graphic and accompanying text can help. So let's start from the beginning:

Basically, the actual game (=user code) runs on a kind of virtual machine that abstracts away lots of things. When up and running on a normal P1 system, it looks a bit like this:

(note that some of the arrows between cogs and memory areas have been omitted for clarity. In particular, the user code may arbitrarily read or write any green area, can access yellow areas in certain specific way and is not allowed to access red areas)

(also note that the cog numbers are more or less arbitrary, this is just what they happen to currently be)

The problem with mapping this onto multiple propellers is that the user code expects to be able to write graphics data to the green memory areas and have that be displayed without further action (and adding such a requirement would be highly obnoxious).

This good enough? I can also give you access to the git repository if you want to and have a GitLab account. Seeing is believing or something...

The schematic is much clearer now, thanks.

Well, I could be wrong and it certainly needs some testing, but moving the rendering cogs to the video processor doesn't appear to be a problem. The rendering cogs need the green area data? Good, you can transfer that portion to the GPU (and at the same locations if needed). If my math is correct, the biggest green user area is 18432 bytes; at 3.2 µs per long, it will take 14.745 ms to transfer, fast enough for about 67 frames per second, more than enough for 60 fps. The transfer can be automated and synchronized with the frame rate (I haven't mentioned it, but the GPU also has a line that pulses at the frame rate so the main processor can synchronize with it) without user intervention.
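Spelled out, the transfer-time estimate above works as follows, taking the 3.2 µs per long figure as given:

$$
\frac{18432\ \text{bytes}}{4\ \text{bytes/long}} = 4608\ \text{longs}, \qquad
4608 \times 3.2\ \mu\text{s} = 14.7456\ \text{ms}, \qquad
\frac{1}{14.7456\ \text{ms}} \approx 67.8\ \text{Hz}
$$

A 60 Hz frame lasts about 16.67 ms, so one full copy of that area leaves roughly 1.9 ms of slack per frame.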

Maybe just a little rearranging of the zones to make all graphics areas contiguous, if possible.

The only problem I see is that, given the amount of data to transfer, the data could be updated in the middle of a transfer, causing some unwanted effects.

Certainly it is not the ideal situation: there is little or no advantage in using two Propellers, because basically they just split the same amount of work; free cogs will be unused and there isn't more memory for code or graphics.

Well, I don't know how it can be tested. Maybe if you can build a small, self-contained (without the need for the SD card) example that renders a simple screen, maybe with some code to write text just to prove that one processor is updating the other, I can see if it can work. Don't worry about the video output; if you don't have a 256-color driver, I can test it with the other console version that uses the standard 64-color DAC. The base concept is the same.

I already thought about that and came to the conclusion that it won't work properly (the rendering may need any part of memory at any time, so in many cases it would be displaying stale data). As I said, I anticipated having to split it across multiple chips somehow. It has to be as I say, the SD access has to be proxied. It shouldn't be very tricky to code and I'd do it if I had the hardware. Here's how I'd do it:

The "CPU" Propeller would run two cogs. One cog listens for commands from the memdriver running on the other prop. The other is a customized SD block driver. When the listener cog gets the command to read a block, it relays it to the SD cog, which sets a flag when the transfer has started. As soon as that is set, the other cog starts sending the data (will have to be at least as slow as the SD SPI transfer itself to not read ahead of it). If that isn't fast enough, I'd write a system where it can cache a few blocks in hub ram, which it can send back immediately with no wait time. It of course also needs to support writing, but that doesn't need to be fast. It also needs to handle the audio and input, but that also shouldn't be difficult to add on top.
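The key constraint in that design is timing. The sender may start as soon as the first bytes land in hub RAM, but it must never overtake the SD transfer. Below is a deterministic C++ model of that rule, compared against waiting for the whole block first. The abstract "ticks" and per-tick byte rates are illustrative assumptions, not measured numbers.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

struct RelayResult {
    std::vector<uint8_t> sent;  // bytes forwarded over the inter-chip link, in order
    std::size_t ticks = 0;      // ticks until the whole block was forwarded
};

// Cut-through relay model (ticks and rates are illustrative assumptions):
// each tick the SD cog stores up to `sdRate` more bytes and the link cog
// forwards up to `linkRate` bytes, but only bytes that have already arrived.
// Both rates must be non-zero.
RelayResult relayCutThrough(const std::vector<uint8_t>& block,
                            std::size_t sdRate, std::size_t linkRate) {
    RelayResult r;
    std::size_t arrived = 0;
    while (r.sent.size() < block.size()) {
        ++r.ticks;
        arrived = std::min(block.size(), arrived + sdRate);
        for (std::size_t n = 0; n < linkRate && r.sent.size() < arrived; ++n) {
            r.sent.push_back(block[r.sent.size()]);
        }
    }
    return r;
}

// Store-and-forward for comparison: read the whole block, then send it.
std::size_t storeAndForwardTicks(std::size_t bytes, std::size_t sdRate, std::size_t linkRate) {
    return (bytes + sdRate - 1) / sdRate + (bytes + linkRate - 1) / linkRate;
}
```

Suppose the link is twice as fast as the SD side (1 vs 2 bytes per tick). A 512-byte block is then fully forwarded after 512 ticks, the moment its last byte arrives. Store-and-forward needs 768 ticks.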

Well, the SD access is the problem, so how would I make an example without it? More generally, breaking off any piece of the code is difficult because it is all quite interwoven. You either have to prototype something on its own or do it with everything attached, no in-betweens (and the latter of course requires that the loader can handle booting the second Propeller, etc., etc.).

But it all can and has to wait, I don't even have the memdrivers in separate files yet, haha, still bolted to the kernal.

I do have a driver that does 256 colors (the same one that does 64 colors, in fact), in theory at least. It needs some rework though; it kind of sucks as is.

Ditto!

@Wuerfel_21

Great explanation.

How many pins do you have available to link two P2's? ie must it be serial or could you do something parallel?

I can help with the SD driver code if needed.

Also, don't know if it is any help, but cogs can be used in pairs where they share common LUT ram.

The problem concerns dual P1s. If dual P2 were to be considered, one could probably just send the rendered video data over a high-speed serial link and be fine.

OK. Hadn't realised this was P1's

That is brilliant Wuerfel_21, especially considering this is on the P1.

It's not my type of game to be honest (I prefer Hexagon) but I really do admire your skill and creativity!

Keep up the great work.

Thanks for the praise, y'all, BTW.

Unrelatedly, here's a piece of code that I just wrote that shows off some more cool macro shenanigans:

This registers an object template, diagobj, that when interacted with runs a dialog (well, in this case it's really just a monolog...) specified by the template argument (passed into the block after the object that the template is applied on).

The obj.map.asm_anon_longfunblock('diagobj') line is remarkable: [...] longfunblock (which means that the assembler takes care of crossing page boundaries automatically) assigned that label. It's basically like an assembly lambda function.

Work on P2 version has begun...

First order of business is to get the graphics to be "good enough". JET Engine is tricky to port because it really makes use of certain P1 quirks. But it seems to be fine now. Just needs 1 cog on P2... I've attached a test program, if someone wants to fool around with it.

[Doesn't look like much, but tells me that everything sortof works]

After fixing and optimizing the video stuff some more, I started working on the CPU emulation for P2.

Got all the P1 instructions emulated correctly enough to pass my fuzzing test with the same checksum as the P1 and PC versions.

The instruction format I use is not the same as actual P1 instructions, but something custom.

Next I'll have to hook it up to an SD driver. I think I might put it in a separate cog, to make it slightly less cumbersome to swap out.

I've worked further on the JET Engine implementation. It now supports hi-res text like the PC version. Here it is once again, if someone wants to study it for some reason. The test/demo/example thing also looks kinda cool now.

Perhaps more generally interesting is the optimized tile decoding routine.

On the P1, it looks like this (with omissions/adaptations for clarity):

```
                cmp     pixel_ptr_stride,#1 wz  ' get previous mirror flag into nz
                test    tile,#1 wc              ' get (horizontal) mirror flag (bit 0) into c
                negc    pixel_ptr_stride,#1
        if_nz   add     pixel_ptr,#17           ' If previous tile was mirrored: correct pointer
        if_c    add     pixel_ptr,#15           ' If next tile is mirrored: advance pointer to end of tile
:tilepixel      shl     pattern,#1 wc
        if_c    ror     palette,#16
                shl     pattern,#1 wc
        if_c    ror     palette,#8
                wrbyte  palette,pixel_ptr
                add     pixel_ptr,pixel_ptr_stride
                djnz    pixel_iter,#:tilepixel
```

This decodes the infamous "2bpp delta" pattern format into 8bpp with the given palette. This is the fastest way I'm aware of to decode 2bpp data into 8bpp on P1. It fits snugly in two hub cycles.
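For readers who don't speak PASM, here is a C++ sketch of what that loop computes. It makes two assumptions:

- A row has 16 pixels, which matches 32 pattern bits and the +15/+17 pointer corrections.
- The palette register holds the four colors one per byte.

The post does not show how the palette register is reset between rows or tiles, so the function simply takes it as a parameter. Each bit pair rotates the palette by 16 and/or 8 bits. A pixel's color index is therefore the previous index plus 2*b1 + b0, modulo 4, which is presumably where the "delta" in the name comes from.

```cpp
#include <array>
#include <cstdint>

// Rotate right, like the P1's ROR instruction (n must be 1..31 here).
constexpr uint32_t ror32(uint32_t v, unsigned n) {
    return (v >> n) | (v << (32 - n));
}

// Decode one row of "2bpp delta" data the way the P1 loop does. Assumption:
// 16 pixels per row, i.e. all 32 pattern bits, consumed MSB first.
// `mirrored` reverses the output order, like the negative pixel_ptr_stride.
std::array<uint8_t, 16> decodeDeltaRow(uint32_t pattern, uint32_t palette, bool mirrored) {
    std::array<uint8_t, 16> out{};
    for (int i = 0; i < 16; ++i) {
        bool rot16 = pattern & 0x80000000u;  // SHL pattern,#1 WC
        pattern <<= 1;
        if (rot16) palette = ror32(palette, 16);
        bool rot8 = pattern & 0x80000000u;   // second SHL pattern,#1 WC
        pattern <<= 1;
        if (rot8) palette = ror32(palette, 8);
        // WRBYTE stores the low byte of the (rotated) palette register.
        out[mirrored ? 15 - i : i] = static_cast<uint8_t>(palette);
    }
    return out;
}
```

For example, take palette $44332211 and a pattern starting with the bit pairs 01 01 followed by zeros. The row decodes to $22, then $33 for every remaining pixel.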

On P2, regular 2bpp is easy and fast to decode with MOVBYTS. But to get Menetekel on P2, it needs to still deal with the oddball format. Running the equivalent of the P1 code is comparatively slow. So here's what we get on P2:

```
                test    tile,#1 wc              ' get (horizontal) mirror flag (bit 0) into c
        if_nc   mov     mirrorset,skipbits_nomirror
        if_c    mov     mirrorset,skipbits_yesmirror
                call    #delta2skip
                call    lutslide
                setq    #3
                wrlong  tilebuffer,ptra++
```

Huh... Function calls, in my hot loop?

```
delta2skip      ' bit magic
                mov     pat1skp,pattern
                not     pat1skp                 ' has to be inverted
                rev     pat1skp
                splitw  pat1skp
                movbyts pat1skp,#%%3120
                mov     pat2skp,pat1skp
                setword pat1skp,mirrorset,#1
                setword pat2skp,mirrorset,#0
                movbyts pat2skp,#%%1032
                mergeb  pat1skp
        _ret_   mergeb  pat2skp

skipbits_nomirror   long    $FF00
skipbits_yesmirror  long    $00FF
tilebuffer          res     4
```

and lutslide points to an autogenerated piece of code that looks like this:

```
                skipf   pat1skp
                ror     palette,#16
                ror     palette,#8
                setbyte tilebuffer+0,palette,#0
                setbyte tilebuffer+3,palette,#3
                ror     palette,#16
                ror     palette,#8
                setbyte tilebuffer+0,palette,#1
                setbyte tilebuffer+3,palette,#2
                ' [...]
                ror     palette,#16
                ror     palette,#8
                setbyte tilebuffer+1,palette,#3
                setbyte tilebuffer+2,palette,#0
                skipf   pat2skp
                ror     palette,#16
                ror     palette,#8
                setbyte tilebuffer+2,palette,#0
                setbyte tilebuffer+1,palette,#3
                ' [...]
                ror     palette,#16
                ror     palette,#8
                setbyte tilebuffer+3,palette,#3
                setbyte tilebuffer+0,palette,#0
                ret
```

Get what's going on here? It transforms the tile pattern into two skip patterns, mapping the two bits to the two RORs and the mirror/not-mirror flags to the SETBYTEs. Running through the lutslide then assembles the decoded 8bpp data in tilebuffer, ready for dumping onto the screen using SETQ+WRLONG. The same technique is used for sprites, but the transparent palette entry is forced to zero (zero is not a valid color in the PC/P2 palette) and WMLONG is used to blit it out.

Pretty clever, right?
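The skip-pattern trick can also be modelled in C++ to show why it works. Every pixel owns four instruction slots in the unrolled routine, and a SKIPF-style mask decides which of them run. A set bit means skip, and bit 0 is the first instruction after SKIPF. This sketch builds the masks with a plain loop instead of the REV/SPLITW/MOVBYTS/MERGEB bit tricks. It uses a 16-byte array in place of the four-long tilebuffer and should produce the same bytes as a straightforward 2bpp delta decode.

```cpp
#include <array>
#include <cstdint>

// Slot layout per pixel, mirroring the lutslide listing:
//   slot 0: ROR palette,#16             slot 1: ROR palette,#8
//   slot 2: SETBYTE at normal position  slot 3: SETBYTE at mirrored position
// 8 pixels x 4 slots = 32 mask bits, so a 16-pixel row needs two masks.
// Built with a plain loop here, not with the post's bit tricks.
uint32_t buildSkipMask(uint16_t bits, bool mirrored) {
    uint32_t mask = 0;
    for (int px = 0; px < 8; ++px) {
        bool rot16 = bits & (0x8000u >> (2 * px));
        bool rot8 = bits & (0x4000u >> (2 * px));
        // Skip a ROR when its pattern bit is clear (hence the NOT in delta2skip),
        // and skip whichever SETBYTE does not match the mirror flag.
        uint32_t skip = (rot16 ? 0u : 1u) | (rot8 ? 0u : 2u) | (mirrored ? 4u : 8u);
        mask |= skip << (4 * px);
    }
    return mask;
}

// "Execute" the 32 slots in order, skipping those whose mask bit is set.
void runSlide(uint32_t mask, uint32_t& palette, std::array<uint8_t, 16>& tilebuffer, int firstPixel) {
    for (int slot = 0; slot < 32; ++slot) {
        if (mask & (1u << slot)) continue;
        int px = firstPixel + slot / 4;
        switch (slot % 4) {
            case 0: palette = (palette >> 16) | (palette << 16); break;
            case 1: palette = (palette >> 8) | (palette << 24); break;
            case 2: tilebuffer[px] = static_cast<uint8_t>(palette); break;
            case 3: tilebuffer[15 - px] = static_cast<uint8_t>(palette); break;
        }
    }
}

// Two runs, like the two SKIPFs; the palette state carries over between them.
std::array<uint8_t, 16> decodeWithSkips(uint32_t pattern, uint32_t palette, bool mirrored) {
    std::array<uint8_t, 16> tilebuffer{};
    runSlide(buildSkipMask(static_cast<uint16_t>(pattern >> 16), mirrored), palette, tilebuffer, 0);
    runSlide(buildSkipMask(static_cast<uint16_t>(pattern), mirrored), palette, tilebuffer, 8);
    return tilebuffer;
}
```

The point of the design is that no branch is taken per pixel. The per-pixel decisions are all folded into the skip masks up front.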

Aaaaand we bootin' !!!

Annoyingly, opening any text screen whatsoever crashes....

Okay, got the CPU and graphics fully (as far as I can tell) working, so audio is next (after that I'll have to clean everything up and integrate the P2 target with the build process).

So I guess @Ahle2 can rejoice, for RetronitusDX on P2 is going to be a thing. I think I'll even make it workable for general P2 usage (as opposed to the JET port, which is limited to reading data from the first 64K for compatibility with the P1 version). I think I'll just add a base pointer to move around the 64K window that Retronitus can read.

I've already started, but despite commenting out nearly everything something is corrupting cog ram... tough nut.

Well, I just wasted some hours because I'm stupid

Wave shaping and sequencing works now, but something about those instruments....

OK, it's all done and merged!

The P2 loader (read: the bit of spin code that sets up the I/O and environment) is super duper barebones right now. The P1 loader isn't that much better, but it at least has on-screen error messages (for when it fails to find a file) and can toggle PAL/NTSC, haha.

Eventually I want to rewrite them both and hook up a common setup utility (that could even be shared with the PC version by way of spin2cpp, if that feature hasn't bitrotted. @ersmith ?) that allows setting preferences like PAL/NTSC, audio type (mono vs. stereo vs. surround receiver vs. headphones (yes, those all warrant slightly different mixes of the music)) with an on-screen menu and persist them in some settings file.

spin2cpp hasn't been tested much lately, so there's probably a lot of Spin2 it doesn't support. But basic spin support should still work, as the test suite for it still runs.

Since I haven't posted here in almost a month...

Explosion with no context!

Since OPN2cog is done and waiting for release, time for this again.

Starting to implement some things that modify the environment...

Generating fade tables for the P1 NTSC palette (and the RGB ones, but those are less interesting)...

Currently I calculate an ideal color for each fade step and then pick the palette color with the lowest CIELAB distance to the ideal. This is kinda OK, but has the issue that the luminance sometimes isn't monotonic (i.e. a step that is supposed to be darker is actually lighter than the previous one). Idk how to fix that though.
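The nearest-color step can be sketched in C++ as follows, under three assumptions:

- Palette entries are given as sRGB approximations. The real P1 NTSC colors would first have to be estimated from the video signal.
- The "ideal" fade color is a plain linear scale toward black.
- Distance is CIE76, which is Euclidean distance in Lab.

The luminanceMonotonic helper only detects the non-monotonic steps mentioned above. It does not fix them.

```cpp
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Rgb { int r, g, b; };  // assumed sRGB approximation of a palette entry
struct Lab { double L, a, b; };

// sRGB (0..255 per channel) to CIELAB with a D65 white point.
Lab toLab(const Rgb& c) {
    auto lin = [](int c8) {
        double v = c8 / 255.0;
        return v <= 0.04045 ? v / 12.92 : std::pow((v + 0.055) / 1.055, 2.4);
    };
    double r = lin(c.r), g = lin(c.g), b = lin(c.b);
    double x = 0.4124 * r + 0.3576 * g + 0.1805 * b;
    double y = 0.2126 * r + 0.7152 * g + 0.0722 * b;
    double z = 0.0193 * r + 0.1192 * g + 0.9505 * b;
    auto f = [](double t) {
        return t > 216.0 / 24389.0 ? std::cbrt(t) : (24389.0 / 27.0 * t + 16.0) / 116.0;
    };
    double fx = f(x / 0.95047), fy = f(y), fz = f(z / 1.08883);
    return {116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz)};
}

// Index of the palette entry closest to `target` (CIE76: Euclidean distance in Lab).
std::size_t nearestInPalette(const Rgb& target, const std::vector<Rgb>& palette) {
    const Lab t = toLab(target);
    std::size_t best = 0;
    double bestDist = std::numeric_limits<double>::infinity();
    for (std::size_t i = 0; i < palette.size(); ++i) {
        const Lab p = toLab(palette[i]);
        double d = (t.L - p.L) * (t.L - p.L) + (t.a - p.a) * (t.a - p.a) + (t.b - p.b) * (t.b - p.b);
        if (d < bestDist) { bestDist = d; best = i; }
    }
    return best;
}

// One fade-table row: step 0 is the full color, step `steps` is black (steps > 0).
std::vector<std::size_t> fadeRow(const Rgb& color, const std::vector<Rgb>& palette, int steps) {
    std::vector<std::size_t> row;
    for (int k = 0; k <= steps; ++k) {
        double s = 1.0 - static_cast<double>(k) / steps;  // assumed linear fade
        Rgb ideal{static_cast<int>(std::lround(color.r * s)),
                  static_cast<int>(std::lround(color.g * s)),
                  static_cast<int>(std::lround(color.b * s))};
        row.push_back(nearestInPalette(ideal, palette));
    }
    return row;
}

// True if every step is at least as dark (Lab L*) as the one before it.
bool luminanceMonotonic(const std::vector<std::size_t>& row, const std::vector<Rgb>& palette) {
    for (std::size_t k = 1; k < row.size(); ++k) {
        if (toLab(palette[row[k]]).L > toLab(palette[row[k - 1]]).L) return false;
    }
    return true;
}
```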

Very important and not all procrastination: IRQsoft logo animation/chime

*Notices she hasn't posted here in months*

...

*slight startlement*

...

So anyways, just to confirm that I am still alive, here's a new GIF of a thing I put in at some point!

I know, originality flows in my veins like over-caffeinated blood.

Note that the flashing of the chains

a) isn't really noticeable on a Vsync'd display (as you'd have running directly from a P1 chip)

b) is there so you can use another tool while the clawshot is doing its thing

I've also ported the PC version to SDL2, which has given it abilities such as lower audio latency, better frame pacing (after figuring out initial issues... Pro tip: do not try to update your window title at 60Hz), not crashing at random when switching window focus and being able to scale the game framebuffer to any window size (honoring the intended non-square PAR, too!).
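Scaling to an arbitrary window while honoring a non-square pixel aspect ratio boils down to fitting a rectangle. Here is a minimal C++ sketch. The framebuffer size and PAR are inputs because the post doesn't state the game's actual values. The Rect fields mirror SDL_Rect, so the result could be copied into one and passed as the destination rectangle to SDL_RenderCopy.

```cpp
#include <algorithm>
#include <cmath>

struct Rect { int x, y, w, h; };  // same fields as SDL_Rect

// Largest centered rectangle inside a winW x winH window that shows a
// fbW x fbH framebuffer whose pixels are `par` times as wide as they are tall.
// fbW, fbH and par are placeholders; the game's real values aren't given.
Rect fitWithPar(int fbW, int fbH, double par, int winW, int winH) {
    double displayW = fbW * par;  // framebuffer width in square-pixel units
    double scale = std::min(winW / displayW, static_cast<double>(winH) / fbH);
    int w = static_cast<int>(std::lround(displayW * scale));
    int h = static_cast<int>(std::lround(fbH * scale));
    return {(winW - w) / 2, (winH - h) / 2, w, h};  // letterbox/pillarbox evenly
}
```

For a 256x240 framebuffer with square pixels in a 1000x480 window, this gives a 512x480 image starting at x = 244. A PAR other than 1 simply stretches the width before the fit.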

But yeah, lately I haven't had much time, since I was working on MegaYume.

Also, has the file upload limit been lowered? I had to hotlink that GIF from Imgur to make it decent-sized.