Today I reached another milestone: the resolver interface on the daughterboard is working. This includes a driver for the PCM1807, a 24-bit stereo audio ADC with an I2S interface, which might be of interest to others, so I'll open a separate thread...
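The PCM1807 delivers 24-bit two's-complement samples, so any driver has to turn them into signed integers at some point. A minimal sketch, assuming the I2S receiver leaves each sample right-aligned in the low 24 bits of a 32-bit word (the function name is hypothetical; if the receiver left-justifies the sample instead, shift it right by 8 first):

```c
#include <stdint.h>

/* Sign-extend a 24-bit two's-complement sample held in the low 24 bits of raw.
   Bit 23 carries weight -2^23, bits 0..22 carry positive weight. Computing it
   this way avoids relying on right shifts of negative values, which are
   implementation-defined in C. Bits 24..31 of raw are ignored. */
int32_t pcm24_to_int32(uint32_t raw)
{
    return (int32_t)(raw & 0x7FFFFFu) - (int32_t)(raw & 0x800000u);
}
```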

Yesterday I tested the velocity control code I posted on July 8th, and it finally works. I had to insert some more scaling factors because I forgot that some of the velocity values are in steps per loop iteration while the PID needs rad/s. And I forgot the commutation.

This is the only equation that needs masking and shifting, because the CORDIC expects left-adjusted angle values.
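Both conversions mentioned above can be sketched like this. All parameters are hypothetical, not taken from this project: a 16-bit position per mechanical revolution, a 10 kHz control loop and a motor with four pole pairs. The P2 CORDIC treats the full 32-bit range as one turn, so the electrical angle is wrapped to one electrical turn and then shifted to the top of the word:

```c
#include <stdint.h>

/* Hypothetical parameters for illustration only */
#define COUNTS_PER_REV_BITS 16u                        /* position resolution per mechanical turn */
#define COUNTS_PER_REV      (1u << COUNTS_PER_REV_BITS)
#define LOOP_FREQ_HZ        10000.0f                   /* control loop rate */
#define POLE_PAIRS          4u                         /* motor pole pairs */

static const float TWO_PI = 6.28318530718f;

/* Convert a velocity in position counts per loop iteration into rad/s for the PID. */
float counts_per_iter_to_rad_s(int32_t counts_per_iter)
{
    return (float)counts_per_iter * LOOP_FREQ_HZ * TWO_PI / (float)COUNTS_PER_REV;
}

/* Electrical angle, left-adjusted so that 2^32 corresponds to one electrical turn. */
uint32_t electrical_angle(uint32_t position)
{
    /* Wrap to one electrical turn. In 32-bit unsigned arithmetic the following
       shift would drop these bits anyway; the mask just makes the wrap explicit. */
    uint32_t elec = (position * POLE_PAIRS) & (COUNTS_PER_REV - 1u);
    return elec << (32u - COUNTS_PER_REV_BITS);        /* left-adjust for the CORDIC */
}
```

With these numbers, one count per iteration corresponds to roughly 0.96 rad/s, and a quarter of a mechanical turn (16384 counts) is exactly one electrical turn, which wraps back to angle 0.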

So the motor ran closed loop for the first time and I think the whole project can be called a success. However, there's still a lot of work to do. I'm not really happy with the tuning and before I do a fine adjustment of all the parameters I have to implement the safety features (thermal, overcurrent and overvoltage protection...) so I can test without fearing that the magic smoke comes out.
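A software check of the protection limits mentioned above might look like the following sketch. The limits, names and fault codes are hypothetical, and in practice overcurrent is often also caught by a hardware comparator that shuts off the PWM faster than any control loop could; a check like this would then be a second line of defence:

```c
#include <stdint.h>

/* Hypothetical limits for illustration only */
#define MAX_PHASE_CURRENT_A 10.0f
#define MAX_DC_BUS_V        400.0f
#define MAX_HEATSINK_C      90.0f

#define FAULT_NONE        0u
#define FAULT_OVERCURRENT (1u << 0)
#define FAULT_OVERVOLTAGE (1u << 1)
#define FAULT_OVERTEMP    (1u << 2)

static float abs_f(float x) { return x < 0.0f ? -x : x; }

/* Returns a bit mask of active faults. The caller is expected to disable
   the PWM outputs immediately whenever the result is non-zero. */
uint32_t check_protection(float i_a, float i_b, float v_bus, float t_heatsink)
{
    uint32_t faults = FAULT_NONE;
    /* Third phase current from ia + ib + ic = 0 (assumes no neutral connection). */
    float i_c = -(i_a + i_b);

    if (abs_f(i_a) > MAX_PHASE_CURRENT_A || abs_f(i_b) > MAX_PHASE_CURRENT_A ||
        abs_f(i_c) > MAX_PHASE_CURRENT_A)
        faults |= FAULT_OVERCURRENT;
    if (v_bus > MAX_DC_BUS_V)
        faults |= FAULT_OVERVOLTAGE;
    if (t_heatsink > MAX_HEATSINK_C)
        faults |= FAULT_OVERTEMP;
    return faults;
}
```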

I'm kind of late to this thread, but can you share the motivation for this migration from the ARM-based micro to the P2?

I have an idea, but why guess when I can ask.

Well... very good question. A lot of really bad curses came to mind immediately when I remembered the time I tried to program those ARM beasts. I'll have to find some other words I can write here in the forum without being banned.

Those ARM CPUs (ATSAMS70 in my case, but it's true for most of them) are designed for the mass market. They are really good when it comes to horsepower per dollar, but the whole peripheral architecture around the ARM core is just SICK! They are stingy with every bit and flip-flop, and on the other hand so many resources are wasted on countless different modes and options. For example, the timers are only 16 bits wide. Because they overflow quickly, there are lots of different clock sources, pre-dividers, options to daisy-chain multiple timers and so on. Instead, a single, uniform 32-bit timer running on a fixed global clock could do everything without the need for prescalers.
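Why a single free-running 32-bit counter is enough for most timing jobs can be shown with a small sketch (the P2 has such a system counter, read with GETCT). The function names are hypothetical. Unsigned subtraction handles the wrap-around, so no prescalers or chained timers are needed as long as the intervals are shorter than half the wrap period:

```c
#include <stdint.h>

/* Ticks elapsed between two reads of a free-running 32-bit counter.
   Unsigned subtraction is modulo 2^32, so this is correct even if the
   counter wrapped once between start and now. */
uint32_t elapsed_ticks(uint32_t start, uint32_t now)
{
    return now - start;
}

/* Non-zero once now has reached deadline. Valid while the two values
   are less than 2^31 ticks apart. */
int deadline_reached(uint32_t now, uint32_t deadline)
{
    return (now - deadline) < 0x80000000u;
}

/* Time until a counter of the given width wraps at the given clock rate. */
double wrap_seconds(unsigned bits, double clock_hz)
{
    return (double)(1ull << bits) / clock_hz;
}
```

For comparison, wrap_seconds(16, 150e6) is about 437 µs, while wrap_seconds(32, 300e6) is about 14.3 s.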

Pin assignment... I understand that there have to be multiplexers for output pins to decide which signal source should drive the pin when there are multiple options. But why the heck demultiplexers for input pins??? What??? Yes, believe it or not, they decided that you have to explicitly select the function to which the input signal is routed. If you want to use a signal for two purposes (counting pulses and triggering an interrupt, for example), you have to waste an extra pin and connect the signal to two inputs. Why not route the signal source to every possible sink inside the chip? Just stupid. It takes resources and limits the possibilities for no reason.

And because peripherals and pins are very limited, there are so many derivatives to choose from. But you have to read hundreds of pages of datasheets to decide which one is best for your application. Need more RAM? OK, take a derivative with more memory... Some days later you find out that your code isn't working any longer. Reason: this derivative doesn't support a special register bit you need to signal the DMA to poll your ADC, UART or whatever. The feature lists in the datasheets sound so promising, but in the end you find out that XY is not supported, function A conflicts with the pin assignment of function B, and so on... You have to work around that, change the pin assignments and schematics, and re-do the PCB layout again and again. It's just a nightmare altogether. For one hour of productive programming you need 10 hours of reading manuals and 100 hours of useless hardware redesigns.

Maybe I can explain it this way: imagine a product developed for the mass market and tied to the promise of entering paradise as soon as the cash flows. Over the years we have experienced it and become more and more aware that something is wrong, yet many are still unwilling to give up and keep giving the chimera another chance. Then the Propeller comes along as the alternative, promising more than blood, sweat and tears and promoting load sharing and simplicity, since all pins are created equal. As we in Europe know what the price of uniformity is, men are at work, and thanks to a certain American we are freed from the limits of the arm and can finally work with the brain.

Or, to put it most simply: the Propeller is just great!

I know, bashing others is probably not the best trait, but it's so liberating...

This is the code to initialize the CPU and peripheral clocks for an ARM:

```c
#include "sam.h"  // SAME70 device header (register definitions)

// CPU clock: 300 MHz, MCK: 150 MHz, PLLA: 300 MHz.
// PLL_COUNT (PLL lock counter) is assumed to be defined elsewhere in the project.
void Init_Clock(void)
{
    EFC->EEFC_FMR = EEFC_FMR_FWS(6) | EEFC_FMR_CLOE;  // 6 flash wait states, because of 150 MHz

    // Switch the master clock to the main oscillator first, because stopping
    // the PLL might otherwise take the clock away from the CPU.
    PMC->PMC_MCKR = (PMC->PMC_MCKR & (~PMC_MCKR_CSS_Msk)) | PMC_MCKR_CSS_MAIN_CLK;
    while (!(PMC->PMC_SR & PMC_SR_MCKRDY)) {}

    PMC->CKGR_MOR = (PMC->CKGR_MOR | CKGR_MOR_MOSCXTBY) | CKGR_MOR_KEY_PASSWD;  // bypass the crystal oscillator (external clock input)
    PMC->CKGR_MOR = (PMC->CKGR_MOR | CKGR_MOR_MOSCSEL) | CKGR_MOR_KEY_PASSWD;   // switch the main clock to the external source
    while (!(PMC->PMC_SR & PMC_SR_MOSCSELS)) {}  // wait until the main clock source switch is complete

    // Configure and enable PLLA.
    // PMC hardware automatically makes it MUL+1: 25 MHz input * (11+1) / 1 = 300 MHz
    uint32_t ctrl = CKGR_PLLAR_MULA(12 - 1) | CKGR_PLLAR_DIVA_BYPASS | CKGR_PLLAR_PLLACOUNT(PLL_COUNT);
    PMC->CKGR_PLLAR = CKGR_PLLAR_ONE | CKGR_PLLAR_MULA(0);  // stop PLLA
    PMC->CKGR_PLLAR = CKGR_PLLAR_ONE | ctrl;                // configure PLLA
    while (!(PMC->PMC_SR & PMC_SR_LOCKA)) {}                // wait for lock

    PMC->PMC_MCKR = (PMC->PMC_MCKR & (~PMC_MCKR_MDIV_Msk)) | PMC_MCKR_MDIV_PCK_DIV2;  // MCK max. = 150 MHz
    while (!(PMC->PMC_SR & PMC_SR_MCKRDY)) {}  // wait until ready

    PMC->PMC_MCKR = (PMC->PMC_MCKR & (~PMC_MCKR_PRES_Msk)) | PMC_MCKR_PRES(0);  // prescaler /1 (register value 0)
    while (!(PMC->PMC_SR & PMC_SR_MCKRDY)) {}

    PMC->PMC_MCKR = (PMC->PMC_MCKR & (~PMC_MCKR_CSS_Msk)) | PMC_MCKR_CSS_PLLA_CLK;  // master clock from PLLA
    while (!(PMC->PMC_SR & PMC_SR_MCKRDY)) {}

    // RTT on the 32 kHz slow clock, prescaler 33 => approx. 1 ms resolution
    RTT->RTT_MR = 33;
}
```

Counterpart for the P2:

```c
_clkset (modePll, _CLOCKFREQ);
```
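For the curious, the mode value passed to the P2 is a single packed word. The helper below is hypothetical and based on my reading of the P2 documentation (layout %0000_000E_DDDD_DDMM_MMMM_MMMM_PPPP_CCSS); the meaning of `modePll` in the line above is not shown in this thread, so treat this only as an illustration of what such a word might contain:

```c
#include <stdint.h>

/* Hypothetical helper: assemble a P2 clock-mode word for PLL operation.
   Assumed layout: %0000_000E_DDDD_DDMM_MMMM_MMMM_PPPP_CCSS */
uint32_t p2_pll_mode(uint32_t xdiv, uint32_t xmul, uint32_t ppp_code, uint32_t cc)
{
    return (1u << 24)                      /* E: enable the PLL */
         | (((xdiv - 1u) & 0x3Fu) << 18)   /* D: crystal input divider, 1..64 */
         | (((xmul - 1u) & 0x3FFu) << 8)   /* M: VCO multiplier, 1..1024 */
         | ((ppp_code & 0xFu) << 4)        /* P: post-divider code, %1111 = divide by 1 */
         | ((cc & 0x3u) << 2)              /* CC: crystal mode / load capacitance */
         | 0x3u;                           /* SS: %11 = PLL as system clock */
}
```

With a 20 MHz crystal, p2_pll_mode(1, 10, 15, 2) gives 0x010009FB, i.e. 20 MHz / 1 × 10 = 200 MHz with 15 pF crystal loading (assuming CC = %10 means 15 pF). As far as I understand the docs, the switch should be done in two steps (set the new mode with SS = %00 while still running on RCFAST, wait about 10 ms for the crystal and PLL to settle, then select SS = %11); a library routine like `_clkset` presumably hides exactly that.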

And yes, there are libraries for the ARM that simplify the above. But they bloat the code and have so many undocumented side effects that you'd better throw them into the bit bucket and go back to programming on the bare metal.

ManAtWork,

Hats off to you! This is the most informative and detailed answer one could get. Thank you.

I suspected I'd get something along these lines, as I've had some really hard times with ARM chips myself for the very same reasons you so accurately described, but thought it's maybe just me being too stupid or lazy. Well, at times I am. But it appears there are other, less personal reasons as well.

Most people I know who work with ARM chips try to stick to one or two of them (one at the lower and the other at the higher end), usually from the same manufacturer, that best suit their needs, to avoid that constant, time-consuming datasheet digging, and they do so until the needs change or the chip goes out of production. That is perfectly understandable for high-volume products, or for one particular product the chip is a good fit for.

But, as is often the case with products made to order or in small quantities, that approach just doesn't cut it.

This is why I turned to the P1 and P2, in the hope that they'll bring more flexibility to the design process and, in the end, simplify it and make it more efficient. Plus, with these I get the benefit of Peter's Tachyon and TAQOZ Forths, which are great.

ErNa,

And you have synthesized the answer masterfully. Thanks to you too.

And because it's so crazy, here's another story: the ATSAME70 has a built-in Ethernet controller, very powerful and useful, I thought. They supply a library, so it should be easy to use, I also thought... It turned out that the library didn't use DMA or interrupts at all, but instead polled and busy-waited on every single operation, although the chip would have supported both.

Needless to say, this ruined every bit of its suitability for real-time systems. If the connection was interrupted for a short time, the whole CPU stalled completely until the connection was re-established.
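The usual alternative to busy-waiting is to let an interrupt (or DMA completion) handler push incoming data into a buffer and have the control loop drain it without ever blocking. A minimal single-producer/single-consumer ring buffer sketch (hypothetical names; a production version on a cached Cortex-M7 would additionally need memory barriers and cache maintenance for DMA buffers):

```c
#include <stdint.h>
#include <stdbool.h>

#define RB_SIZE 64u  /* must be a power of two */

typedef struct {
    volatile uint32_t head;   /* written only by the producer (ISR) */
    volatile uint32_t tail;   /* written only by the consumer (main loop) */
    uint8_t data[RB_SIZE];
} ring_buffer_t;

/* Called from the interrupt handler. Drops data when full, never waits. */
bool rb_push(ring_buffer_t *rb, uint8_t byte)
{
    uint32_t head = rb->head;
    if (head - rb->tail == RB_SIZE)
        return false;
    rb->data[head & (RB_SIZE - 1u)] = byte;
    rb->head = head + 1u;
    return true;
}

/* Called from the control loop. Returns immediately when empty. */
bool rb_pop(ring_buffer_t *rb, uint8_t *byte)
{
    uint32_t tail = rb->tail;
    if (rb->head == tail)
        return false;
    *byte = rb->data[tail & (RB_SIZE - 1u)];
    rb->tail = tail + 1u;
    return true;
}
```

If the link drops, the control loop simply sees an empty buffer and keeps running instead of stalling.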

...but thought it's maybe just me being too stupid or lazy

Me too. Fortunately, a friend rewrote the whole driver for the Ethernet controller. I wouldn't have been patient enough. I'd rather have taken a big hammer and hit the ARM CPU to end it once and for all...

Atmel (now Microchip) ARM micros aren't particularly friendly, that's a fact. I use the ones from ST. Much nicer to me, but still, the more advanced ones come with manuals well over a thousand pages long, plus programming manuals, datasheets and errata notices, of course. And all that only to find out that a particular feature you need has a bug that isn't fixed, with no known workaround for the chip revision you just happen to have bought in volume.

Who has enough time to read the fine details, which is a must? I'm afraid not many. I'm really hoping the P2 story will be much happier. It looks that way now, but I've only just started that journey.

Thanks, guys, for your lovely (haha) insight into the ARM world. Peter J recounts similar experiences too.

Every time I go to use an ARM chip, I look at the mountain of docs, the confusing set of crappy configuration registers, and the mountain of various chip designs with different sets of peripherals, and then think better of getting into this nightmare.

Then I return to thinking how easy it is to use a P1 or P2 for my designs. I can’t help thinking that for many of my newer designs I would really have just liked an updated P1: just a bit faster, with more hub RAM (64KB min, 80KB would have been nicer. Spin could just access the 64KB without changes).

The P2 is really a big new beast that shares some common roots. It’s way more complex, but nowhere near an ARM, or even the PICs, ATmegas, etc. While I have my complaints (and they are actually software constraints that don’t make sense), the P2 is just sooo much better than the others!!! While it’s not as easy as the P1, it’s still a joy to work with.

FWIW, I still wish the smart pins had been implemented as a group of tiny, cut-down, fast P1 CPUs with some of the extra multi-pin instructions. We could have done so much more and been simpler, yet not taken any more silicon. In fact, the P2 cogs could then have been simpler, and probably 4 would have been enough. But I’m thankful for what we have! Dreaming is nice.

Today, I checked if I could finally finish this project. But after a quick look over the BOM and an inventory check at several distributors I came to the sad conclusion that I have to bury it. The SAMC21 ARM slave processor for the high voltage side and the current sensors are completely unavailable. I have ~10 boards but it doesn't make any sense to develop something that I won't be able to produce in series for the next 2-3 years.

So I'm thinking about a redesign with a slightly different architecture:

- P2 on the high-voltage side (back end), handles PWM and PID control
- classic current measurement with shunt resistors in the ground path of the IGBTs
- P1 on the low-voltage side (front end), handles command input and GPIO
- isolated serial link (RX/TX) between P1 and P2
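The isolated serial link could carry something as simple as the framing below. This is a hypothetical format chosen for illustration (sync byte, length, payload, 8-bit checksum); nothing in this thread specifies the actual protocol, and a CRC would catch more error patterns than a plain sum:

```c
#include <stdint.h>
#include <stddef.h>

/* Hypothetical frame: SYNC | LEN | PAYLOAD[LEN] | CHECKSUM
   The checksum is chosen so that LEN + payload + CHECKSUM == 0 (mod 256). */
#define FRAME_SYNC        0xA5u
#define FRAME_MAX_PAYLOAD 32u

/* Encodes into out (at least len + 3 bytes). Returns the frame size, or 0 if len is too big. */
size_t frame_encode(const uint8_t *payload, uint8_t len, uint8_t *out)
{
    if (len > FRAME_MAX_PAYLOAD)
        return 0;
    uint8_t sum = len;
    out[0] = FRAME_SYNC;
    out[1] = len;
    for (uint8_t i = 0; i < len; i++) {
        out[2 + i] = payload[i];
        sum += payload[i];
    }
    out[2 + len] = (uint8_t)(0u - sum);
    return (size_t)len + 3u;
}

/* Decodes a complete frame of n bytes. Returns the payload length,
   or -1 on a bad sync byte, length or checksum. */
int frame_decode(const uint8_t *in, size_t n, uint8_t *payload)
{
    if (n < 3u || in[0] != FRAME_SYNC)
        return -1;
    uint8_t len = in[1];
    if (len > FRAME_MAX_PAYLOAD || n != (size_t)len + 3u)
        return -1;
    uint8_t sum = len;
    for (uint8_t i = 0; i < len; i++) {
        payload[i] = in[2 + i];
        sum += in[2 + i];
    }
    sum += in[2 + len];
    return sum == 0 ? (int)len : -1;
}
```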

The added cost of the P1 is lower than the combined cost of the current sensors and the ARM slave processor. The P1 could boot from the P2's memory, so I don't even need a second flash chip. But there are also some drawbacks:

- analog feedback (resolvers or sin/cos encoders) would be hard to implement, because the P2 with its superior ADCs sits on the wrong side of the isolation barrier
- my Goertzel encoder can be connected, but needs a transformer and an isolated power supply
- simple feedback devices (incremental or RS485-based absolute encoders) can be connected to the P1

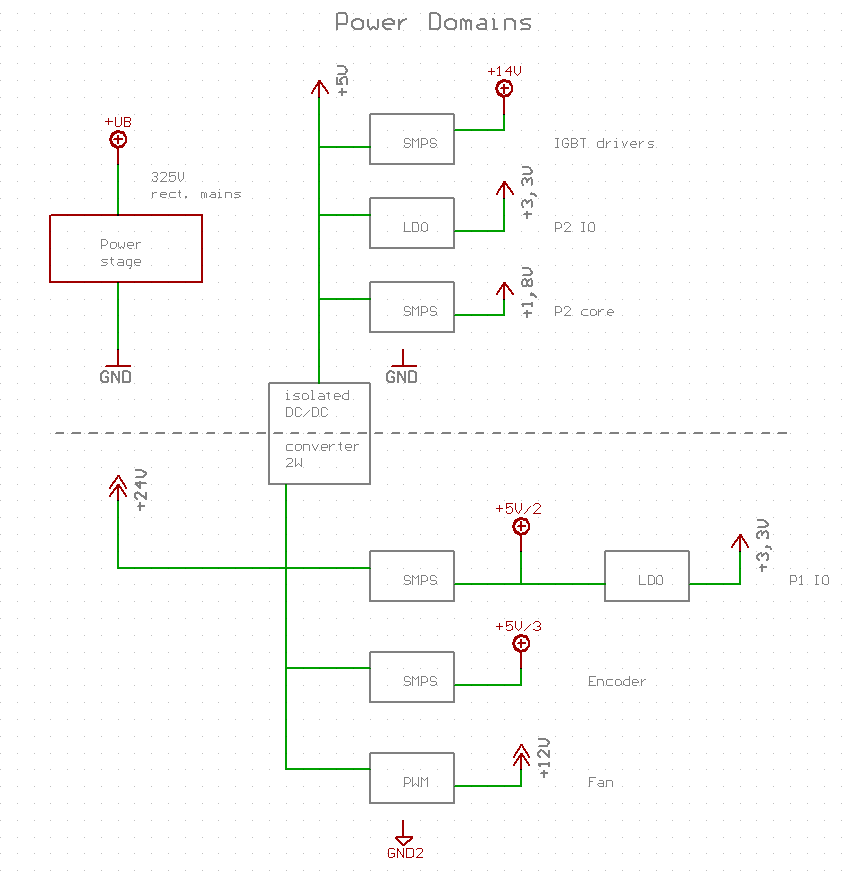

Although I find the idea of having a P1 and a P2 in the servo controller somewhat cool, the isolation barrier and the different power domains make the design rather complex. I'd need at least eight different voltage regulators.

The power stage runs on rectified mains voltage. But I don't have an onboard AC/DC converter. Instead, everything is powered from an external 24V supply. The P2 sits on the power ground domain because it has to measure the motor currents with ADCs connected directly to the shunt resistors in the power stage. But it has to stay alive when the motor power is shut down for safety reasons. So the P2 has to be powered from the 24V side which supplies standby power in that case. The encoder also has to stay active all the time to keep track of position while the motor is in standby.

Communication between P1 and P2 runs over an ISO7721 digital isolator, well, actually its Chinese copy, because the original one from TI is unavailable. The QPSK signal for the encoder data is transferred from the encoder to the P2 through a conventional transformer.

All eight regulators also need to be available, and the isolated one will be really expensive because the P2 is quite power-hungry.

Things are getting pretty exciting on my end but I'm working on a basic motion controller to interface with existing drives.

My customer in Ohio uses Beckhoff-based controls, but they just can't get them, and I have also researched the supply chain problem. Of the 23 systems they ordered back in November, they have yet to receive a single unit. They might receive 2 systems later this month.

They are pushing hard for my P2 system and plan to ditch Beckhoff altogether.

Electrical shock is not such a big concern if you use the proper protection. For prototyping I use an isolation transformer, so I can use grounded scope probes without causing short circuits all the time. And an RCD protects against electrical shock in case the insulation fails or I do something wrong with the wiring.

Using mains voltage actually makes things a lot easier if you need more than 100 W, because the currents are smaller and you don't need thick wires and chunky connectors and terminals. Yes, if something fails it could make some fireworks, but the electrolytic caps at 350 V contain much less energy than the battery of your cell phone.
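A quick check of the energy claim, with assumed values that are not from this design (a 1000 µF DC-link capacitor bank and a typical 3.85 V, 4000 mAh phone battery):

$$
E_{\text{cap}} = \tfrac{1}{2} C V^2 = \tfrac{1}{2}\,(1000\,\mu\text{F})\,(350\,\text{V})^2 \approx 61\,\text{J}
$$

$$
E_{\text{phone}} = V \cdot Q = 3.85\,\text{V} \times 4\,\text{Ah} = 15.4\,\text{Wh} \approx 55\,\text{kJ}
$$

So under these assumptions the phone battery stores roughly 900 times more energy. The difference is that the capacitors can release theirs almost instantly.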


I was just wondering about the projects that are on hold because of the shortage, and how many could kick off if the devs were aware of the Prop 🤔

Craig


Same story with Siemens, Allen Bradley, etc.

Opportunity is knocking 👍😎

That's actually somewhat frightening. Use care - We like you and would prefer that you not get fried. Thanks. S.
