New "compiler" for writing Elev8-FC float math

JasonDorie

Posts: 1,930

JasonDorie

Posts: 1,930

in Propeller 1

Thought I'd share a fun Prop project I'm working on for the Elev8-FC.

A little background: the Elev8-FC does all the IMU math on the Prop, in floating point. In order to keep it small and fast, I'm using a modified F32 driver to do the computation. I added a stream processor to it so I can feed it a list of instructions to execute - it processes the whole sequence and sets a flag when it's done, leaving the main cog free to do other tasks. The "instructions" are just 4 byte values: opcode, input1, input2, and output. The input/output values are byte indices into an array of 32 bit variables, mostly floats, but some ints.

All of this works well, but I've been coding it by hand. That was fine initially, but as the project has grown in scope it's become a bit of a pain point - changing the code isn't trivial, so typically I write in C on the PC until I get the math right, then manually translate to the FPU instructions, and I often make mistakes when translating.

Nothing makes mistakes faster than a computer, so I figured I'd try my hand at automating the process, and it works!

I looked into using Yacc/Lex (Bison/Flex) to generate the parser stage, but the documentation for those tools is terrible, and everything I found online was either "calculator" or "C", with nothing in between. Since I was really only interested in parsing a finite subset of C, with no control statements, I decided to write my own. There's still a bunch of work to do, but it's checked in to GitHub in the Flight-Controller project if you're curious.

First up is converting the code into an expression tree - This is done in two stages, tokenizing and parsing. The tokenizing stage is mostly a pre-filter that reads the source and passes along tokens, like "comment", "label", "operator", "function", "parenthesis", "constant", and so on, along with the relevant token content. For a label it would be the variable name.

The parser takes the token stream and makes sure that the tokens make sense. For example:

This code: rx = gx * const_GyroRate;

Becomes: label, assignment, label, operator*, label, end

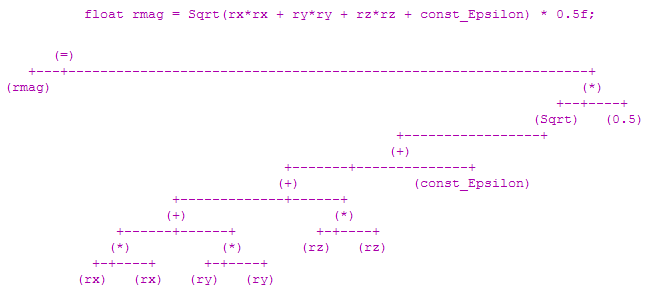

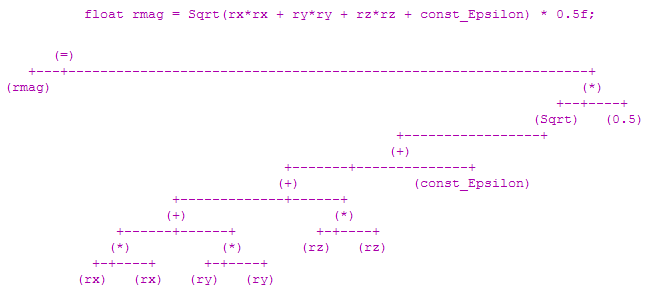

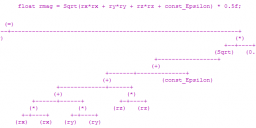

From the parser, I use a technique called "Recursive Descent" to generate an expression tree. This handles converting an infix expression with operators into a tree that represents the expression, and the order of operations derived from operator precedence. You end up with something like this:

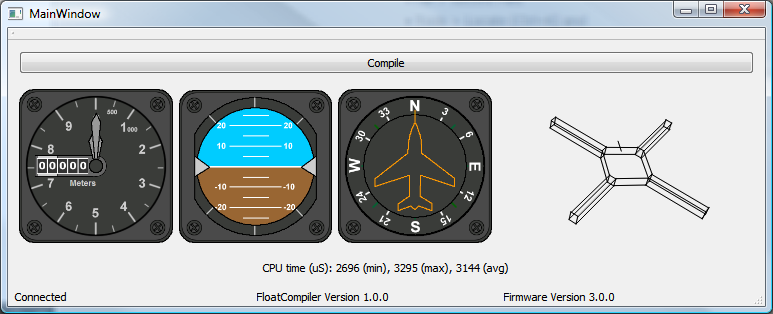

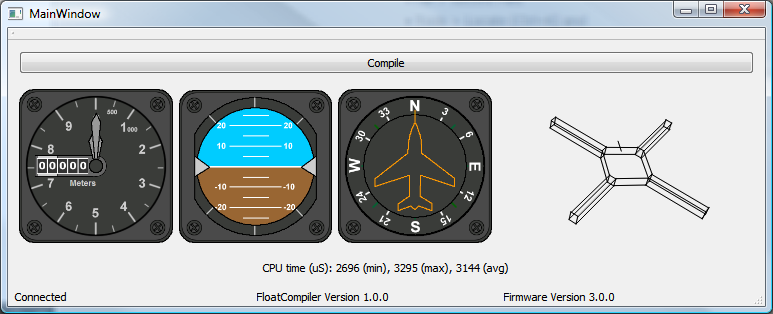

From the expression tree I generate the actual operators, along with a blob of which variables and constants were used, and any const initialization I need. I got it working last night, and actually replaced one of the core math routines in my Elev8 with the generated code, and it works exactly as expected. Even cooler, since the code is just C, I can run the exact same code on the PC in a previewer, feeding it data from the Elev8, and show both results overlaid on each other, like this:

The 3D "X' on the right is actually two models - one computed on the PC, and one computed on the Elev8, showing that they match (this is good). This frees me up to code new math functions in C on the PC, and when I'm happy with the results, have the F32 stream generated automatically from the source.

I've written a code generator before, but never the front-end of a compiler, so this was a fun diversion and I learned a bunch. It might also be useful for others, so if you have a project that needs to run a long sequence of floating point math in a hurry, this might help you.

I've attached an example of a cpp source function and the generated F32 instruction stream.

A little background: the Elev8-FC does all the IMU math on the Prop, in floating point. In order to keep it small and fast, I'm using a modified F32 driver to do the computation. I added a stream processor to it so I can feed it a list of instructions to execute - it processes the whole sequence and sets a flag when it's done, leaving the main cog free to do other tasks. The "instructions" are just 4 byte values: opcode, input1, input2, and output. The input/output values are byte indices into an array of 32 bit variables, mostly floats, but some ints.

All of this works well, but I've been coding it by hand. That was fine initially, but as the project has grown in scope it's become a bit of a pain point - changing the code isn't trivial, so typically I write in C on the PC until I get the math right, then manually translate to the FPU instructions, and I often make mistakes when translating.

Nothing makes mistakes faster than a computer, so I figured I'd try my hand at automating the process, and it works!

I looked into using Yacc/Lex (Bison/Flex) to generate the parser stage, but the documentation for those tools is terrible, and everything I found online was either "calculator" or "C", with nothing in between. Since I was really only interested in parsing a finite subset of C, with no control statements, I decided to write my own. There's still a bunch of work to do, but it's checked in to GitHub in the Flight-Controller project if you're curious.

First up is converting the code into an expression tree - This is done in two stages, tokenizing and parsing. The tokenizing stage is mostly a pre-filter that reads the source and passes along tokens, like "comment", "label", "operator", "function", "parenthesis", "constant", and so on, along with the relevant token content. For a label it would be the variable name.

The parser takes the token stream and makes sure that the tokens make sense. For example:

This code: rx = gx * const_GyroRate;

Becomes: label, assignment, label, operator*, label, end

From the parser, I use a technique called "Recursive Descent" to generate an expression tree. This handles converting an infix expression with operators into a tree that represents the expression, and the order of operations derived from operator precedence. You end up with something like this:

From the expression tree I generate the actual operators, along with a blob of which variables and constants were used, and any const initialization I need. I got it working last night, and actually replaced one of the core math routines in my Elev8 with the generated code, and it works exactly as expected. Even cooler, since the code is just C, I can run the exact same code on the PC in a previewer, feeding it data from the Elev8, and show both results overlaid on each other, like this:

The 3D "X' on the right is actually two models - one computed on the PC, and one computed on the Elev8, showing that they match (this is good). This frees me up to code new math functions in C on the PC, and when I'm happy with the results, have the F32 stream generated automatically from the source.

I've written a code generator before, but never the front-end of a compiler, so this was a fun diversion and I learned a bunch. It might also be useful for others, so if you have a project that needs to run a long sequence of floating point math in a hurry, this might help you.

I've attached an example of a cpp source function and the generated F32 instruction stream.

Comments

But I know it works on the Elev-8!

When I got curious about how one writes a compiler a few years back, even a simple one for a simple language, I also gave up on trying to understand the likes of Yacc/Lex (Bison/Flex) and ended up writing my own recursive decent parser in C. I did not even have a tokenizer, it was a single pass from source to generated assembler. All terribly inefficient and un-optimized but it worked.

That was hard enough for me!

If I were doing such a thing again I think I would be using peg.js. http://pegjs.org/

Mostly I wanted it for me, and I'm using Qt as the framework, which provides a lot of string handling, containers, etc. I was surprised at how fast it came together - I had working code generation by the third day.

...and that beauty had to be translated to a compiled BASIC dialect.

BASICally (mwhuaahahahahaaa!) I could do the same thing with own bytecode but I had to mimic the BASIC interpreter too by adding an own bytecode interpreter.

Lots of fun! \o/

Today I'd crawl thru Flex/Bison examples first too... ;-)

I am writing this question for all the experts here like JasonDorie and Heater.

All online information I have seen on C compilers all have the stack being used for local variable storage.

I am writing a compiler that uses general memory for local variables, and with each function call, pushes as much of this general memory onto the stack as needed to free up space for the new function's variables. So it's like I am doing it backwards.

Is there a name for this technique? Can't find any info on this. And perhaps this is how it's actually normally done for older micros? (8051, PIC without all the fancy addressing modes)

Functions, as we know them from most programming languages, can be called from many places within a program.

Ignoring local variables for a minute, this dictates that function calls store their return address somewhere. This does not require a stack (yet), only a single location in which to store a return address. Which is what happens in Propeller PASM with the CALL/RET instructions.

The "yet" part comes when you want to allow recursive calls in your program. Function "A" calls "B" calls "C" which calls "A" again. At this point you find you need a stack to remember all the return addresses so that you can return up the call chain and return to a different place each time.

There is a similar problem with local variables. One could do without a stack. Just keep the local variables of each function in a memory space somewhere.

Again, this whole scheme falls down if you want to allow recursive calls in your language. Every recursion requires a new set of local variables.

Conclusion: Stack is best unless you want to forbid recursion in your language.

Arguably, not supporting recursion is a way to make an efficient code generator on some architectures.

A traditional C program doesn't have this luxury for exactly the reasons Heater describes above. You can easily do this within a single code block if it doesn't call out to other functions. If other functions *are* called, you'd have to be able to map their variable usage as well, and in turn any functions they might call, all the way down the tree. If it's not a tree you need a stack, end of story, because you simply can't know how the code will behave when running.

What you're doing sounds like it might work, but it's probably really inefficient in memory usage. My guess is (and please correct me if I've misunderstood) that you're giving all local variables a pre-assigned location in memory to use. Then when you call out to a different function, you push those variables onto the stack. My question for you is: what happens if a function is never called? Are the locals used in that function "reserved" somewhere in your memory space? This is what the stack is truly for - preventing wasted space.

That is nifty.

How does the final code size (Calls + Modified F32) compare with the same equations fed thru PropGCC ?

Each opcode is <instruction>, <source1>, <source2>, <destination>

My assumption is that in CMM, the code would have to do something like:

Assuming best case, those are likely two bytes per instruction (one for opcode, one for local stack index or register). If any of those need to be written to / read from memory, the instruction size now has to include that too. Add in the actual address for #multiply (minimum 2 bytes) and I'm better by more than 2:1 for size. Again, unmeasured, but I'd be surprised if I was lower than a 3:1 savings.

GCC could make gains by using opcodes for push/pop to a register index in a single byte, possibly, and if expressions were primarily kept in registers that would save some, but I'd be very surprised if it was able to match the level of compactness I'm at.