P2 vs modern process limits

cgracey

Posts: 14,293

cgracey

Posts: 14,293

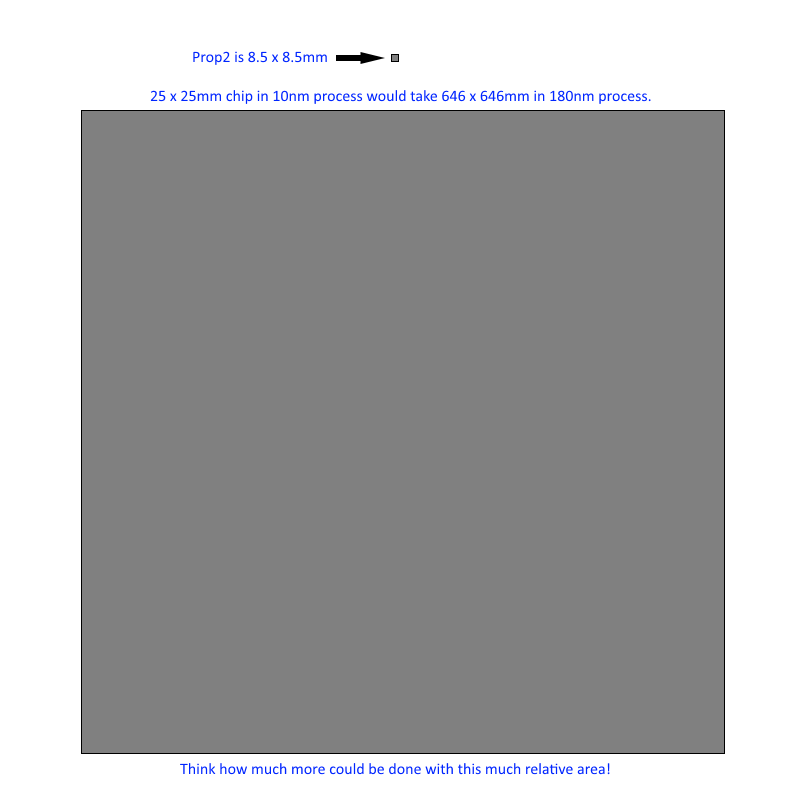

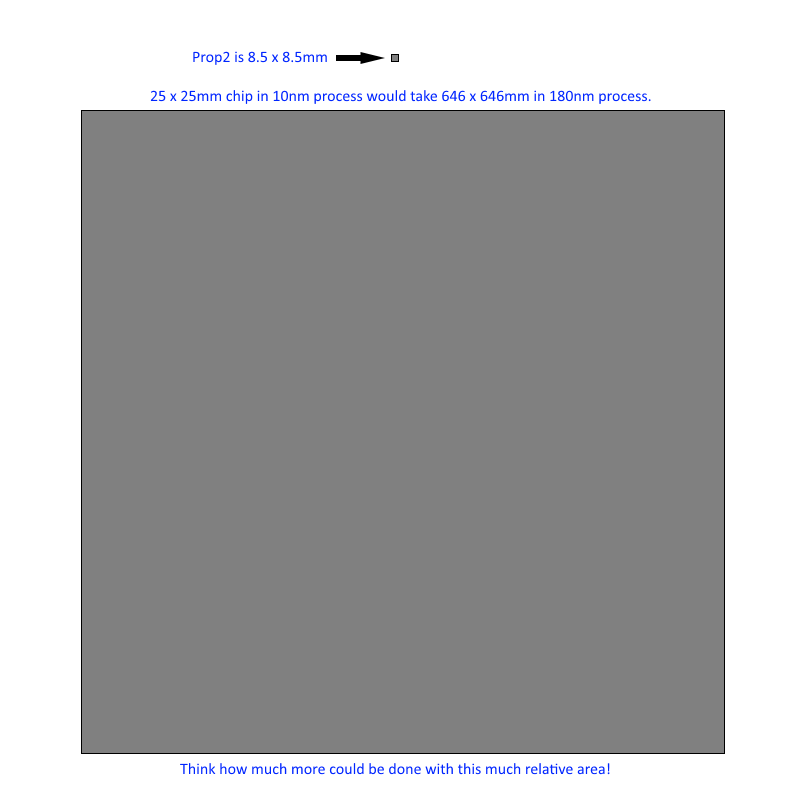

There are chips under design, already, for 10nm processes that will be 1 inch square (645 mm2). The mask costs, alone, on these will be upwards of $10,000,000.

The P2 is built in a 180nm process and is 0.335 inches on the edge (72 mm2).

A 10nm process is 324x denser than the P2's 180nm process. A 645 mm2 die is 9x bigger than the P2 die. Multiplying those two factors together yields 5,805. That's how much more logic and RAM are on these 1-inch square 10nm chips, compared to the P2.

To put that in perspective, if it were possible to realize that 10nm chip's logic functionality using 180nm process technology, the die would be 76x bigger, on the edge, than the P2. That would make it 25.46 inches on the edge (646mm)!!!

I/we struggle with this relatively simple project, but imagine if we had this much more room to work with:

I know... we'd probably die before anything got made.

The P2 is built in a 180nm process and is 0.335 inches on the edge (72 mm2).

A 10nm process is 324x denser than the P2's 180nm process. A 645 mm2 die is 9x bigger than the P2 die. Multiplying those two factors together yields 5,805. That's how much more logic and RAM are on these 1-inch square 10nm chips, compared to the P2.

To put that in perspective, if it were possible to realize that 10nm chip's logic functionality using 180nm process technology, the die would be 76x bigger, on the edge, than the P2. That would make it 25.46 inches on the edge (646mm)!!!

I/we struggle with this relatively simple project, but imagine if we had this much more room to work with:

I know... we'd probably die before anything got made.

Comments

E-PAD TQFP100 kind of chooses your die size for you...

Here are the sorts of things I recall others mentioning:

* Speed. That makes sense, but is there anything that can make the chip faster at 180nm? Less dense circuits (i.e. larger dies for the same design)?

* Yield. I can somewhat see this, but it seems that the setup cost necessitates an extremely high unit volume to bring the cost in line with the older, cheaper process.

What else is there, that's only possible at smaller scales?

10mm might not have fit the die pad and accommodate the down-bonds for the GND connections.

The layout is done, now, except for some power metal additions which are coming along.

Hmm... good point. I would have thought you'd have more room than 8.5mm, but I guess you need a certain amount of room for bonding. Do I remember correctly that the P2 package will be 14mm^2?

I wasn't suggesting a change.

Yes, it does show just how much more resource those big players have at their fingertips, but it also shows the risk trade-offs of playing in that space, and teh quite small number of actual design starts.

Some interesting observations here

https://www.semiwiki.com/forum/f293/tsmc-7nm-trials-start-next-year-7672.html

This from Samsung :

- they cover both leading edge, and 'matured node'

7nm: we have already begun work on our cost optimized 7LPP node which comes with very competitive PPA scaling.

8” matured node: keeping in mind there are still ample of new designs and applications that can take advantage of 8in technology, we are opening up our differentiated 8in technologies ranging from 180nm to 65nm, covering eFlash, Power devices, Image sensors and High voltage processes

Have you asked OnSemi about BGA options, and if anything simple needs doing now, to make BGA possible (/ not excluded) as a future package choice ?

The 1" sq chip in 10nm at 76x quivalent Prop in 180nm on the edge gives an increase from the current 25 pins per edge to 1,900 per edge, or 7,600 EHQFP (exceedingly heavy QFP) package. Presumably there would be a similar increase in mass?

*No toes were harmed during this act.

Those 92,800 cogs could easily clock at 3, maybe even 4 GHz. You start to realize just how much relative wire time must be involved at those scales.

From jmg's forum link:

"By the way, sizeable 10nm demand in 2nd quarter 2017 is the iPone 7 refresh of course. " (iPone = iPhone)

At those nodes, maybe several consumer products drive 80% of demand.

More from jmg's link:

"At 14nm, Samsung seems to be doing well here as their fabs in Korea and Texas are running full time, with over 0.5M wafers shipped and defect density below 0.2 defects/cm^2 in production."

20" wafers???

I think that refers to 500,000 chips shipped.

Oh, duh! Well, wait... wafers, not chips. Over 500,000 wafers were shipped. Probably 300mm wafers.

In reality the geometry is bigger, so you can not just scale to a 180nm process to get the yield.

I read it in a ordinary newspaper, so I'm not sure if it's true.

Andy

Yes, I think there is an element of 'smoke and mirrors' in some of these 'nm' figures, but I guess as long as everyone agrees on using the same smoke, they still indicate relative improvements ?

Certainly, you cannot simply scale 10nm:180nm, as very little of the die pushes 10nm, even virtually.

I've heard these high end devices need very serious clock tree design efforts, with amps involved.

If we could build a Propeller with an infinite number of COGs what would it's performance be?

I suggest zero MIPS.

Why?

Because the bandwidth to HUB would be whatever it is now divided by infinity. That is to say zilch.

There would have to be some serious re-architecting of the Propeller to make any use of 92,800 COGs.

I don't think there is a serious suggestion for 92,800 COGs on the table, just a musing over what the other end of the nm spectrum can give designers to play with.

If Quartus takes 2 hours to compile Chip's 16 cog verilog code now, even 1000 cogs would take an eternity.

I've heard of one-month place-and-route runs. Hopefully, no bug is found three weeks in.

There is an FPGA cluster made by Cadence called Palladium that costs $1M, I heard. It's for ASIC prototyping. I bet that thing takes a lot of time to compile for. The user's design would have to be partitioned somehow.

I just now heard our 7-year-old daughter ask her mom, "When you do math REALLY fast, does it knock you out?"

Yes, a single infinite HUB. Well we were being absurd, might as well go all the way

More seriously is that it it's Amdahl's law that gets in the way when parallelizing things.

Yeah, there are limits to the energy you can muster. That's why it can't knock you out. Maybe a yogi could knock himself out, but we are more likely to peter out in a stupor of thought with images of food floating in our minds.

Or you could make a tree structure, where groups of 8 or 16 cogs have access to their own local hub, and those hubs are tied into higher-up hubs somehow, which are again tied into higher-up hubs, and so on. This sounds like it would need caches, which sounds disgusting.

But what would be the point of doing this with the Propeller? If you want to do supercomputing, use a supercomputer.

If HUB access proves too long in P2, another variant would be to split the memory plane, so clusters of 4 can access their shared area in 4 hub cycles and a larger common area could still be 16 Cycles.

Of course, this adds another memory segment to explain, and for tools to track, and it lowers the peak memory size a Single COG could 'see', but it would cut the latency between closely co-operating COGs.

I'm almost done adding 1/2/4-bit modes to the streamer. I've got the outputting working. Now, I'm working on the inputting. After this, I'm going to add... it'll be a surprise. It's mainly just a matter of wiring and a mux, but will finish the design.

Yahoo! Guessing time (I'd never do that!) ... Top candidates: Streaming to the LUT, or Streaming to/from Smartpins. Oops, that's way more than some muxing. That leaves Cluso's Cog to Cog latch as a possible.

I just finished the 1/2/4-bit pin-to-hub streamer input. I still need to test it, though.

You're on track with Cluso's latch, but it's better than that.