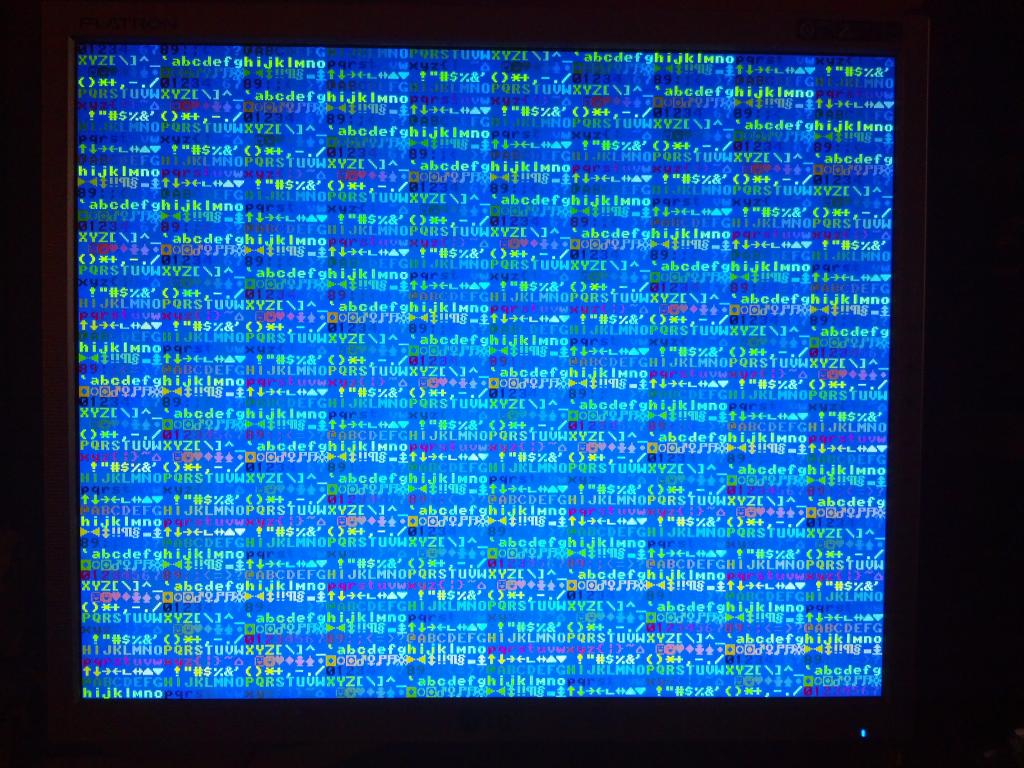

VGA and NTSC tiled color text console driver

pedward

Posts: 1,642

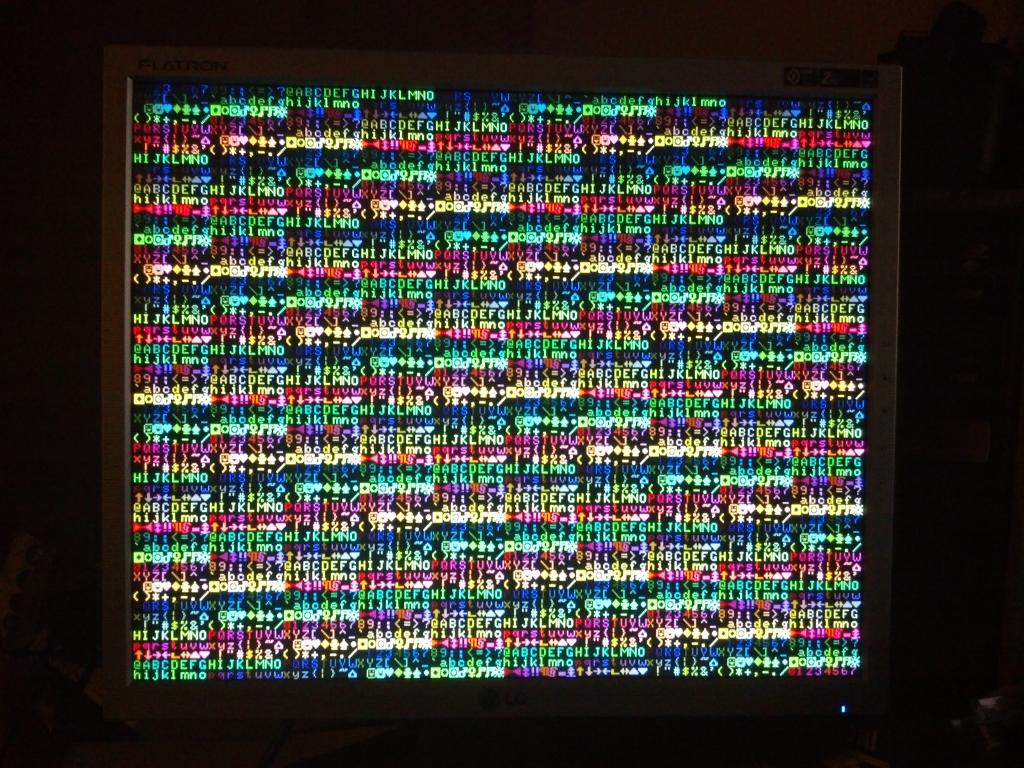

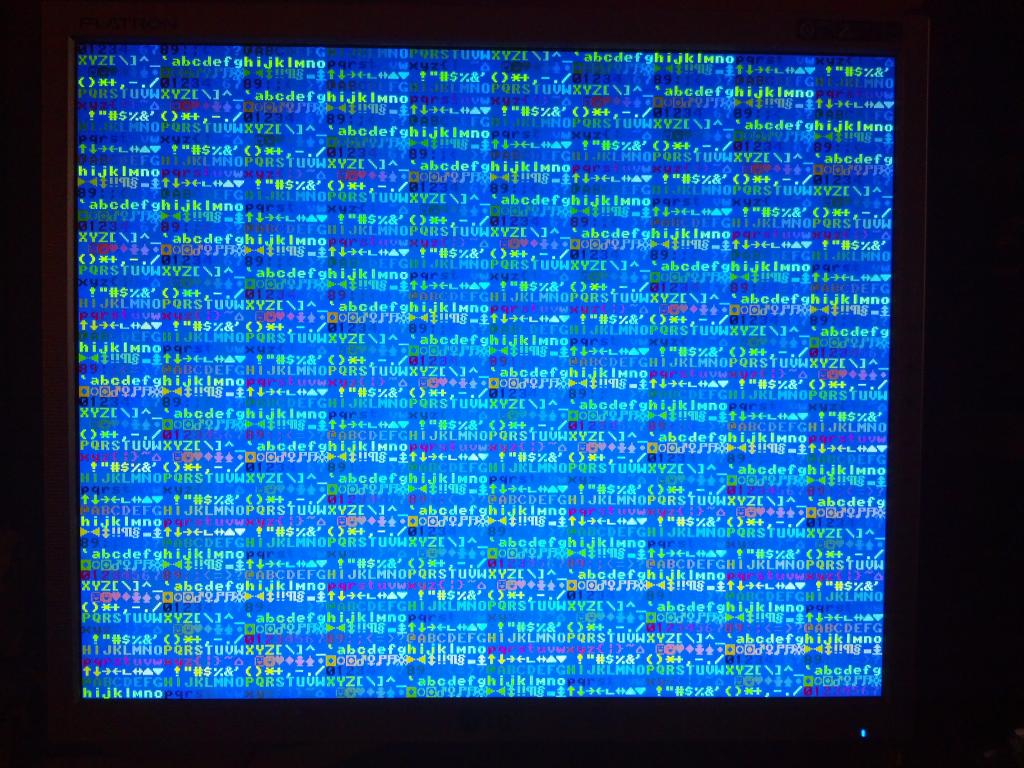

So I rewrote my TTY driver to do color per cell, at least FG color.

The format is a lot like the IBM PC: it's 1 word per character, with the high byte being the foreground color in 3:3:2 and the low byte being the character code.
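As a rough illustration of that cell layout, here is a small Python sketch (not the driver's PASM). The function names are made up for this example, and the R-G-B bit order inside the 3:3:2 byte is an assumption; the post only says "3:3:2".

```python
def pack_cell(char_code, r, g, b):
    """Pack one screen cell: high byte = FG color (3:3:2), low byte = character.

    r and g are 0..7, b is 0..3. The red-green-blue ordering of the
    3:3:2 byte is assumed here, not taken from the driver.
    """
    color = ((r & 0x7) << 5) | ((g & 0x7) << 2) | (b & 0x3)
    return (color << 8) | (char_code & 0xFF)


def unpack_cell(cell):
    """Return (color_byte, char_code) from a 16-bit cell."""
    return (cell >> 8) & 0xFF, cell & 0xFF


# 'A' in full red
cell = pack_cell(ord("A"), 7, 0, 0)
color, char = unpack_cell(cell)
```

At 2 bytes per cell, an 80x25 text screen would need 4000 bytes of buffer.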

If there were more cycles, I would have implemented a 1-of-16 FG and BG color palette, but there just weren't enough cycles to be had, so I cheated.

The FG color in the mode I'm using is bits 18-27, but since that isn't addressable directly and the bgcolor is addressable using MOVD, I switched them. The FG color is now the D register and the BG color is set in a variable and gets initialized into the color mask on each scanline render.

I spent a lot of time instrumenting my code to really suss out what is going on with the VID. It's going to infuriate and confuse a lot of people because of the seemingly indeterminate nature of how it works.

The basic thing you need to understand is that if the data to be displayed is stored in the CLUT, you have to double buffer the data because the VID is running out of sync with the COG. There isn't any way around this fact that I've found. What makes this interesting is that there are 8 modes that take data directly in the S register to WAITVID and use the CLUT as a palette instead of as a buffer. These should be immune from the problem because the data is taken away immediately and is determinate.

I initially thought that the best way to speed things through the VID was to build up a scanline and pump it to the VID in quick succession, but this is actually the opposite of what you want. The reason is that you can only stack a few VID instructions before you need to pump more data into the CLUT, and writing all of the data first just doesn't work.

The solution is to stream the data to the VID in real time: work on a little bit, feed the VID, work, feed. This is how I rewrote my rendering mechanism. It renders 1 character, then feeds the VID, renders, then feeds. The trick is that there are 256 indices in the CLUT, so I can waste some space and save clock cycles by only using 1 byte of every long. Each successive call to VID gets a different CLUT address, so it doesn't matter how many calls out of sync I am, because they all point to different memory locations and won't conflict.

This turns out to work very well and is scalable to pretty much any display resolution because the timing is fixed per tile. Add more tiles and the timing stays the same, because the important factor is that the tile timing is right, not the overall time to render a single screen (though that does come into play eventually).

The rendering loop has 15 instructions, and that is the practical maximum you can execute between feedings to the VID. It won't tolerate delays; it's very, very tight.

What my instrumenting has shown me is that very often when you make a call to WAITVID, the COG will spin however many cycles it needs before the prior VID command is done executing. Some command calls take a lot of time before they return, some are fairly short. There is no deterministic way to control the VID because it needs to be fed, and you have no way of knowing exactly when it will read the data and execute your command. The VID is almost always slower than the COG, so the COG usually can pump the data into the CLUT faster than the VID can read it, and this actually becomes a problem--a race condition.

Since there is no way to sync the VID and the COG with respect to reading the CLUT, you must double buffer data. Pumping the data in before calling to VID doesn't actually work as well as it does on paper. What I found is that you get very unexpected results from the VID if you try to put data into the CLUT before executing a command, but if you double buffer, or sometimes write data behind the VID, it works. The most deterministic way is to double buffer; then you don't have any contention.

My color tile driver uses a unique CLUT address for each call to WAITVID, avoiding buffering issues.
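To make the unique-address idea concrete, here is a toy Python model. It is not the driver's code and not a cycle-accurate model of the VID: the function name, the `lag` parameter and the queue are all invented for illustration. A writer stores each tile in a CLUT slot and issues a command pointing at that slot; a reader executes each command `lag` calls later and displays whatever the slot holds at that moment.

```python
def displayed(tiles, slots, lag):
    """Return what a lagging reader shows for each tile (toy model).

    tiles: data written by the COG, one item per WAITVID call
    slots: number of distinct CLUT addresses the writer cycles through
    lag:   how many calls the reader (VID) runs behind the writer
    """
    clut = [None] * 256
    pending = []   # slots named by commands that have not executed yet
    shown = []
    for n, tile in enumerate(tiles):
        slot = n % slots
        clut[slot] = tile        # COG writes the tile data
        pending.append(slot)     # COG issues a command naming that slot
        if len(pending) > lag:   # VID finally executes the oldest command
            shown.append(clut[pending.pop(0)])
    while pending:               # VID drains whatever is still queued
        shown.append(clut[pending.pop(0)])
    return shown


text = list("HELLO")
one_slot = displayed(text, slots=1, lag=2)      # slot reused: data overwritten
many_slots = displayed(text, slots=256, lag=2)  # unique slot per call: intact
```

In this model the output is only correct when the number of slots exceeds the lag, which is why cycling through all 256 CLUT indices leaves plenty of margin regardless of how far out of sync the two sides are.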

I also included some additional fonts, a couple of them are very legible, and I included some vintage stuff (Hercules graphics card fonts). The thin8x8 font is actually nice.

Comments

The notion of flipping between two buffers to overcome timing uncertainties is a fundamental that must be employed when streaming video data. Once you get that, though, it all becomes very simple again.

I second what Chip said. It's easier to grok the stream in a single buffer environment, but much harder to do complicated display tricks. With P2, the double buffer means some "slop" in how things happen, but it also means that chaining them end to end, with effects and mode changes, is now easy! (well, as easy as video gets, but you know what I mean)

IMHO, you could "sync" with a small loop that fires off a series of short waitvids. Read the counter, compare, do math, adjust waitvid length, then when it's all working, continue on. Not sure what the point of it would be though. One use I can think of is to use two cogs to do a display where color info is run at a different resolution than luma info on component for simple compression of high color images. I plan to try that someday. The YPbPr format is cool that way, and it's something I've long wanted to do.

For most uses, the setup we have now actually delivers more from your code, because there isn't the difficult managing of chains of waitvids...

IMHO, you could interlace this driver to get a little lower sweep rate, maybe enough time to drop your background color in... You could also store the font data so that the rev instruction isn't needed too. I'll bet you have time to do this on component or composite NTSC.

However it all shakes out, we are at 60Mhz, a small fraction of the real clock speed. None of this will matter on the real chip, which will be able to do something like this as a mere task in a COG. Then we all will be grumbling about more COG space, and easier LMM tools.

I instrumented my loop that displays characters, and the loop took 15 cycles to execute, which means every instruction is hitting on all cylinders. The WAITVID takes 8 cycles at 30Mhz, or 16 cycles at 60Mhz. This means you have exactly 16 cycles to get everything done. You can't pre-render the content because it stalls the VID and causes the display to tweak out, so you have to constantly feed the VID.

So a rule of thumb with the VID:

The number of clock cycles it takes to render the data must be less than the number of clock cycles it takes to display.

This seems obvious and simple, but if you count your clocks, you know how much you can fit into a loop.

I shaved the render loop down to 13 clocks, no NOPs, actually replacing one of the NOPS with a WAITVID, since WAITVID works in write behind.

Coincidentally this means it is unlikely that HUB based fonts will work with this rendering architecture.

The real chip will have many more cycles to burn, at 160Mhz you will have about 5.3 clocks (average) per display clock with a 30Mhz dot clock.

But consider that Prop1 does single-cog hub-based fonts with lesser video hardware and 1/3 the MIPS of even the Terasic Prop2 emulators. The Prop2 should be able to do much more than Prop1 with hub-based fonts.

'nuff for tonight, now I've got hub fonts and can do 8x16 and 256 character fonts. BTW, this only works for video modes up to a 30Mhz dot clock, because it takes 2 clocks per dot at 30Mhz. If you lower the resolution by doubling pixels in the waitvid, you can go to higher resolutions.

There just aren't any more clocks, this is as big and as small as you can write that routine, it has to be 16 clocks for the hub windows to work.

The driver uses a 4k font loaded at $2000 and 2 byte per character buffer, fgcolor and character.

The highlight of this driver is that it uses a hub stored font comprised of 256 characters in an 8x16 matrix. This gives you the familiar standard IBM PC character set and font.

That's a really nice display, rock solid on my Dell monitor, in fact most stable I have seen out of a Prop!

Regards,

Coley

The driver is currently limited by the speed of the FPGA. Since the dot clock of the VGA is 30Mhz, it takes 2 system clocks to render 1 pixel. Since the driver renders 8 pixels, it takes 16 system clocks. By syncing the hub windows in the routine, the loop is lockstepped to this 16 clock timeframe. When the real silicon is available, it will be 160Mhz, which gives 160/30 = 5.3 system clocks per pixel. There will be a lot more clock cycles available to do some shifting to implement a 16 color foreground/background in one byte.
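The clock budget per tile is easy to work out for other clock speeds. A quick Python sketch of the arithmetic (the function names are mine; the 60Mhz, 30Mhz and 160Mhz figures and the 8-pixel tile come from the thread):

```python
def clocks_per_tile(sys_mhz, dot_mhz, pixels_per_tile=8):
    """System clocks available while one tile's pixels are being shifted out."""
    return sys_mhz / dot_mhz * pixels_per_tile


def fits(render_clocks, sys_mhz, dot_mhz, pixels_per_tile=8):
    """True if a render loop of render_clocks keeps up with the display.

    A loop that exactly matches the budget is treated as fitting, like the
    16-clock lockstepped hub-font loop described in the thread.
    """
    return render_clocks <= clocks_per_tile(sys_mhz, dot_mhz, pixels_per_tile)


fpga_budget = clocks_per_tile(60, 30)      # 16.0 clocks on the FPGA
silicon_budget = clocks_per_tile(160, 30)  # about 42.7 clocks at 160Mhz
```

So the same 8-pixel tile that leaves 16 clocks on the 60Mhz FPGA would leave roughly 42 clocks at 160Mhz, assuming the dot clock stays at 30Mhz.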

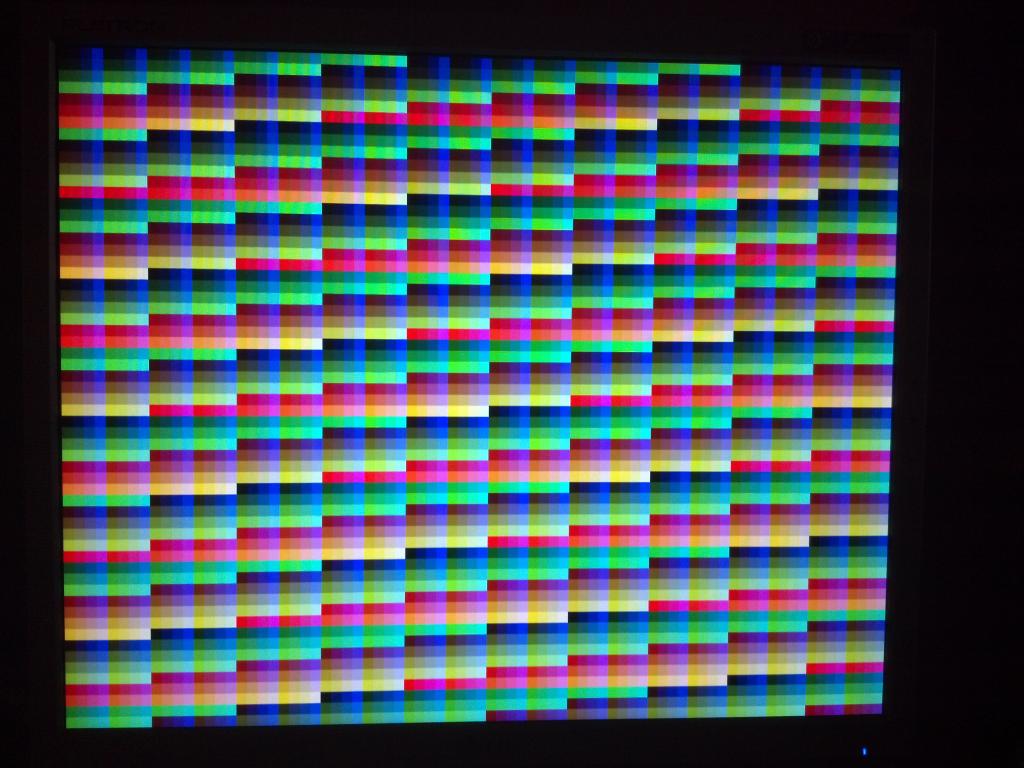

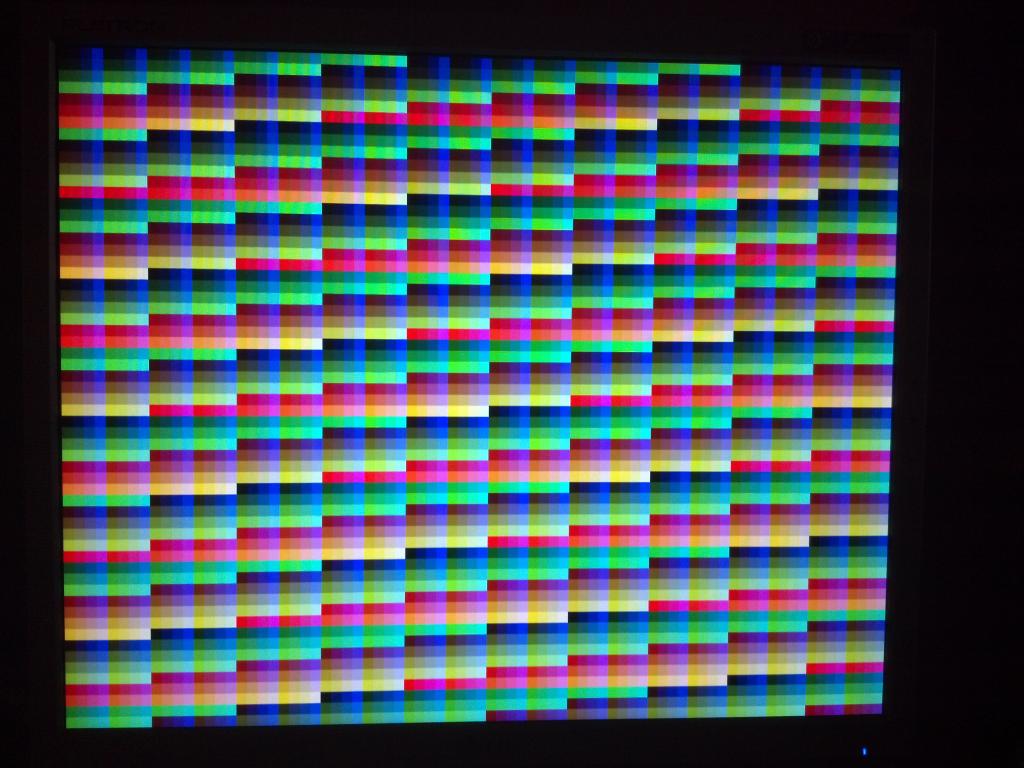

The other neat thing you can do is 4-bit color fonts. If you change the RDBYTE to a RDLONG in the bitmap lookup, and change the color mode to 4 bit, you can have 16 colors per pixel in a bitmap font. This would permit you to do effects like gradients in a video game.

The relevant modes are:

%1000 = STR4_RGBI4 * - 4-bit pixels are streamed from stack RAM starting at %AAAAAAAA plus S[7:0], with S[31..29] selecting the starting nibble. The pixels are colored as:

- %0000 = black
- %0001 = dark grey
- %0010 = dark blue
- %0011 = bright blue
- %0100 = dark green
- %0101 = bright green
- %0110 = dark cyan
- %0111 = bright cyan
- %1000 = dark red
- %1001 = bright red
- %1010 = dark magenta
- %1011 = bright magenta
- %1100 = olive
- %1101 = yellow
- %1110 = light grey
- %1111 = white

%1001 = STR4_LUMA4 * - 4-bit pixels are streamed from stack RAM starting at %AAAAAAAA plus S[7:0], with S[31..29] selecting the starting nibble. The pixels are used as brightness values for colors determined by S[11..9]:

- %000 = black..orange
- %001 = black..blue
- %010 = black..green
- %011 = black..cyan
- %100 = black..red
- %101 = black..magenta
- %110 = black..yellow
- %111 = black..white

By using the high byte of the framebuffer to select the color palette, you can use the same font format and gradients, but change which palette is used to render the text on the fly.

The font format would be 32 bits per row (8 pixels wide at 4 bits per pixel), times 8 or 16 pixels tall. There are many 8x8 sized fonts used in classic 8-bit and arcade games that have gradients. You would need 4*8 or 32 bytes per character. An 8x8 128 character font would take 4 kbytes at 4 bits per pixel, 8 kbytes in 8x16.

Right now an 8x16 256 character font takes 4 kbytes, so the 8x8 128 character 4 color gradient is the sweet spot.
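The size comparison above can be checked with a few lines of Python. The helper name is just for this example; the numbers are the ones from the posts.

```python
def font_bytes(width, height, chars, bits_per_pixel=1):
    """Storage needed for a fixed-size bitmap font, in bytes."""
    return width * height * bits_per_pixel * chars // 8


mono_8x16_256 = font_bytes(8, 16, 256)                    # 1 bit per pixel
color_8x8_128 = font_bytes(8, 8, 128, bits_per_pixel=4)   # 4 bits per pixel
color_8x16_128 = font_bytes(8, 16, 128, bits_per_pixel=4)
```

The 8x8, 128 character, 4 bits per pixel font lands on the same 4096 bytes as the current 8x16, 256 character monochrome font, while the 8x16 version doubles that to 8192 bytes.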

Here's the challenge to the group: Find me a 128 character font with 4 color gradients in 8x8 pixels, and I'll whack together a driver to display it. This would make for a very neat text display, especially with some graphical characters mixed into the font in the low areas.

Keep up the good work!

The font table is an 8x1024 pixel BMP image, which is flipped vertically to compensate for the flipped BMP format. I then massage the bits in the driver to de-interleave the nibbles.

The BMP is a 4bit grayscale palettized image using the palette I included in the zip file.

To make a custom font, create an 8x1024 pixel image in grayscale mode. Once you've pushed all of the characters into the image, select the GrayProp palette as the current palette, then choose "Colors->Map->Palette Map" to map all of the color shades to the palette. Then choose "Image->Mode->Indexed" and choose "Use custom palette" and select the "GrayProp" palette. Then unselect "remove unused colors from palette" and select OK. This will convert the grayscale image into a 4bpp palettized image that matches the palette needed for the bitmap font.

The image must be flipped vertically so the order of lines is correct in the binary file.

There are 2 ways to extract the binary BLOB from the BMP file. The easiest is to use the Unix dd command to extract the data:

dd if=snkfont1.bmp of=snkfont1.bin bs=1 skip=118

The other option is to load the entire BMP file into memory and adjust the font offset to add 118 bytes to skip the header/palette.
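If dd isn't handy, a few lines of Python can do the same job. This is only a sketch: the function name is made up, and it relies on the standard BMP file header, which stores the offset to the pixel data as a little-endian 32-bit value at byte 10. For a 16-color file with the usual 40-byte info header that offset works out to 14 + 40 + 16*4 = 118, matching the skip value above.

```python
import struct


def bmp_pixel_data(bmp_bytes):
    """Return the raw pixel data of a BMP, skipping header and palette.

    Reads the pixel-data offset from the file header instead of
    hard-coding 118, so it still works if the header size differs.
    """
    if bmp_bytes[:2] != b"BM":
        raise ValueError("not a BMP file")
    (offset,) = struct.unpack_from("<I", bmp_bytes, 10)
    return bmp_bytes[offset:]


# Usage, assuming the file names from the dd example:
#   with open("snkfont1.bmp", "rb") as src:
#       blob = bmp_pixel_data(src.read())
#   with open("snkfont1.bin", "wb") as dst:
#       dst.write(blob)
```

For the 8x1024, 4bpp font image the result should be 4096 bytes, since each row is exactly 4 bytes and needs no padding.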

Once you've loaded the font into memory, you have 8 different colors to choose from, with 16 shades represented in the palette. The font actually specifies the background color as well as the foreground color, so in the screen buffer you only specify the palette and character.

The result is a cartoon or arcade game like text console. The code can be easily adapted to NTSC too.

I was looking at this mode:

%0011 = CLU4_SRGB26 - 8 4-bit offsets in S lookup 2:8:8:8 pixel longs in stack RAM

And realized that it's much more powerful for arbitrary colorization. You would define your entire palette ahead of time, and use the high byte of the screen buffer to choose the palette offset, the shades defined in the font would define which colors within the palette to use. In practice the buffer format is identical, you use the low 3 bits of the high byte to choose your palette, but you can arbitrarily choose all palette colors, instead of being limited to the 8 luma palettes.
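Roughly, the lookup I have in mind would go like the Python sketch below. Treat it as an illustration only: the function name is invented, and laying the 8 palettes out as consecutive 16-entry blocks in the CLUT is my assumption about how you would arrange it, not something the mode dictates.

```python
def clut_index(cell, shade, shades_per_palette=16):
    """Pick a CLUT entry for one pixel of a character cell.

    cell:  16-bit screen buffer word; low 3 bits of the high byte
           select the palette
    shade: 4-bit value from the font bitmap for this pixel
    Assumes palettes are stored back to back, 16 entries each.
    """
    palette = (cell >> 8) & 0b111
    return palette * shades_per_palette + (shade & 0xF)


# Character 0x41 drawn with palette 3, pixel shade 5
index = clut_index(0x0341, 5)
```

With 8 palettes of 16 entries each, this would use 128 of the 256 CLUT longs.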

Is there any value in making this option available, or have I nauseatingly exhausted the list of tiled text console drivers?

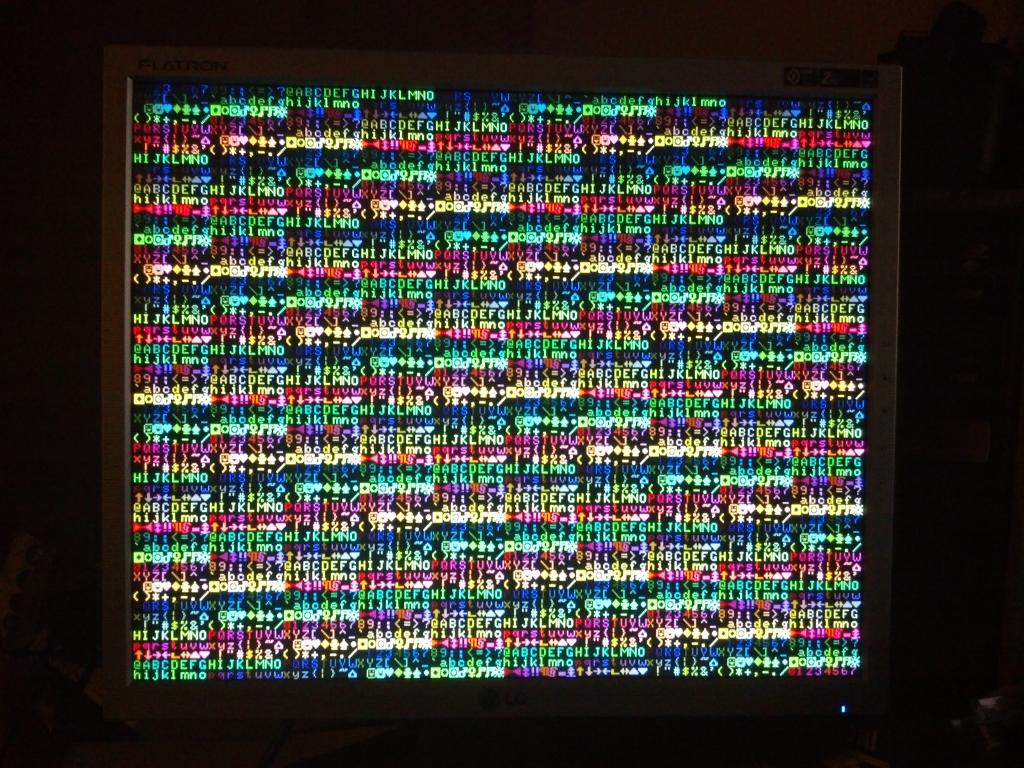

It would be easy to make a bunch of graphic characters and use them for sprites in a video game, so I made this video to demonstrate. It was difficult to capture because even when I turn off the anti-shake, the camera continues to try and track the motion and the screen was shuttering.

[video=youtube_share;SclYBoiFiiU]

Is it possible for you to make it 800x600 pixels?

Very much Thanks.

It functions correctly on my 800x600 LCD.