Bug in video output?

pedward

Posts: 1,642

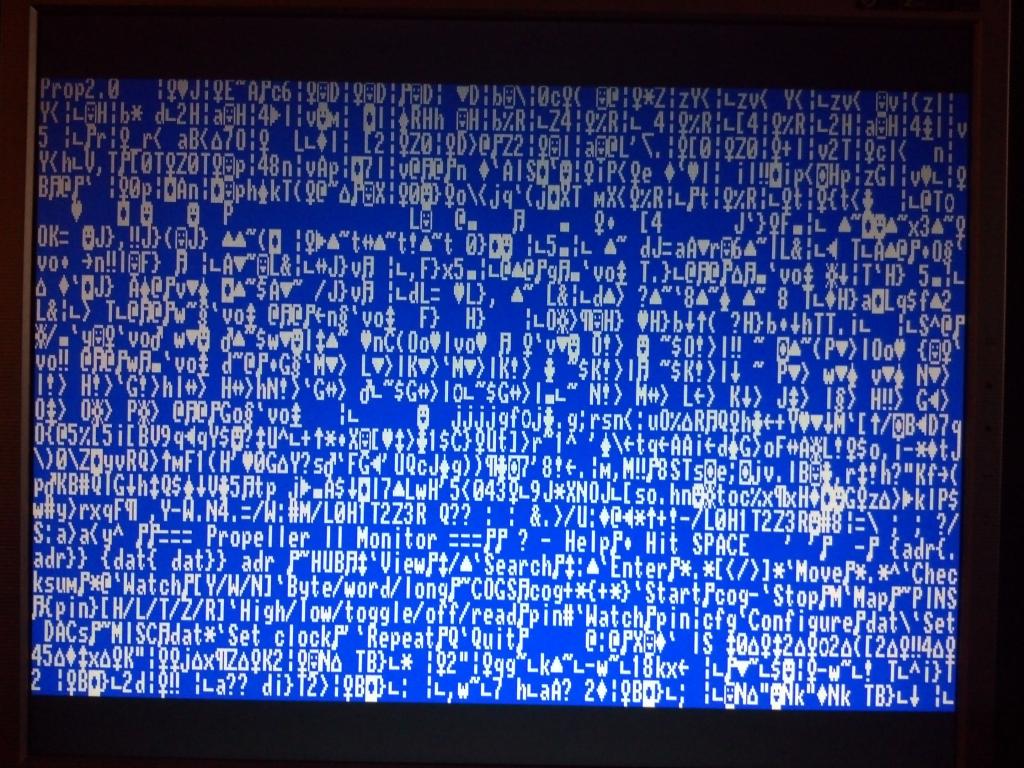

I've noticed for a while that there seems to be an issue with the first scanline being "short" or truncated in height when displaying VGA.

The problem is present across all resolutions and it seems to be an issue with the buffering in WAITVID.

The first scanline is short, but if you put a dummy sync and WAITVID at the top of the field, the problem is remedied and you don't get any extra scanlines.

So far the demos have only focused on color bars, but if you display discrete pixels, you get "short" pixels. Increasing the blanking doesn't fix it; only priming the VID pump with a dummy seems to work.

A bare horizontal sync or a bare WAITVID on its own doesn't fix it, because it tweaks the monitor out, so you need to put an hsync followed by a WAITVID at the top of the field.

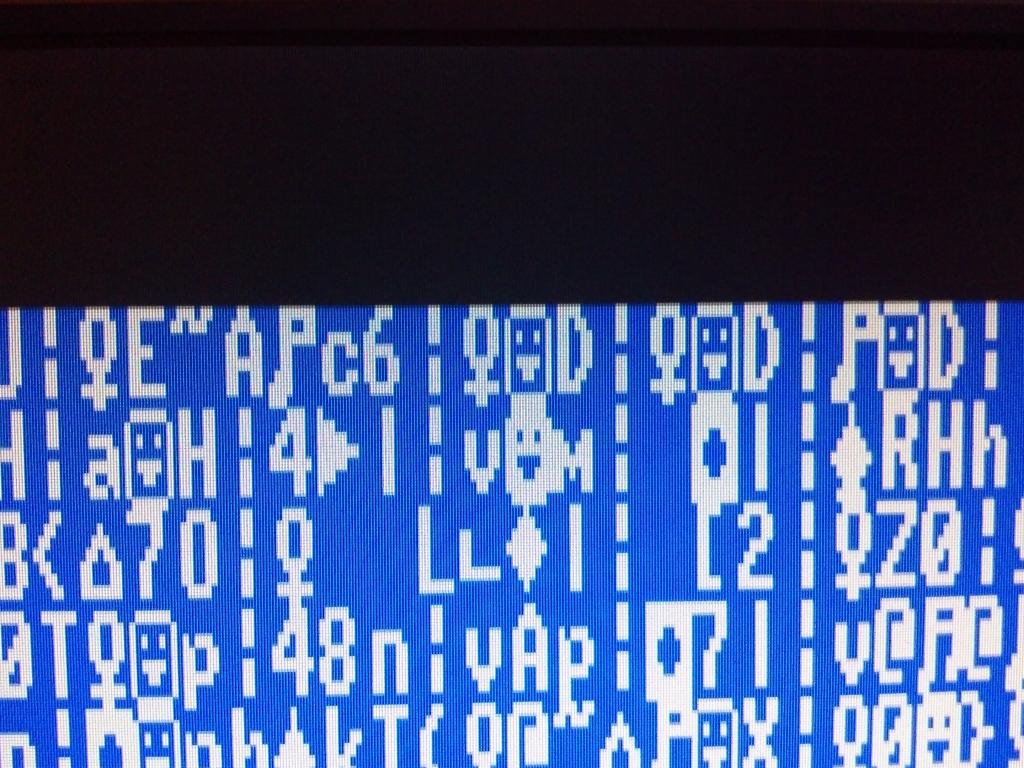

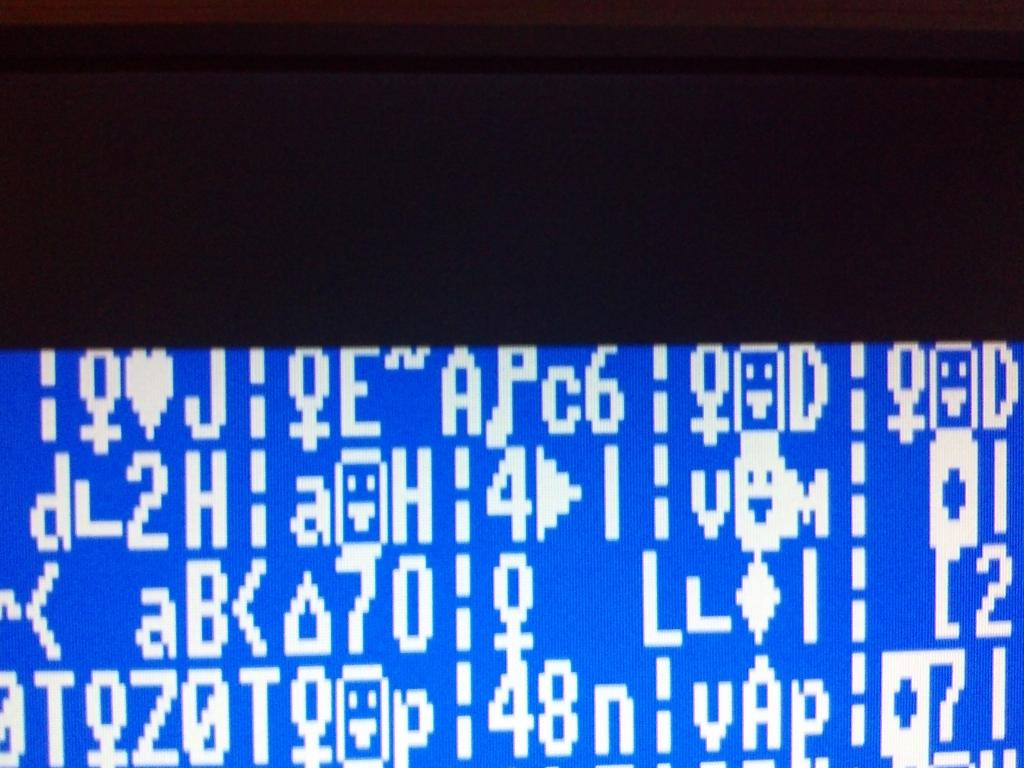

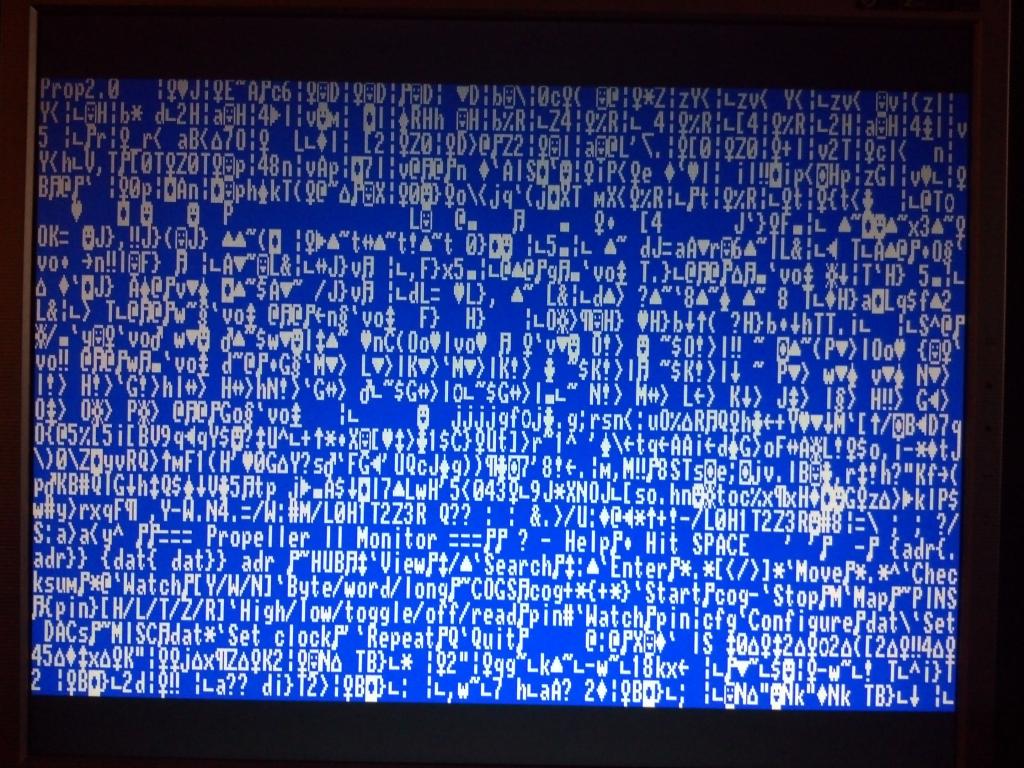

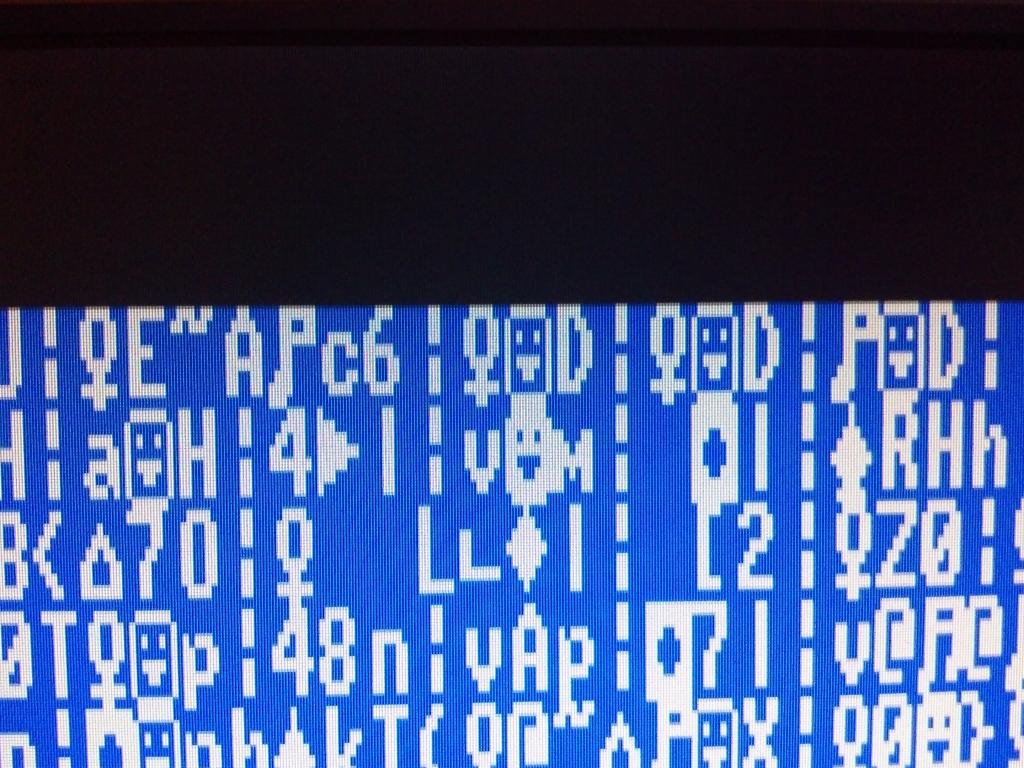

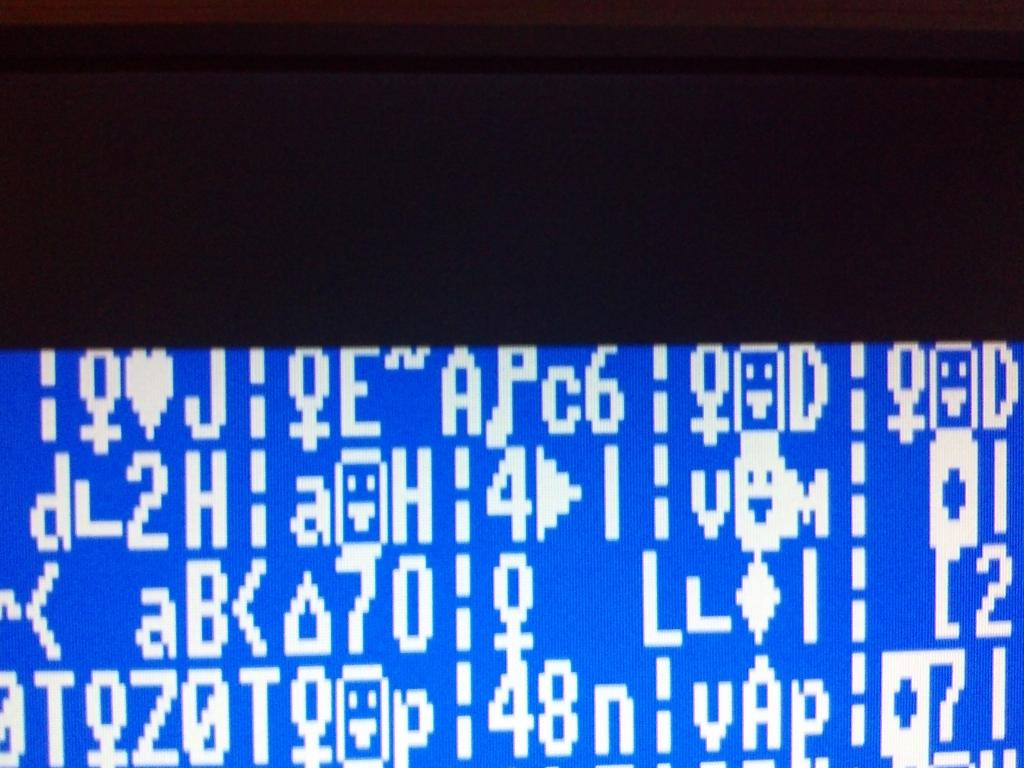

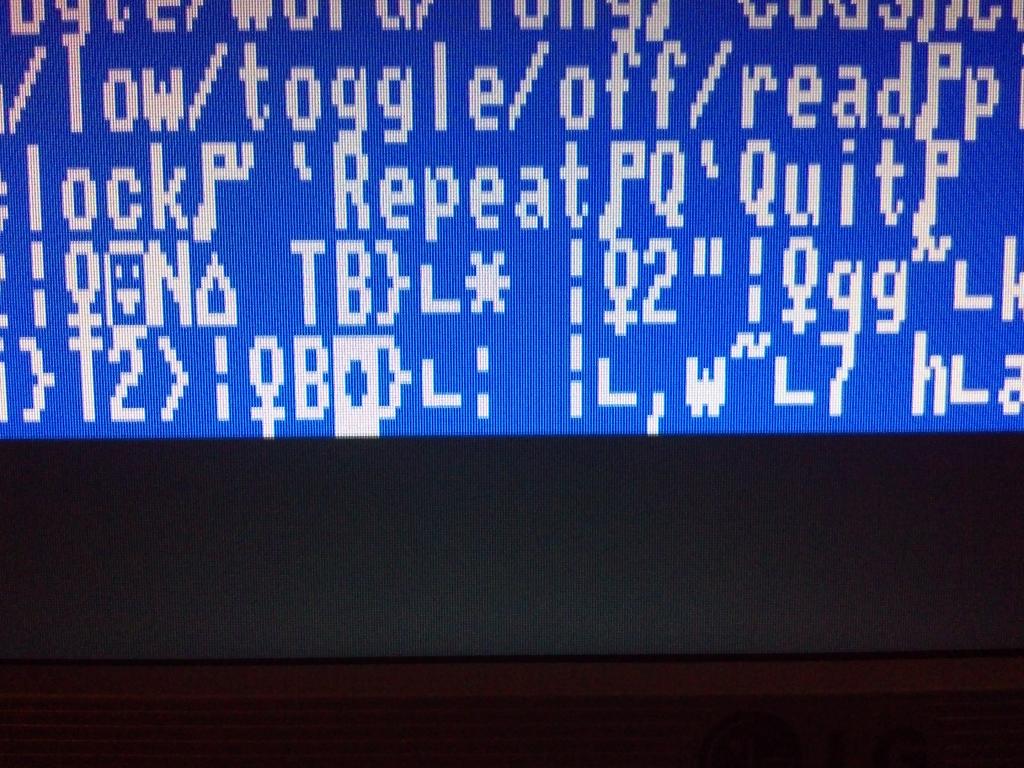

Here you can see pictures of the error and the "fix". I also notice that VID outputs a longer last scanline.

Is this perhaps an artifact of the double buffering?

Comments

```
field   mov     x,#34           'top blanks
        call    #blank
        mov     x,#192          'set visible lines
        setptra bitmap          'Get ready to scan pixels
        setptrb bitmap
line    call    #hsync          'do horizontal sync
        waitvid v_bo,#0         'black left border --------------->tempting to put buffer fill here, but don't do it :)
        waitvid mode,#0         '256*9 clocks per waitvid - show luma/colorbars
        call    #Fill_Clut      'Double buffered waitvid means get pixels now
        waitvid v_bo,#0         'black right border
        djnz    x,#line         'Do all the lines
        mov     x,#28           'bottom blanks
        call    #blank
synch   mov     x,#6            'high vertical syncs
        call    #vsynch
        mov     x,#6            'low vertical syncs
        call    #vsyncl
        mov     x,#6            'high vertical syncs
        call    #vsynch
        jmp     #field          'field loop
```

This whole thing smells of a race condition. Putting data in the buffer before calling WAITVID shouldn't be a problem; in fact, that should give the correct result. Filling the buffer after calling WAITVID shouldn't work, because the CLUT isn't dual-ported (the XFR docs say so). That means the CLUT has to be read while the WAITVID instruction executes, so the buffer contents need to be there before the first call.

The only explanation is that WAITVID needs 1 dummy call to clear some internal state or to prime the VID pipeline.

WAITVID is the gateway that pumps commands into the video circuit. If the time between WAITVID calls is too long, the video circuit will pull garbage from the buffer and act on it. What he said is that if your routine to fill the CLUT buffer takes too long, the video circuit will behave unpredictably, so your code has to stay ahead of the video circuit.

Adding the dummy sync/WAITVID satisfied the video circuit's need for data. Since the CLUT was empty at that point, it ends up as an empty line, but it keeps the video circuit primed with commands while the rendering routine runs. My rendering routine is around 270 instructions unrolled, with a cached readbyte, probably taking around 275 clocks to execute.

Shortening the execution time of the routine may help, but the problem seems to reside between the top blank and first waitvid instruction.

I tried priming the buffer at the top, before the top blank, and this worked to a degree: the top line was slightly larger and the last line was truncated.

Chip is working on a truecolor demo and said he was running into these timing issues too, but much more severe because of the pixel bandwidth and SDRAM.

That is going to be one boundary on a COG's performance. We could always use another COG. It also suggests using the lower sweep frequencies where warranted. It seems to me that using multiple WAITVIDs per line might help too, depending...

Sadly, I've not had time to push the edge on things just yet.

I know that's the case with the Prop I, but my understanding was that, in the Prop II, the waitvid data would be buffered in its own registers, from which repeat data would be reloaded absent an intervening waitvid. This would, at least, provide predictable behavior in cases where it's not entirely necessary to reload the buffers with new data. Is this a deliberate change from your previously stated plans?

-Phil

Maybe I did make it so that it repeats the last instruction if it runs dry. I don't remember. I'll need to check the Verilog code. The commands ARE double-buffered, anyway.

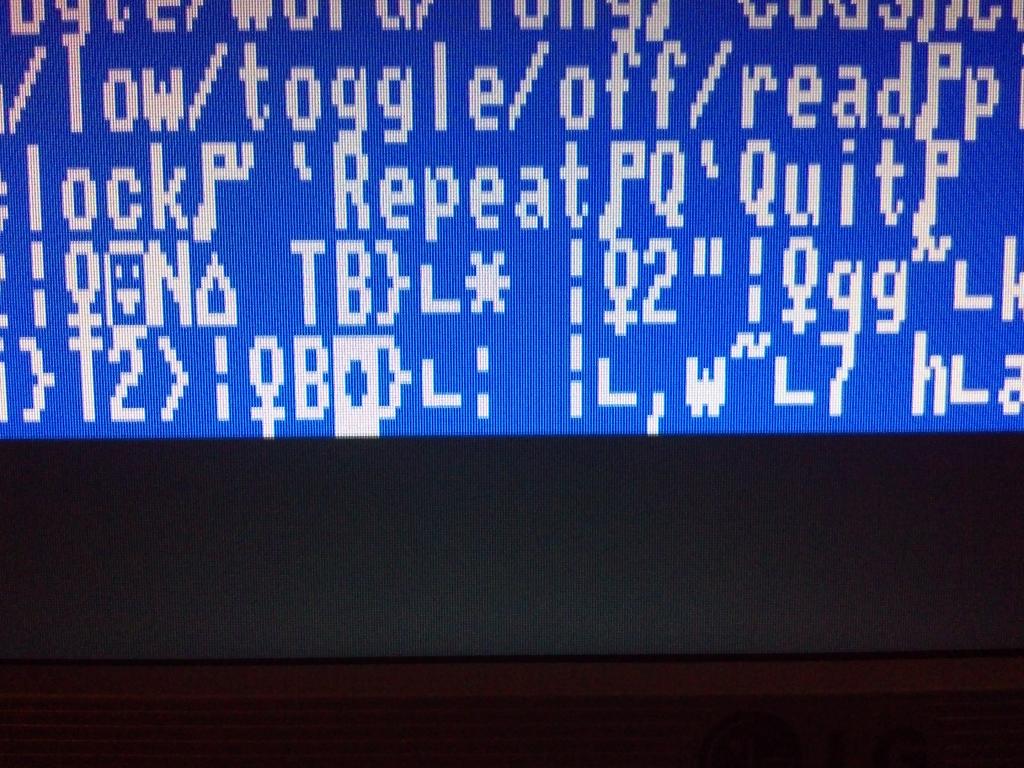

I'm trying to do color per tile in the TTY driver, but clearly something ain't right.

If I set the scanline length to 640 pixels, WAITVID is golden.

320 pixels works fine too.

160 pixels ditto.

80 pixels and I start getting vertical tearing in the 7th WAITVID.

I cannot get 64 pixels to work at all.

I tried changing to 5 WAITVIDs of 128 pixels, 4 clocks per pixel, and I'm getting vertical tearing in the 8th pixel of the 4th WAITVID. Because I'm using an LCD, the sync problem shows up as vertical tearing.

A snippet of the code goes like this:

Is this an artifact of the FPGA not being able to keep WAITVID fed? This VID obviously works very differently from the P1's, because this is standard practice on the P1.

%PPPPPPP = number minus 1 of dot clocks per pixel (0..127 --> 1..128)

%CCCCCCCCCCCCC = number minus 1 of dot clocks in WAITVID (0..8191 --> 1..8192)

In reality, they are base 1: a value of 1 means 1 dot clock per pixel, 2 means 2, and so on. The same is true for %C.

The demos you (Chip) wrote confirm this in their example values.
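To make the disagreement concrete, here is a small Python sketch of the two readings of a 7-bit %P field and a 13-bit %C field. It only computes field values: the bit positions inside the WAITVID operand aren't given here, so nothing is packed, and `encode_field`/`decode_field` are hypothetical helper names.

```python
def encode_field(clocks: int, bits: int, minus_one: bool) -> int:
    """Raw field value that asks for `clocks` dot clocks.

    minus_one=True  -> documented rule: raw = clocks - 1 (raw 0 means 1 clock)
    minus_one=False -> base-1 reading reported in this thread: raw = clocks
    """
    if clocks < 1:
        raise ValueError("a field must describe at least 1 dot clock")
    raw = clocks - 1 if minus_one else clocks
    if raw >= 1 << bits:
        raise ValueError(f"{clocks} clocks do not fit in a {bits}-bit field")
    return raw


def decode_field(raw: int, minus_one: bool) -> int:
    """Dot clocks that a raw field value produces under each reading."""
    return raw + 1 if minus_one else raw


P_BITS, C_BITS = 7, 13  # widths of %PPPPPPP and %CCCCCCCCCCCCC

if __name__ == "__main__":
    # Documented ranges: 1..128 clocks per pixel, 1..8192 clocks per WAITVID.
    print(encode_field(128, P_BITS, True), encode_field(8192, C_BITS, True))
    # A value computed with the minus-one rule, read back by base-1 hardware.
    raw = encode_field(4, P_BITS, minus_one=True)
    print(raw, decode_field(raw, minus_one=False))
```

Under these assumptions, a value written against the minus-one rule (raw 3 for 4 clocks per pixel) would come out as 3 clocks per pixel on base-1 hardware, one clock short of what was intended.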

So that's a picture that has led me to question a few conclusions about how the new VID circuit works.

What you see is a 1-pixel line at 1 clock per pixel, followed by the rest of the frame at 4 clocks per pixel.

After much tinkering and thinking, I believe what is going on is that WAITVID actually executes commands 1 issue later.

Why this matters has to do with the CLUT.

If I issue a command to display a line at 1 pixel per clock, VID doesn't actually read the CLUT and execute that command when I call WAITVID; it waits until the next call to execute it. So the contents of the CLUT, while timely, are out of sync with the VID command.

In effect, I have to update the CLUT after the call to WAITVID; the data is then actually latched out when the COG issues the next WAITVID. In essence, the WAITVID circuit is clocked by the COG issuing commands: the first issue doesn't result in any action, so you have to stuff another command into the queue to get it to execute.

Unfortunately, there aren't any NOP commands for WAITVID that I'm aware of, at least none that generate a sync point where the COG code and WAITVID are aligned in a meaningful way.

Potatohead's code shows this: he figured out that Fill_CLUT needed to go after the WAITVID. That works because the next iteration through the loop causes the data to latch, which is also why putting Fill_CLUT above the command didn't work.

I looked at the Verilog code and it DOES repeat the last WAITVID instruction if you don't provide a new one before it runs out.

Because commands are double-buffered, you can issue one that calls on the stack RAM for colors or pixels, and then fill the data into the stack RAM before the command executes. Your only timing issue is whether or not you can get the stack RAM filled before VID starts to use it, and that has everything to do with the duration of the preceding command.

Also, what could be the cause of the problem with short WAITVID calls? I tried doing render-display-render-display, but didn't have enough bandwidth to keep up, so I rendered once and then broke the display up into different calls, so I could supply a different color per call.

Ultimately, I can call WAITVID with a length of 16 (8 doublescanned) pixels, shift the bit offset in the buffer I'm displaying, and get per-character color. However, if there is some problem with issuing short WAITVID calls back to back, then that won't work.

That limitation really makes for a problem.
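A rough per-WAITVID budget for this approach, as a Python sketch. It assumes a 640-pixel line at 4 dot clocks per pixel (the figures from the 5 × 128 experiment), counts COG and dot clocks in the same unit, and assumes that in the steady state each loop iteration, including its own WAITVID, must fit in the duration of one command. `waitvid_budget` is a hypothetical helper.

```python
def waitvid_budget(line_pixels: int, pixels_per_waitvid: int,
                   clocks_per_pixel: int) -> tuple[int, int]:
    """Return (WAITVIDs per line, clocks each WAITVID command lasts).

    Assumption: the second value is also the most time one loop iteration
    can take on average if the COG is to keep VID fed.
    """
    if line_pixels % pixels_per_waitvid:
        raise ValueError("the line must split into whole WAITVIDs")
    return line_pixels // pixels_per_waitvid, pixels_per_waitvid * clocks_per_pixel


if __name__ == "__main__":
    for ppw in (128, 64, 16):
        count, clocks = waitvid_budget(640, ppw, 4)
        print(f"{ppw:>3} px/WAITVID: {count:>2} per line, {clocks:>3} clocks each")
```

Going from 128 to 16 pixels per WAITVID raises the count from 5 to 40 per line and shrinks the time available per iteration from 512 to 64 clocks under these assumptions.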

VID has its own port to the stack RAM, so it never needs to wait.

All your frustrations have to do with timing issues.

Consider this:

WAITVID 20-clock command

WAITVID 100-clock command uses stack RAM

When you execute the 1st WAITVID instruction, its VID command gets held while VID executes the prior WAITVID's command. At the time the 2nd WAITVID is accepted/executes, the 1st WAITVID's command has begun executing in VID. At this time, you have 20 video clocks to start stuffing the stack RAM before the 2nd WAITVID's command starts accessing the stack RAM. You have until just before that 100-clock command is done to be waiting in the next WAITVID.
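The timing described here can be checked with a toy Python model: one command executing, one held, and the last command repeated when the COG is late (as stated above for the Verilog). Everything else is an assumption: the names `simulate` and `Slot`, a constant COG gap per loop iteration, and COG and video clocks counted in the same unit. It is a sketch of the described behavior, not of the actual RTL.

```python
from dataclasses import dataclass


@dataclass
class Slot:
    index: int           # which WAITVID (in issue order) supplied the command
    accept: int          # clock at which that WAITVID returned to the COG
    start: int           # clock at which VID began executing the command
    repeats_before: int  # times VID re-ran the previous command while starved


def simulate(lengths: list[int], cog_gap: int) -> list[Slot]:
    """Toy model of double-buffered WAITVID (one command executing, one held).

    Assumptions (a simplification of this thread, not the Verilog):
      * a WAITVID returns once the hold slot is free, i.e. when the
        previous command begins executing;
      * if nothing is held when a command ends, VID repeats that command;
      * after each WAITVID returns, the COG spends `cog_gap` clocks
        (fills, rendering, loop overhead) before issuing the next one;
      * COG clocks and video clocks are counted in the same unit.
    """
    slots: list[Slot] = []
    for k in range(len(lengths)):
        if k == 0:
            # VID starts idle, so the first command begins immediately.
            slots.append(Slot(0, 0, 0, 0))
            continue
        prev, prev_len = slots[-1], lengths[k - 1]
        issue = prev.accept + cog_gap
        accept = max(issue, prev.start)
        # Runs of the previous command needed to reach a boundary at or after
        # `accept` (arriving exactly on a boundary counts as in time).
        runs = max(1, (accept - prev.start + prev_len - 1) // prev_len)
        slots.append(Slot(k, accept, prev.start + runs * prev_len, runs - 1))
    return slots


if __name__ == "__main__":
    # Chip's example: a 20-clock command, then a 100-clock one using stack RAM.
    _, second = simulate([20, 100], cog_gap=0)
    print("stack RAM fill window:", second.start - second.accept)
    # Short 64-clock commands with two different COG gaps per iteration.
    for gap in (50, 80):
        starved = sum(s.repeats_before for s in simulate([64] * 10, gap))
        print(f"gap {gap}: {starved} repeated commands")
```

With the 20/100 example the model gives the same 20-clock fill window. With 64-clock commands, a 50-clock gap never starves VID, while an 80-clock gap makes it re-run a command every few slots. In this model the hold slot can hide one slow iteration, but not a gap that stays longer than the command itself.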

I'm not sure what you are asking. I think you need an epiphany to realize it's all simpler than you are supposing. When it snaps to grid, you'll know exactly what you can do.