More NOOB questions, reader beware

My past n attempts at building a cache driver for the Touchburger have failed, and I'm starting to pull my hair out. The read and write commands are taken directly from working code. I must be missing something!

The latest attempt: I downloaded a fresh copy of the skeleton JCACHE external RAM driver from Google Code and renamed it touch_cache.spin in a new folder. The cache test is reused, although I doubt that's the problem. I have code for CACHE SIZE = 8192 and 4096.

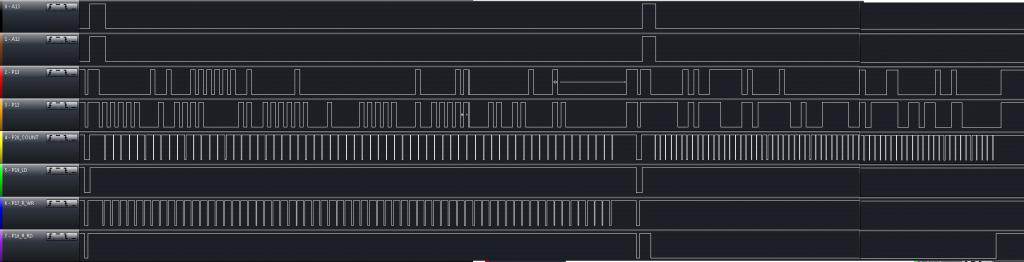

The problem seems to be that address bit 13 is aliasing. I think it's correct; I might reconnect the logic analyzer (LA) and grab a few screenshots if that would help. I remember having a problem like this before, and I can't remember what it was!

Here's the full cache.spin, hopefully you guys can help before I ragequit again!


{

Skeleton JCACHE external RAM driver

Copyright (c) 2011 by David Betz

Based on code by Steve Denson (jazzed)

Copyright (c) 2010 by John Steven Denson

Inspired by VMCOG - virtual memory server for the Propeller

Copyright (c) February 3, 2010 by William Henning

For the TouchBurger 3 Board - DateCode AUG2012 By James Moxham and Joe Heinz

Basic port by Joe Heinz, Optimizations influenced by Steve Denson *huge thanks for V1

Honorable mention to David Betz for his patience during V1

Copyright (c) 2012 by John Steven Denson and Joe Heinz

TERMS OF USE: MIT License

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

in the Software without restriction, including without limitation the rights

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

copies of the Software, and to permit persons to whom the Software is

furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in

all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE,ARISING FROM,

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

THE SOFTWARE.

}

CON

' default cache dimensions

DEFAULT_INDEX_WIDTH = 6

DEFAULT_OFFSET_WIDTH = 7

' cache line tag flags

EMPTY_BIT = 30

DIRTY_BIT = 31

PUB image

return @init_vm

DAT

org $0

' initialization structure offsets

' $0: pointer to a two word mailbox

' $4: pointer to where to store the cache lines in hub ram

' $8: number of bits in the cache line index if non-zero (default is DEFAULT_INDEX_WIDTH)

' $a: number of bits in the cache line offset if non-zero (default is DEFAULT_OFFSET_WIDTH)

' note that $4 must be at least 2^(index_width+offset_width) bytes in size

' the cache line mask is returned in $0

init_vm mov t1, par ' get the address of the initialization structure

rdlong pvmcmd, t1 ' pvmcmd is a pointer to the virtual address and read/write bit

mov pvmaddr, pvmcmd ' pvmaddr is a pointer into the cache line on return

add pvmaddr, #4

add t1, #4

rdlong cacheptr, t1 ' cacheptr is the base address in hub ram of the cache

add t1, #4

rdlong t2, t1 wz

if_nz mov index_width, t2 ' override the index_width default value

add t1, #4

rdlong t2, t1 wz

if_nz mov offset_width, t2 ' override the offset_width default value

mov index_count, #1

shl index_count, index_width

mov index_mask, index_count

sub index_mask, #1

mov line_size, #1

shl line_size, offset_width

mov t1, line_size

sub t1, #1

wrlong t1, par

' put external memory initialization here

shr line_size,#1 ' from V1 > offset for byte to word conversion *suggested by JSD

or outa,maskP22 ' pin 22 high - LATCH OE - disable

or dira,maskP22 ' and now set as an output

mov dirb, #$FF ' latch all high - done uses dirb to set latch-

call #done ' and set latch, release all pins- EXCEPT P22 for latch OE

jmp #vmflush

fillme long 0[128-fillme] ' first 128 cog locations are used for a direct mapped cache table

fit 128

' initialize the cache lines

vmflush movd :flush, #0

mov t1, index_count

:flush mov 0-0, empty_mask

add :flush, dstinc

djnz t1, #:flush

' start the command loop

waitcmd wrlong zero, pvmcmd

:wait rdlong vmline, pvmcmd wz

if_z jmp #:wait

shr vmline, offset_width wc ' carry is now one for read and zero for write

mov set_dirty_bit, #0 ' make mask to set dirty bit on writes

muxnc set_dirty_bit, dirty_mask

mov line, vmline ' get the cache line index

and line, index_mask

mov hubaddr, line

shl hubaddr, offset_width

add hubaddr, cacheptr ' get the address of the cache line

wrlong hubaddr, pvmaddr ' return the address of the cache line

movs :ld, line

movd :st, line

:ld mov vmcurrent, 0-0 ' get the cache line tag

and vmcurrent, tag_mask

cmp vmcurrent, vmline wz ' z set means there was a cache hit

if_nz call #miss ' handle a cache miss

:st or 0-0, set_dirty_bit ' set the dirty bit on writes

jmp #waitcmd ' wait for a new command

' line is the cache line index

' vmcurrent is current cache line

' vmline is new cache line

' hubaddr is the address of the cache line

miss movd :test, line

movd :st, line

:test test 0-0, dirty_mask wz

if_z jmp #:rd ' current cache line is clean, just read new one

mov vmaddr, vmcurrent

shl vmaddr, offset_width

call #wr_cache_line ' write current cache line

:rd mov vmaddr, vmline

shl vmaddr, offset_width

call #rd_cache_line ' read new cache line

:st mov 0-0, vmline

miss_ret ret

' pointers to mailbox entries

pvmcmd long 0 ' on call this is the virtual address and read/write bit

pvmaddr long 0 ' on return this is the address of the cache line containing the virtual address

cacheptr long 0 ' address in hub ram where cache lines are stored

vmline long 0 ' cache line containing the virtual address

vmcurrent long 0 ' current selected cache line (same as vmline on a cache hit)

line long 0 ' current cache line index

set_dirty_bit long 0 ' DIRTY_BIT set on writes, clear on reads

zero long 0 ' zero constant

dstinc long 1<<9 ' increment for the destination field of an instruction

t1 long 0 ' temporary variable

t2 long 0 ' temporary variable

tag_mask long !(1<<DIRTY_BIT) ' includes EMPTY_BIT

index_width long DEFAULT_INDEX_WIDTH

index_mask long 0

index_count long 0

offset_width long DEFAULT_OFFSET_WIDTH

line_size long 0 ' line size in bytes

empty_mask long (1<<EMPTY_BIT)

dirty_mask long (1<<DIRTY_BIT)

' input parameters to rd_cache_line and wr_cache_line

vmaddr long 0 ' external address

hubaddr long 0 ' hub memory address

'------------------------------------------------ SRAM Address Setup -----------------------------------------------------------------

'' looks like address bit 13 not correct? doubt it's hardware...

set161and373 'mov ramaddr, vmaddr ' copy ram address

shr vmaddr, #1 ' from old build 1 > '' schematic connects SRAM A0 to A0, not A1 - jsd

or vmaddr, maxram ' mask off unused ram address bits

'' setup pointer for hub and count

mov ptr, hubaddr ' cant trash hubaddr

mov len, line_size ' or line_size

mov dirb, latchvalue ' save old latch value for restore at end of op.

''

'' do locking here!

''

or outa,maskP16P20 ' set control pins high

or dira,maskP16P20 ' set control pins P16-P20 as outputs

mov latchvalue,#%11111110 ' group 1, displays all off

call #set373 ' send out to the latch

and outa,maskP0P20low ' prepare data pins for address

''extended addressing, only for stacked SRAM - comment out and uncomment below for standard config

or dira,maskP0P20P29 ' %00100000_11100000_00000000_00000000 ' make all address pins out

cmp vmaddr,maskP19 wc ' check if we have extended address ' and do extended addressing

muxnc outa, maskP29 ' and !mux onto p29

andn vmaddr,maskP0P18low ' mask off the low 19 bits

'' end extended addressing, only for stacked SRAM

'or dira,maskP0P20 ' use this for standard ram config

or outa,vmaddr ' send out ramaddr

andn outa,maskP20 ' P20 clock low

or outa,maskP20 ' P20 clock high

or outa,maskP16P20 ' P16-P20 high

andn dira,maskP29 ' %1101_1111_1111_1111_1111_1111_1111_1111 ' release P29, xmm only, else comment out

mov latchvalue,#%11111101 ' group 2

call #set373 ' change to group 2

set161and373_ret ret '' returns @ INS window

'------------------------------------------------ Latch Control -----------------------------------------------------------------

set373 or outa,maskP22 ' pin 22 high

or dira,#%1_11111111 ' enable pins 0-7 and 8 as outputs

and outa,maskP0P8low ' P0-P7 low

or outa,latchvalue ' send out the data

or outa,maskP8 ' P8 high, clocks out data

andn outa,maskP22 ' pin 22 low

set373_ret ret '' returns @ INS window

done mov latchvalue, dirb ' restore old value

call #set373 ' Set latch to value prior to cog operation

and dira,maskP0P20low ' tristates all the common pins, leaves P22 as is though

'' ''

'' do un-locking here!

''

done_ret ret

'----------------------------------------------------------------------------------------------------

'

' rd_cache_line - read a cache line from external memory

'

' vmaddr is the external memory address to read

' hubaddr is the hub memory address to write

' line_size is the number of 16-bit words to read (the init code halves the byte count)

'

'----------------------------------------------------------------------------------------------------

rd_cache_line

pasmramtohub call #set161and373 ' set up the 161 counter and change to group 2

andn dira,maskP0P15 ' data bus inputs

andn outa,maskP16 ' memory /rd low

nop ' first read sometimes corrupt?

'

ramtohub_loop mov data_16, ina ' get the data 3

wrword data_16, ptr ' move data to hub 1-2

andn outa, maskP20 ' clock 161 low 3

or outa, maskP20 ' clock 161 high 4

add ptr, #2 ' increment the hub address 1

djnz len,#ramtohub_loop ' 2

or outa,maskP16 ' memory /rd high

call #done ' tristate pins

rd_cache_line_ret ret

'----------------------------------------------------------------------------------------------------

'

' wr_cache_line - write a cache line to external memory

'

' vmaddr is the external memory address to write

' hubaddr is the hub memory address to read

' line_size is the number of 16-bit words to write (the init code halves the byte count)

'

'----------------------------------------------------------------------------------------------------

wr_cache_line

pasmhubtoram call #set161and373 ' set up the 161 counter and then change to group 2

or dira,maskP0P15 ' data bus outputs

hubtoram_loop andn outa,maskP0P15 ' clear P0 to P15 for output 2

rdword data_16,ptr ' get the word from hub 1-2

or outa,data_16 ' send out the byte to P0-P15 3

andn outa,maskP17 ' set mem write low 4

add ptr, #2 ' increment by 2 bytes = 1 word. Put this here for small delay while writes 1

or outa,maskP17 ' mem write high 2

andn outa,maskP20 ' clock 161 low 3

or outa,maskP20 ' clock 161 high 4

djnz len,#hubtoram_loop ' loop this many times 1

call #done ' tristate pins and listen for command

wr_cache_line_ret ret

' constants

maskP0P18low long %11111111_11111000_00000000_00000000 ' P0-P18 low

maskP16 long %00000000_00000001_00000000_00000000 ' pin 16 - SRAM_RD

maskP17 long %00000000_00000010_00000000_00000000 ' pin 17 - SRAM_WR

maskP19 long %00000000_00001000_00000000_00000000 ' pin 19 - LOAD - Group1

maskP20 long %00000000_00010000_00000000_00000000 ' pin 20 - Clock - Group1-Group2

maskP22 long %00000000_01000000_00000000_00000000 ' pin 22 - Latch OE - GroupPin

maskP0P15 long %00000000_00000000_11111111_11111111 ' for masking words

maskP16P20 long %00000000_00011111_00000000_00000000 ' control pins

maskP0P20low long %11111111_11100000_00000000_00000000 ' for returning all group pins HiZ

maskP0P8low long %11111111_11111111_11111110_00000000 ' P0-P8 low for set 373

maskP8 long %00000000_00000000_00000001_00000000 ' pin 8 for set 373

maskP0P20P29low long %11011111_11100000_00000000_00000000 ' xmm

maskP29 long %00100000_00000000_00000000_00000000 ' xmm

maskP0P20P29 long %00100000_00011111_11111111_11111111 ' xmm

maxram long %00000000_00001111_11111111_11111111 '7_ff_ff - f_ff_ff

latchvalue res ' current 373 value

data_16 res ' general purpose value

'ramaddr res ' copy of vmaddr, not used

ptr res ' pointer to hub

len res ' copy of line_size for decimation

fit 496

The symptoms: all tests fail miserably. The walking address bit tests always show aliasing on A13. Other tests flat out fail to complete the write (???). I did change this code to NOT use ramaddr and to use vmaddr directly. The first version of the driver shifted vmaddr right on every set161 call; now we do a little more, but it should be okay? I know from previous experience that hubaddr and line_size can't be trashed.

If I put these commands back in the PASM engine I'm using, things work perfectly, so I'm stumped!

    Test 0- Address Walking 0's 15 address bits: ERROR! Expected 0 @ 00007ffc after write to address 00005ffc 00002000
    Test 1- Address Walking 1's 15 address bits: ERROR! Expected 0 @ 0 after write to address 00002000 00002000
    Test 2- Incremental Pattern Test 32 KB :ERROR at $00000000 Expected $00000001 Received $00001801
    Test 3- Pseudo-Random Pattern Test 524 KB : ERROR at $00000000 Expected $d0000001 Received $bb67aaab
    Test 4 -Pseudo-Random Pattern Test 32 KB :ERROR at $00000000 Expected $00000e80 Received $a0800e66
    Address Walking 0's 18 address bits :ERROR! Expected 0 @ 0003fffc after write to address 0003dffc 00002000
    Address Walking 1's 18 address bits. : ERROR! Expected 0 @ 0 after write to address 00002000 00002

Comments

*edit*

Looks like that was it! I'm testing full memory area now!

Thank you SO MUCH for taking a look, I guess I was starting to get tunnel vision.

It looks like that resolved my issue. Next step: build the cache driver and see if it runs programs. It looks like I hit issues trying to use the full 1 megaword, though: it passes the first 2 tests, then fails the rest.

*edit* Okay, now I'm having the problems that got me last time... I can load some programs in SimpleIDE and they run just fine in XMMC and XMM (*hello.c*), but Dhrystone won't run now!

The other question I've been trying to figure out: when running the walking address bit test, for example, it says it's testing 18 address bits (*524k*), but with the right shift of line_size by one and the right shift of vmaddr, is that actually 17 bits? I should probably get my logic analyzer out and check.

thanks again @kuroneko, I looked at that stupid OR inst for over an hour and it didn't click!

I have a feeling it's something simple. I'll try to pull out the LA over the next few days and see if I can see anything obvious. It should benchmark quite well since read/write loops are 2 hub cycles!

Thanks again for all your help!

*edit*

Here's a full list of things that work:

Cache test, mostly. Throws errors when memory size increased, else okay.

FIBO: seems to run fine

Hello : seems okay

Dhrystone: loader opens terminal and nothing happens.

xmm-single and xmmc were tested on all programs, and it doesn't seem to make a difference. If there are other programs I should check that could help pinpoint this, I will. I know this version of dry.c is good because I ran it on the previous version Steve helped with.

Line 130 of the dry.c file:

I have 19 address bits, and these test okay. My extended memory hack has 20 and works in the cog driver, but not in the cache test? I have a feeling that with both the shifts, it works out one bit higher than the test results say? So when I run walking 0s with 18 address bits, it's running from A0-A18, which is really 19 address bits, and when I run incremental 1024 KB it's really 1024 kWord??

    C:\Users\Joe\Desktop\Touchburger V1\Programs>bstc -Ograux -c touch_cache.spin
    Brads Spin Tool Compiler v0.15.3 - Copyright 2008,2009 All rights reserved
    Compiled for i386 Win32 at 08:17:48 on 2009/07/20
    Loading Object touch_cache
    Program size is 1052 longs
    Compiled 176 Lines of Code in 0.008 Seconds

    C:\Users\Joe\Desktop\New folder (4)\dry>propeller-load -r -t -b touchburger dry_ xmm_single.elf
    Propeller Version 1 on COM38
    Loading the serial helper to hub memory
    9528 bytes sent
    Verifying RAM ... OK
    Loading cache driver 'touch_cache.dat'
    1028 bytes sent
    Loading program image to RAM
    17408 bytes sent
    Loading .xmmkernel
    1724 bytes sent
    [ Entering terminal mode. Type ESC or Control-C to exit. ]
    Dhrystone Benchmark, Version C, Version 2.2
    Program compiled without 'register' attribute
    Using STDC clock(), HZ=80000000
    Trying 5000 runs through Dhrystone:
    Final values of the variables used in the benchmark:
    Int_Glob: 5 should be: 5
    Bool_Glob: 1 should be: 1
    Ch_1_Glob: A should be: A
    Ch_2_Glob: B should be: B
    Arr_1_Glob[8]: 7 should be: 7
    Arr_2_Glob[8][7]: 5010 should be: Number_Of_Runs + 10
    Ptr_Glob->
      Ptr_Comp: 536899232 should be: (implementation-dependent)
      Discr: 0 should be: 0
      Enum_Comp: 2 should be: 2
      Int_Comp: 17 should be: 17
      Str_Comp: DHRYSTONE PROGRAM, SOME STRING should be: DHRYSTONE PROGRAM, SOME STRING
    Next_Ptr_Glob->
      Ptr_Comp: 536899232 should be: (implementation-dependent), same as above
      Discr: 0 should be: 0
      Enum_Comp: 1 should be: 1
      Int_Comp: 18 should be: 18
      Str_Comp: DHRYSTONE PROGRAM, SOME STRING should be: DHRYSTONE PROGRAM, SOME STRING
    Int_1_Loc: 5 should be: 5
    Int_2_Loc: 13 should be: 13
    Int_3_Loc: 7 should be: 7
    Enum_Loc: 1 should be: 1
    Str_1_Loc: DHRYSTONE PROGRAM, 1'ST STRING should be: DHRYSTONE PROGRAM, 1'ST STRING
    Str_2_Loc: DHRYSTONE PROGRAM, 2'ND STRING should be: DHRYSTONE PROGRAM, 2'ND STRING
    Microseconds for one run through Dhrystone: 1401
    Dhrystones per Second: 713

So I have one version compiled.

I'm going to import a few optimizations from cogdriver *using safe drivers, not fast drivers*

last result:

and with stacked ramchip config *got it working*

I'm getting excited because with the fast version I only need to toggle 1 pin, not 2. So read and write could fit in 1 hub window using the counters! I'm thinking this could be a VERY fast cache.

Preliminary results!

Already beats SDRAM! I'm going to try again and compile for "standard" hardware, which should be a titch faster! Strange how xmmc and xmm-single differ slightly as to which gives the faster Dhrystone; the fibo results are the same, though. I'd really like to push this to use faster writes. I think it would work; I just need to toggle 2 pins at the same time with counters....

The fibo function is so small it probably fits in the cache so it doesn't end up accessing external memory much and it doesn't use any global variables.

I really think Dhrystone should be compiled with -Os. The quote Steve provided said that optimizing compilers should be prevented from removing significant statements. That's not at all the same as saying optimization should be turned off, and in fact if you look on the web I believe most Dhrystone benchmarks are quoted with optimization turned on. Leaving it off might give people an inaccurate picture of Propeller performance.

But I guess this is drifting off topic...

Eric

    '' ************ RAM cog driver ***************
    PUB start : err_
    ' Initialise the Drac Ram driver. No actual changes to ram as the read/write routines handle this
      command := "I"
      cog := 1 + cognew(@tbp2_start, @command)
      if cog == 0
        err_ := $FF ' error = no cog
      else
        repeat while command ' driver cog sets =0 when done
        err_ := errx ' driver cog sets =0 if no error, else xx = error code

    PUB stop
      if cog
        cogstop(cog~ - 1)

    PUB CogCmd(command_, hub_address, ram_address, block_length) : err_| a,b
    ' Do the command: A-Z (I is reserved for Initialise)
      a := dira ' store the state of these and restore at the end
      DIRA &= %11001111_10100000_00000000_00000000 ' tristate all common pins that cog can change so no conflicts
      hubaddrs := hub_address ' hub address start
      ramaddrs := ram_address ' ram address start
      blocklen := block_length ' block length
      command := command_ ' must be last !!
    ' Wait for command to complete and get status
      repeat while command ' driver cog sets =0 when done
      err_ := errx ' driver cog sets =0 if no error, else xx = error code
      dira := a ' restore dira and outa

    CON
    '' Modified code from Cluso's triblade
    '' commands to move blocks of data to the touchscreen display
    ' DoCmd(command_, hub_address, ram_address, block_length)
    ' B - logic OR hubaddrs with latchvalue - logic AND ramaddrs with latchvalue
    ' C - convert 3 byte .bmp format BGR to 2 byte ili format and store to ram
    ' E - convert from .raw RGB to two byte ILI format RRRRRGGG_GGG_BBBBB
    ' D - Draw_ILI9325
    ' G - Draw_SSD1289
    ' H - Draw_SSD1963
    ' S - Move data from hub to ram
    ' T - Move data from ram to hub
    ' U - Move data from ram to display
    ' V - Hub to display
    ' X - merge icon and background based on a mask

    VAR
    ' communication params(5) between cog driver code - only "command" and "errx" are modified by the driver
      long command, hubaddrs, ramaddrs, blocklen, errx, cog ' rendezvous between spin and assembly (can be used cog to cog)
    ' command = A to Z etc =0 when operation completed by cog
    ' hubaddrs = hub address for data buffer
    ' ramaddrs = ram address for data
    ' blocklen = ram buffer length for data transfer
    ' errx = returns =0 (false=good), else <>0 (true & error code)
    ' cog = cog no of driver (set by spin start routine

    DAT
    '' +-----------------------------------------------------------------------------------------------+
    '' | Touchblade 161 Ram Driver (with grateful acknowlegements to Cluso and Average Joe) |
    '' +-----------------------------------------------------------------------------------------------+
    'PASM DRIVER

And the PASM driver continues. Then, the Touch.h looks something like this:

    #ifndef __TOUCH_H__
    #define __TOUCH_H__

    typedef struct tchstruct {
        long command_; ///' rendezvous between spin and assembly (can be used cog to cog)
        long hub_address;
        long ram_address;
        long block_length;
        long err_;
    } Tchst;

    /**
     * Start touchscreen driver - starts a cog
     *
     * @param cmdptr = Address to start of structure
     *
     * Driver returns 0 if no error, else xx == error code
     *
     */
    int touch_start(unsigned int *cmdptr)
    {
        extern unsigned int *binary_pasm_dat_start[];
        return cognew(&binary_pasm_dat_start, cmdptr) + 1;
    }

    /**
     * Stop touchscreen driver - frees a cog
     */
    void touch_stop(void);

Then, there's the Touch.c file that looks something like this:

    #include <propeller.h>
    #include "touch.h"

    /**
     * Structs here
     */
    Tchst cmdptr ;

    /**
     * Main program function.
     */
    int main(void)
    {
        int touch_start(unsigned int *cmdptr);
        return -1 ;
    }

Now, I'm wondering if I'm on the right path, or there's some obvious glaring errors?

Last I heard Demoniator(TED) was AFK, so if anyone has used LOCKS before... I'm wondering if there's a command to call to enable the lock? I believe I have them implemented correctly in the cache driver, but still not tested.

Also, about cache_interface.spin... If I were to modify this to handle some functions... Can I do this? Or is it best to just leave the cache driver for just cache???

*and my 2 cents about optimizations... Has anyone actually TRIED optimized dhrystone, and if so, how different are the numbers?

I'm currently just using Dhrystone as a "known-good" test, since it seems to find problems that the cache test doesn't. It is interesting to see how code modifications alter the results. The next board I build will have an auto-increment-address function, which should put read-writes in 1 hub-cycle per loop... That and the 6.25 MHz xtal should provide some interesting results, even if they are "just synthetic benchmarks" :P

Thanks again guys!