Multi-Propeller configurations with PropForth v 5.0

mindrobots

Posts: 6,506

Now that we have PropForth v5.0, my experimenting can commence in earnest.

(This is not intended to be a "Baby Brain" type thread.)

For a long time, since my RCA COSMAC 1802 days, I have had an interest in Forth and other threaded, interpreted type languages. From the start of my professional career (ca. 1980), I have had an interest in multi-processor systems, having started with Sperry-Univac and their multi-processor 1100 series of mainframes. Now, through the Propeller and PropForth, I can spend my free time exploring both of these strange interests. Some or all of this may be of little interest or little use to most folks here but hopefully, through my journey, I can find something of interest to the community and possibly inspire someone.

The attached document is the formal journal for this project. I'll keep the latest version of it attached to this first post.

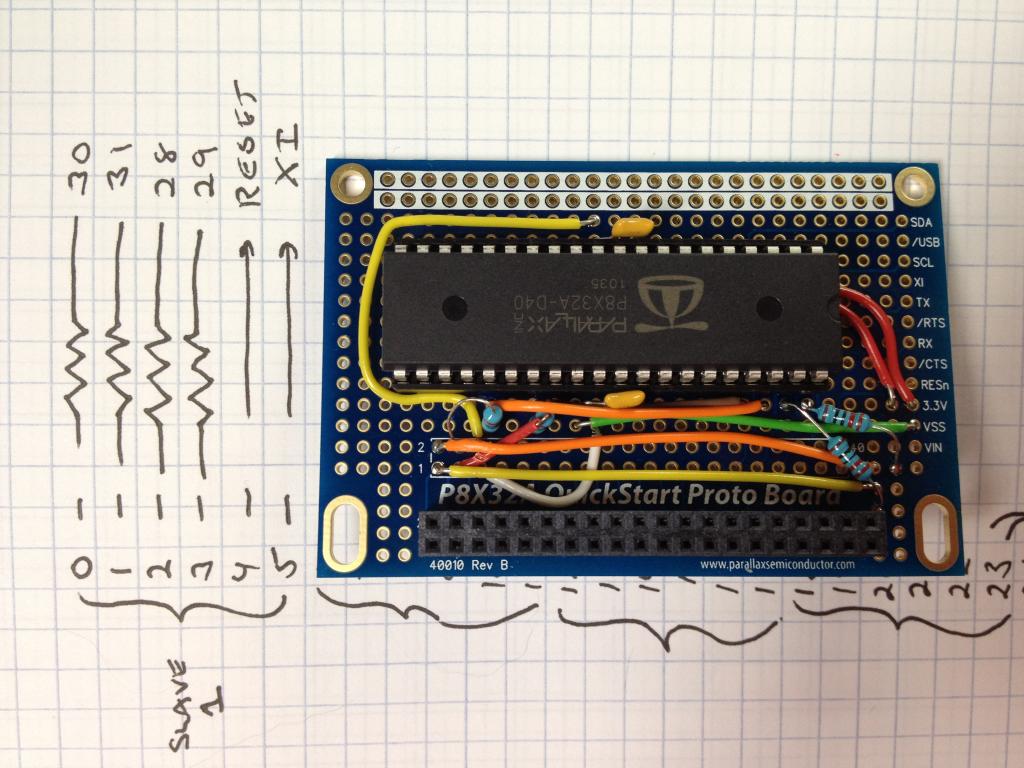

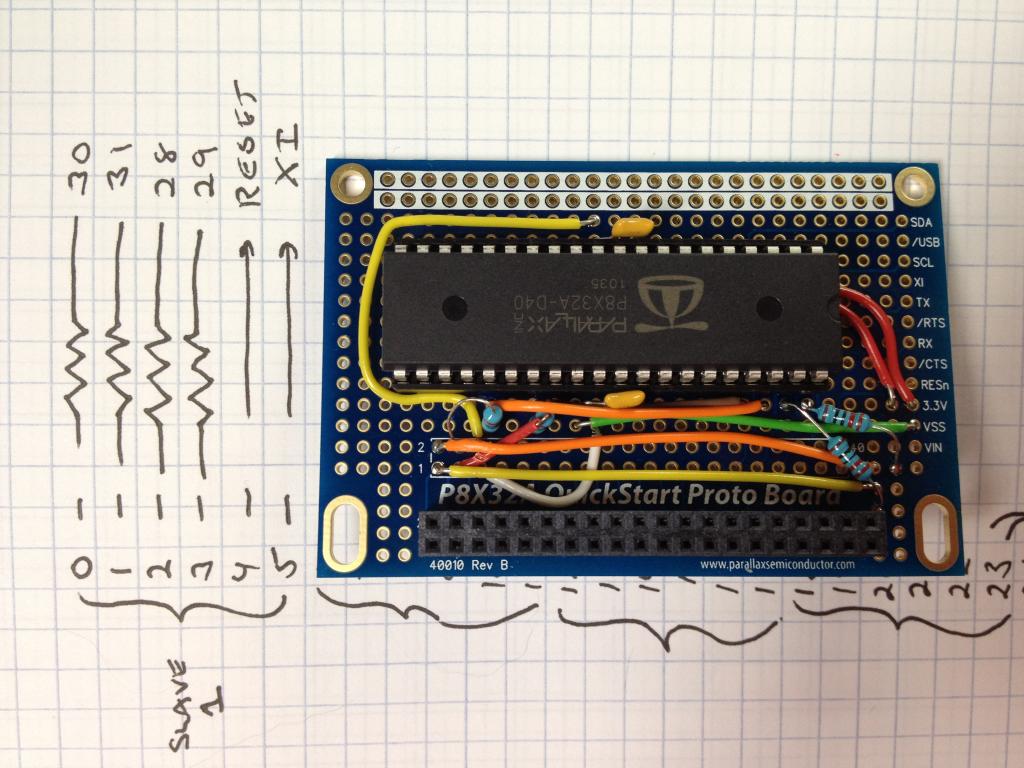

Step one: Build a Propeller Slave to attach to a QuickStart board. Parts: QuickStart Proto Board, 40-pin P8X32A, 40-pin DIP socket, 4 x 220 Ohm resistors, 10K Ohm resistor, hook-up wire.

Propeller Slave attached to QuickStart board

With this configuration, I was able to complete the PropForth v5.0 tutorial 7.2 NoROM. When complete, I had 2 Propellers running the same Forth images and connected via a 57600bps serial link. As it exists now, there are no channels between the Propellers and to do anything on the slave, you need to start a terminal session.

As I develop more Forth vocabulary, it will be included with this thread so everybody can play along!

I can see this heading in a number of interesting directions as the multi-Propeller development continues: Robots with a central (master) controller and slave Propellers to handle motor control, sensor input, etc.; Multi-Propeller development systems with a Propeller handling I/O, one handling communications and one handling general processing; Multi-propeller sensor networks.

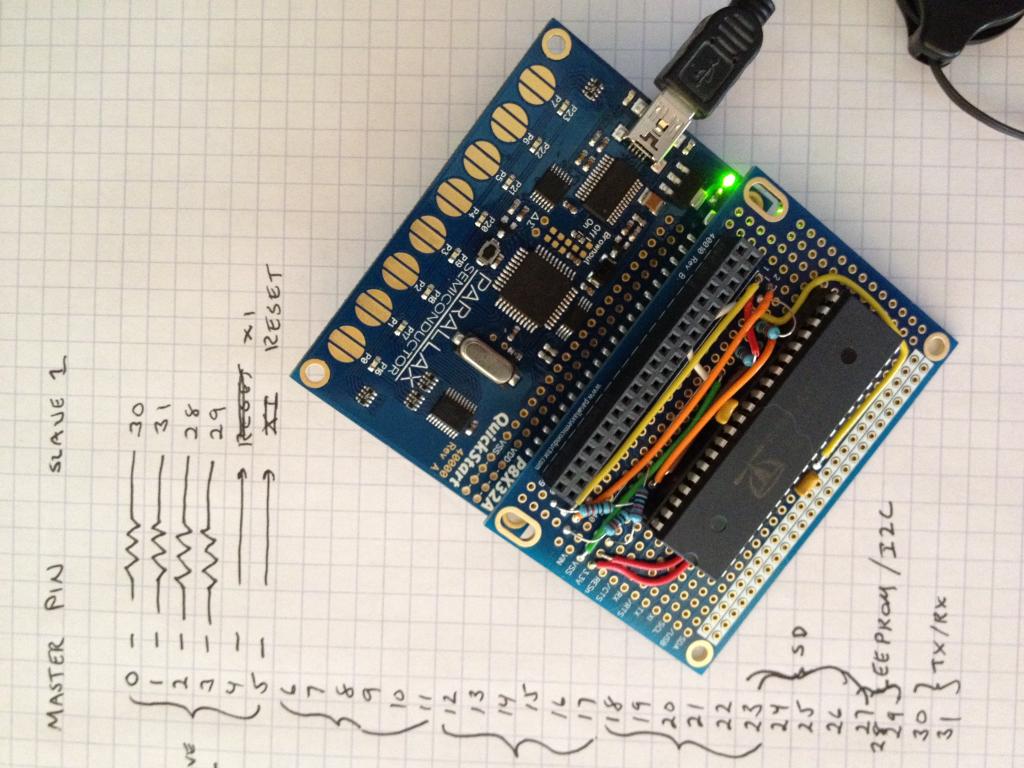

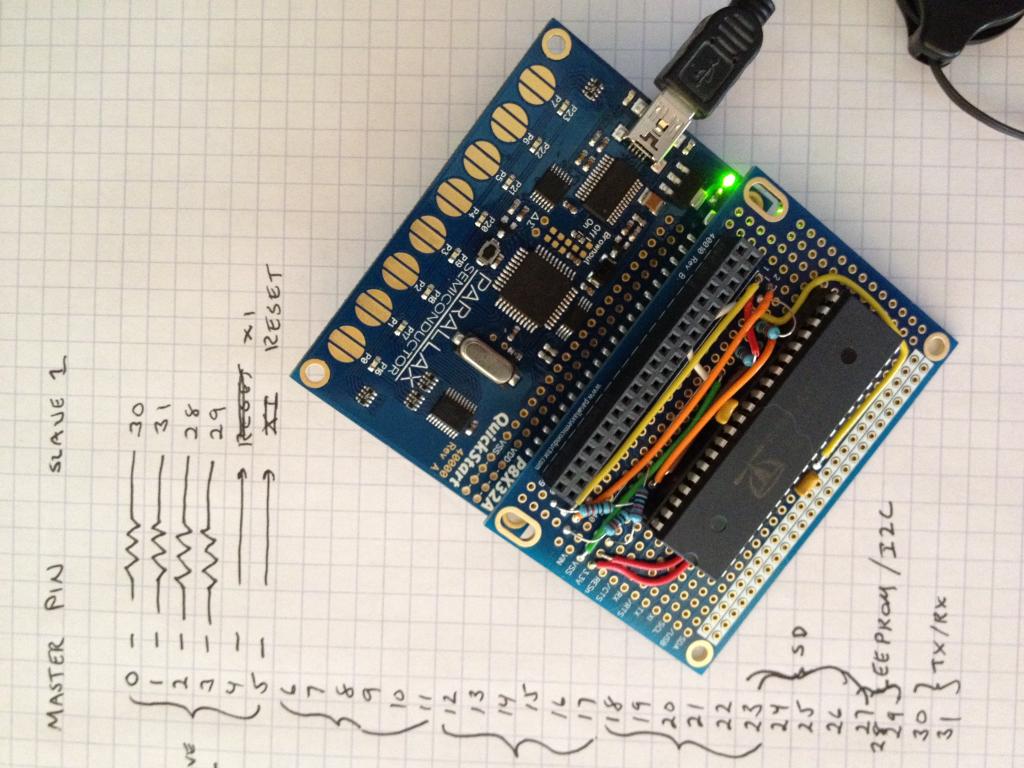

The QuickStart and QuickStart Proto Board combination is intended to explore master/slave configurations, with one Master Propeller providing power, clocking, EEPROM, and host communication for up to 4 slave Propellers.

I have a Gangster Gadget PPUSB with one of jazzed's Tetra-Prop boards on top of it to give me a five Propeller peer-to-peer cluster.

Additional clusters will be built up as needed.

That's all for now!

Comments

When you build your multiprop configuration, please consider the "test bed hardware configuration" in the hardware.txt file in the propforth download archive.

If you use the same physical pin assignments, your hardware will be compatible with the test bed, and the others can easily run your code for testing, experiments, etc.

If you include the Spinneret or WIZnet in your configuration, Sal or I or any of the others can examine your code WHILE IT IS RUNNING on YOUR board in YOUR lab, and Sal gets to stay home in his office.

That is, you get immediate, on-site assistance with no travel costs. (Maybe, if Sal remains in a good mood).

@rick:

Great work!

If it's not too much trouble, would you consider posting just your instructions and tips as a web page on the propforth site? Sometimes it helps to keep the instructions separate from the discussion; mixing them in a thread can be confusing for slower folks like me. Eventually I would like to use your instructions in Sal's test suite.

I had looked at (and built) the reference hardware, but for these experiments I wanted a set of Propellers I could configure to stack either wide (1 Master with 4 slaves all at the same level, driven by that one master) or deep (one master with multiple sub-slaves at different levels). In the wide configuration, the master can support at most 4 slaves and it can talk directly to each of the slaves. In the deep configuration, each Propeller is driven by the one below it, which allows for a theoretically unlimited number of slaves, but a message from the master (Prop 0) has to pass through each intermediate slave until it reaches the intended slave. This will require some message passing and hop counting logic to pass things between the various levels.
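To make the hop counting idea concrete, here is a minimal host-side sketch in Python. It is not PropForth code and not anything from the journal; the `Message` class, the `send_down_chain` function, and the rule "forward while hops remain, deliver when the count hits zero" are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Message:
    hops_left: int  # forwards remaining before the message is delivered
    payload: str


def send_down_chain(chain_length: int, target: int, payload: str) -> list[str]:
    """Simulate Prop 0 sending `payload` to Prop `target` in a deep chain.

    Hypothetical model: Prop 0 is the master, Props 1..chain_length-1 are
    slaves, and each slave only talks to its immediate neighbours.
    Returns a log of what each Propeller did with the message.
    """
    if not 0 < target < chain_length:
        raise ValueError("target must be a slave index within the chain")
    log = []
    # Prop 0 hands the message to Prop 1 with the number of forwards needed.
    msg = Message(hops_left=target - 1, payload=payload)
    for prop in range(1, chain_length):
        if msg.hops_left == 0:
            log.append(f"prop {prop}: deliver {msg.payload!r}")
            break
        log.append(f"prop {prop}: forward")
        msg.hops_left -= 1
    return log
```

In this model a message for Prop 3 is forwarded by Props 1 and 2 before being delivered, so latency grows with depth, while the wide configuration would always be a single hop.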

I could use a Spinner as the Master Propeller for the deep configuration; it could be kind of fun to turn it loose on the Internet!

I will start some Wiki work in my spare time. We don't want to get details lost in the threaded discussion.

....now if I could just get rid of this pesky day job!

You really have to do something about that day job. Sounds like it gets in the way.

I started on the sieve of Eratosthenes http://en.wikipedia.org/wiki/Sieve_of_Eratosthenes when we first started synchronous serial. Of course, it got sidelined for other projects. Maybe this would be a candidate now?

The idea was:

1) Make a big file on SD.

2) Start with the first two prime numbers, 2 and 3, in the file.

3) Take the next higher number, and check if it is evenly divisible by any number in the list.

4) Any numbers not evenly divisible are prime and are added to the list.

While the method shown on the wiki page uses a finite list of numbers, this method might go until the SD is filled. Also, one could start with 3 and increment the next number by two to check only odd numbers, etc., but in this case we want to look at spreading work over cogs, not optimizing the algorithm just yet.

Each next highest number might be assigned to a different cog? As long as the number of active cogs was less than the square root of the number being checked, I think it would work.

Then we could measure the speed of one cog, and check if adding more cogs makes any difference.

It wouldn't be a supercomputer, but it might have an interesting result. Please consider giving this a try as an early test.
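The steps above amount to trial division against the stored list of primes. As a rough host-side sketch of spreading that over cogs (plain Python, not PropForth; the function name `find_primes`, the `cogs` parameter, and the round-by-round model of concurrency are assumptions for illustration), each round hands one candidate to each cog, and every cog tests against the list as it stood when the round began:

```python
def find_primes(limit: int, cogs: int = 8) -> list[int]:
    """Trial division with `cogs` candidates checked per round.

    Hypothetical model: an in-memory list stands in for the file on SD,
    and one loop iteration stands in for all cogs working at once.
    """
    primes = [2, 3]  # step 2: seed the list with the first two primes
    candidate = 4
    while candidate <= limit:
        snapshot = tuple(primes)  # what every cog can see this round
        batch = range(candidate, min(candidate + cogs, limit + 1))
        # Each cog tests its own candidate against the same snapshot.
        found = [n for n in batch if all(n % p for p in snapshot)]
        primes.extend(found)  # results reach the list only after the round
        candidate += cogs
    return primes


if __name__ == "__main__":
    print(find_primes(30, cogs=8))
```

The model also shows why the number of cogs has to stay small relative to the numbers being checked: with `cogs=30`, the candidate 25 is tested before 5 has reached the list, so it slips through as a false prime. Timing one cog against several would still have to be done on real hardware.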

On the good news side, I spent some time working on the Wiki pages for this, so I'm not a complete slacker!

In researching and reading .f files, I also started working on a handy little forth code indexer....which suffered from major "scope creep" in its requirements definition.

So today's time at the "day job" wasn't a complete waste!

The cogx command allows the current cog to send a string to the interpreter on another cog.

file:///C:/propforth/doc/PropForth.htm#_Toc316818207

This is the implementation of "message passing" in PropForth. (We don't think it can get much simpler than that; if you can figure out how, please give suggestions.)

Responses and results are "returned" to the "master" cog as a side effect of the target application. In the example of the sieve of Eratosthenes, each cog causes a discovered prime to be added to the SD.

@Rick: Looking forward to your docs and extensions!

How does a slave cog 'send a message' to a cog on the master to write to the SD without danger of colliding with another slave cog's request?

There's a bunch of ways to do it; this is how Sal designed it:

Each cog running SD support has its own set of control variables. For each cog, the last file accessed is the current file. As I understand it, each cog waits for the SD to be ready before it requests a read or write. It's up to the user to make sure two tasks don't try to write to the same block at the same time. So files would be named COG1-LOG and COG2-LOG rather than a single file named COGS1AND2-LOGS. Each cog doesn't care what the other cogs are doing; when it gets a turn, it's the only one dancing and won't bump into anybody else.

Another way would be to have one cog do all the talking to the SD. The other cogs send requests to the SD cog. This might be an option when it is required to have COGS1AND2-LOGS as a common file for two cogs. In an earlier version, it was designed so one cog would load the SD support, and the common dictionary would not contain the SD code. This might happen again when the public release comes out and the "paged assembler" documentation is a little further along.
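As a rough illustration of the "one cog does all the talking" option, here is a host-side Python sketch using threads in place of cogs. None of it is PropForth: the `sd_cog` and `run_demo` names, the request tuple format, and the in-memory dictionary standing in for the SD card are all assumptions.

```python
import queue
import threading


def sd_cog(requests: queue.Queue, files: dict[str, list[str]]) -> None:
    """The only worker that touches `files` (standing in for the SD card)."""
    while True:
        item = requests.get()
        if item is None:  # sentinel: no more requests are coming
            break
        name, line = item
        files.setdefault(name, []).append(line)


def run_demo() -> dict[str, list[str]]:
    requests: queue.Queue = queue.Queue()
    files: dict[str, list[str]] = {}
    writer = threading.Thread(target=sd_cog, args=(requests, files))
    writer.start()

    def slave(cog_id: int) -> None:
        # A slave never writes directly; it only queues a request.
        for n in range(3):
            requests.put(("COGS1AND2-LOGS", f"cog{cog_id} entry {n}"))

    slaves = [threading.Thread(target=slave, args=(i,)) for i in (1, 2)]
    for t in slaves:
        t.start()
    for t in slaves:
        t.join()
    requests.put(None)  # all slaves are done, so stop the SD worker
    writer.join()
    return files
```

Because requests are served one at a time, two slaves can share a common log without colliding, at the cost of funnelling every write through a single worker. The order in which entries from different slaves interleave is not fixed.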

Multiple open files is another can of worms. P1COG3 could have one file open, P2COG6 another, P0COG2 a third... the poor little SD COG would need to track all that and open and close files as it queued requests. An application with a single COG having a single logging file open, and then soliciting data from its slave cogs, would be a better PropForth application.

You also get into memory issues in a bunch of different areas... it makes my head hurt the more I think about it.

The idea is that the fewest possible cogs have the SD driver, so as not to take up dictionary space from the application(s).

Typically, we have 1, 4, or 10 buffers for SD access. When you get down to it, any given file is only going to be writing one block at a time, and reading one block at a time. So the plan is to "Get 'er done" before moving on to the next one. So, the queuing is pretty much taken care of by virtue of the driver design, rather than specific queue overhead. This doesn't work in all cases, but so far we have had no problem cases jump up.

The best ones are very simple and elegant. The main strategy is to acknowledge that we are writing to SD over serial, and not expect to continuously write multiple files of multiple megabytes all at the same time. So we design for small files, and let the buffers do all the work.

If more files are needed, we can always add more SD cards, and if large files are needed, we can do something else, as long as we keep it as simple as possible. If it gets to be a can of worms, we go fishing until we figure it out.

You may be thinking too much. This can result in brain swelling (ask me, I know). When a specific need arises, it can be addressed nicely, but don't try to build in safety for exceptions that have not come to pass. This can lead to bloat that microcontrollers and Forth cannot afford.

You see? The brain swelling has started. Don't worry about this until your app has a specific requirement. When it does, we can break it down into a form that is solvable with the tools at hand, or we will make new tools.

Haha!! I don't think anyone has ever accused me of THAT!!!!

I need to remember to keep things simple. Thanks!