Floyd Steinberg image manipulation

Dr_Acula

Posts: 5,484

Dr_Acula

Posts: 5,484

I'm working on a crazy project to display bitmap .bmp files on the propeller. Scroll to the bottom of this post to see the eye-candy.

I have the .bmp part decoded in spin

and I have been working out the best way to display these files. This has led me down the road of doing a number of experiments with image processing.

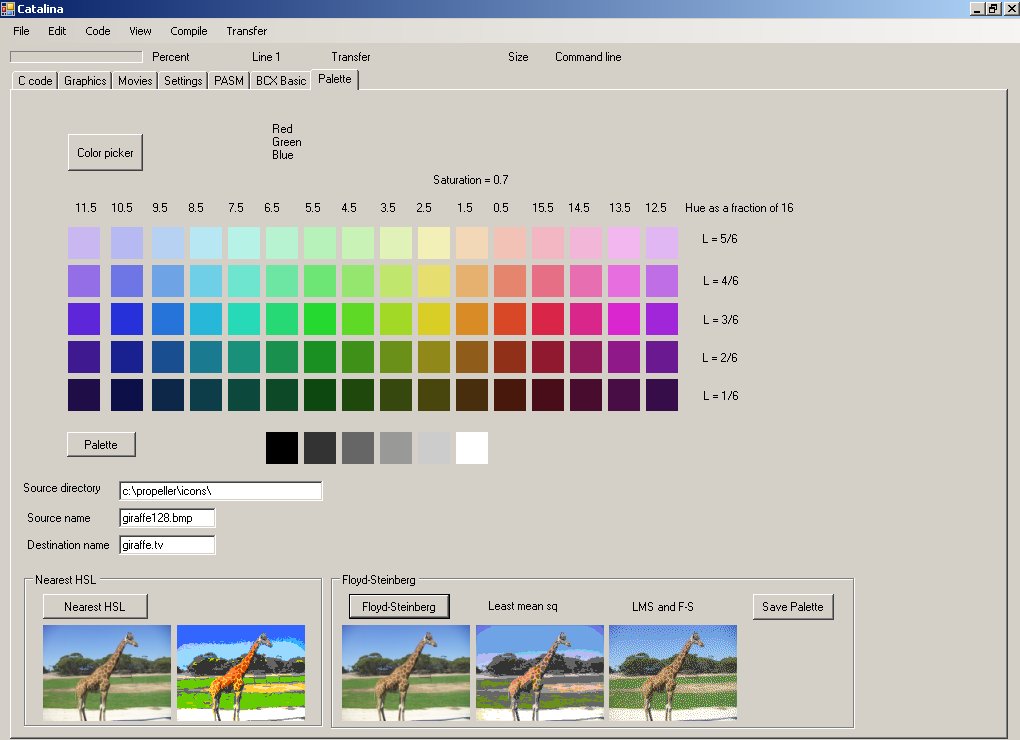

The propeller has a standard palette of 86 bytes and on the screenshot below are the 16 hues, 5 luminance values per hue, and 6 grayscales.

How best to use these?

My first experiments with with converting RGB values to HSL, and then assuming that if the saturation was <64/255 the color would be a gray scale. Anything more than 64 would be a color.

Because this reduces the Saturation from an integer scale of 0-255 to a discrete 0 or 1 value, many colors end up oversaturated. In particular, facial skin tones are very bright.

In the picture of the giraffe, the grass is over green and the giraffe is too yellow.

After having almost written the Spin code for this (mainly a matter of translating floating point math into 32 bit integer math), I happened upon a better formula.

This involves taking the RGB color space and working out the closest color based on the least mean square. Since we just want the closest color, the "least sum of squares" will do.

This is some C code to do just this.

and the results are the second to right picture of the giraffe.

The grass ends up a deeper shade of green, intermixed with gray.

However, the sky ends up with color bands, and indeed, color bands are a problem with all algorithms that translate pixels 1:1 to the propeller TV palette.

This is where the very clever Floyd-Steinberg formula can help http://en.wikipedia.org/wiki/Floyd%E2%80%93Steinberg_dithering with some more complete C code here http://en.literateprograms.org/Floyd-Steinberg_dithering_%28C%29

The output is the giraffe picture on the right. This is the vb.net image processor and the actual output on a TV display looks very similar.

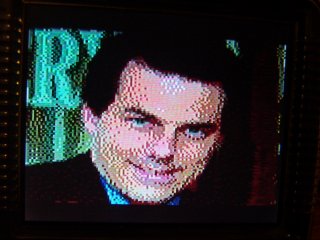

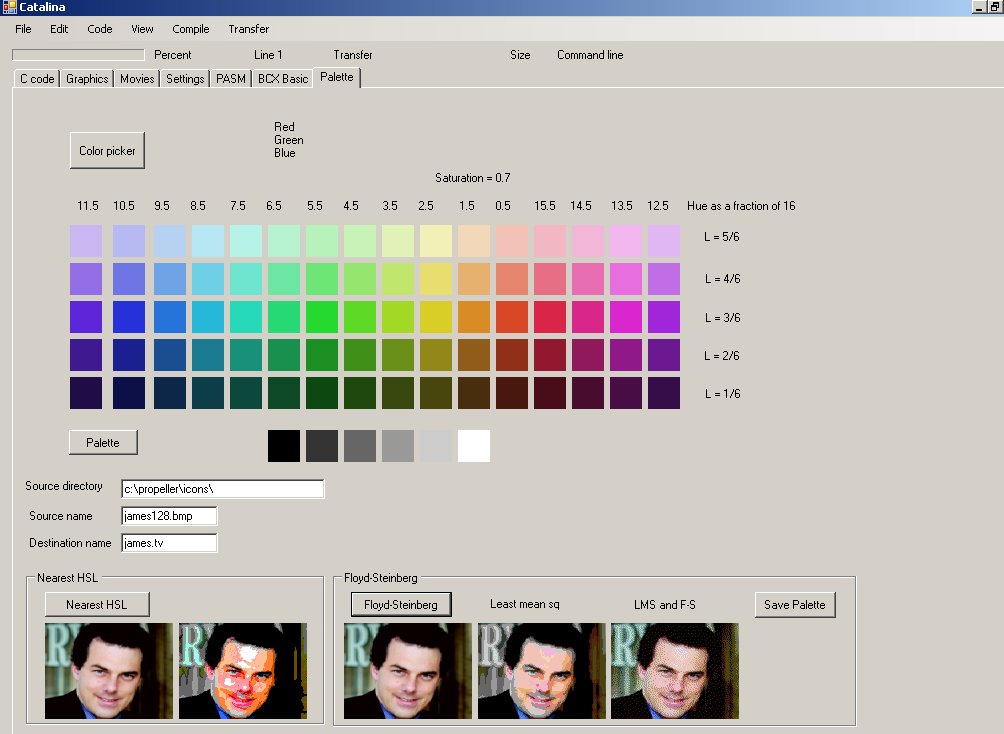

Where dithering really helps is with facial skin tones.

The final little picture is taken on a 3.5" TV screen, and is using Bagger's 128x96 display driver.

Attached also is the vb.net source. If you want to experiment with this you will need something like Paint Shop Pro to take pictures and convert to 128x96 bitmaps. I'm hoping soon that the propeller will be able to read .bmp files directly. I have a simple file viewer for .tv files - see the attached Bitmap.zip file

The .net software is doing a lot of extra things to display things on the screen. There is also a pre-processor that creates the palette software and this will be a lot quicker if it is saved as a binary file (see the attached palette.zip file). This little file stores R,G,B,propbyte for each of the 86 colors. You can read it with a binary file reader eg Hexedit, and the last 4 bytes are FF,FF,FF,07 which is white.

The core of the Floyd-Steinberg dithering code is this

and this function

The biggest problem with translating to Spin is that we need 128x96x3 values for the picture array which is more than the propeller memory. However, it may be possible to use either

1) external memory (rewrite in C) or

2) use the SD card as a temporary store.

Because the array transformation is

and because it starts at the top left and works left to right and then down, the values in the array being changed are always 'ahead' of where we are. This does make it somewhat easier to design an algorithm that uses two instances of, say, Kye's fat driver. One is reading, and one is writing.

I'm still debating whether to try to shoehorn this into Spin, or whether it is better to use C with external memory, or indeed, if it is better just to do the image transform on a PC and save as .tv files (which is working already).

I might also see if this works for movies...

I have the .bmp part decoded in spin

PUB ReadBitmap | Filesize,Offset,Width,Height,x,y,rowcounter,colcounter,R,G,B,remainder

fat.openfile(string("test.bmp"),"R") 'file to read

fat.readshort ' skip the first two bytes

FileSize := fat.readlong ' get the file size

fat.readlong ' read 4 bytes and discard

Offset := fat.readlong ' read in the offset

fat.readlong ' read 4 bytes and discard

Width := fat.readlong ' width

Height :=fat.readlong ' height

repeat (Offset-26) ' jump to the beginning of the data

fat.readbyte ' read and discard

' now read in the bitmap

y := (Height - 1)

repeat rowcounter from 0 to(Height - 1)

colcounter :=0

repeat x from 0 to (Width - 1)

B := fat.readbyte ' stored B,G,R and bottom row first

G := fat.readbyte

R := fat.readbyte

'color := (R << 16) + (G << 8) + B

'printhex(color) ' color value in one long 00RRGGBB, x and y are location

colcounter += 3

remainder := colcounter //= 4 ' same as Modulus

if (remainder <> 0)

repeat (4 - remainder) ' bitmap files pad out rows to a multiple of 4

fat.readbyte

y -= 1 ' move up a row

fat.closefile

and I have been working out the best way to display these files. This has led me down the road of doing a number of experiments with image processing.

The propeller has a standard palette of 86 bytes and on the screenshot below are the 16 hues, 5 luminance values per hue, and 6 grayscales.

How best to use these?

My first experiments with with converting RGB values to HSL, and then assuming that if the saturation was <64/255 the color would be a gray scale. Anything more than 64 would be a color.

Because this reduces the Saturation from an integer scale of 0-255 to a discrete 0 or 1 value, many colors end up oversaturated. In particular, facial skin tones are very bright.

In the picture of the giraffe, the grass is over green and the giraffe is too yellow.

After having almost written the Spin code for this (mainly a matter of translating floating point math into 32 bit integer math), I happened upon a better formula.

This involves taking the RGB color space and working out the closest color based on the least mean square. Since we just want the closest color, the "least sum of squares" will do.

This is some C code to do just this.

There are many ways to find the nearest palette color with varying levels of efficiency and quality. Here we use a trivial algorithm that finds the color with minimum straight-line distance in the color cube from the given color:

<<find nearest color to current pixel>>=

unsigned char index = FindNearestColor(*currentPixel, palette);

result.pixels[x + y*result.width] = index;

<<FindNearestColor definition>>=

unsigned char FindNearestColor(RGBTriple color, RGBPalette palette) {

int i, distanceSquared, minDistanceSquared, bestIndex = 0;

minDistanceSquared = 255*255 + 255*255 + 255*255 + 1;

for (i=0; i<palette.size; i++) {

int Rdiff = ((int)color.R) - palette.table[i].R;

int Gdiff = ((int)color.G) - palette.table[i].G;

int Bdiff = ((int)color.B) - palette.table[i].B;

distanceSquared = Rdiff*Rdiff + Gdiff*Gdiff + Bdiff*Bdiff;

if (distanceSquared < minDistanceSquared) {

minDistanceSquared = distanceSquared;

bestIndex = i;

}

}

return bestIndex;

}

and the results are the second to right picture of the giraffe.

The grass ends up a deeper shade of green, intermixed with gray.

However, the sky ends up with color bands, and indeed, color bands are a problem with all algorithms that translate pixels 1:1 to the propeller TV palette.

This is where the very clever Floyd-Steinberg formula can help http://en.wikipedia.org/wiki/Floyd%E2%80%93Steinberg_dithering with some more complete C code here http://en.literateprograms.org/Floyd-Steinberg_dithering_%28C%29

The output is the giraffe picture on the right. This is the vb.net image processor and the actual output on a TV display looks very similar.

Where dithering really helps is with facial skin tones.

The final little picture is taken on a 3.5" TV screen, and is using Bagger's 128x96 display driver.

Attached also is the vb.net source. If you want to experiment with this you will need something like Paint Shop Pro to take pictures and convert to 128x96 bitmaps. I'm hoping soon that the propeller will be able to read .bmp files directly. I have a simple file viewer for .tv files - see the attached Bitmap.zip file

The .net software is doing a lot of extra things to display things on the screen. There is also a pre-processor that creates the palette software and this will be a lot quicker if it is saved as a binary file (see the attached palette.zip file). This little file stores R,G,B,propbyte for each of the 86 colors. You can read it with a binary file reader eg Hexedit, and the last 4 bytes are FF,FF,FF,07 which is white.

The core of the Floyd-Steinberg dithering code is this

For y = 1 To sizey - 1

For x = 1 To sizex - 1

R = PixelArray(x, y, 0) ' old red

G = PixelArray(x, y, 1) ' old green

B = PixelArray(x, y, 2) ' old blue

' find the closest match

BestIndex = FindNearestColor(Palette, R, G, B) ' find closest palette color

NewR = Palette(BestIndex, 0)

NewG = Palette(BestIndex, 1)

NewB = Palette(BestIndex, 2)

'pixel[x][y] := newpixel

PixelArray(x, y, 0) = NewR

PixelArray(x, y, 1) = NewG

PixelArray(x, y, 2) = NewB

'quant_error := oldpixel - newpixel

QuantErrorR = R - NewR

QuantErrorG = G - NewG

QuantErrorB = B - NewB

'pixel[x+1][y] := pixel[x+1][y] + 7/16 * quant_error

PixelArray(x + 1, y, 0) = PixelArray(x + 1, y, 0) + (7 / 16) * QuantErrorR

PixelArray(x + 1, y, 1) = PixelArray(x + 1, y, 1) + (7 / 16) * QuantErrorG

PixelArray(x + 1, y, 2) = PixelArray(x + 1, y, 2) + (7 / 16) * QuantErrorB

'pixel[x-1][y+1] := pixel[x-1][y+1] + 3/16 * quant_error

PixelArray(x - 1, y + 1, 0) = PixelArray(x - 1, y + 1, 0) + (3 / 16) * QuantErrorR

PixelArray(x - 1, y + 1, 1) = PixelArray(x - 1, y + 1, 1) + (3 / 16) * QuantErrorG

PixelArray(x - 1, y + 1, 2) = PixelArray(x - 1, y + 1, 2) + (3 / 16) * QuantErrorB

'pixel[x][y+1] := pixel[x][y+1] + 5/16 * quant_error

PixelArray(x, y + 1, 0) = PixelArray(x, y + 1, 0) + (5 / 16) * QuantErrorR

PixelArray(x, y + 1, 1) = PixelArray(x, y + 1, 1) + (5 / 16) * QuantErrorG

PixelArray(x, y + 1, 2) = PixelArray(x, y + 1, 2) + (5 / 16) * QuantErrorB

'pixel[x+1][y+1] := pixel[x+1][y+1] + 1/16 * quant_error

PixelArray(x + 1, y + 1, 0) = PixelArray(x + 1, y + 1, 0) + (1 / 16) * QuantErrorR

PixelArray(x + 1, y + 1, 1) = PixelArray(x + 1, y + 1, 1) + (1 / 16) * QuantErrorG

PixelArray(x + 1, y + 1, 2) = PixelArray(x + 1, y + 1, 2) + (1 / 16) * QuantErrorB

Next

Next

and this function

Private Function FindNearestColor(ByVal Palette As Integer(,), ByVal R As Integer, ByVal G As Integer, ByVal B As Integer)

' minimum straight line distance from color to the color cube color

Dim i, BestIndex As Integer

Dim DistanceSquared, MinDistanceSquared, Rdiff, Gdiff, Bdiff As Long

MinDistanceSquared = 255 * 255 + 255 * 255 + 255 * 255 + 1

For i = 0 To 85

Rdiff = R - Palette(i, 0)

Gdiff = g - Palette(i, 1)

Bdiff = B - Palette(i, 2)

DistanceSquared = Rdiff * Rdiff + Gdiff * Gdiff + Bdiff * Bdiff

If DistanceSquared < MinDistanceSquared Then

MinDistanceSquared = DistanceSquared

BestIndex = i

End If

Next

Return BestIndex

End Function

The biggest problem with translating to Spin is that we need 128x96x3 values for the picture array which is more than the propeller memory. However, it may be possible to use either

1) external memory (rewrite in C) or

2) use the SD card as a temporary store.

Because the array transformation is

0,0,0 0,x,7 3,5,1

and because it starts at the top left and works left to right and then down, the values in the array being changed are always 'ahead' of where we are. This does make it somewhat easier to design an algorithm that uses two instances of, say, Kye's fat driver. One is reading, and one is writing.

I'm still debating whether to try to shoehorn this into Spin, or whether it is better to use C with external memory, or indeed, if it is better just to do the image transform on a PC and save as .tv files (which is working already).

I might also see if this works for movies...

Comments

Also a minor correction to the code - it was putting purple patches on the screen so have added some error bounds correction so values stay 0-255. This vb.net code has the movie maker code as well.

http://www.youtube.com/watch?v=VmVP4IcfSFY on the PropGFXLite (with sound!)

http://www.youtube.com/watch?v=4aIRdOV8tp4 using F-S dithering & no sound

and for Dr Zoidberg - http://www.youtube.com/watch?v=AYfac41-PdA

and Dr Zoidberg playing chess http://www.youtube.com/watch?v=3t7C68EhHUU

@Baggers - the engine behind this is your video driver and Kye's sd driver. There are black bars at the top and bottom and it is a subjective thing, but I think my face looks fatter on the photo of the screen than it does in real life *grin*.

This is 128x96 and there is plenty of hub ram free. Is it possible to fill the screen by increasing the number of y tiles? Maybe even just one more tile, and bump the centre of the screen down slightly? ?? 128x112?

-Phil

I have problems with aspect ratios on all sorts of picture capture methods. Cameras and mirrors always make me look fat (even when not at the fairground).

Great work on the picture improvements.

Yes, I'm suffering from FFS (Fat Face Syndrome).

This Finding Nemo video really shows off the full color range http://www.youtube.com/watch?v=QMLG-5t45Ek

@Baggers, anything that increases the number of pixels will improve things. How high can we go? Anything stopping us from going to Kye's 160x120? I have a vague recollection you mentioned going for even better resolution with two propellers?

If you keep making videos, you are gonna need one of these: http://www.google.com/products/catalog?q=easy+cap+usb+capture&oe=utf-8&rls=org.mozilla:en-US:official&client=firefox-a&channel=np&um=1&ie=UTF-8&tbm=shop&cid=8439074576208910462&sa=X&ei=SK0QTvnJB4PgiAKNl8XYDQ&ved=0CCoQ8wIwAQ

Comes with software to make little movies, and it has composite, S-video, NTSC / PAL.

256x96 8bit uses 24K of display memory

You could keep the ratio and get an increased color depth with that. Start witn 128x96 and use two pixels for each real RGB color. Do a F-S transform in floating point - multiply the pixel ahead by 7/16 of the error. A blue/gray color will end up a blue and a gray pixel next to each other. The F-S transform takes whatever palatte you have given it and minimises the error as it goes.

I'll do some experiments and see how it looks...

I need to go back and put a modified version into my NTSC 4x2 converter.