Can the Prop Handle This?

I want to make something very similar to this:

http://www.youtube.com/watch?v=UZ7_ED9g4FY

http://www.cs.cornell.edu/~asaxena/rccar/

I would build an outdoor track for it, with contrasting borders. It would learn to drive around the track through a machine learning algorithm. First I would drive it myself, so that it could learn that when the path curves right or left, I steer right or left. After this, I'd let it drive the track itself and occasionally tell it if it's doing something wrong. Eventually, I'd put a timer on the track and have it attempt to go around the track in the minimum time.

To do this, some image processing would have to be possible.

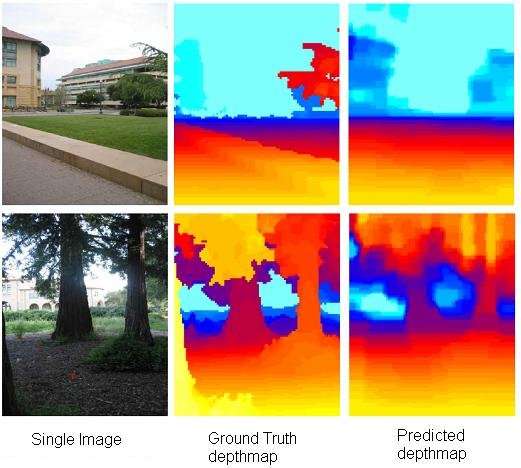

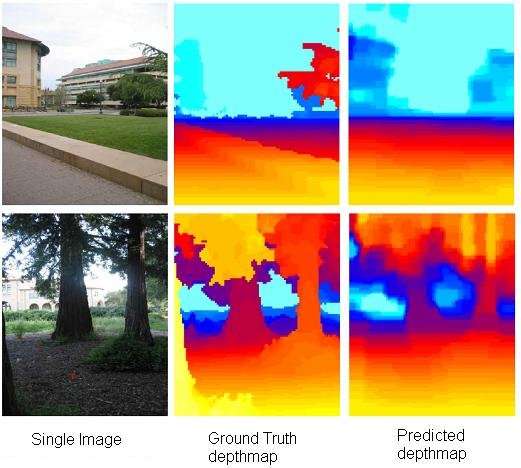

The image processing wouldn't necessarily have to be this complex:

http://www.cs.cornell.edu/~asaxena/learningdepth/

I can't find an example of what I want, but it would basically just need to be able to see the path and its boundaries, and color the traversable area, say, white and the area to avoid black, etc. From that image, the learned behavior decides where to turn and how fast to go.
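A minimal sketch of the "traversable = white, avoid = black" step, assuming a 16-shade greyscale frame and a path that is brighter than its borders. The threshold value and the sample frame are made up for illustration; a darker track would just flip the comparison.

```python
def segment(image, threshold):
    """Label each pixel 1 (traversable) if its grey level is at or above
    `threshold`, else 0 (area to avoid).

    Assumes the path is brighter than its borders (hypothetical)."""
    return [[1 if px >= threshold else 0 for px in row] for row in image]


# A tiny 4x6 greyscale frame with 16 shades (0-15); values are invented.
frame = [
    [2, 3, 12, 13, 3, 2],
    [2, 11, 12, 13, 4, 2],
    [3, 12, 13, 14, 12, 3],
    [11, 12, 13, 14, 13, 12],
]
mask = segment(frame, threshold=8)
for row in mask:
    print("".join("#" if p else "." for p in row))
```

Everything downstream (centers of cross sections, edge fitting, steering) can then work on the 0/1 mask instead of raw shades.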

Do you think the Prop can handle this? If not, what would: a Gumstix, a BeagleBoard, or something similar? Ideally something fairly robust and small.

I attached an example of the sort of processing power it would need. The image isn't showing up in the preview, so I may have to keep searching online.

Thanks,

Keith

Comments

I'd like it to be able to do this, preferably with fewer than 4 cogs:

I realise the end picture may be 16-shade greyscale etc., but can it handle this basic task? Can it see that the road bends slightly left, so it should turn the steering servo a little left?

Granted, here it would need to turn slightly left to get to the center of the road, then right to align. It would learn that through supervised and reinforcement learning.

After this seemed to be working, I'd put on the timer and tell it to minimize time around the track.

Post Edited (rough_wood) : 4/23/2010 7:57:47 PM GMT

1. Do you have programs for any of this in any language? If so, how big are the executables?

2. How much physical space do you have on your vehicle?

3. Would you be able to use a wireless link between the vehicle and a remote PC?

4. How much time do you have to capture and process the images?

5. Did you happen to read any issues of Robot magazine last year?

--Steve

▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔

May the road rise to meet you; may the sun shine on your back.

May you create something useful, even if it's just a hack.

Here is a series of articles on a balancing robot he built with it. www.circuitcellar.com/archives/viewable/224-Sander/index.html

Bill

1. A program that watches for movement is 262 KB; it was a .hex file. There was also a color-tracking program for the CMUcam, and it was 8 KB.

2. An RC truck.

3. I'd prefer it to just work through the RC truck and a radio.

4. Around 1/10 of a second, maybe as much as half a second.

5. No, why?

So it seems the Prop should be able to handle it. I was hoping to add an accelerometer so the vehicle can slow down if it jounces too much, to avoid damage. Since this seems to take only a couple of cogs, that should be entirely possible.
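One way the "slow down if it jounces too much" idea could look, as a sketch only: compare the vertical accelerometer reading with 1 g and, when the deviation exceeds a threshold, pull the throttle pulse back toward neutral. The 1500 µs neutral pulse, the 0.5 g threshold and the halving factor are all hypothetical values, not tuned numbers.

```python
NEUTRAL_US = 1500  # hypothetical throttle servo neutral pulse width (microseconds)


def limit_throttle(throttle_us, accel_z_g, bump_threshold_g=0.5, slow_factor=0.5):
    """Scale the throttle toward neutral when |a_z - 1 g| exceeds the threshold.

    accel_z_g is the vertical acceleration in g (about 1.0 on smooth ground)."""
    jounce = abs(accel_z_g - 1.0)
    if jounce > bump_threshold_g:
        return NEUTRAL_US + int((throttle_us - NEUTRAL_US) * slow_factor)
    return throttle_us


print(limit_throttle(1800, 1.1))  # smooth ground: unchanged, 1800
print(limit_throttle(1800, 2.0))  # big bump: 1650
```

Because it only rescales the offset from neutral, the same check works whether the truck is driving forward or in reverse.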

So maybe the vision isn't the hard part, the learning part will be.

It looks like Hanno said it could handle 30 FPS; that'll be more than enough.

I still can't tell if ViewPort will be necessary for this.

Can the Prop find an area of contrast on its own? If not, I might have to get a Gumstix and try to put OpenCV on it, or something like that.

It's OK if it's gotta be greyscale etc. I'm just trying to show the basic concept of what I want.

I am allowing in the ballpark of $750-1000 for the project, not counting whatever I already have that may be of use. The only issue is that I am a full-time engineering student, so I need to know that the project can be started with a Prop; otherwise I'll have to put it off for a while, because I don't have the time to learn a new language from scratch.

This is something I intend to have work cleanly and look clean when it's done. The truck itself will be around $350-500; I am currently looking at the Traxxas T-Maxx. I considered the Slash/Slayer/Revo, but the T-Maxx chassis, being a flat aluminum plate, seems easier to attach my additions to. Plus it seems very robust.

I was going to make a Hexapod, but the servos themselves would cost more than what I expect this completed project to cost.

If the Prop can't do this well, or at least give me a very good start and learning experience, I'll wait and do it with an ARM or Gumstix or something similar. I have a week before I even need to consider ordering anything, so right now I'm looking mostly at feasibility, and at a simpler backup project in case I don't think I can make a good start on the truck by the end of summer.

Thanks for the link. Unfortunately I won't be lining the track with beacons or anything; it will just need to be able to see contrast etc. and find a traversable path.

I'll soon be writing a high-performance ASM driver for the CMU camera that will dump the camera's frames to memory as fast as possible in the background, using only one processor.

Since the baud rate of the CMUcam is very slow, however, the driver will have to use windowing and line mode on the camera to reduce the amount of data sent over the serial bus, so that more frames can be grabbed per second.
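To see why windowing matters, here is a rough back-of-the-envelope bound, assuming 8N1 serial framing (10 bits on the wire per byte), 1 byte per pixel, an assumed 115200 baud link, and no protocol overhead. The 80x60 and 80x20 window sizes are hypothetical, not CMUcam specifications.

```python
def max_frames_per_second(width, height, bytes_per_pixel, baud, bits_per_byte=10):
    """Upper bound on frames per second over an asynchronous serial link.

    bits_per_byte=10 assumes 8N1 framing (1 start + 8 data + 1 stop bit);
    camera packet headers and command round trips are ignored, so real
    rates will be lower."""
    bytes_per_second = baud / bits_per_byte
    return bytes_per_second / (width * height * bytes_per_pixel)


BAUD = 115200  # assumed link speed; check what the camera actually supports
print(round(max_frames_per_second(80, 60, 1, BAUD), 2))  # whole 80x60 window
print(round(max_frames_per_second(80, 20, 1, BAUD), 2))  # 20-row window
```

Cutting the window to a third of the rows triples the ceiling (2.4 to 7.2 frames per second here), which is the point of windowing and line mode on a slow link.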

I'll be trying to support all the features of the CMUCam. So, maybe the driver will be helpful to you. I will post it in the OBEX when done.

However, I will not be able to get on this for a month or so as I'll be working on a few other drivers that I plan on releasing soon to the public. (Note: Don't depend on me to build anything for you. I will try to get to making the driver as soon as possible but I could get caught up in other stuff).

Please email me if you would like any specific features built into the driver.

Thanks,

Nyamekye,

I appreciate the heads up. At the moment I have only been looking into this idea for the past two days in my spare time, so I don't know everything I will need yet.

One feature I'm not sure is offered is taking two images in quick succession, pausing, then taking two more, and so on, to try to give a sense of velocity and angular velocity. (This may not be possible with the slow baud rate?)

I understand you may have to delay it, I will plan around not using it but hope things work out.

Thanks,

Keith

No, it's not necessary... I have it running without any PC side at all. It's just two cogs... one for video input (which captures the frame in to the video buffer) and the second to do the processing... in his example, he is simply looking for the "bright" spot, but it can be adapted for other things.

In his example, he is simply using ViewPort to display the memory buffer on the PC, so you can "see" what is actually being captured and processed. ViewPort is a really cool tool for debugging and understanding exactly what is going on. You can use his free/trial version to debug the app, but once that's done, you don't need it and can free up the cog it uses. (Don't get me wrong, I highly recommend ViewPort... it's just not required for this to work.)

Bill

Do you think the Prop can process images similar to my attachments and react to them within, say, half a second of real time, making corrections about 4 times per second?

It will just be driving a throttle servo and steering servo, it will likely be reading an accelerometer along with the images. Maybe a gyroscope later, I just want a starting platform honestly. To get something professional I will likely need to jump up to more expensive chips like OMAP.

I will likely incorporate some form of fuzzy logic and PID control. There will also be some learning algorithm.
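For the PID part, a minimal textbook sketch (not code from this thread) of turning the path-center offset into a steering servo pulse. The error scale (offset as a fraction of half the image width, negative meaning the path is left of center), the gains and the 1000-2000 µs servo range are all assumptions.

```python
class PID:
    """Textbook PID controller; gains here are placeholders, not tuned values."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the very first update

    def update(self, error, dt):
        """Return the control output for this error sample, dt seconds after the last."""
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def steering_pulse_us(correction, neutral_us=1500, span_us=500):
    """Clamp the correction to [-1, 1] and map it to a servo pulse width.
    Assumes 1000-2000 us travel with 1500 us centered (hypothetical)."""
    c = max(-1.0, min(1.0, correction))
    return int(round(neutral_us + c * span_us))


# Path slightly left of center, updated 10 times per second (dt = 0.1 s).
pid = PID(kp=0.8, ki=0.0, kd=0.1)
print(steering_pulse_us(pid.update(-0.25, dt=0.1)))  # 1400: steer a little left
```

A fuzzy or learned layer could sit on top of this by adjusting the target offset (e.g. "stay slightly left here") rather than driving the servo directly.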

Thanks,

Keith

Programming the Prop chip in ASM is not that painful. It's a must-have skill if you want to unlock the chip's true potential.

As for estimating the processing speed... you really should develop the algorithm first before you start asking those questions. It's impossible to guess the speed of an application before you have something concrete to work with.

Nyamekye,

I'll give that a shot. Unfortunately, when it comes to stuff like this I work very much by trial and error, learning how to do it as I go rather than just doing it.

I'll get started on it and post it here. It probably won't be very symbolic; I'm not a big theoretical math guy. Unfortunately, I can see Taylor series or something like that being useful for this, but I ignored them because they were such a small part of my grade.

Tentatively, here is what I would like to calculate from this image:

The center of 5-10 horizontal cross sections of the traversable path

The center of the projected horizontal cross sections of the traversable path (Guessing how much space there is outside the view of the camera, for example to the left, there is probably more space that is traversable)

Estimating the center of the area of the traversable path (might not even be useful)
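The first and third items above reduce to simple averages once the frame has been turned into a 0/1 traversable mask. A sketch under that assumption (the mask and the chosen rows are invented); the "projected" centers in the second item would need a model of what lies outside the frame and are not attempted here.

```python
def row_centers(mask, rows):
    """Mean column index of the traversable (1) pixels on each chosen row,
    or None when a row contains no traversable pixels."""
    centers = []
    for r in rows:
        cols = [c for c, v in enumerate(mask[r]) if v]
        centers.append(sum(cols) / len(cols) if cols else None)
    return centers


def area_centroid(mask):
    """(x, y) centroid of all traversable pixels, or None if there are none."""
    sx = sy = n = 0
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                sx += x
                sy += y
                n += 1
    return (sx / n, sy / n) if n else None


MASK = [
    [0, 0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [1, 1, 1, 1, 1, 1],
]
print(row_centers(MASK, rows=[0, 1, 2, 3]))
print(area_centroid(MASK))
```

Only the handful of sampled rows needs to be scanned for the cross-section centers, which keeps the per-frame work small.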

Based on forward/backward acceleration, it would estimate the distance to each of those horizontal cross sections. (For example, when it is accelerating hard, something in the center of the frame will be farther away than it would be at a standstill, due to suspension compression; I would put in a simple approximate linear model for this. Braking would have the opposite effect, making objects at the center of the image closer.)
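A sketch of that row-to-distance estimate, assuming a pinhole camera over flat ground and the simple linear pitch model described above (pitch changes by a fixed angle per g of acceleration). Every numeric parameter below is a hypothetical placeholder, not a measurement.

```python
import math

# All parameter values below are hypothetical placeholders, not measurements.
CAM_HEIGHT_M = 0.30            # camera lens height above the ground
PITCH0_RAD = math.radians(15)  # downward tilt of the camera at a standstill
K_RAD_PER_G = math.radians(3)  # assumed linear pitch change per g of acceleration
FOCAL_PX = 200.0               # focal length expressed in pixels
CENTER_ROW = 120.0             # image row on the optical axis (240-row frame)


def row_to_distance(row, accel_g):
    """Ground distance (m) seen at image row `row` (0 = top of frame).

    Positive accel_g (speeding up) squats the rear and tilts the camera up,
    reducing the downward pitch; braking (negative) tilts it down."""
    pitch = PITCH0_RAD - K_RAD_PER_G * accel_g
    angle = pitch + math.atan((row - CENTER_ROW) / FOCAL_PX)
    if angle <= 0:
        return math.inf  # ray at or above the horizon never meets the ground
    return CAM_HEIGHT_M / math.tan(angle)


for a in (-0.5, 0.0, 0.5):
    print(a, round(row_to_distance(120, a), 3))
```

The same function applied to the few sampled rows gives a distance for each horizontal cross section.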

I would like for these things to be able to be processed and calculated around 4-10 times per second, within 1/4-1/3 seconds of real time.

Another plus would be to take two pictures at the camera's maximum frame rate, analyze the first as described above, and in the second, find an object (such as the corner of the cardboard box in this case) and estimate how much it moved relative to the first picture. That way it could make a rough guess at its 2D and angular velocities.
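One simple way to estimate "how much it moved" between two pictures is block matching along a row: try each candidate shift and keep the one with the smallest mean absolute difference. This is a sketch on single rows of made-up pixel values; a real implementation would match a small 2D patch around the feature (e.g. the box corner).

```python
def estimate_shift(row_a, row_b, max_shift):
    """Integer shift s (pixels) that best aligns row_b to row_a, meaning
    row_b[x + s] is closest to row_a[x], judged by the mean absolute
    difference over the overlapping pixels."""
    n = len(row_a)
    best_shift, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        total = count = 0
        for x in range(n):
            xb = x + s
            if 0 <= xb < n:
                total += abs(row_a[x] - row_b[xb])
                count += 1
        if count and total / count < best_cost:
            best_shift, best_cost = s, total / count
    return best_shift


first = [0, 0, 0, 9, 9, 0, 0, 0, 0, 0]
second = [0, 0, 0, 0, 0, 9, 9, 0, 0, 0]  # feature moved 2 pixels right
print(estimate_shift(first, second, max_shift=3))  # 2
```

Dividing the shift by the time between frames gives pixels per second; converting that to metres per second would need the row-to-distance geometry of the camera.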

In this picture, the tic marks are the center of the horizontal sections of traversable path. Cross marks are center of projected traversable area. Horizontal lines are the sections it analyzes and guesses approximate distances to. Green is traversable, Red is not traversable. The cube is an object that can stand out, another example would be a tree or post.

Do you think this is possible to compute at that speed ON the Prop?

After this, it would need to calculate the action to take on the throttle and steering servos in a fairly small amount of time. I'd like it to make about 5-10 corrections per second within half a second of real time (this total time would include taking the picture, adjusting contrast or whatever, doing the analysis I mentioned above, and choosing an action to perform on the 2 servos). While doing this, it would need to watch for an "end of the lap" signal (at which point it calculates the lap time) and watch for excessive Z bumps on the accelerometer, so it can slow down before it takes damage.

I'll work on the learning algorithm and post that when I can. Obviously the Prop will need to be able to do this image function first before it can do the learning.

If I were thinking along these lines, I'd start with a camera on whatever robot chassis it is and do all the processing on my PC, feeding control commands back to the robot's servos. I'd start writing the software in whatever language I'm comfortable with and is quick and easy to modify and debug there.

I'd also simplify the initial problem greatly. For example, have the robot travel on a flat black surface and try to follow a white path on it. When that is working to some level, start to think about the real world: subtle colors of scenery, changes due to lighting variations and shadows, changes due to tilt of the vehicle under acceleration/braking, objects obscuring the view (the box above, for example) and all those millions of other messy real-world features.

In this case I think the "high-level" problems of vision recognition and navigation are hard enough to tackle before getting into all the nitty-gritty of putting the finished program into a Prop or two on the robot.

Here in Propeller land many things that were said to be impossible end up being done anyway :) The trick is not to tell whoever is attempting the impossible that it is so.

▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔

For me, the past is not over yet.

▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔▔

Leon Heller

Amateur radio callsign: G1HSM

Post Edited (Leon) : 4/26/2010 1:53:13 PM GMT

Personally, my view is that you can do just about anything on the Prop in ASM, as long as it's theoretically possible with an MCU running at the same speed.

IDK, I would do the job with a Prop. I mean, you have eight hardware threads to work with, so you could just devote four to processing in parallel.

It should be doable. It's better to work in a comfortable environment than an unknown one if you want to get work done.

Nyamekye,

As far as complexity goes, if faces and all that can be located, then simple blobs can be located. All I'm really looking for is for the thing to see what general blob of color (shade) it is on and distinguish it from the surrounding green and blue. After that it would use simple regression to draw the edges and calculate the average on each of those 5-10 horizontal cross sections.

I don't know how to make the blob recognition, but from the sounds of it there is an engine for this that exists. After that I think the Prop's math functions should allow a simple form of regression.
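The "simple regression to draw the edges" step could be as small as this sketch: take the first traversable pixel on each row of a 0/1 mask as a left-edge point, then fit a straight line by ordinary least squares. Fitting x as a function of y (rather than the usual y of x) is a deliberate choice so that near-vertical road edges stay well conditioned. The mask is invented; the right edge works the same way with the last traversable pixel.

```python
MASK = [
    [0, 0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0, 0],
    [0, 1, 1, 1, 1, 0],
    [1, 1, 1, 1, 1, 1],
]


def left_edge_points(mask):
    """(x, y) of the first traversable pixel on each row that has one."""
    points = []
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                points.append((x, y))
                break
    return points


def fit_line(points):
    """Least-squares fit of x = slope * y + intercept (needs 2+ distinct y)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((y - mean_y) * (x - mean_x) for x, y in points)
    syy = sum((y - mean_y) ** 2 for _, y in points)
    slope = sxy / syy
    return slope, mean_x - slope * mean_y


slope, intercept = fit_line(left_edge_points(MASK))
print(slope, intercept)
```

With a line for each edge, the path center at any row is just the midpoint of the two fitted x values.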

Think about insects, etc, with simple vision systems. Hanno's system simply looks for the brightest spot, based on color value stored in the image "array." Finding that spot allows it to calculate where that spot is (x,y) within the image.
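The brightest-spot idea, sketched in Python purely to show the logic (this is not Hanno's actual Propeller code): scan the image array once, remember the largest value and where it was.

```python
def brightest_spot(image):
    """Return (x, y) of the brightest pixel; the first one found wins on ties."""
    best_value, best_x, best_y = image[0][0], 0, 0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if value > best_value:
                best_value, best_x, best_y = value, x, y
    return best_x, best_y


frame = [
    [1, 2, 3],
    [4, 15, 6],
    [7, 8, 9],
]
print(brightest_spot(frame))  # (1, 1)
```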

In your case, camera position is going to be important. If you mount the camera high and point it down, you can "estimate" the angle of the plane the robot is driving on... from that, you can estimate distance. You can then do a simple color check to see whether it is on the path or not. You don't have to look at the entire picture to determine that... just the few planes (or rows) of the image you're interested in.

Bill

If you are just trying to follow a path then there is no need for a 2D image, you just need to scan a line. You could just extract a single line from the image and look for the part with the same colour (within some range) as the path and aim for the center of that region. Easy to implement and does not need much processing or memory.
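The single-line approach above, as a sketch: mark pixels whose value is within a tolerance of the path value, find the longest such run, and return its center. The path value, tolerance and sample line are hypothetical; with colour data the closeness test would compare all channels.

```python
def path_center_on_line(line, path_value, tolerance):
    """Center (column) of the longest run of pixels within `tolerance`
    of `path_value`, or None if no pixel matches."""
    best_start, best_len = None, 0
    run_start = None
    for x, v in enumerate(line + [None]):  # None sentinel closes the last run
        inside = v is not None and abs(v - path_value) <= tolerance
        if inside and run_start is None:
            run_start = x
        elif not inside and run_start is not None:
            if x - run_start > best_len:
                best_start, best_len = run_start, x - run_start
            run_start = None
    if best_start is None:
        return None
    return best_start + (best_len - 1) / 2


scan = [2, 3, 12, 13, 11, 4, 12, 2]
print(path_center_on_line(scan, path_value=12, tolerance=2))  # 3.0
```

Picking the longest run (rather than every matching pixel) keeps a stray bright patch off to the side from pulling the aim point.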

Not sure if Hanno's code works for colour; you could always use a colour sensor plus a lens (from cheap small binoculars) scanned back and forth with a servo.

Graham

I picture it being mounted so the top of the image is just over the horizon, and the bottom of the screen is within say a foot of the front of the Nitro Truck.

Again, this image only needs to be around 200x240 pixels with 16 shades.
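A quick check on memory for that frame size: 16 shades fit in 4 bits, so two pixels can share a byte. The arithmetic gives 200 × 240 × 4 / 8 = 24,000 bytes, roughly three quarters of the Propeller's 32 KB of hub RAM, before any other buffers or code. The packing order below (first pixel in the low nibble) is an arbitrary choice for illustration.

```python
def frame_bytes(width, height, bits_per_pixel):
    """Bytes needed to store one frame at the given bit depth."""
    return width * height * bits_per_pixel // 8


def pack_nibbles(pixels):
    """Pack 4-bit pixel values (0-15) two per byte, first pixel in the low nibble."""
    out = bytearray((len(pixels) + 1) // 2)
    for i, p in enumerate(pixels):
        if not 0 <= p <= 15:
            raise ValueError("pixel value out of 4-bit range")
        out[i // 2] |= p << (4 * (i % 2))
    return out


def unpack_nibble(buf, i):
    """Read back pixel i from a packed buffer."""
    return (buf[i // 2] >> (4 * (i % 2))) & 0x0F


print(frame_bytes(200, 240, 4))        # 24000
print(list(pack_nibbles([1, 15, 7])))  # [241, 7]
```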

The learning algorithm would involve something like keeping track of a reference lap and a previous lap. Say there are 6 turns; it will have stored values from the supervised teaching session, such as a mathematical snapshot of the entry to and exit from each corner.

Then the robot will drive the track the same way I did, except that at each waypoint it will change one thing, like driving faster, slower, a little farther right, or a little farther left. If its lap time is better, or I give it positive reinforcement, it will put that new action into its 10-20 reference waypoints. It will continue to do this until, ideally, it finds the "Racing Line".
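The scheme above is essentially random hill climbing: perturb one setting at one waypoint, run a lap, keep the change only if the lap was faster. A sketch under stated assumptions: each waypoint is a hypothetical dict with "speed" and "offset" entries, and `run_lap` stands in for actually driving and timing a lap (here a toy function so it can run on a PC). Operator reinforcement could be folded in by treating a "good" signal as a faster lap.

```python
import random


def propose(reference, rng, speed_step=0.1, offset_step=0.05):
    """Copy the reference lap and nudge exactly one value at one waypoint."""
    candidate = [dict(wp) for wp in reference]  # never mutate the reference
    wp = rng.choice(candidate)
    key, step = rng.choice([("speed", speed_step), ("offset", offset_step)])
    wp[key] += rng.choice([-step, step])
    return candidate


def improve(reference, run_lap, laps, rng):
    """Keep a change only if the lap it produced was faster."""
    best_time = run_lap(reference)
    for _ in range(laps):
        candidate = propose(reference, rng)
        lap_time = run_lap(candidate)
        if lap_time < best_time:
            reference, best_time = candidate, lap_time
    return reference, best_time


def toy_lap_time(lap):
    """Toy stand-in for a real lap: fastest at speed 1.0 and offset 0.0."""
    return sum((wp["speed"] - 1.0) ** 2 + wp["offset"] ** 2 for wp in lap)


start = [{"speed": 0.5, "offset": 0.0} for _ in range(6)]  # 6 corners
learned, t = improve(start, toy_lap_time, laps=300, rng=random.Random(1))
print(round(toy_lap_time(start), 3), "->", round(t, 3))
```

Changing only one thing per lap makes it clear which change earned the better time, at the cost of needing many laps.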

As for two consecutive snapshots to calculate velocity, that won't be absolutely necessary, just a plus if I can do it.

All I need to know is that the Prop can find a contrasting blob, give it very simple borders with regression or something, find the X values at a few Y cross sections, find the center, decide according to the reference whether it should be right or left of center and how fast it should go, and know how to slightly change a random-ish thing like "staying slightly left at waypoint 4", then wait for the lap time or positive reinforcement to decide whether to replace the old reference with the new one.

There can probably be 4 cogs available for image processing alone.

Even black and white should pretty easily see the difference between dirt and grass.

As for one line, I don't know that it will be quite enough information. I think it may need to at least know whether it is looking at an arc or a straight line (as far as the path centerline goes).

Otherwise I could see it needing to process those lines much faster in order to not skirt to the outside of hairpin corners.

By scan a line you don't mean an expensive laser scanner, correct? That would be too heavy and expensive, not to mention the Prop might not be able to take in the data.

Starting with a learned example could improve that somewhat, as will limiting the choices available to the algorithm (as in, choosing between 'Left and Right' will be learned faster than choosing between '1/2 left, full left, 1/2 right, full right') - if you limit the learning algorithm to only apply on corners then you will also need some way to tell the robot when it is at a corner (otherwise it might 'think' it's at a corner when it is not).

Space will also be a consideration, if using Q-Learning (state-action pairs) you will need to store every possible state and action, though you might be able to get away with keeping the bulk of the data on an SD card. Alternatively there are ways to do Q-Learning with a neural network which will reduce the memory footprint (just google 'q learning neural network').
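To make the state-action storage concrete, a minimal tabular Q-learning sketch with a deliberately small action set. The state names, the discretization used in the memory estimate (8 offset bins × 8 curvature bins × 4 speed bins) and the 2-byte entries are all hypothetical; alpha and gamma are ordinary placeholder values.

```python
from collections import defaultdict

ACTIONS = ("left", "straight", "right")  # a deliberately small action set


def q_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step:
    Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
    best_next = max(q[(next_state, a)] for a in ACTIONS)
    q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])


def greedy_action(q, state):
    """Action with the highest current Q value (first listed wins ties)."""
    return max(ACTIONS, key=lambda a: q[(state, a)])


def table_bytes(num_states, num_actions, bytes_per_entry):
    """Memory for a full Q table stored as fixed-size entries."""
    return num_states * num_actions * bytes_per_entry


q = defaultdict(float)  # unseen state-action pairs start at 0.0
q_update(q, "path_left", "left", reward=1.0, next_state="path_centered")
print(greedy_action(q, "path_left"))  # left
print(table_bytes(8 * 8 * 4, len(ACTIONS), 2))  # 1536
```

The table grows with the product of every state variable's bin count, which is why coarse bins (or an SD card, or a neural network approximation) matter on a small chip.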

Using lap time as the decider will also affect things: learning will be slower because you won't know if a change was good or bad until you cross the finish line, and you'll also need to remember all the decisions made on that lap (so that they can be marked as good or bad decisions for the next round).
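Remembering the lap's decisions and crediting them once the time is known could look like this Monte Carlo-style sketch: every distinct (state, action) used on the lap has its value moved toward the lap's outcome, here the hypothetical measure `reference_time - lap_time` (positive when the lap beat the reference). The state names and the step size alpha are invented.

```python
class LapLog:
    """Remember every (state, action) decision made during one lap."""

    def __init__(self):
        self.decisions = []

    def record(self, state, action):
        self.decisions.append((state, action))

    def finish(self, values, lap_time, reference_time, alpha=0.2):
        """Once the lap is timed, move each distinct decision's value toward
        the lap outcome, then clear the log for the next lap."""
        outcome = reference_time - lap_time
        for decision in set(self.decisions):
            old = values.get(decision, 0.0)
            values[decision] = old + alpha * (outcome - old)
        self.decisions.clear()


values = {}
log = LapLog()
log.record("corner1_entry", "left")
log.record("corner2_entry", "straight")
log.finish(values, lap_time=29.0, reference_time=30.0)
print(values)
```

Every decision on a good lap gets equal credit, including the ones that did not help, which is one reason lap-time-only learning is slow.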

A former student at my uni did his masters on using reinforcement learning to teach a race car to drive around a track (all simulated in software of course) which might provide you with some useful information: http://researchcommons.waikato.ac.nz/handle/10289/2507

I don't say any of this to discourage you, just want to let you know what you're in for.

Thank you very much for that post. I'll start reading this now.

I understand this might not make a Sébastien Loeb in 3 hours flat. I just don't enjoy doing anything that isn't a challenge. I've made enough lights blink :)

I'll let ya know what questions I have after I've read enough of this. Until then, any textbooks or other resources you recommend? I've found Stanford University's "Machine Learning" lectures on YouTube, which may interest many of you.

Thanks,

Keith

I know what you mean about having a challenge; you don't have to look far on the forums to see that a lot of prop-heads enjoy 'pushing the envelope', and the Prop is better for it. It seems the number of "impossible" things being done on the Prop is growing every day.

I hope so. Ideally there is an ASM tool out there that will do much of the image processing for me. After that I agree, getting the useful numbers out of it should be relatively easy, the issue will be the Prop's speed.

For anyone who is interested, the book Kal recommended is an eBook at this link:

http://webdocs.cs.ualberta.ca/~sutton/book/ebook/the-book.html

Thanks,

Keith

If you read the thesis abstract that Kal linked a few posts above, it may help you understand. Basically, making a truck that can clumsily wander around the track sounds very doable. The main point is that this one project can be improved to such a large degree. Eventually, this car will need to graduate to sliding around turns with sideways velocity in order to reduce lap times. The gap in complexity between a cool thing that physically works and a car that has learned to master the track is so large that I can use the same platform for quite a while.

Even after this outgrows the Prop, I can take the basic learning algorithm and transfer it to the OMAP system or whatever I'm using. I'll just have to change the programming language etc.