Floating point routines (64 and 32 bit) for P2

ersmith

Posts: 5,909

Summary: 3 different floating point objects for P2:

- BinFloat.spin2 is a fairly complete implementation in Spin2 of 32 bit binary floating point numbers (the format is IEEE, although the implementation leaves out parts of the IEEE standard).

- Double.spin2 is a simple implementation in assembler of the basic arithmetic routines for both 32 and 64 bit floating point.

- DecFloat.spin2 is an implementation of decimal (base 10) floating point. In this format base 10 numbers are stored exactly, but it is not compatible with IEEE binary storage.

Update Mar. 22, 2022:

A year has passed, and Spin2 has changed a lot. This required renaming most of the functions. Spin2 has built in (32 bit, base 2) floating point, but I've left the methods like F_Add, F_Sub, and so on in BinFloat just because they provide an alternative and a sanity check for the built in functions.

The new zip file (version 4) is attached.

Update Mar. 23, 2021:

Here are some revised functions, with Log() implemented for BinFloat and some more precision improvements. The UCB/Sun IEEE tests are only failing on a few numbers, and the results are usually just off by a small amount.

Update Mar. 14, 2021:

BinFloat and DecFloat now implement the arcsin, arccos, and arctan functions. BinFloat also implements Pow() and Exp() functions, although the precision isn't great for large or small numbers (as you can see from the failing IEEE tests). Fixed a nasty off-by-one bug in the decfloat multiply that somehow slipped through the tests.

Update Mar. 10, 2021:

In the new attachment there are not just 1 but 3 floating point implementations for the P2, as follows:

(1) BinFloat.spin2: 32 bit only IEEE single precision (only a subset of IEEE, e.g. only round to nearest rounding mode). Written in Spin2, but performs pretty well.

(2) DecFloat.spin2: 32 bit decimal based floating point. Uses base 10 internally, so for example 0.1 is represented exactly (unlike in base 2). Gives up a little bit of precision and speed in return for this simplicity and a larger range of representable numbers.

(3) Double.spin2: The original 32/64 bit floating point routines described below. As with BinFloat, uses IEEE binary format, but written in assembler (so a little faster) and supports 64 bit; but lacking trig functions and conversion to/from decimal.

Older

This is still very much a work in progress, so buyer beware!

I've pulled out the floating point functions from riscvp2 and put Spin2 wrappers around them. The Spin wrappers aren't tested yet, but the original functions have gone through things like the "paranoia" floating point test to make sure they handle edge cases correctly.

For now only the basic IEEE required functions (+, -, /, *, sqrt) are provided, along with some conversion functions. But there are both single (32 bit) and double (64 bit) versions of all of these. The double precision ones use 2 variables (lo, hi) for each value, and the functions return 2 values. This is a very nice feature of Spin2.
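To illustrate the (lo, hi) convention, here is a minimal sketch in Python rather than Spin2. It only shows how a 64 bit IEEE double splits into two 32 bit words and back; the helper names `double_to_words` and `words_to_double` are made up for this example and are not methods of Double.spin2.

```python
import struct

def double_to_words(x):
    """Split an IEEE 754 double into (lo, hi) unsigned 32 bit words."""
    lo, hi = struct.unpack("<II", struct.pack("<d", x))
    return lo, hi

def words_to_double(lo, hi):
    """Rebuild the double from its (lo, hi) words."""
    return struct.unpack("<d", struct.pack("<II", lo, hi))[0]

lo, hi = double_to_words(3.1)
print(f"lo=${lo:08X} hi=${hi:08X}")   # lo=$CCCCCCCD hi=$4008CCCC
print(words_to_double(lo, hi))
```

The sign, exponent, and top of the significand all live in the hi word, which is why a routine working on doubles needs both words in and both words out.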

The sample test.spin2 works correctly with flexspin. It compiles with PNut but doesn't work. I'm guessing this has something to do with the memory usage in COG RAM. @cgracey , the Spin2 docs say that 0-$12f are available for use inside CALL: is that accurate? I'm using some of that space, and some of $1d8-$1e0, for scratch purposes inside the assembly code.

EDIT: found the problem with PNut, "-3.1" is parsed as "-(3.1)", and the "-" is an integer negation, not floating point. I've added FNeg and DNeg methods and now the results are much better, although the PNut compiled version has a rounding error due to the representation of 3.1 not being quite right. I've updated the attached files.

Comments

I should also point out that these haven't really been optimized for speed (and they aren't very readable, having gone through many iterations from P1 gcc through riscvp2). They are accurate though and handle things like denormalized numbers, -0.0, infinity, and NaN correctly.

So... umm... Eric... am I hearing a faint voice in the distance crying “double precision is coming for FlexBASIC”? Or should I change the batteries in my hearing aids?

Eric, yes, registers $000..$12F are available, but the $12F upper limit may drop if I grow the interpreter. The $1D8..$1DF registers are always available, though, and they have names (PR0..PR7).

That's cool that you are adding floating-point. I've been mulling this for some time, but haven't gotten to it, yet.

@cgracey : Cool, thanks. The problem wasn't the register usage, it turns out that PNut parses "-3.1" as "-(3.1)", and does an integer negation, which causes the result to not be the floating point negative of 3.1 (since integer and floating point negation are different). Is that intentional?

Another minor PNut issue: it returns $40466667 for 3.1, instead of the correct $40466666. Not a big difference, but enough to throw the calculations off.
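A quick way to see both effects is to look at the bit patterns directly. The following is a minimal Python sketch (not Spin2, and not PNut's actual code); the helper names are made up for illustration. It compares a two's-complement integer negation with a sign-bit flip on the 32 bit pattern for 3.1.

```python
import struct

def float_bits(x):
    """Bit pattern of x rounded to IEEE 754 single precision."""
    return struct.unpack(">I", struct.pack(">f", x))[0]

def bits_float(bits):
    """Interpret a 32 bit pattern as an IEEE 754 single."""
    return struct.unpack(">f", struct.pack(">I", bits))[0]

def int_negate(bits):
    """32 bit two's-complement negation, i.e. what an integer '-' does."""
    return (-bits) & 0xFFFFFFFF

def float_negate(bits):
    """Floating point negation: flip only the sign bit."""
    return bits ^ 0x80000000

correct = float_bits(3.1)
print(f"3.1 rounds to ${correct:08X}")                  # $40466666
print(f"sign flip:    ${float_negate(correct):08X}")     # $C0466666
print(f"int negation: ${int_negate(0x40466667):08X}")    # $BFB99999
print(bits_float(int_negate(0x40466667)))                # roughly -1.45, not -3.1
```

Integer negation changes the exponent and significand bits as well as the sign, so the result decodes to an unrelated value.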

Oooh, I had no idea that was going on. I think I just need to toggle the MSB, instead, right?

This is working okay in PNut:

Can you show me the context where the integer negation was happening?

@cgracey : Actually the PNut representation issue for 3.1 reminds me of a whole other issue: how would you feel about using decimal (base 10) floating point instead of IEEE (base 2) floating point in Spin2? There's no floating point hardware in the P2, so we're not restricted to using a particular representation for floats.

The difference is that with decimal floating point the number is stored as, say, a 24 bit significand (scaled by 1_000_000) between 0.0 and 9.999999, and the exponent represents powers of ten instead of powers of 2. So e.g 3.1 would be stored as (63<<24) | (3_100_000), where (63<<24) is the exponent (offset by 63, similar to how IEEE floating point offsets the exponent) and 3_100_000 is the significand (often called the "mantissa", although that's not technically correct).

The advantages of decimal are:

Disadvantages:

It wasn't in the CON section, I used it directly in an assignment:

    CON
      _clkfreq = 180_000_000

    OBJ
      s: "jm_serial"

    PUB demo() | x, y
      s.start(230_400)
      x := 3.1
      y := -3.1
      s.fstr2(string("should be 3.1 and -3.1: %8.8x %8.8x\r\n"), x, y)

It produces "40466667 BFB99999"

Well, it's coming eventually, but so is Christmas. I'm looking at a bunch of things, and 64 bit integers really need to come to FlexBASIC/FlexC first; double precision will come after that.

That decimal idea sounds way better. It's the binary-decimal mismatch that creates all kinds of ugly numerical issues in IEEE-754 format that make no sense to people using floating point.

Would limiting the significand to 9_999_999 even further clean things up?

Yeah, sorry, I was a little imprecise: indeed the significand of a decimal floating point number should be limited to 9_999_999. The format I would propose would be:

1 bit sign (just like IEEE)

7 bits exponent (1 bit less than IEEE binary, but it's powers of 10 instead of powers of 2), offset by 63

24 bit significand, normalized to be between 1_000_000 and 9_999_999. We could also allow denormalized numbers when the exponent is 0, that would be nice but adds some complication.

Special exponent values: 0 means 0 (or potentially a denormalized value), $7f with significand 0 means infinity, $7f with any other significand means "Not a number".

This differs a bit from the IEEE decimal standard, which if you look on Wikipedia ( https://en.wikipedia.org/wiki/Decimal_floating_point ) uses a clever scheme to squeeze some extra exponent space out of the "unused" part of the significand. Frankly I don't think the extra complication is worth it -- it lets you get up to 10^96 instead of 10^63, but at a big cost in complexity for a software implementation.
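The proposed layout can be sketched in a few lines. This is a Python illustration of the format as described in this post only (1 sign bit, 7 exponent bits offset by 63, 24 bit significand); it is an assumption that the eventual Spin2 code uses exactly this layout, and the names `pack`, `unpack`, and `value` are hypothetical. Denormalized numbers are not modelled.

```python
from fractions import Fraction

BIAS = 63            # exponent offset from the proposal
EXP_SPECIAL = 0x7F   # reserved for infinity / NaN

def pack(sign, exp10, sig):
    """Pack sign (0/1), power-of-ten exponent, and a 7 digit significand."""
    if not 1_000_000 <= sig <= 9_999_999:
        raise ValueError("significand must be normalized to 7 digits")
    biased = exp10 + BIAS
    if not 0 < biased < EXP_SPECIAL:
        raise ValueError("exponent out of range")
    return (sign << 31) | (biased << 24) | sig

def unpack(word):
    """Return (sign, biased exponent, significand) fields of a 32 bit word."""
    return word >> 31, (word >> 24) & 0x7F, word & 0xFFFFFF

def value(word):
    """Exact value as a Fraction, or a string tag for special encodings."""
    sign, biased, sig = unpack(word)
    if biased == 0:
        return Fraction(0)
    if biased == EXP_SPECIAL:
        return "inf" if sig == 0 else "nan"
    v = Fraction(sig, 1_000_000) * Fraction(10) ** (biased - BIAS)
    return -v if sign else v

w = pack(0, 0, 3_100_000)
print(hex(w), value(w))   # 0x3f2f4d60 31/10
```

Because the significand is a plain decimal integer, 3.1 and 0.1 come back as exactly 31/10 and 1/10, with no binary rounding involved.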

Calculations are pretty much like in binary floating point, except to renormalize or scale we have to divide/multiply by powers of 10 instead of shifting. That definitely imposes a speed penalty, but the clarity of the results is IMHO worth it.
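As a rough illustration of what "renormalize by powers of 10" means, here is a minimal Python sketch of a decimal multiply on unpacked (sign, exp10, sig) triples. It is not the DecFloat code: the function name `dec_mul` is hypothetical, rounding is simple round-half-up (an assumption), and overflow, underflow, zero, infinity, and NaN are all ignored.

```python
def dec_mul(a, b):
    """Multiply two (sign, exp10, sig) triples, sig in 1_000_000..9_999_999."""
    sign = a[0] ^ b[0]
    exp10 = a[1] + b[1]
    prod = a[2] * b[2]        # scaled by 10**12, so prod / 10**12 is in [1, 100)

    # Where binary floating point would shift right by one bit, we divide
    # by an extra factor of ten and bump the exponent.
    if prod >= 10**13:
        divisor = 10**7
        exp10 += 1
    else:
        divisor = 10**6

    sig = (prod + divisor // 2) // divisor   # round half up
    if sig == 10_000_000:                    # rounding carried into an 8th digit
        sig = 1_000_000
        exp10 += 1
    return sign, exp10, sig

print(dec_mul((0, 0, 3_100_000), (0, 0, 2_000_000)))   # (0, 0, 6200000)
print(dec_mul((0, 0, 5_000_000), (0, 0, 4_000_000)))   # (0, 1, 2000000)
```

The division by a power of ten is the extra cost compared with a binary shift, which is where the speed penalty comes from.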

If you're interested in this I can try to put together some routines to use decimal floating point. I have some C code lying around somewhere that does some of the operations, and I don't think it would be too hard to convert it to Spin.

Eric, that sounds really good. Ultimately, I would like to place these routines into the interpreter and come up with some syntax to accommodate them.

OK, I'll see what I can put together. I hope to have something for you this weekend.

No rush, Eric.

Here's an updated version of the float code. The standard IEEE routines in Double.spin2 haven't really changed, but I've added a new object DecFloat.spin2 that implements decimal (base 10) floating point. Note that this is not compatible with the floating point numbers PNut and flexspin produce, so to get floating point numbers you'll have to use one of the conversion functions like FromString, FromInt, or MkFloat. MkFloat is probably the easiest: it lets you create a floating point number based on scientific notation, so to get "123.4567" think of that as "1.234567 * 10^2" and write "MkFloat(1_234567, 2)". Similarly "0.1" would be MkFloat(1, -1) or MkFloat(1_0, -1), or MkFloat(1_00, -1); they're all the same. The internal format uses 7 decimal digits plus a two digit exponent in the range -63 to +63.
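To make the "they're all the same" point concrete, here is a small Python model of the normalization that the description above implies. This is a guess at the semantics from the examples in this post, not the actual MkFloat source: the name `mk_float` is hypothetical, and the handling of zero, negative inputs, and inputs longer than 7 digits (truncated here) are assumptions.

```python
def mk_float(digits, exp10):
    """Model of scientific-notation construction.

    `digits` is read as d.dddddd (first digit is the units digit) and the
    result is a (sign, exp10, sig) triple with sig normalized to 7 digits.
    """
    sign = 1 if digits < 0 else 0
    sig = abs(digits)
    if sig == 0:
        return (0, 0, 0)
    while sig < 1_000_000:    # pad short inputs with trailing zeros
        sig *= 10
    while sig > 9_999_999:    # assumption: extra digits are truncated
        sig //= 10
    return (sign, exp10, sig)

print(mk_float(1_234567, 2))   # (0, 2, 1234567), i.e. 1.234567 * 10^2
print(mk_float(1, -1) == mk_float(1_0, -1) == mk_float(1_00, -1))   # True
```

Padding with trailing zeros does not change the value because the first digit is always treated as the units digit.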

Updated the first post with new versions of the float code. As mentioned in that post, there are now 3 different implementations of floating point, so there's something there for everyone.

BinFloat.spin2 and DecFloat.spin2 are 32 bit binary and decimal (respectively) floating point packages, written in Spin2. The DecFloat format is not compatible with Spin2's built in binary floats, so be careful with it.

Double.spin2 is written in assembly and provides 32 bit and 64 bit binary based floats, but it isn't as complete (e.g. no trig functions).

Thanks, Eric. I will look at this soon. I'm still stuck on this composite video stuff.

The first post has been updated with some newer routines. BinFloat now implements Pow() and all the arc trig functions. DecFloat has the arc trig functions.

I've updated the first post with newer versions of the float routines. Both BinFloat and DecFloat have been updated, with most of the work going into BinFloat. It now has a FLog function, and its FPow function now does much better on the UCB / Sun IEEE float tests. The FLog still has a few corner cases where the hardware qlog() isn't accurate enough, but I think it'll be close enough for most uses.

I have no idea if anyone is using any of these or cares. If not, I'll probably just leave these as-is -- they're good enough for what I want to do with them, I think.

Downloaded. May be useful when floating point has to be used, which is not supported in standard Spin.

It may be interesting if these procedures can be rewritten in PASM to fit in one cog, which will be a "floating point coprocessor" aka 387

I don't think there would be much, if any, benefit to running floating point in another COG, as opposed to inline PASM on the same COG (e.g. the PASM in the Double.spin2 object). Any gain you get from running in COG/LUT memory would be offset by the cost of inter-cog communication.

Re-upping this thread because last week there was some discussion about floating point in Spin. The most recent IEEE compatible code is in BinFloat.spin2, which is both in the GitHub OBEX and in the first post. @cgracey , I sent you some e-mail about floating point and Spin, and we can talk about it here or off-line, whichever you prefer.

@ersmith

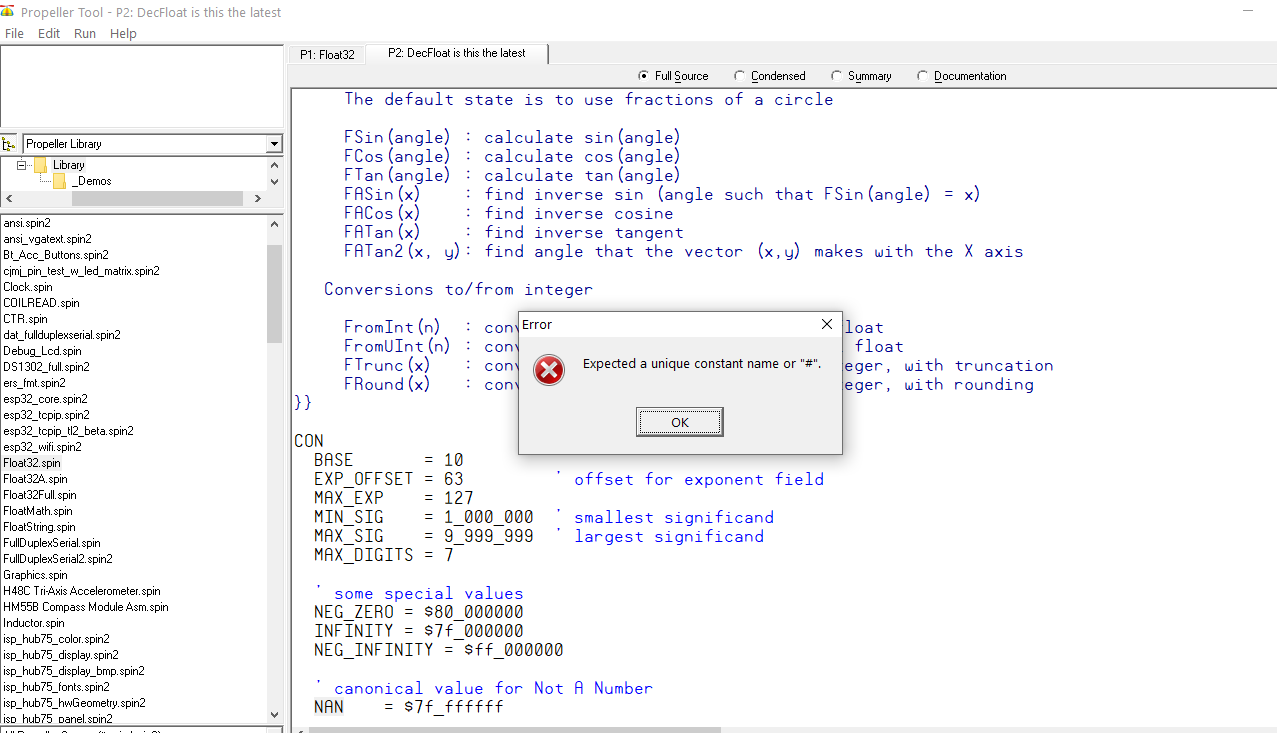

"NAN" error.

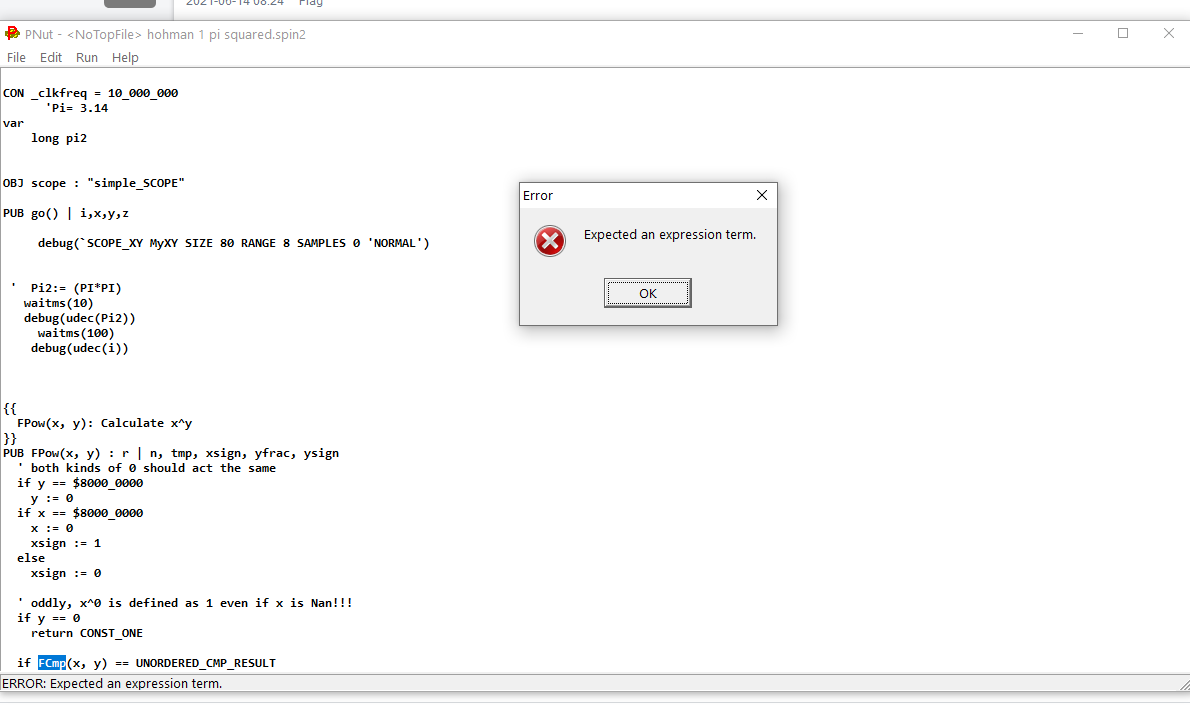

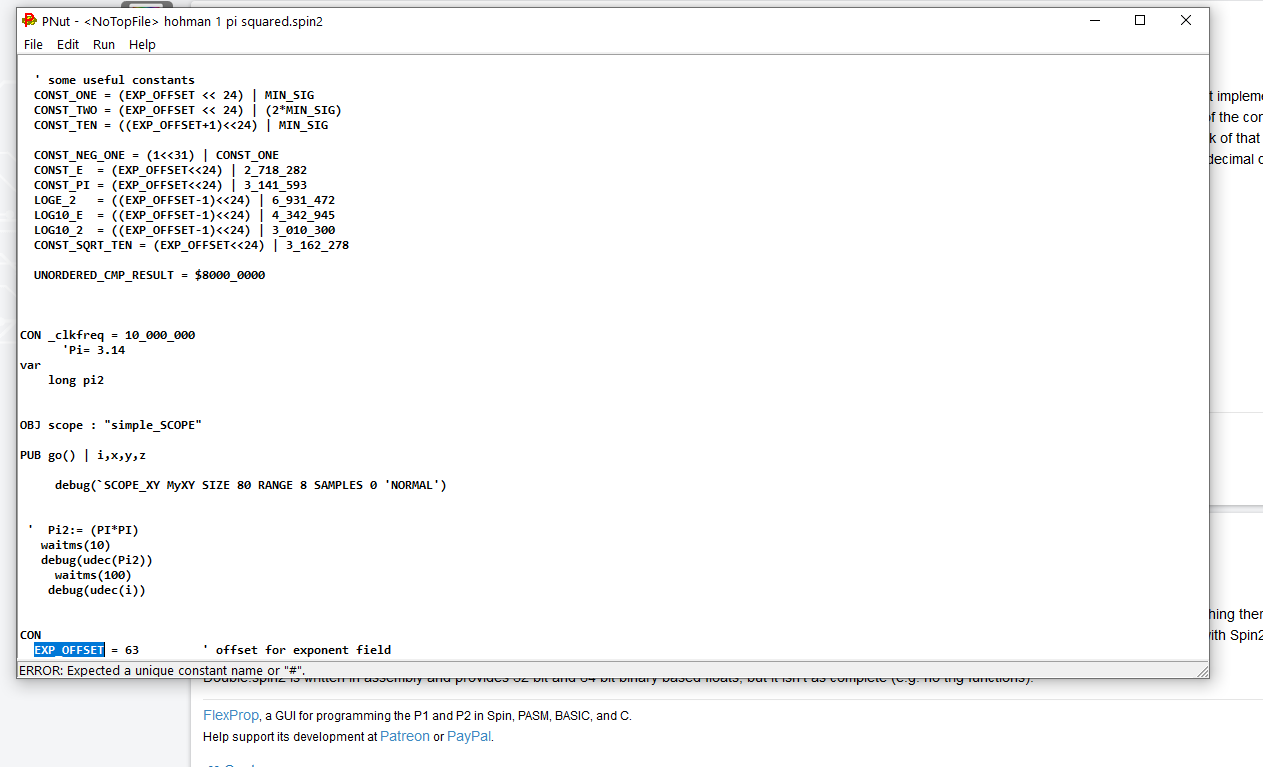

Pnut after commenting out the "NAN" to try to get past the error.

My assumption is that this should work with the P2, so I tried it. Getting errors.

I got this from GitHub. Is this the latest version? I am getting errors when I try to use it in PNut or Prop Tool.

Thanks for the help.

Martin

@ersmith

Downloaded your above file and got this error in Pnut.

Is there something I am missing? I ran them to see if there were any error messages before I tried to tease out what I needed. I am trying to get back to my projects after a two year gap.

Thanks.

Martin

@pilot0315 : Chip changed the Spin2 language, and I never re-visited this thread to update the files. I've done so now, and the new zip file (p2_float_v4.zip) should work with the most recent PropTool.

@ersmith

Thanks.

I am also trying to get it to work in Pnut because of the DEBUG features there. Is there anything special to do?

They should work fine in PNut, as far as I know (I tried with PNut v35q, but I don't think anything has changed since then that would affect them).

Thanks